Organizations accumulate vast amounts of data from various sources such as CRMs, marketing platforms, website analytics, and social media channels. To maintain a competitive edge, this diverse data must be analyzed to make informed, data-driven business decisions. However, disparate data formats and silos make it challenging to effectively analyze and derive actionable insights.

Traditional Extract, Transform, Load (ETL) processes have been the standard for consolidating data into a unified format suitable for advanced analytics. However, a new approach known as Zero ETL is gaining traction among data professionals.

In this article, we'll give an overview of Zero ETL, explore its benefits, explain how it works, and highlight the top Zero ETL tools that can streamline your data integration processes.

What Is Zero ETL?

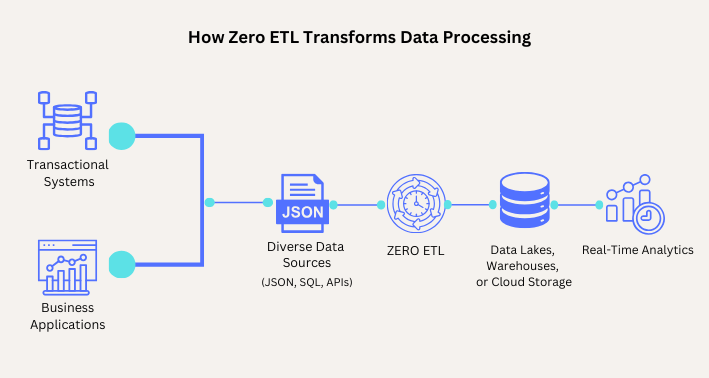

Zero ETL is an emerging approach to data integration that eliminates the need for traditional Extract, Transform, Load (ETL) processes. Instead of moving and transforming data through separate stages, Zero ETL aims to provide real-time access to data across independent sources without the overhead of conventional ETL data pipelines. This approach leverages modern technologies like data virtualization, real-time streaming, and federated queries to enable effortless data access and integration.

In a Zero ETL architecture, data remains in its original source systems, and integration occurs at the time of query execution. This allows organizations to access and analyze data without the delays and complexities associated with traditional ETL processes. By minimizing data movement and transformations, Zero ETL reduces latency, improves data freshness, and simplifies the data infrastructure.

Zero ETL vs Traditional ETL

Traditional ETL involves three main steps:

- Extract: Data is extracted from various source systems.

- Transform: Extracted data is transformed into a consistent format suitable for analysis.

- Load: Transformed data is loaded into a target system, such as a data warehouse or data lake.

This process is often run in batches, leading to a delay between data generation and availability for analysis. Traditional ETL pipelines can be complex to build and maintain, require significant developer and infrastructure resources, and do not meet the needs of organizations that require real-time updates in their analytics or machine learning workflows.
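To make the three stages concrete, here is a minimal sketch of a batch ETL job in Python using only the standard library. The source rows, the field names (`email`, `amount`), and the in-memory SQLite "warehouse" are hypothetical stand-ins for illustration, not any particular tool's API.

```python
import sqlite3

# Hypothetical raw rows, standing in for a CRM export.
SOURCE_ROWS = [
    {"email": " Ada@Example.com ", "amount": "19.99"},
    {"email": "bob@example.com", "amount": "5"},
]

def extract():
    """Extract: read rows from the source system (here, a static list)."""
    return list(SOURCE_ROWS)

def transform(rows):
    """Transform: normalize emails and cast amounts to numbers."""
    return [(r["email"].strip().lower(), float(r["amount"])) for r in rows]

def load(conn, rows):
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (email TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract()))
loaded = warehouse.execute(
    "SELECT email, amount FROM orders ORDER BY email"
).fetchall()
print(loaded)
```

In a real deployment, a job like this typically runs on a schedule, so the target only reflects the source as of the last run. That gap is where the batch delay comes from.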

Zero ETL, on the other hand, eliminates these stages by providing direct access to data in its source systems. Key differences include:

- Data Movement: Traditional ETL involves physically moving data between systems. Zero ETL minimizes or eliminates data movement, accessing data in place.

- Latency: Traditional ETL processes can introduce latency due to batch processing schedules. Zero ETL enables real-time or near-real-time data access.

- Complexity: Building and maintaining ETL pipelines can be complex and time-consuming. Zero ETL reduces operational complexity by removing the need for separate ETL processes.

- Flexibility: Traditional ETL requires predefined schemas and transformations. Zero ETL allows for more flexible data access without extensive upfront modeling.

Zero ETL vs ELT

ELT (Extract, Load, Transform) is a variation of the traditional ETL process where data is first extracted and loaded into a target system, such as a data lake or data warehouse, and then transformed within that system. ELT leverages the processing power of modern data platforms to perform transformations more efficiently.

Comparing Zero ETL to ELT:

- Data Loading:

  - ELT involves loading all data into a central repository before transformation.

  - Zero ETL skips this step by accessing data directly from source systems.

- Transformation Location:

  - ELT performs transformations within the target system after loading.

  - Zero ETL may perform transformations on-the-fly during query execution or utilize data virtualization techniques.

- Latency:

  - ELT reduces latency compared to traditional ETL but still incurs loading delays, since data must first be copied from the source system into a data warehouse or data lake.

  - Zero ETL offers even lower latency by eliminating the separate loading and transformation stages; everything happens in one go.

- Infrastructure Requirements:

  - ELT requires robust target systems capable of handling large volumes of raw data and transformation workloads, plus additional tooling for data ingestion, pipeline monitoring, and alerting.

  - Zero ETL reduces infrastructure demands by minimizing data duplication and by processing data within the source systems themselves, which avoids tool bloat in your data stack.
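As a rough illustration of the ELT ordering described above, the sketch below loads raw rows into the target first and only then transforms them with SQL inside that target. The in-memory SQLite database and the table names (`raw_orders`, `orders`) are assumptions made for the example.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")

# Load: raw rows land in the target unchanged (strings and all).
warehouse.execute("CREATE TABLE raw_orders (email TEXT, amount TEXT)")
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?)",
    [(" Ada@Example.com ", "19.99"), ("bob@example.com", "5")],
)

# Transform: runs inside the target system, after loading.
warehouse.execute(
    """
    CREATE TABLE orders AS
    SELECT lower(trim(email)) AS email, CAST(amount AS REAL) AS amount
    FROM raw_orders
    """
)
elt_rows = warehouse.execute(
    "SELECT email, amount FROM orders ORDER BY email"
).fetchall()
print(elt_rows)
```

Note that the raw copy (`raw_orders`) still has to exist in the target before anything can be queried, which is the loading step that Zero ETL aims to avoid.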

What Are the Benefits of Using Zero ETL?

Implementing Zero ETL offers several advantages:

- Real-Time Data Access: Provides immediate access to the most current data as it exists in the source system, providing increased confidence in derived insights and data-driven decisions.

- Reduced Complexity: Eliminates the need to build and maintain complex ETL pipelines, reducing development and operational overhead for the data team.

- Cost Savings: Reduces infrastructure and storage costs by avoiding data duplication and extensive processing in intermediate systems. Additionally, since data teams can spend more time on business initiatives rather than building and maintaining intermediate pipelines, Zero ETL also helps businesses save on operational costs.

- Improved Data Governance: By accessing data directly from source systems, Zero ETL can enhance data governance and compliance by maintaining a single source of truth rather than having several duplicates and iterations of the same data across a web of intermediate systems.

- Scalability: Simplifies the scaling of data ingestion processes, as there's no need to re-engineer already-existing ETL pipelines when adding new data sources or handling increased data volumes.

How Does Zero ETL Work?

Zero ETL introduces a lean approach that simplifies your data workflows. Here's a breakdown of how it works:

Direct Load and On-Demand Transformation

In Zero ETL architectures, data is loaded directly into the target system in its raw form, and transformations are performed on-demand during query execution. In contrast, in Traditional ETL, data is constantly extracted, transformed, and loaded in a series of intermediate stages before it’s usable for analysis.

On-Demand Transformation (Zero ETL):

- Customization: Users apply transformations as needed for specific analyses.

- Resource Efficiency: Reduces the need to store multiple transformed versions of the same data.

Scheduled Transformation (Traditional ETL):

- Lack of Flexibility: Fixed transformation schedules may not meet immediate data needs, making it difficult to address ad-hoc analysis or rapidly changing requirements.

- Higher Resource Consumption: Regularly transforming and storing multiple versions of data can increase storage costs and inefficiently use processing resources.

Direct Load (Zero ETL):

- Immediate Availability: Data becomes available in the target system without waiting for transformation processes.

- Reduced Latency: Eliminates delays associated with batch transformations.

Batch Load (Traditional ETL):

- Delayed Availability: Data becomes accessible in the target system only after scheduled transformation and loading processes, leading to wait times that can hinder timely decision-making.

- Increased Latency: The reliance on batch processing introduces delays, making it challenging to achieve real-time data insights and react promptly to new information.

Schema-on-Read vs. Schema-on-Write

Zero ETL leverages the Schema-on-Read approach, where data is stored in its raw, untransformed state, and a schema is only applied at the time of query execution. This contrasts with Schema-on-Write, used in traditional ETL, where data is transformed into a predefined schema before storage. This gives developers and analysts a great degree of flexibility to manipulate the data for their specific use case instead of relying on (or building out new) fixed, intermediate models.

Schema-on-Read (Zero ETL):

- Flexible: Allows for dynamic data interpretation based on the query context.

- Agile: Supports rapid integration of new data sources without extensive upfront modeling.

Schema-on-Write (Traditional ETL):

- Predefined Structure: Requires data to conform to a specific schema before storage.

- Rigidity: Adding new data types often necessitates schema redesign and data reprocessing.
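The difference is easier to see in code. Below is a minimal schema-on-read sketch: raw JSON records are stored untouched, and each consumer applies its own schema only when it reads. The record fields and the `read_with_schema` helper are hypothetical names invented for this example.

```python
import json

# Raw events are stored exactly as received; no schema is enforced on write.
raw_store = [
    '{"user": "ada", "amount": "19.99", "currency": "USD"}',
    '{"user": "bob", "amount": 5}',  # a newer source omits currency
]

def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: pick fields, cast types, fill defaults."""
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: cast(record.get(field, default))
            for field, (cast, default) in schema.items()
        }

# Two consumers interpret the same raw data differently.
billing_schema = {
    "user": (str, ""),
    "amount": (float, 0.0),
    "currency": (str, "USD"),
}
activity_schema = {"user": (str, "")}

billing = list(read_with_schema(raw_store, billing_schema))
activity = list(read_with_schema(raw_store, activity_schema))
print(billing)
print(activity)
```

With schema-on-write, the second record would have been rejected or would have forced a schema change before storage. Here the missing field is handled by the reader that cares about it.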

What Drives Zero ETL?

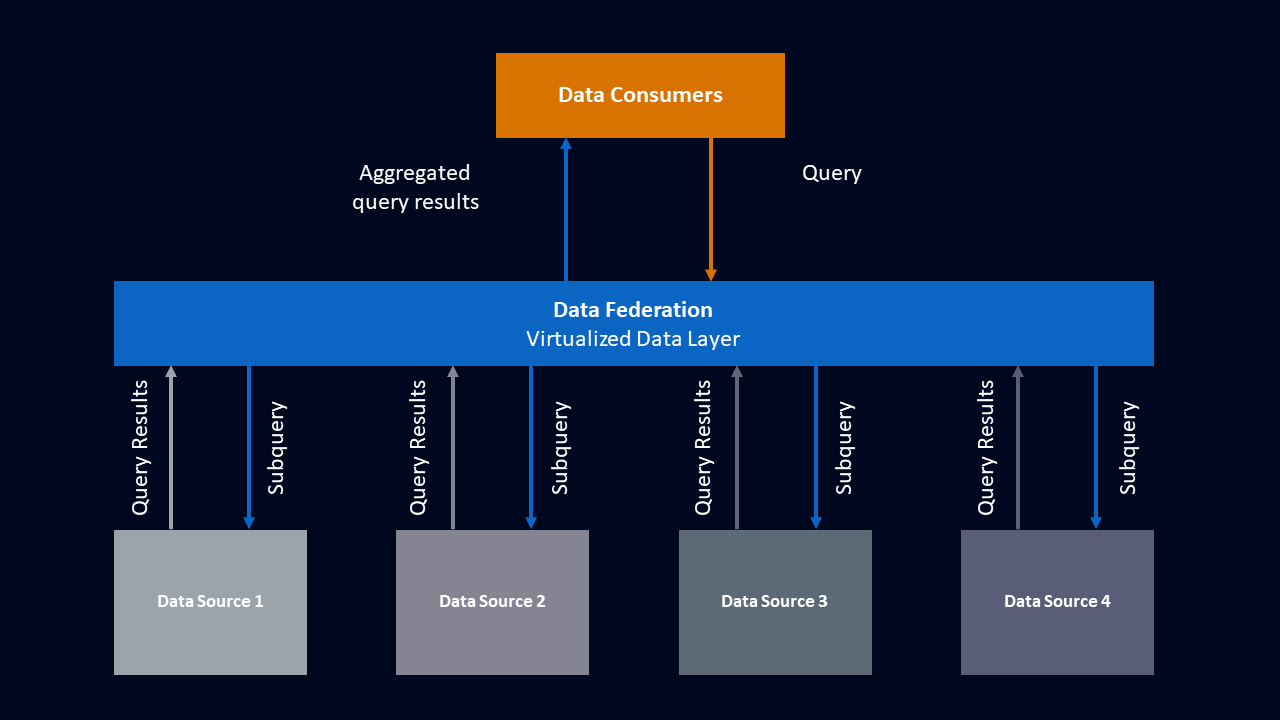

The key item driving the Zero ETL architecture is data federation. Data federation allows you to interact with a variety of independent data sources under a single, unified platform and interact with these isolated systems as if they were one system.

Federated Querying

Unified Data Access Across Multiple Sources

Zero ETL enables federated querying, allowing users to execute queries across independent data sources without actually moving the data out of those source systems and into intermediary systems. With federated querying, you can do things like:

- Cross-Data Source Joins: Combine data from different database systems (for example, MySQL and Postgres) in real-time using a single query interface.

- Ad Hoc Analysis: Perform exploratory data analysis without the need to pre-define schemas or write complex ETL processes to create intermediary database objects.
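Here is a small sketch of the cross-source join idea. Two separate SQLite databases stand in for independent source systems (the article's MySQL and Postgres example would need a real federated engine), and a single connection plays the role of the query layer. The schema names `crm` and `billing` and the table layouts are assumptions for illustration.

```python
import sqlite3

# One connection plays the role of the federated query engine.
engine = sqlite3.connect(":memory:")

# Two independent databases stand in for separate source systems.
engine.execute("ATTACH DATABASE ':memory:' AS crm")
engine.execute("ATTACH DATABASE ':memory:' AS billing")

engine.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
engine.execute("CREATE TABLE billing.invoices (customer_id INTEGER, total REAL)")
engine.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)", [(1, "Ada"), (2, "Bob")]
)
engine.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)",
    [(1, 20.0), (1, 5.0), (2, 7.5)],
)

# A single query joins across both sources without copying rows anywhere.
revenue = engine.execute(
    """
    SELECT c.name, SUM(i.total) AS revenue
    FROM crm.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(revenue)
```

A production federated engine would connect to remote systems over the network rather than attaching local databases, but the query shape is the same: one statement, several sources.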

Data Virtualization

- Abstraction Layer: Presents a virtualized view of data, hiding the complexity of underlying sources. You can build your analytics or machine learning processes under one platform and don’t need to deal with the nuances of each independent source system.

- Consistent Security and Governance: Applies uniform access controls and policies across all data sources, simplifying access management as all data is processed through one system.
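One way to picture the abstraction layer is as a thin catalog that exposes every source through the same interface and checks the same access policy on each read. The `VirtualCatalog` class, role names, and table names below are hypothetical and only sketch the idea; real virtualization platforms are considerably more involved.

```python
class VirtualCatalog:
    """One interface and one access policy over many underlying sources."""

    def __init__(self):
        self._sources = {}  # logical table name -> callable returning rows
        self._grants = {}   # role -> set of table names it may read

    def register(self, table, fetch):
        """Expose a source under a logical table name."""
        self._sources[table] = fetch

    def grant(self, role, table):
        """Allow a role to read a logical table."""
        self._grants.setdefault(role, set()).add(table)

    def read(self, role, table):
        """Enforce the shared policy, then fetch rows from the source."""
        if table not in self._grants.get(role, set()):
            raise PermissionError(f"{role} may not read {table}")
        return list(self._sources[table]())

catalog = VirtualCatalog()
# Each lambda stands in for a connector to a different system.
catalog.register("customers", lambda: [{"id": 1, "name": "Ada"}])
catalog.register("invoices", lambda: [{"customer_id": 1, "total": 25.0}])
catalog.grant("analyst", "customers")

customers = catalog.read("analyst", "customers")
try:
    catalog.read("analyst", "invoices")
    denied = False
except PermissionError:
    denied = True
print(customers, denied)
```

Because every read passes through `read`, the access rule is written once rather than once per source system.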

Use Cases of Zero ETL

Zero ETL offers a modern approach to data integration. It simplifies data management, making it well-suited for several use cases.

Machine Learning and AI Models

Real-Time Data for Training and Inference

Zero ETL enables machine learning models to access the most recent data directly from source systems without the delays introduced by traditional ETL processes. This is crucial for applications requiring up-to-date information, such as:

- Predictive Analytics: Models that forecast trends or behaviors benefit from real-time data to improve accuracy.

- Recommendation Systems: Providing personalized recommendations based on the latest user interactions enhances relevance and user engagement.

On-Demand Feature Engineering

- Dynamic Feature Extraction: Zero ETL allows data scientists to perform feature engineering on-demand, using fresh data without waiting for the next scheduled batch, which is often several hours or even a full working day.

- Experimentation: Data scientists and ML Engineers can rapidly prototype and test different variations of models with different data subsets and transformations without having to rely on another team to build intermediary models for them.
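As a sketch of on-demand feature extraction, the function below computes per-user counts over a recent time window at the moment they are requested, rather than reading them from a precomputed batch table. The event shape, the one-hour window, and the feature names are assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical raw interaction events as they exist in the source system.
events = [
    {"user": "ada", "type": "view", "ts": datetime(2024, 1, 1, 12, 0)},
    {"user": "ada", "type": "purchase", "ts": datetime(2024, 1, 1, 12, 5)},
    {"user": "ada", "type": "view", "ts": datetime(2023, 12, 1, 9, 0)},
    {"user": "bob", "type": "view", "ts": datetime(2024, 1, 1, 11, 59)},
]

def features_on_demand(events, now, window=timedelta(hours=1)):
    """Compute per-user counts over a recent window at request time."""
    feats = defaultdict(lambda: {"views": 0, "purchases": 0})
    for e in events:
        if now - window <= e["ts"] <= now:
            key = "views" if e["type"] == "view" else "purchases"
            feats[e["user"]][key] += 1
    return dict(feats)

features = features_on_demand(events, now=datetime(2024, 1, 1, 12, 30))
print(features)
```

Changing the window or adding a new feature is a code change in the reader, with no intermediate table to rebuild.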

Business Outcome:

- Accelerated Model Deployment: Zero ETL greatly reduces time from model development to deployment in production.

- Improved Accuracy: Access to instantly-updated, real-time data improves model precision.

- Operational Efficiency: Streamlines workflows, allowing data scientists to focus on modeling rather than data preparation or waiting for another team to prepare the data for them.

Customer Experience Analytics

Personalization and Real-Time Engagement

With Zero ETL, you can integrate and analyze data in real-time from various customer touchpoints, enabling organizations to deliver highly personalized experiences to their customers in online products:

- Unified Customer View: Aggregate data from the CRM, marketing platforms, social media, and more to build a comprehensive customer profile, create segmentations, and predict customer behavior.

- Real-Time Offers and Promotions: Adjust marketing strategies on-the-fly based on current customer behavior and preferences.

Customer Support Optimization

- Immediate Access to Data: Customer service representatives can access up-to-date data on a customer, their history, the related issue ticket, and more, so they can resolve issues promptly. This drives customer retention and improves satisfaction.

- Sentiment Analysis: Real-time monitoring of customer feedback allows for immediate alerting and response to negative sentiments or growing trends.

Business Outcome:

- Increased Customer Satisfaction: Personalized interactions enhance customer loyalty.

- Higher Conversion Rates: Timely, relevant offers improve sales effectiveness.

- Competitive Advantage: Differentiates the brand through superior customer experiences.

Fraud Detection and Prevention

Real-Time Monitoring and Alerts

Zero ETL facilitates immediate detection of fraudulent activities by processing data as it is generated. In this way, your organization can react to fraudulent activity with lightning speed and prevent harmful actors from inflicting much damage:

- Transaction Monitoring: Analyzes financial transactions in real-time to identify and alert on suspicious patterns.

- Anomaly Detection: Uses machine learning models to detect deviations from normal behavior, flag suspicious behavior, and prevent suspected fraud in an automated way.

Integration with Security Systems

- Event Correlation: Combine data from multiple sources such as transaction logs, access controls, and network activity to monitor and alert on any signs of compromise without delay.

- Automated Response: Trigger alerts or actions (e.g., blocking a transaction) instantly upon detecting fraud indicators or unauthorized access to a system.
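To illustrate the monitoring idea, here is a minimal streaming anomaly detector that flags a transaction amount when it sits far from the running mean. It uses Welford's online algorithm to track mean and variance without storing history. The threshold, warm-up length, and sample amounts are assumptions; production fraud models are far richer than a single z-score.

```python
import math

class RunningAnomalyDetector:
    """Flags values far from the running mean (Welford's online algorithm)."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold  # z-score above which a value is flagged
        self.warmup = warmup        # observations required before flagging
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations

    def observe(self, value):
        """Return True if value looks anomalous, then fold it into the stats."""
        flagged = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            flagged = std > 0 and abs(value - self.mean) / std > self.threshold
        # Welford update keeps mean and variance without storing history.
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)
        return flagged

detector = RunningAnomalyDetector()
amounts = [20.0, 22.0, 19.0, 21.0, 20.0, 23.0, 950.0, 21.0]
flags = [detector.observe(a) for a in amounts]
print(flags)
```

One design caveat: this sketch folds flagged values back into the statistics, which inflates the variance after an outlier. A real system might exclude confirmed fraud from the baseline.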

Business Outcome:

- Reduced Financial Losses: Immediate detection minimizes the impact of fraudulent activities.

- Regulatory Compliance: For organizations in highly regulated industries such as financial services and healthcare, Zero ETL can enhance adherence to compliance requirements by allowing you to analyze anomalies without any delay.

- Trust Building: Using Zero ETL to build robust, reactive fraud prevention systems strengthens relationships with customers and improves customer loyalty.

Business Outcome:

- Enhanced Agility: Quickly access and analyze data, supporting faster decision-making.

- Cost Savings: Reduces the need for data replication and storage costs associated with centralized data warehouses.

- Simplified Data Management: Eases the burden on IT teams by minimizing the technical debt created by building out intermediary data integration systems.

Supply Chain Optimization

Real-Time Visibility and Decision-Making

Zero ETL provides up-to-date information across the supply chain, enabling organizations to respond swiftly to changes that are happening on the factory floor or in the shipping warehouse as they happen. With Zero ETL, you can do the following:

- Better Inventory Management: Monitor stock levels in real-time to prevent overstocking or stockouts. Get alerted the moment a stock level runs low and kick off the restocking process.

- Demand Forecasting: Adjust production schedules based on current sales data, market trends, and operational capacity. With real-time analytics, you can get notified instantly if an SLA is at risk of not being fulfilled and pivot your resources accordingly or proactively get ahead of the issue with the customer.

Integration of IoT Data

- Sensor Data Processing: Incorporate data from IoT devices such as RFID tags, GPS trackers, and environmental sensors, which send thousands of data points per second. With Zero ETL processes and IoT data, you can simplify the analysis of all these data points in one, unified system.

- Predictive Maintenance: Monitor IoT system data in real-time to predict device failures and schedule maintenance proactively.

Business Outcome:

- Increased Efficiency: Optimizes operations by aligning supply with demand.

- Cost Reduction: Minimizes waste and reduces operational costs through better resource utilization.

- Improved Customer Satisfaction: Ensures timely delivery of products, enhancing service levels.

Zero ETL vs Traditional ETL: Key Differences

Zero ETL and Traditional ETL (Extract, Transform, Load) represent two distinct approaches to data integration and analytics. Here we recap their key differences, as understanding these differences is crucial for designing efficient data architectures that meet modern business requirements.

Comparative Analysis

Below is a detailed comparison of Zero ETL and Traditional ETL across key dimensions:

| Features | Zero ETL | Traditional ETL |
| --- | --- | --- |
| Process | Direct data integration from the source system to analysis without explicit extraction, transformation, and loading phases. | Involves separate extraction, transformation, and loading steps implemented as distinct processes. |
| Data Latency | Offers real-time or near real-time data access, enabling immediate insights and actions. | Data is refreshed on a fixed schedule with delays in-between; these delays cause the data to become stale and inefficient for critical analyses depending on real-time information. |
| Complexity | Simpler setup with minimal infrastructure requirements; reduces operational overhead by eliminating complex ETL pipelines. | Complex to develop, maintain, and scale ETL pipelines; requires significant engineering and infrastructure resources in order to set up and maintain. |
| Flexibility | High flexibility with schema-on-read capabilities; supports agile data exploration and integration of new data sources without extensive reconfiguration. | Less flexible; relies on predefined schemas and transformations (schema-on-write), making adaptation to new data sources or formats more cumbersome. |
| Data Freshness | Ensures up-to-date data by accessing sources directly; critical for highly time-sensitive analytics and decision-making. | Data is often outdated between ETL job runs; not suitable for applications requiring immediate data freshness and immediate reactions. |
| Infrastructure Cost | Cost-effective due to reduced data duplication and movement; leverages existing resources and computing for efficiency. | Higher costs associated with dedicated ETL tools, storage for intermediate data, and processing resources; often leads to redundant data storage. |
| Use Cases | Ideal for real-time analytics, machine learning models, operational dashboards, and scenarios requiring access to immediately-refreshed data. | Suited for complex data transformations, historical reporting, integrating with legacy systems, and ensuring data consistency across long-term storage. |

How Estuary Flow Can Contribute to Your Zero ETL Approach

Zero ETL approaches have gained attention for promising easy, scalable access to all of your data without traditional extract, transform, and load processes. In practice, however, these strategies often fall short, because setting up a proper Zero ETL stack is fraught with challenges: limited connectivity to common and niche data sources, difficult-to-configure security measures, a lack of complex data quality monitoring, high setup costs, and insufficient support for ongoing maintenance.

Estuary Flow solves these issues while providing you with real-time, up-to-date data without the high complexity of going all-in on a Zero ETL stack. With its extensive library of pre-built connectors, real-time data replication with change data capture, and hands-off scaling, among other features, Estuary Flow enables your data teams to focus on what truly matters: deriving insights and driving value. Below, you’ll find a deeper dive into Estuary Flow’s features and see how it fits into your stack.

Real-Time Data Replication with Change Data Capture

Estuary Flow leverages Change Data Capture (CDC) to monitor and replicate data changes from source systems in real-time. This ensures that your data warehouses and analytical platforms always have the most up-to-date information without the delays inherent in batch processing.

- Sub-Second Latency: Achieve data synchronization with less than 100 milliseconds of latency, enabling real-time analytics and highly reactive decision-making.

- Efficient Data Movement: By replicating only the incremental changes, Estuary Flow reduces unnecessary data transfer, leading to much lower compute and storage costs.

- High Throughput: Handle high-velocity data streams (up to 7gb/second) effortlessly, ensuring that your data pipelines scale with your business needs without putting in additional engineering hours towards refactoring.
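For readers new to CDC, the sketch below shows the general idea of replicating only incremental changes: a stream of insert, update, and delete events is applied in order to a keyed replica. The event shape (`op`, `key`, `row`) is a hypothetical format invented for this example and is not Estuary Flow's actual data model or API.

```python
# Hypothetical change events, in the order the source emitted them.
change_log = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Bob", "plan": "free"}},
    {"op": "update", "key": 1, "row": {"name": "Ada", "plan": "pro"}},
    {"op": "delete", "key": 2, "row": None},
]

def apply_changes(replica, events):
    """Apply insert/update/delete events to a keyed replica, in order."""
    for event in events:
        if event["op"] == "delete":
            replica.pop(event["key"], None)
        else:  # insert and update are both upserts on the replica
            replica[event["key"]] = event["row"]
    return replica

replica = apply_changes({}, change_log)
print(replica)
```

Only the four change events cross the wire here, regardless of how large the source table is, which is why incremental replication tends to move far less data than repeated full extracts.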

Extensive Library of Pre-Built Connectors

With over 200 pre-built connectors, Estuary Flow simplifies integration across a wide array of data sources and destinations:

- Broad Source Support: Connect to transactional databases like MySQL and PostgreSQL, NoSQL databases like MongoDB, cloud storage, and APIs from SaaS applications such as Facebook Marketing and HubSpot.

- Flexible Destinations: Materialize data into warehouses like Snowflake, BigQuery, or into search indexes like Elasticsearch, and even into data lakes like Amazon S3 or lakehouses like Databricks without the need for custom coding.

- Ease of Configuration: Set up data pipelines through an intuitive interface, reducing setup time from weeks to minutes.

Scalability and Elasticity Without the Hassle

- Hands-Off Scaling: Estuary handles massive volumes of data with ease, taking the burden of scaling your infrastructure off of your plate. With the ability to process up to 7gb/second, organizations from small to large can focus on delivering valuable insights rather than optimizing infrastructure.

- Set-It-and-Forget-It: Once configured, there is little to no maintenance required from engineering teams to maintain Estuary pipelines. Changes to pipelines are easy to implement in our no-code platform, and more technical teams can use SQL or TypeScript to transform data on the fly.

- High Availability: Designed with fault tolerance in mind, your data pipelines are resilient and reliable when they’re run on Estuary. We have a 99.9% uptime guarantee for customers on our Cloud Tier, and Enterprise customers are also provided with 24/7 support.

Enhanced Security for Enterprise Customers

For customers on our Enterprise tier, Estuary Flow offers enhanced security features that provide significant benefits over a strict Zero ETL solution:

- Secure Private Deployments: Estuary Flow offers Private Deployments which allow you to run data infrastructure entirely within your own private cloud. This ensures that all data processing for your zero ETL solutions remains within your secure network, enhancing data security and compliance with stringent industry standards.

- Compliance and Governance: With SOC 2 and HIPAA compliance reports, Estuary Flow meets rigorous security requirements for organizations in healthcare and other highly regulated industries.

- Dedicated Network Connectivity: Features like PrivateLink and Google Private Service Connect enable secure, private connectivity between your network and Estuary Flow.

Simplified Monitoring and Troubleshooting

Maintain visibility and control over your data pipelines with robust monitoring tools:

- Comprehensive Metrics: Access real-time metrics on pipeline performance, latency, and data throughput.

- Effortless Integration with Monitoring Tools: Use the Datadog HTTP ingest (webhook) source connector to integrate Estuary Flow with your existing monitoring stack.

- Proactive Alerting: Set up notifications for any anomalies, ensuring quick response to potential issues.

Cost-Effective Data Integration

Estuary Flow offers a pricing model that's approximately one-tenth the cost of competitors like Fivetran or Confluent:

- Transparent Pricing: Pay only for the data volume that you process, with no hidden fees or surprises.

- Free Tier and Trial Options: Get started with a generous free tier or explore advanced features with a 30-day trial of the paid tier.

- Operational Savings: Reduce the total cost of ownership by minimizing infrastructure expenses and administrative overhead.

Exceptional Support and Community

Join a vibrant community and access dedicated support:

- Community Slack Group: Connect with Estuary's team and other Flow users for quick assistance, knowledge sharing, or just to talk shop!

- Active Development: Continuous improvements and updates based on community feedback and the latest industry trends.

Accelerate Time-to-Value and Empower Your Team

By adopting Estuary Flow as part of your Zero ETL strategy, you will:

- Improve Data Freshness: Eliminate delays caused by traditional ETL, providing stakeholders with timely access to accurate, up-to-date data.

- Enhance Data Reliability: Ensure consistency and accuracy across all your data systems.

- Focus on Innovation: Allow your data engineers to concentrate on building advanced analytics and machine learning models instead of managing data pipelines.

Estuary Flow not only simplifies data integration but also empowers you to harness faster, more actionable insights from your data.

To learn more about Estuary Flow’s features, you can refer to the official documentation.

Wrapping It Up

The advent of Zero ETL signifies a transformative approach to data integration, addressing the pressing need for access to real-time data. By eliminating the barriers of traditional data movement processes, Zero ETL empowers organizations to derive more accurate insights, make decisions immediately, and foster innovation.

Estuary Flow is at the forefront of this paradigm shift, offering a powerful platform that embodies the principles of Zero ETL while still maintaining the robust capabilities that make Traditional ETL shine. It bridges the gap by eliminating the latency bottlenecks typically associated with Traditional ETL, yet provides the necessary tools for complex data manipulation and integration. This means your data teams can take advantage of the real-time data access promised by Zero ETL without sacrificing the essential features of traditional ETL.

By integrating Estuary Flow into your data infrastructure, you can:

- Unlock Real-Time Analytics: Access up-to-the-moment data across all sources, enabling timely and informed decisions.

- Reduce Operational Overhead: Eliminate the complexities of building and maintaining traditional ETL pipelines, allowing your team to focus on strategic initiatives.

- Optimize Costs: Benefit from a cost-effective solution that's approximately one-tenth the price of competitors like Fivetran or Confluent.

- Foster Collaboration: Join a vibrant community through Estuary's community Slack group.

In an era where data is a critical asset, Estuary Flow positions your organization to stay ahead of the curve by bringing data teams the best of both worlds from Traditional ETL and Zero ETL. Whether you're deploying machine learning models, enhancing customer experiences, detecting fraud in real-time, or optimizing supply chains, or something else that requires scalable, real-time data across a web of data source systems, Estuary Flow provides the solution needed to drive success.

FAQs

How can Estuary Flow's real-time CDC transform our data architecture and eliminate data latency? And are we underestimating the impact of data latency on our business decisions?

Estuary Flow's Change Data Capture (CDC) functionality enables sub-second replication of data changes from source systems to destinations. By eliminating data latency, you ensure that your analytics, machine learning models, and operational dashboards are always working with the most current data.

Consider how data latency affects your business:

- Delayed Insights: Traditional ETL processes introduce delays that can result in missed opportunities or delayed responses to critical events.

- Inefficient Decision-Making: Outdated data can lead to decisions based on incomplete or inaccurate information.

- Competitive Disadvantage: In fast-paced markets, real-time data access can be the difference between leading and lagging behind competitors.

By integrating Estuary Flow, you can rethink your data architecture to prioritize immediacy and responsiveness, enabling real-time analytics and decision-making that were previously unattainable with traditional ETL processes.

Is our ETL complexity hindering productivity, and can Estuary Flow free up our data engineers for strategic projects?

Maintaining traditional ETL pipelines often requires significant time and resources:

- Complex Maintenance: ETL processes involve intricate workflows that are prone to errors and require constant monitoring and updating.

- Resource Drain: Data engineers spend a disproportionate amount of time troubleshooting pipelines and putting out fires instead of driving innovation.

- Scalability Challenges: As data volume grows, traditional ETL pipelines can become bottlenecks and need to be highly optimized in order to keep the system afloat and avoid exploding costs.

Estuary Flow's fully managed, serverless architecture solves these problems in an easy-to-use and cost-effective way:

- Minimal Maintenance: Estuary Flow’s ability to handle up to 7gb/second with ease and built-in fault tolerance removes the need for manual intervention from the data team to handle issues related to scaling and system availability.

- Rapid Deployment: Pre-built connectors and an intuitive UI enable non-technical and technical teams alike to set up data pipelines in a matter of minutes.

- Focus on Value Creation: Engineering resources can be redirected to support strategic initiatives instead of fiddling with the typically complex configurations of data ingestion pipelines.

By adopting Estuary Flow, you can eliminate the overhead associated with traditional ETL, empowering your team to contribute more strategically to the organization's goals.

Can Estuary Flow's cost-effective solution meet our real-time data needs without straining our budget?

First, let’s establish that modern organizations demand real-time data processing for a few reasons:

- Customer and Stakeholder Expectations: Users expect instantaneous responses and up-to-date information, and they expect it today. Not yesterday, not a few hours ago, they expect it right now.

- Emerging Technologies Demand It: IoT devices, AI, and machine learning models require real-time data feeds to be leveraged most effectively.

Despite this being a critical need for many organizations, budget constraints often limit the ability to adopt cutting-edge solutions for a few more reasons:

- High Costs of Other SaaS Solutions: Platforms like Fivetran or Confluent are prohibitively expensive for high-volume, real-time integrations. A simple MySQL-to-Snowflake connector on Fivetran could run you a five-figure bill each month if you have even a moderately low data volume (which most organizations have in this day and age).

- Infrastructure Investments: Taking the “build” route over the “buy” route means that you need to provision all of the infrastructure yourself. But it’s never just provisioning infrastructure. It’s also scaling, monitoring, optimizing, debugging, and a dozen other tasks just to get a simple pipeline going.

- Hidden Operational Expenses: Ongoing maintenance and operational costs add to the total cost of ownership, which is often overlooked. Every engineering hour spent on building out a custom data integration application is another hour that could’ve been spent on strategic initiatives. And you can trust us when we tell you that it takes a lot of engineering hours to build out a data integration application. We would know!

To address these blockers, Estuary Flow is unique in the SaaS data integration landscape in that it offers a solution for real-time data integrations that is both low-effort and very cost-effective relative to others in the space.

By integrating Estuary Flow, you can meet the real-time demands of modern applications without overextending your budget, positioning your organization to capitalize on new opportunities and stay ahead of the competition.

About the author

Dani is a data professional with a rich background in data engineering and real-time data platforms. At Estuary, Dani focuses on promoting cutting-edge streaming solutions, helping to bridge the gap between technical innovation and developer adoption. With deep expertise in cloud-native and streaming technologies, Dani has successfully supported startups and enterprises in building robust data solutions.
