Modern businesses rely heavily on data, and storing it in large volumes can get complex. This is where the traditional data warehouse comes in. With the rise of cloud technologies, you may think that traditional data warehouses are a thing of the past. But these data warehouses continue to prove their worth by providing the reliable, structured, historical data that accurate analytics depend on.

Looking at where the data warehousing market is headed, experts predict it will reach $7.69 billion by 2028. That growth underscores the need for efficient data management strategies.

Like any other technology, traditional data warehouses come with their fair share of challenges. But we can tackle these obstacles head-on, and the key is getting back to basics.

This guide explores the layers of a traditional data warehouse and gives an overview of the key elements and frameworks that form the blueprint of data warehousing.

What Is A Traditional Data Warehouse?

A traditional data warehouse is a comprehensive system that brings together data from different sources within an organization. Its primary role is to act as a centralized data repository used for analytical and reporting purposes.

Traditional warehouses are physically located on-site at your business premises. That means you are responsible for acquiring the necessary hardware, setting up server rooms, and hiring staff to manage the system. These data warehouses are also called on-premises, on-prem, or on-premise data warehouses.

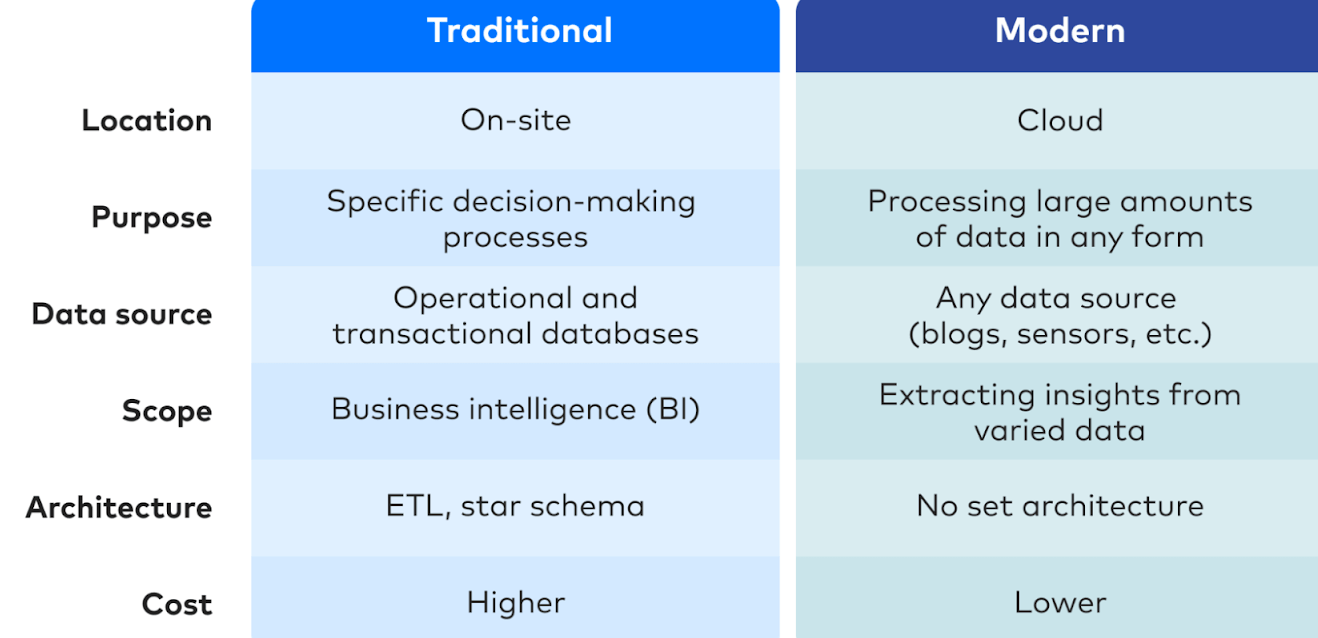

Traditional Vs. Cloud Data Warehouse: Exploring The Differences

Traditional data warehouses are multi-tiered structures made up of servers, data stores, and applications. Typically hosted on-premises, they handle large volumes of data. Still, these systems have limitations, especially in interoperability and system orchestration, and the burden of managing and updating their technology adds overhead.

Cloud data warehouses, on the other hand, take advantage of cloud computing, giving data teams the benefits of massively parallel processing (MPP). These modern data warehouses are managed by third-party providers, so you no longer have to worry about software updates or degrading hardware.

While cloud-based data warehouses have their benefits, there are many areas where traditional data warehouses shine. Here are a few points to consider:

- Predictable Costs: You have better control over long-term costs because you don't pay variable prices based on data storage or usage.

- Legacy Systems Integration: If your company heavily relies on legacy systems, traditional data warehouses have an edge when it comes to integration.

- Network Dependency: In remote or less-developed areas with limited internet connectivity, traditional data warehouses provide more reliable access to stored data.

- Compliance & Regulation: For industries with strict compliance and regulatory requirements, traditional data warehouses provide a more straightforward path to meeting those standards.

- Data Sovereignty: In certain regions or industries, data sovereignty is a major concern. Traditional data warehouses provide a sense of assurance that data remains within the physical boundaries of the organization or the country.

- Security & Control: Traditional data warehouses offer a higher degree of security and control. Because the data is stored on-premises, you keep full control over your sensitive information and the infrastructure it runs on.

Core Elements Of A Traditional Data Warehouse: Facts, Dimensions, & Measures

Traditional data warehouses rely on three core components to store and structure data. Let’s take a look.

Facts

Facts are the key units of information in a data warehouse, each representing a specific event or transaction. Take a bookshop as an example: every sale of a book at a specific price is a fact recorded in the data warehouse.

Measures

Measures are numerical values associated with facts that provide detailed information about each fact. They help in quantitative data analysis and decision-making.

Continuing with our bookshop example, some measures associated with the fact ‘sold 20 copies of a particular book’ will be:

- Number of books sold - 20

- Price per book - $15

Dimensions

Dimensions offer context to facts and measures. They act as labels or categories to simplify data interpretation. For the bookshop example, some dimensions associated with the fact ‘sold 20 copies of a particular book’ include:

- The title of the book sold

- The date and time of the sale

- The location of the bookshop
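To make the distinction concrete, here is a minimal Python sketch that models the bookshop sale as a single fact record, keeping its measures (numbers you aggregate) separate from its dimensions (context you filter and group by). The class names, field names, book title, store location, and timestamp are all hypothetical, chosen only for illustration; the derived `revenue` measure is likewise an added example.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SaleDimensions:
    """Context for a sale: what was sold, when, and where (hypothetical fields)."""
    book_title: str
    sold_at: datetime
    store_location: str


@dataclass(frozen=True)
class SaleFact:
    """One sales event: the dimensions give context, the numeric fields are measures."""
    dimensions: SaleDimensions
    quantity_sold: int   # measure: number of books sold
    unit_price: float    # measure: price per book, in dollars

    @property
    def revenue(self) -> float:
        # A derived measure computed from the two stored measures.
        return self.quantity_sold * self.unit_price


fact = SaleFact(
    dimensions=SaleDimensions(
        book_title="Example Title",
        sold_at=datetime(2023, 5, 1, 14, 30),
        store_location="Downtown",
    ),
    quantity_sold=20,
    unit_price=15.0,
)
print(fact.revenue)  # 300.0
```

In a real warehouse the dimensions would live in their own tables and the fact would hold only keys plus measures; the nested object here just keeps the roles visible.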

The Normalization & Denormalization Processes

Data in a traditional data warehouse undergoes processes called normalization and denormalization to optimize efficiency. Let’s take a look.

- Normalization organizes related pieces of data into separate tables to eliminate duplicate data and improve data accuracy. This speeds up searching and sorting, keeps tables simple so data can be modified quickly, and cuts redundant data to save disk space.

- Denormalization deliberately reintroduces redundancy into normalized data, for example by copying descriptive attributes into the tables that reference them. Queries then need fewer joins, which makes the data easier to read and manipulate and gives data analysts a more efficient workflow.
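The two processes can be sketched with Python's built-in `sqlite3` module, used here only as a convenient stand-in for a warehouse RDBMS. The `books`, `sales`, and `sales_wide` tables and their rows are hypothetical. The normalized tables store each book once; the denormalized table copies the book details onto every sale.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Normalized: each book's details are stored once, and sales reference them by key.
conn.execute("CREATE TABLE books (book_id INTEGER PRIMARY KEY, title TEXT, author TEXT)")
conn.execute(
    "CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, "
    "book_id INTEGER REFERENCES books (book_id), quantity INTEGER)"
)
conn.executemany("INSERT INTO books VALUES (?, ?, ?)",
                 [(1, "Book A", "Author X"), (2, "Book B", "Author Y")])
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", [(1, 1, 20), (2, 1, 5), (3, 2, 7)])

# Denormalized: copy title and author onto every sale row so reads need no join.
conn.execute("""
    CREATE TABLE sales_wide AS
    SELECT s.sale_id, b.title, b.author, s.quantity
    FROM sales AS s
    JOIN books AS b ON b.book_id = s.book_id
""")

rows = conn.execute("SELECT title, author, quantity FROM sales_wide ORDER BY sale_id").fetchall()
print(rows)
# [('Book A', 'Author X', 20), ('Book A', 'Author X', 5), ('Book B', 'Author Y', 7)]
```

'Book A' and 'Author X' now appear twice in `sales_wide`: that repetition is the redundancy denormalization accepts in exchange for join-free reads.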

Structuring A Traditional Data Warehouse: The 3-Step Design Process

When you're setting up a traditional data warehouse, you can't just put all the data into one big table. Instead, the data is broken down into several smaller tables that are designed to be joined together. The main table is called the fact table, and it's surrounded by other tables known as dimension tables.

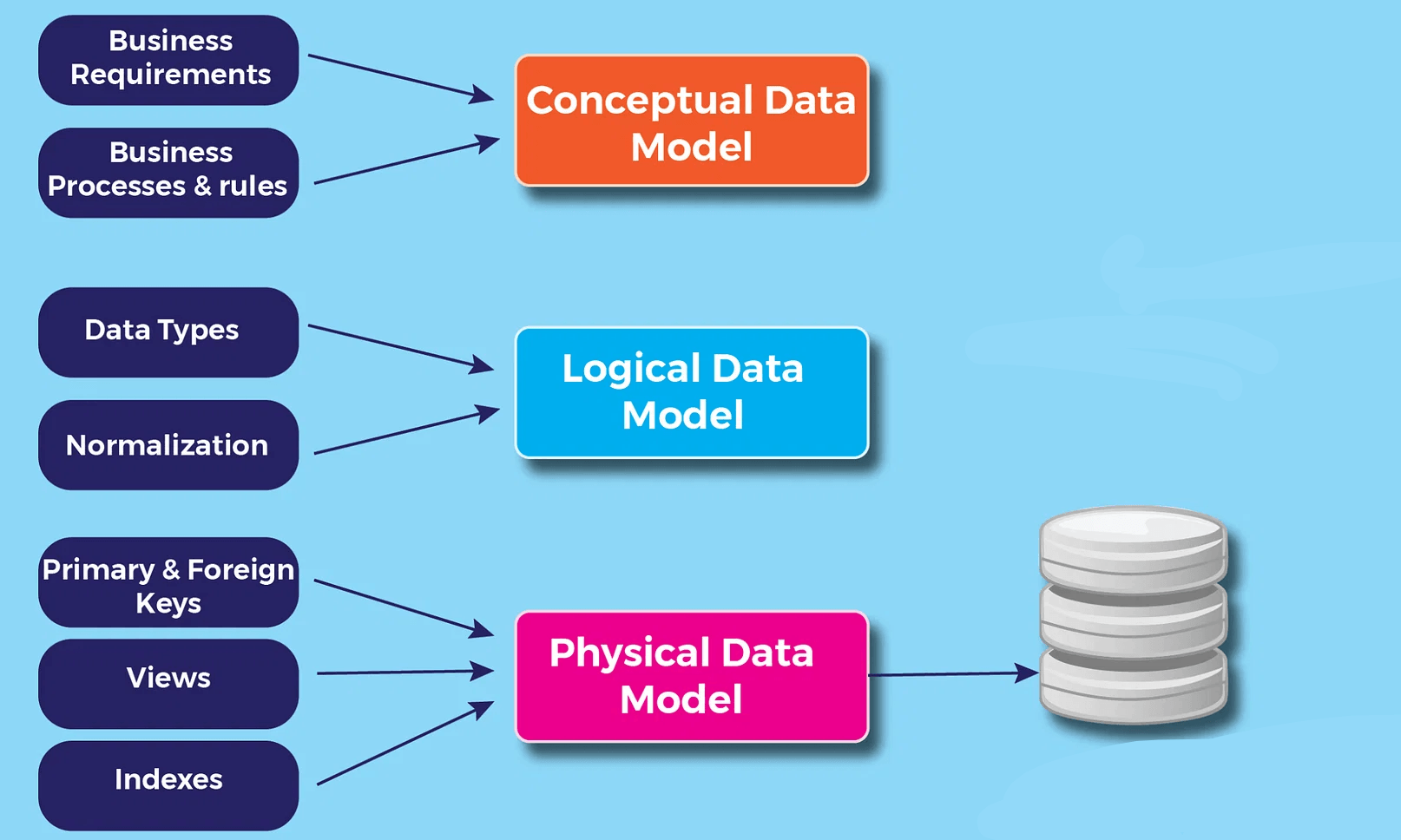

Designing a traditional data warehouse typically involves 3 key stages:

Conceptual Data Model: Defining Data & Relationships

The design journey begins with the creation of a conceptual data model. This model maps out the desired data and the overarching relationships between them. Let's take an example where we need to store sales data. Here, the main fact table is 'Sales,' while 'Time,' 'Product,' and 'Store' are dimension tables offering detailed information about each sale.

Logical Data Model: Describing Data In Detail

The second stage is crafting a logical data model that describes the data in detail, in simple and understandable language. Here, each table's content is fleshed out. For instance, the dimension tables (Time, Product, and Store) would list their primary keys along with the attributes they hold, while the Sales fact table includes foreign keys for joining with the dimension tables.

Physical Data Model: Implementation In Code

The last step is about establishing a physical data model. This provides the guidelines for implementing the data warehouse in code. It outlines tables, their structure, and the relationships among them, and specifies data types for each column.

Naming conventions play a critical role at this stage. A common convention, for instance, prefixes dimension tables with 'DIM_' and fact tables with 'FACT_', with every name written in uppercase and words separated by underscores.
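As a rough illustration of a physical model for the Sales example, the sketch below creates the three dimension tables and one fact table in an in-memory SQLite database, following the DIM_/FACT_ naming convention. Every column name and type is a hypothetical choice; a production warehouse on another RDBMS would use its own types and constraints.

```python
import sqlite3

# Hypothetical physical model: three dimension tables and one fact table.
DDL = """
CREATE TABLE DIM_TIME (
    TIME_KEY      INTEGER PRIMARY KEY,
    FULL_DATE     TEXT NOT NULL,
    MONTH         INTEGER NOT NULL,
    YEAR          INTEGER NOT NULL
);
CREATE TABLE DIM_PRODUCT (
    PRODUCT_KEY   INTEGER PRIMARY KEY,
    PRODUCT_NAME  TEXT NOT NULL,
    CATEGORY      TEXT
);
CREATE TABLE DIM_STORE (
    STORE_KEY     INTEGER PRIMARY KEY,
    STORE_NAME    TEXT NOT NULL,
    CITY          TEXT
);
CREATE TABLE FACT_SALES (
    TIME_KEY      INTEGER NOT NULL REFERENCES DIM_TIME (TIME_KEY),
    PRODUCT_KEY   INTEGER NOT NULL REFERENCES DIM_PRODUCT (PRODUCT_KEY),
    STORE_KEY     INTEGER NOT NULL REFERENCES DIM_STORE (STORE_KEY),
    QUANTITY_SOLD INTEGER NOT NULL,
    UNIT_PRICE    REAL NOT NULL
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(DDL)

tables = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(tables)  # ['DIM_PRODUCT', 'DIM_STORE', 'DIM_TIME', 'FACT_SALES']
```

Because foreign keys are enabled, inserting a FACT_SALES row whose keys have no matching dimension rows fails with `sqlite3.IntegrityError`, which is how the physical model enforces the relationships the logical model describes.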

The Components, Processes, & Schemas Of Data Warehousing

Let’s explore the different components of a traditional data warehouse and see how they work.

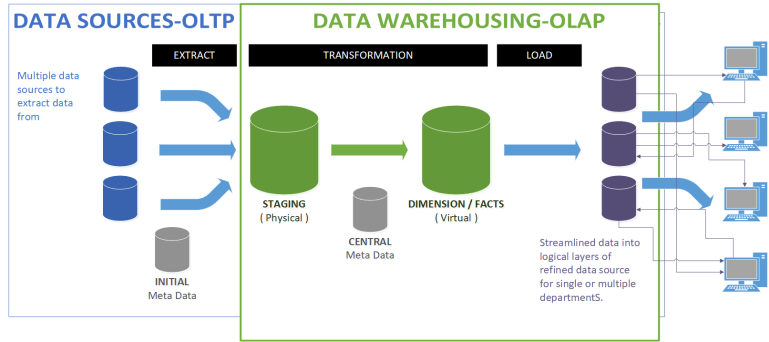

OLTP & OLAP In Data Architecture

The functionality of an enterprise data warehouse depends on 2 elements: Online Transaction Processing (OLTP) and Online Analytical Processing (OLAP).

OLTP: The Front-End Transactions

OLTP systems are known for short write transactions that interact directly with front-end applications. These databases prioritize fast query processing and handle only current data. OLTP is an important tool for businesses because it captures the data behind business processes and supplies the raw data for the data warehouse.

OLAP: For Historical Data

OLAP, on the other hand, powers complex read queries for in-depth analysis of the historical transactional data stored in the data warehouse. With OLAP, you can slice the data, drill down into specific details, and pivot the information to uncover valuable insights and trends.

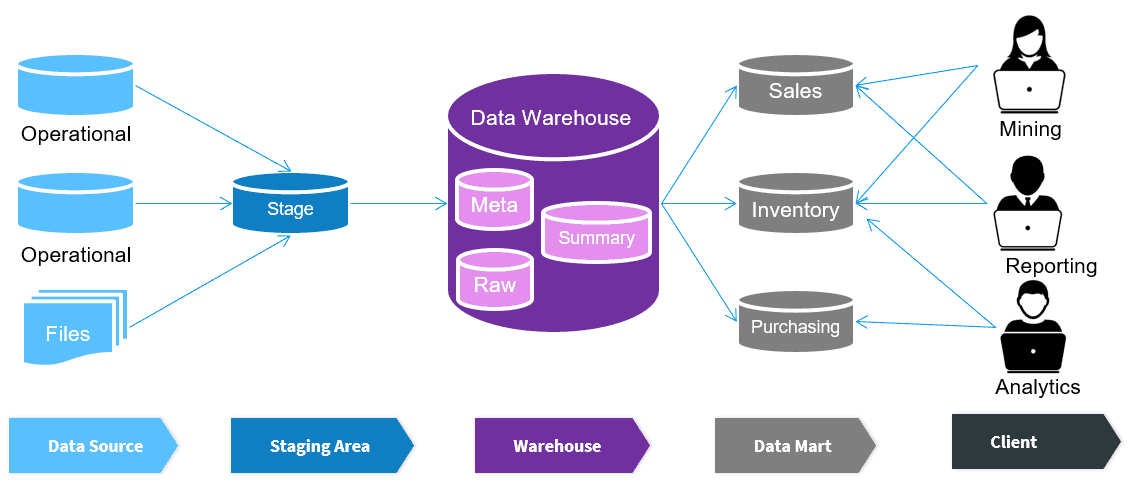

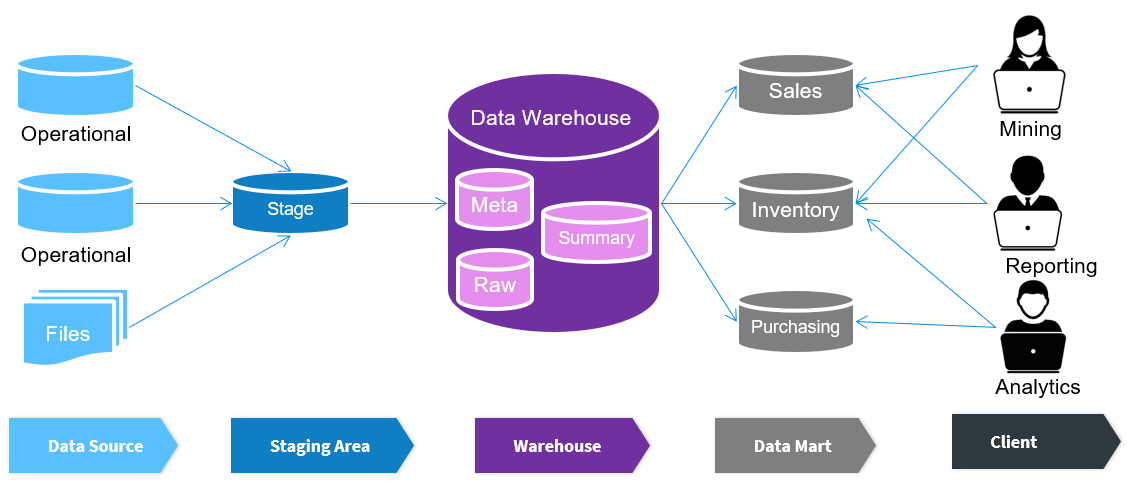

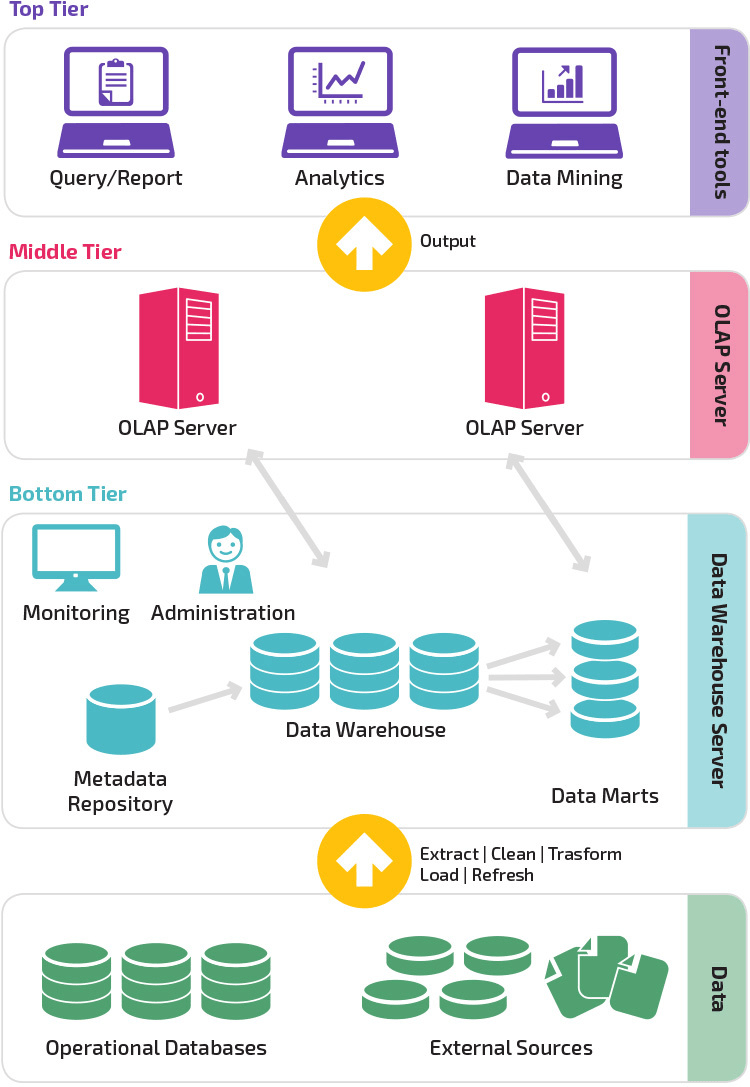
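The contrast between the two workloads can be sketched in a few lines of Python with `sqlite3`. The `orders` table, regions, years, and amounts are hypothetical, and a single SQLite database stands in for what would normally be a separate OLTP database and data warehouse. The loop shows OLTP-style short writes; the queries show an OLAP-style slice and pivot.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, region TEXT, year INTEGER, amount REAL)"
)

# OLTP-style workload: many small write transactions, each recording one business event.
new_orders = [
    ("North", 2022, 100.0),
    ("South", 2022, 80.0),
    ("North", 2023, 120.0),
    ("South", 2023, 90.0),
    ("North", 2023, 30.0),
]
for region, year, amount in new_orders:
    with conn:  # commits each insert as its own short transaction
        conn.execute(
            "INSERT INTO orders (region, year, amount) VALUES (?, ?, ?)",
            (region, year, amount),
        )

# OLAP-style slice: fix one dimension (year = 2023) and aggregate over another (region).
slice_2023 = conn.execute(
    "SELECT region, SUM(amount) FROM orders WHERE year = 2023 "
    "GROUP BY region ORDER BY region"
).fetchall()
print(slice_2023)  # [('North', 150.0), ('South', 90.0)]

# OLAP-style pivot: turn year values into columns using conditional aggregation.
pivot = conn.execute("""
    SELECT region,
           SUM(CASE WHEN year = 2022 THEN amount ELSE 0 END) AS y2022,
           SUM(CASE WHEN year = 2023 THEN amount ELSE 0 END) AS y2023
    FROM orders
    GROUP BY region
    ORDER BY region
""").fetchall()
print(pivot)  # [('North', 100.0, 150.0), ('South', 80.0, 90.0)]
```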

The 3-Tier Architecture Of Traditional Data Warehouses

Traditional data warehouse architecture generally employs a 3-tier structure.

Bottom Tier: The Database Server

The bottom tier houses a database server, usually a relational database management system (RDBMS). It extracts data from various sources through a gateway, ingesting data from operational databases as well as other sources such as CSV and JSON files.
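A minimal sketch of bottom-tier ingestion, assuming an in-memory SQLite database stands in for the RDBMS and that the two inline strings stand in for a CSV file and a JSON file. The `raw_orders` table and its columns are hypothetical. It shows records from both formats landing in one relational table, with types normalized on the way in.

```python
import csv
import io
import json
import sqlite3

# Hypothetical extracts from two sources; in practice these would be files or API responses.
CSV_EXTRACT = "order_id,amount\n1,19.99\n2,5.00\n"
JSON_EXTRACT = '[{"order_id": 3, "amount": 12.5}]'

conn = sqlite3.connect(":memory:")  # in-memory SQLite stands in for the bottom-tier RDBMS
conn.execute("CREATE TABLE raw_orders (order_id INTEGER, amount REAL, source TEXT)")

# CSV fields arrive as strings, so cast them to the column types explicitly.
for row in csv.DictReader(io.StringIO(CSV_EXTRACT)):
    conn.execute(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        (int(row["order_id"]), float(row["amount"]), "csv"),
    )

# Parsed JSON already carries numeric types.
for record in json.loads(JSON_EXTRACT):
    conn.execute(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        (record["order_id"], record["amount"], "json"),
    )
conn.commit()

counts = conn.execute(
    "SELECT source, COUNT(*) FROM raw_orders GROUP BY source ORDER BY source"
).fetchall()
print(counts)  # [('csv', 2), ('json', 1)]
```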

Middle Tier: The OLAP Server

The middle tier consists of an OLAP server that works in one of two ways. It either implements multidimensional operations directly (MOLAP) or translates operations on multidimensional data into standard relational operations (ROLAP).
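The second approach, translating multidimensional operations into relational ones (often called ROLAP), can be sketched as a small function that turns a "measure by dimensions, sliced by filters" request into a SQL `GROUP BY` query. This is a hypothetical illustration, not how any particular OLAP server works: the `rollup_to_sql` function, the `sales` table, and the dimension and measure whitelists are all assumptions. The whitelists keep column names out of user control, while filter values are passed as bound parameters.

```python
import sqlite3

# Hypothetical whitelists: only these columns may be used as dimensions or measures.
DIMENSIONS = {"region", "product", "year"}
MEASURES = {"amount"}


def rollup_to_sql(measure, group_by, filters):
    """Translate 'SUM of a measure by some dimensions, sliced by filters'
    into a relational GROUP BY query plus its bound parameters."""
    if measure not in MEASURES or not group_by or not set(group_by) <= DIMENSIONS:
        raise ValueError("unknown measure or dimension")
    if not set(filters) <= DIMENSIONS:
        raise ValueError("unknown filter dimension")
    cols = ", ".join(group_by)
    sql = f"SELECT {cols}, SUM({measure}) FROM sales"
    params = []
    if filters:
        # Slicing on a dimension becomes a WHERE clause with bound parameters.
        sql += " WHERE " + " AND ".join(f"{dim} = ?" for dim in filters)
        params = list(filters.values())
    # Rolling up along dimensions becomes GROUP BY.
    sql += f" GROUP BY {cols} ORDER BY {cols}"
    return sql, params


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, year INTEGER, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", [
    ("North", "Books", 2023, 50.0),
    ("North", "Music", 2023, 20.0),
    ("South", "Books", 2023, 40.0),
    ("South", "Books", 2022, 10.0),
])

# "Total amount by region, sliced to the year 2023"
sql, params = rollup_to_sql("amount", ["region"], {"year": 2023})
result = conn.execute(sql, params).fetchall()
print(sql)     # SELECT region, SUM(amount) FROM sales WHERE year = ? GROUP BY region ORDER BY region
print(result)  # [('North', 70.0), ('South', 40.0)]
```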

Top Tier: Tools For Analysis & Intelligence

The top tier contains the querying and reporting tools to provide data analysis and business intelligence capabilities.

Virtual Data Warehouse & Data Mart

Virtual data warehousing runs distributed queries across several databases, which eliminates the need for a physical data warehouse.

Data marts, on the other hand, are subsets of a data warehouse designed for distinct business functions like sales or finance. A typical data warehouse combines information from multiple data marts, each serving a specific business function.

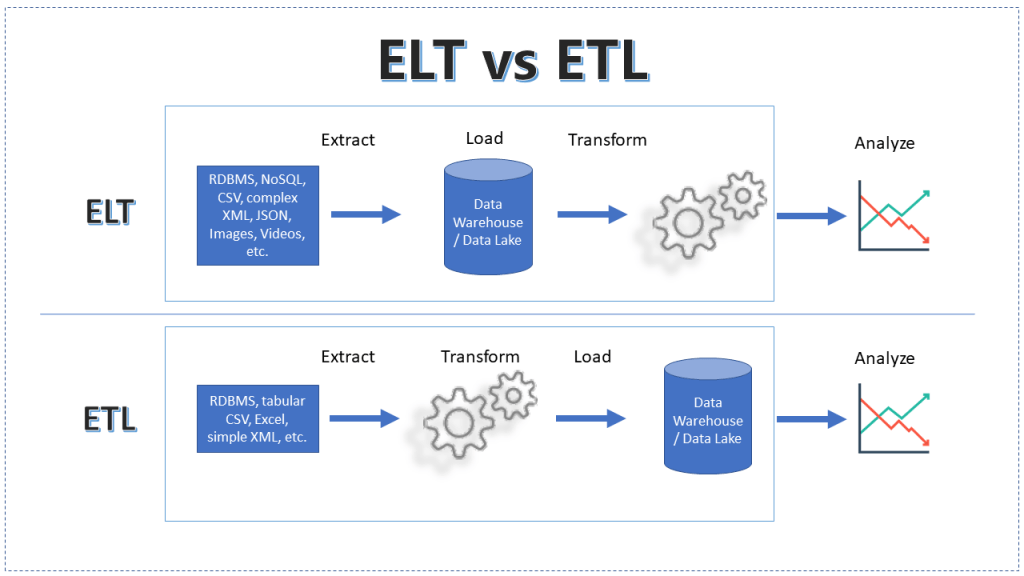
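Both ideas can be approximated locally with SQLite's `ATTACH DATABASE`, assuming two attached in-memory databases are an acceptable stand-in for independent systems (a real virtual warehouse would federate queries across separate servers). The `orders` and `customers` tables, the `crm` schema name, and the `retail_sales_mart` table are hypothetical. The first query answers a question across both sources without copying them; the second persists a narrow, function-specific subset, the way a data mart would.

```python
import sqlite3

# Two separate databases stand in for independent operational systems.
conn = sqlite3.connect(":memory:")                 # "main": an orders system
conn.execute("ATTACH DATABASE ':memory:' AS crm")  # "crm": a separate customer system

conn.execute("CREATE TABLE main.orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE crm.customers (customer_id INTEGER, name TEXT, segment TEXT)")
conn.executemany("INSERT INTO main.orders VALUES (?, ?, ?)",
                 [(1, 10, 50.0), (2, 11, 30.0), (3, 10, 20.0)])
conn.executemany("INSERT INTO crm.customers VALUES (?, ?, ?)",
                 [(10, "Acme", "Retail"), (11, "Globex", "Wholesale")])

# Virtual-warehouse style: one query spans both sources; nothing is copied first.
federated = conn.execute("""
    SELECT c.name, SUM(o.amount)
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.name ORDER BY c.name
""").fetchall()
print(federated)  # [('Acme', 70.0), ('Globex', 30.0)]

# Data-mart style: a narrower, persisted subset aimed at one function (retail sales).
conn.execute("""
    CREATE TABLE retail_sales_mart AS
    SELECT o.order_id, c.name, o.amount
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.customer_id = o.customer_id
    WHERE c.segment = 'Retail'
""")
mart_count = conn.execute("SELECT COUNT(*) FROM retail_sales_mart").fetchone()
print(mart_count)  # (2,)
```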

Data Loading Approaches: ETL vs. ELT

Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT) are 2 methods of loading data into a system.

Extract, Transform, Load (ETL)

In ETL, data is extracted from source systems (typically transactional systems), transformed into a format suitable for analysis, and then loaded into the data warehouse. This method uses a staging database and applies a series of rules to the extracted data before it is loaded.

Extract, Load, Transform (ELT)

ELT fetches data from various sources and loads it directly into the target system, which then transforms the data as needed for analysis. ELT enables faster loading than ETL, but it needs a target system powerful enough to perform transformations on demand.
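The difference comes down to where the transformation runs. In this minimal sketch, assuming a hypothetical raw extract and an in-memory SQLite database as the target, the `etl` function cleans rows in Python before loading them, while the `elt` function loads the raw rows untouched and then transforms them with SQL inside the target. Table names and the cleaning rules (trimming, uppercasing, casting) are illustrative assumptions; both paths end with the same clean data.

```python
import sqlite3

# Hypothetical raw extract: IDs and amounts as strings, inconsistent country codes.
SOURCE_ROWS = [("1", " us ", "20.00"), ("2", "DE", "5.00"), ("3", "us", "12.50")]


def etl(conn):
    """ETL: clean the rows in the pipeline (the staging step), then load them."""
    staged = [(int(i), c.strip().upper(), float(a)) for i, c, a in SOURCE_ROWS]
    conn.execute("CREATE TABLE etl_orders (order_id INTEGER, country TEXT, amount REAL)")
    conn.executemany("INSERT INTO etl_orders VALUES (?, ?, ?)", staged)


def elt(conn):
    """ELT: load the raw rows as-is, then transform them inside the target with SQL."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, country TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", SOURCE_ROWS)
    conn.execute("""
        CREATE TABLE elt_orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               UPPER(TRIM(country))      AS country,
               CAST(amount AS REAL)      AS amount
        FROM raw_orders
    """)


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)

summary = "SELECT country, SUM(amount) FROM {} GROUP BY country ORDER BY country"
etl_rows = conn.execute(summary.format("etl_orders")).fetchall()
elt_rows = conn.execute(summary.format("elt_orders")).fetchall()
print(etl_rows)  # [('DE', 5.0), ('US', 32.5)]
print(elt_rows)  # [('DE', 5.0), ('US', 32.5)]
```

With ELT the raw table is kept in the target, so a transformation rule can be changed and rerun later without re-extracting from the source.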

Schemas In A Traditional Data Warehouse

A schema is the logical description of the entire database, including the names and descriptions of records and data items. There are 3 main types of schemas used in a traditional data warehouse.

- Star Schema: The simplest schema, in which each dimension is represented by a single table containing a set of attributes. A fact table at the center contains keys to each dimension along with additional attributes. However, because each dimension gets only one table, attribute values repeat across rows, which can cause data redundancy.

- Snowflake Schema: It normalizes some dimension tables and splits the data into additional tables to address the redundancy issue.

- Fact Constellation Schema: Also called galaxy schema, it is more complex and consists of multiple fact tables, each with its own dimensions. These fact tables can share dimension tables for a flexible and comprehensive view of data relationships.
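The star and snowflake layouts can be compared side by side with a short `sqlite3` sketch. All table names, products, and categories are hypothetical. In the star version the category name repeats on each product row; in the snowflake version it is normalized into its own table, which removes the repetition at the cost of one extra join. A fact constellation would simply add a second fact table (for example, a returns table) that reuses the same dimension tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star schema: one denormalized product dimension; the category name repeats per product.
CREATE TABLE star_dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category_name TEXT);

-- Snowflake schema: the category attribute is normalized into its own table.
CREATE TABLE snow_dim_category (category_key INTEGER PRIMARY KEY, category_name TEXT);
CREATE TABLE snow_dim_product (
    product_key  INTEGER PRIMARY KEY,
    name         TEXT,
    category_key INTEGER REFERENCES snow_dim_category (category_key)
);

-- Both layouts share the same fact table.
CREATE TABLE fact_sales (product_key INTEGER, quantity INTEGER);

INSERT INTO star_dim_product VALUES (1, 'Novel A', 'Fiction'), (2, 'Novel B', 'Fiction'), (3, 'Atlas', 'Reference');
INSERT INTO snow_dim_category VALUES (1, 'Fiction'), (2, 'Reference');
INSERT INTO snow_dim_product VALUES (1, 'Novel A', 1), (2, 'Novel B', 1), (3, 'Atlas', 2);
INSERT INTO fact_sales VALUES (1, 20), (2, 5), (3, 7);
""")

# Star: a single join from the fact table to the dimension.
star = conn.execute("""
    SELECT d.category_name, SUM(f.quantity)
    FROM fact_sales AS f
    JOIN star_dim_product AS d ON d.product_key = f.product_key
    GROUP BY d.category_name ORDER BY d.category_name
""").fetchall()

# Snowflake: an extra join through the normalized category table.
snow = conn.execute("""
    SELECT c.category_name, SUM(f.quantity)
    FROM fact_sales AS f
    JOIN snow_dim_product AS p ON p.product_key = f.product_key
    JOIN snow_dim_category AS c ON c.category_key = p.category_key
    GROUP BY c.category_name ORDER BY c.category_name
""").fetchall()

print(star)  # [('Fiction', 25), ('Reference', 7)]
print(snow)  # [('Fiction', 25), ('Reference', 7)]
```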

The Challenges Of Traditional Data Warehouses

Traditional data warehouses have been a reliable tool for businesses for quite some time, and they've done a good job. But as data and business needs grow more complex, so do the challenges. Let’s discuss them.

Maintenance Cost & Inflexibility

Traditional data warehouse infrastructure can be quite expensive to maintain. Evolving data privacy laws and compliance demands change reporting requirements, and each change calls for modifications to the warehouse.

The rigid structure of these warehouses makes such changes costly and time-consuming, and it struggles to meet real-time data requirements. Licensing and maintenance costs are also high, especially on platforms such as SQL Server, Teradata, or Oracle.

Unreliable Results

Traditional data warehouses are known to have high failure rates. This inconsistency means businesses can trust the results only half the time, and decisions made without reliable, data-driven insights risk losing customer trust.

Issues With Data Management

Managing the structure and optimization of data is crucial for effective processing. As data volume grows, it becomes increasingly complex to structure data and maintain efficient ETL processes.

High Costs & Data Quality Concerns

Data quality is a major issue in traditional data warehouses. When data is extracted from various sources and loaded into the warehouse, discrepancies and errors can creep in, producing inconsistent and misleading results.

Data duplication is another common problem. Data aging and staleness are further concerns when the data is not refreshed often enough. And integrating data from disparate sources with different formats and structures can lead to integration challenges and data transformation errors.
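Two of these problems, duplication and staleness, lend themselves to simple automated checks after each load. The sketch below is a hypothetical example in plain Python: the row layout `(customer_id, email, last_updated)`, the `as_of` date, and the 30-day freshness threshold are all assumptions chosen for illustration, not a standard.

```python
from collections import Counter
from datetime import date, timedelta

# Hypothetical rows after a load: (customer_id, email, last_updated).
rows = [
    (1, "a@example.com", date(2024, 1, 10)),
    (2, "b@example.com", date(2023, 6, 1)),
    (1, "a@example.com", date(2024, 1, 10)),  # duplicate of the first row
    (3, "c@example.com", date(2024, 1, 9)),
]


def find_duplicate_keys(rows):
    """Return business keys that appear more than once."""
    counts = Counter(customer_id for customer_id, _, _ in rows)
    return sorted(key for key, n in counts.items() if n > 1)


def find_stale_keys(rows, as_of, max_age):
    """Return keys whose most recent update is older than max_age."""
    latest = {}
    for customer_id, _, updated in rows:
        latest[customer_id] = max(updated, latest.get(customer_id, updated))
    return sorted(key for key, updated in latest.items() if as_of - updated > max_age)


print(find_duplicate_keys(rows))                                     # [1]
print(find_stale_keys(rows, date(2024, 1, 15), timedelta(days=30)))  # [2]
```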

Limitations In Speed & Scalability

Traditional data warehouses lack the agility and scalability that some modern businesses require, especially in the security industry. Changes can be hard to implement quickly because of the rigid architecture, making swift scalability an uphill task.

Inefficiencies With Non-Technical Users

Traditional data warehouses can be difficult for non-technical users to work with. Because data teams must manage and fulfill every data request, users often face delays and inefficiencies, especially in larger teams.

Applications Of Traditional Data Warehouses: A Look At 2 Real-World Examples

Let’s consider 2 case studies to understand the versatile applications and the potential challenges of implementing traditional data warehouses in different sectors.

Example 1: Using A Traditional Data Warehouse For Environmental Research

Traditional data warehouses can be transformative in managing and analyzing environmental data. The Marine Institute Ireland's SmartBay project is a prime example. The institute wanted to create a platform for testing and demonstrating new environmental technologies, potentially fostering new markets for Irish companies.

For this, the institute, in collaboration with a technology partner, developed an information system that funnels environmental data into a data warehouse. This warehouse processes and analyzes the data to present it in innovative ways that can be used for various applications.

A key aspect of the technical implementation was the centralization of data. This made the information more accessible and actionable. It combined sensor data with other databases to create a system that could quickly and easily develop and deploy new applications.

This approach helped in different ways, such as:

- Fishery managers could monitor water quality issues throughout the bay.

- Technology developers conducted sophisticated studies in near real-time.

- Alternative energy developers could assess the effectiveness of prototype wave-energy generators.

Example 2: Improving Operational Efficiency In Energy Production With A Traditional Data Warehouse

Traditional data warehouses play a key role in the energy sector, as demonstrated by a multinational energy corporation's experience. The company faced challenges in improving safety, reliability, and maintenance while looking to decrease production costs. Lack of timely access to detailed equipment data led to increased maintenance costs and reduced productivity.

To address these issues, the company implemented a traditional data warehouse that integrated data from multiple sources and provided near real-time insights. The scope was expanded to include financial data, supporting better portfolio management.

This solution brought significant improvements. It enabled effective monitoring of safety-critical and production-impact equipment, and it helped the company meet Health, Safety, and Environment (HSE) regulatory deadlines.

The new system dynamically adjusted maintenance activities based on the current condition of the asset, increasing equipment availability and reducing maintenance costs. This meant fewer trips to well sites and higher maintenance priority for critical equipment.

Addressing Traditional Data Warehouse Challenges With Estuary Flow

Managing a traditional data warehouse comes with its share of challenges, and data integration and scalability are just two of the hurdles. At Estuary, we developed a platform called Flow that tackles these issues head-on. Let’s see how Estuary Flow helps transform the landscape of traditional data warehouses.

Real-Time Data Integration

Estuary Flow provides near real-time data capture from various sources, including databases and SaaS applications. This lets you make decisions based on the most current data and identify trends in a volatile market.

Flexible Data Transformations

Data transformation is another challenge in traditional data warehouses, especially when dealing with unstructured data. Estuary Flow provides streaming SQL and JavaScript transformations so you can process and analyze your data in real time. It also features schema inference capabilities to convert unstructured data into a structured format, simplifying the data management process.

Scalability & Reliability

Built on a fault-tolerant architecture, Estuary Flow can scale to accommodate large data volumes. It also guarantees transactional consistency through exactly-once semantics, giving you an accurate view of your data.

Extensibility & Monitoring

Flow supports an open protocol for adding connectors, making it flexible and customizable. It also offers live reporting and monitoring features so you can see your data flows in real time.

Conclusion

Having stood the test of time with its established architecture and methodologies, the traditional data warehouse has proven its value across many industries over the years. While newer technologies such as big data solutions and cloud-based platforms have emerged, the traditional data warehouse remains relevant and indispensable for enterprises with established infrastructure and legacy systems.

The constant evolution of data warehousing has led to tools like Estuary Flow that can help you manage and leverage these data warehouses more efficiently.

It offers real-time data integration and transformation capabilities that can improve the efficiency of traditional data warehouses. Estuary stands out with its automated schema management, fault-tolerant architecture, and its ability to scale according to data volumes.

Sign up for free and join hundreds of companies that have already benefited from the power of Estuary Flow. For more information or assistance, feel free to contact us.
