The power and implications of data materialization

The simple concept of materialization can tell us a lot about how (and why) data is stored and represented. In this post: logs and tables; data loading and queries; exports, and more.

By Phil Fried and Olivia Iannone

If you’ve worked with relational databases, chances are you’re familiar with the concept of materialized views. On a basic level, a materialized view is an object that stores the pre-computed results of a query. This simple but powerful concept has been successfully applied in other data systems as well, and raises lots of interesting questions about how we handle data.

In this post, I’ll compare the processes of materialization and export, and their implications for how data is stored and represented. To understand this properly, we’ll get into logs and tables, as well as data loading and queries. These interrelated concepts are key to understanding Estuary Flow, but they apply broadly across technology stacks.

Note: This post was inspired by a recent discussion of Estuary’s data connectors that drive materializations. While writing, I realized there was a lot more to say on this particular topic, but be sure to check out that post for a broader discussion of how we build materialization connectors.

Materialization vs export: a primer

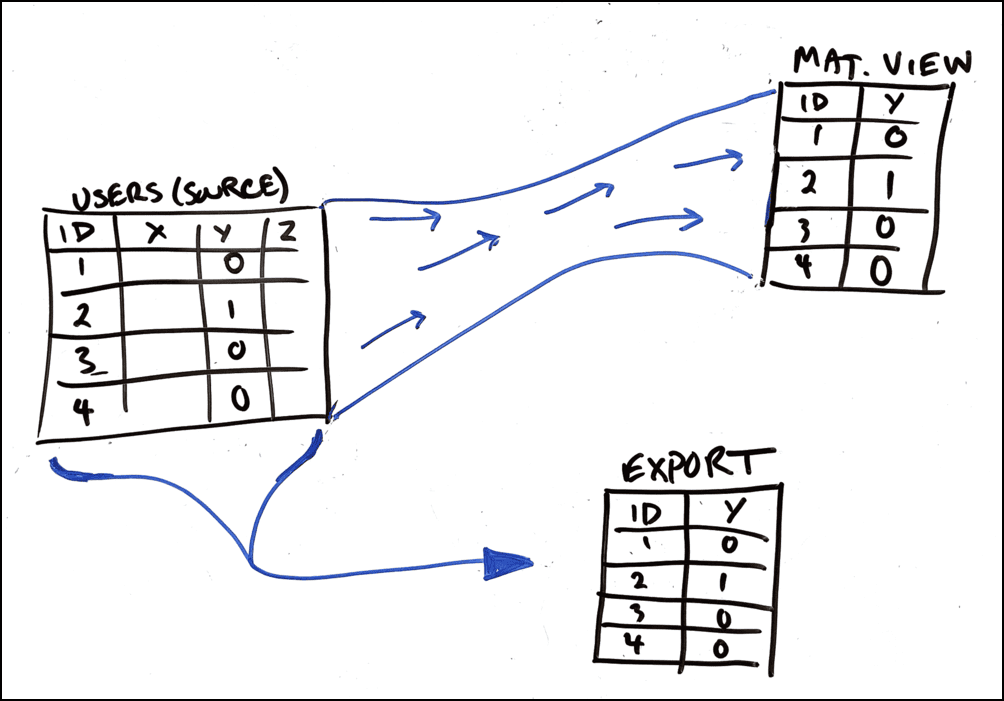

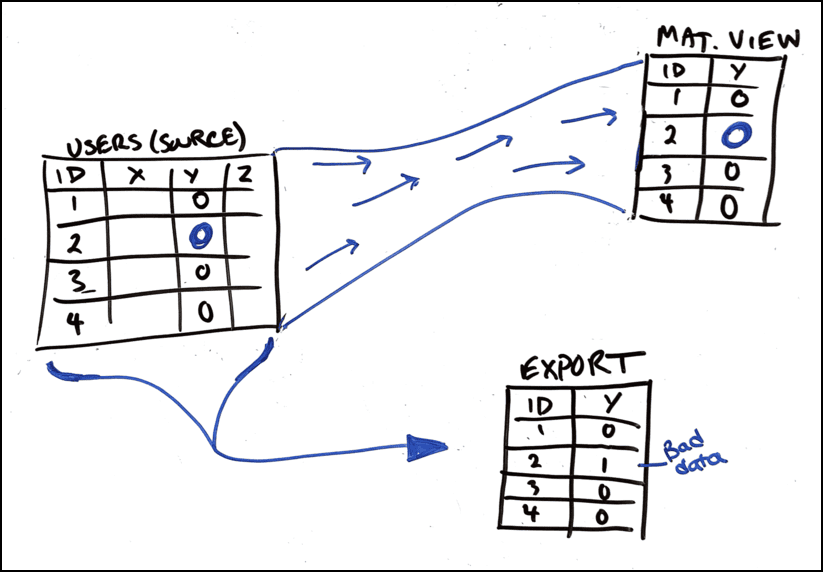

The materialization process yields a view that remains consistent, within some defined bounds, with the source of truth. Say you have a production database with a `USERS` table, and you create a materialized view of that table with sensitive columns redacted. The real `USERS` table is still the single source of truth, and the database server automatically keeps the materialized view in sync. When you update a user in the source table, that same user is automatically updated in the materialized view.

An export, in contrast, simply copies data from a source to a destination. Say you took that same `USERS` table and instead exported it to a CSV file. When you then update a user in the source table, the CSV file simply becomes out of date. There is, of course, nothing that automatically updates the CSV file. You need to export another one if you want it to include the latest updates.
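The contrast can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any real database implements materialized views: the `Table`, `RedactedView`, and `export_rows` names and the `ssn` column are hypothetical and exist only for illustration.

```python
class Table:
    """Toy source of truth that notifies registered views on every write."""

    def __init__(self):
        self.rows = {}
        self.views = []

    def upsert(self, row):
        self.rows[row["user_id"]] = dict(row)
        for view in self.views:
            view.apply(row)


class RedactedView:
    """Toy materialized view: a copy of the table with some columns hidden."""

    def __init__(self, table, hidden):
        self.hidden = set(hidden)
        self.rows = {}
        for row in table.rows.values():  # initial backfill
            self.apply(row)
        table.views.append(self)         # stay in sync from now on

    def apply(self, row):
        visible = {k: v for k, v in row.items() if k not in self.hidden}
        self.rows[row["user_id"]] = visible


def export_rows(table):
    """One-off copy of the rows as they exist right now."""
    return [dict(row) for row in table.rows.values()]


users = Table()
users.upsert({"user_id": 1, "name": "Ada", "ssn": "000-00-0000"})

view = RedactedView(users, hidden=["ssn"])
snapshot = export_rows(users)

# Update the user in the source table after both copies were made.
users.upsert({"user_id": 1, "name": "Ada L.", "ssn": "000-00-0000"})
```

After the final update, `view.rows[1]` reflects the new name, while `snapshot` still holds the old one until someone runs the export again.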

In practical terms, you could also think of the difference between materialization and export in this way: Materialization will both insert and update records in the destination, while export can only insert. This may seem obvious, but there’s a lot of interesting stuff that follows from this.

You might ask yourself, “If materialization results in a view that’s always up to date, then why would we ever use exports instead?”

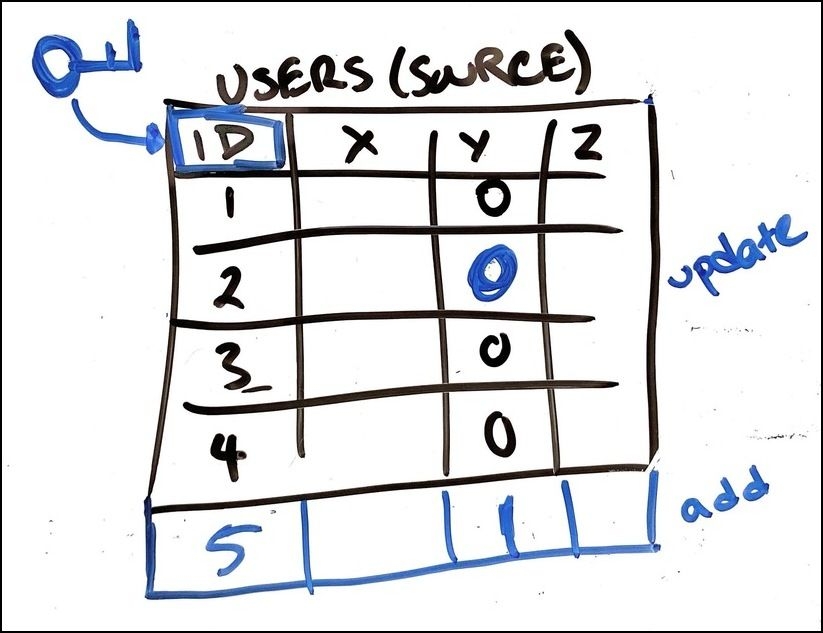

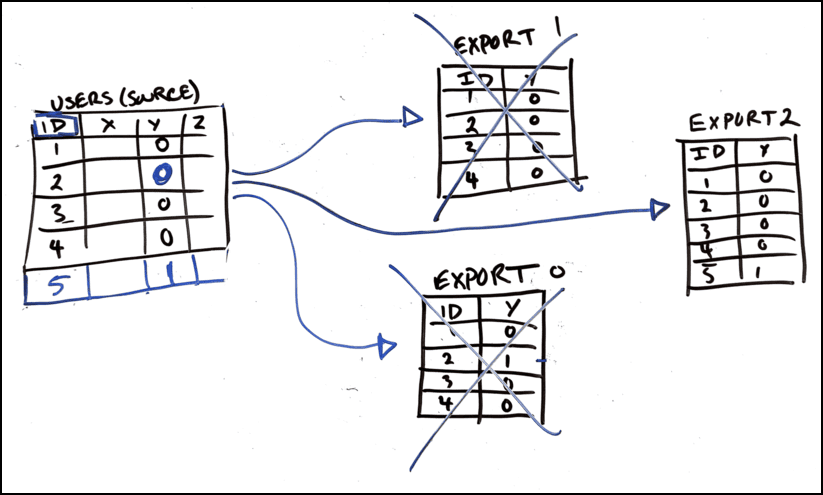

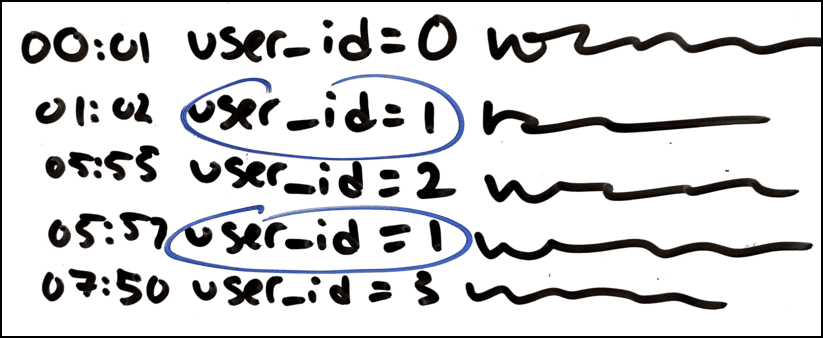

The first and most obvious reason is that, in order to materialize a dataset, you need to know the key for each record. This is how you know whether to update an existing record or insert a new one. For example, a `USERS` table might have a `user_id` column. If a new record has the same `user_id` as an existing one, you should update it. Otherwise, insert a new one. Without a key, you’d have no way of knowing which of the two to do.
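As a minimal sketch of that insert-or-update decision, assume the destination of a materialization is a plain dictionary keyed on `user_id` and the destination of an export is a plain list. The function names and sample records below are made up for illustration.

```python
def export(destination, record):
    """Export needs no key: every record is simply appended."""
    destination.append(record)


def materialize(destination, record, key="user_id"):
    """Materialization uses the key to decide between insert and update."""
    record_key = record[key]
    action = "update" if record_key in destination else "insert"
    destination[record_key] = record
    return action


records = [
    {"user_id": 1, "email": "old@example.com"},
    {"user_id": 2, "email": "b@example.com"},
    {"user_id": 1, "email": "new@example.com"},  # same key as the first record
]

exported = []
view = {}
actions = []
for record in records:
    export(exported, record)
    actions.append(materialize(view, record))
```

The export ends up with three rows, including two for `user_id` 1. The materialized destination holds two rows, and the third record is applied as an update rather than an insert.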

This is why the export paradigm has become so ubiquitous. You don’t need to know much about the data in order to export it, because it’s just a simple copy. Additionally, it’s really easy to write a new export data connector, because such connectors only require a few simple APIs to read data from a source or load it into a destination.

The problem with data exports

One problem with exports is that they’re generally not incremental. In most cases, people just re-export the entire dataset whenever they need an updated copy. Even if zero rows were modified since the last export, you still end up making another complete copy every time you run the job.

Copying all that data can get pretty expensive, both in terms of time and money. This is why people generally run exports as infrequently as they can. Why pay to export the entire table every hour, when once per day is enough? And why once per day, when you don’t really run that query that often? So why not once a week? This paradigm incentivizes using stale data because it ends up being cheaper.

Let’s talk about logs

Ending the discussion there would misrepresent reality, though. For some data, a simple copy really is just fine. You may not need to update any records in the destination if the source dataset is conceptually an append-only log.

Think about application logs, for example. They are append-only. You’d never want to update an existing log line, and there’s not really a logical key for one, anyway. Logs are simply datasets that are only ever appended to.

This property makes it really easy to keep things up to date incrementally by simply copying data from the source to the destination. Sounds a lot like an export, huh? The new data can be copied at whatever cadence you want. It could be once per week or once per second. It could even be done continuously and in real time (gasp!). All you need to do is remember where you left off reading from the source, so that you can pick back up there later.
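Here is a rough sketch of that "remember where you left off" idea, assuming the log is an in-memory list and the position is a simple integer offset. A real system would persist the offset somewhere durable; `copy_new_events` is a hypothetical helper, not part of any library.

```python
def copy_new_events(source_log, destination_log, offset):
    """Copy only the events appended since `offset`; return the new offset."""
    new_events = source_log[offset:]
    destination_log.extend(new_events)
    return offset + len(new_events)


source_log = ["line 1", "line 2"]
destination_log = []
offset = 0

# First run copies everything seen so far.
offset = copy_new_events(source_log, destination_log, offset)

# The source keeps growing; the next run copies only the new tail.
source_log.append("line 3")
offset = copy_new_events(source_log, destination_log, offset)
```

Each run does work proportional to the number of new events, not the size of the whole log, which is what makes running it frequently affordable.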

Tables vs logs

So logs are great because they’re so easy to read incrementally. If the source is a log, and the destination is also a log, then you’re all set to easily move that data in real time just by copying from source to destination. But once again, it’s not that simple, because logs aren’t perfect for everything.

Let’s reimagine that `USERS` table from the first example as a `users` log, where each event in the log represents the latest data for a given `user_id`. When you want to update an existing user, you simply add a new event to the log with that `user_id`.

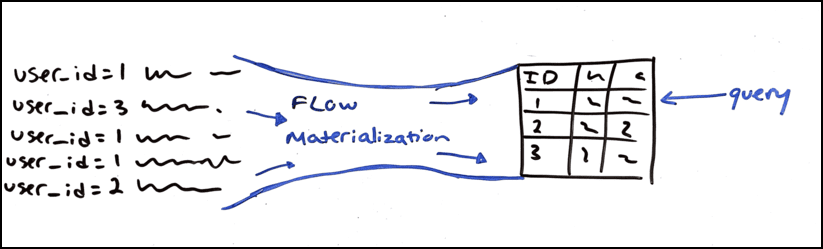

This representation makes it extremely cheap and easy to move data from a source to a destination. But when you want to query your data, things get more difficult, because the log may contain multiple events for the same `user_id`. You have to process every single event in the log in order to know the most recent value for any given id. Practically, systems that allow you to query logs must maintain lots of state in order to make the queries acceptably efficient.

A far more efficient representation for queries is something that looks more like a database table. This doesn’t necessarily mean a literal database table, but rather anything else with these same properties:

- Each instance of a primary key only occurs once.

- It’s really cheap and easy to access only the latest state of each record.

It’s far more efficient to query the table representation of `USERS`, since it provides direct access to the latest value for each `user_id`, without any duplicates.
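To make the difference concrete, here is a small sketch that assumes the `users` log is a list of dictionaries. `latest_from_log` and `build_table` are illustrative names, not an API from any particular system.

```python
def latest_from_log(log, user_id):
    """Querying the log directly: every event must be scanned."""
    latest = None
    for event in log:
        if event["user_id"] == user_id:
            latest = event  # later events overwrite earlier ones
    return latest


def build_table(log):
    """Fold the log into a table that keeps one row per key."""
    table = {}
    for event in log:
        table[event["user_id"]] = event
    return table


users_log = [
    {"user_id": 1, "name": "Ada"},
    {"user_id": 2, "name": "Grace"},
    {"user_id": 1, "name": "Ada Lovelace"},  # update for user 1
]

users_table = build_table(users_log)
```

Both `latest_from_log(users_log, 1)` and `users_table[1]` return the same record, but the first walks the entire log on every query, while the second is a single keyed lookup once the table has been built.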

To summarize, logs are ideal for when you want to move or transform data, because they make it easy to do so incrementally (much lower cost) and continuously (much lower latency). But when you want to query data, tables are often better because they allow for much faster and more direct access to the latest state that’s associated with each key.

This idea of using logs as the source of truth and tables for efficient queries is not at all new. Confluent has previously written about this same topic as it relates to their ecosystem. And Martin Kleppmann has also written and spoken on the topic.

Materialization in Flow

Materialization is how Flow can turn logs (a Flow collection) into a table that you can easily query (a materialized view).

Flow stores all collection data in journals, which are basically transactional logs backed by cloud storage. When you create a materialization in Flow, it runs a set of processes that continuously read from the collection’s journals and insert or update data in the target system based on the key defined for that collection. Flow has a growing list of materialization drivers, which each support a particular target system. One of Flow’s major goals is to make it as easy as possible to materialize collections into whatever system you want.
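The general shape of such a process can be sketched with Python's built-in `sqlite3` module. To be clear, this is not Flow's implementation or API: the journal is modeled as a plain list, the `users` table and the `materialize_new_events` function are hypothetical, and SQLite stands in for whatever target system you would actually use.

```python
import json
import sqlite3


def materialize_new_events(conn, journal, offset):
    """Apply journal events after `offset` to the table, keyed on user_id."""
    new_events = journal[offset:]
    with conn:  # one transaction per batch
        conn.executemany(
            # The primary key decides whether this inserts or replaces a row.
            "INSERT OR REPLACE INTO users (user_id, doc) VALUES (?, ?)",
            [(event["user_id"], json.dumps(event)) for event in new_events],
        )
    return offset + len(new_events)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (user_id INTEGER PRIMARY KEY, doc TEXT NOT NULL)"
)

journal = [
    {"user_id": 1, "name": "Ada"},
    {"user_id": 2, "name": "Grace"},
]
offset = materialize_new_events(conn, journal, 0)

# A later event for an existing key becomes an update, not a second row.
journal.append({"user_id": 1, "name": "Ada Lovelace"})
offset = materialize_new_events(conn, journal, offset)

rows = conn.execute(
    "SELECT user_id, doc FROM users ORDER BY user_id"
).fetchall()
```

The table ends up with one row per `user_id`, and the row for user 1 holds the most recent document. A production system would also need to store the offset durably, which this sketch leaves out.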

Flow[1] is also not the only system out there that can turn logs into tables. Several purpose-built tools exist in the Kafka ecosystem, and there’s also Rockset, Materialize, and others. But even your typical relational database, like the venerable PostgreSQL, uses a write-ahead log as the internal source of truth for data modifications, and its tables can conceptually be derived by replaying that log. I’m hand waving over a lot of details there, which vary depending on the specific database, but the conceptual model remains more or less accurate, even among new distributed SQL databases.

This is why we sometimes describe Flow as “a database turned inside out”: Flow hoists the logs up to be first-class citizens, rather than an implementation detail.

In Flow, tables are materialized into whichever other system works best for you to query. This is where it differs from many of the other tools that can turn logs into tables. Most other technologies that do this can only materialize logs into their specific table-like format, which is queryable only using their systems. Flow is intended to work with any database. Flow provides the real-time capabilities, and the database brings the query interface. This is why we say that Flow lets you turn any database into a real-time database.

So, if you’re building a data pipeline, I’d strongly encourage you to use logs as the system of record, and materialize into tables for efficient queries. And if you want a system that makes it easy to manage those pipelines, then I’d of course recommend Flow.

1: Technically, Flow also supports just exporting the logs as they are, without querying or reducing by key. This is still a very useful feature, but it’s also a lot less interesting, so I’ve chosen not to focus on it in this post.

Need a data ops platform and curious about what we discussed here? You can try for free here. We develop in the open; you can check out the code here.
