Businesses today require the fastest and most efficient methods of data delivery and processing. For most, finding a speedy, scalable way to keep data in sync between their operational systems and analytical models is a major challenge.

Because of this, data engineers are often looking for better ways to move data from relational databases like PostgreSQL to BigQuery.

Building such a pipeline takes careful planning, and not every IT team is well-versed in both platforms. That gap can lead to data pipeline failures, data loss, and wasted time and money. Given today’s fast-paced, data-heavy digital environments, it can be risky for businesses to attempt a Postgres to BigQuery data pipeline without the proper prerequisites or know-how.

This guide aims to address these problems with a simple, step-by-step method for migrating data from PostgreSQL to BigQuery.

We’ll get to the tutorial shortly. Let’s start by defining the two systems, and why it’s important to connect them.

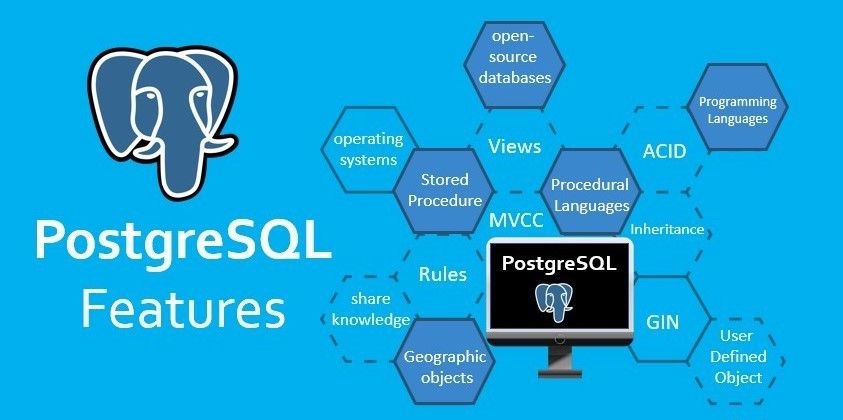

What is PostgreSQL?

PostgreSQL, also known as Postgres, is a powerful, open-source object-relational database management system (ORDBMS) with an emphasis on extensibility and SQL compliance. It was originally developed at the University of California, Berkeley, and has a long history of active development and community involvement.

One of the key features of Postgres is its support for multiple data types, including traditional scalar types such as integers and floating-point numbers, as well as more complex types such as arrays, JSON, and hstore (a key-value store). This lets developers store and manipulate data more flexibly than in many traditional relational databases.

Another powerful feature of Postgres is its support for stored procedures and functions written in a variety of languages, including SQL, PL/pgSQL, Python (through PL/Python), and C. This lets developers implement custom logic at the database level, which can make applications more powerful and efficient.

Because Postgres can handle large amounts of data quickly, it is a strong foundation for many applications that require fast data retrieval and processing. Examples include:

- eCommerce: Postgres can handle large amounts of transactional data, making it well suited for powering eCommerce platforms. It can sustain a high volume of read and write operations, and its support for data types such as JSON and hstore streamlines the handling of product information and other metadata.

- Content management systems: Postgres can handle large amounts of structured and unstructured data, making it well suited for powering content management systems. Its support for full-text search and other advanced search features makes it easy to retrieve and organize content.

While PostgreSQL is a powerful and flexible relational database management system, it is not specifically designed for aggregating and analyzing large amounts of data. Even though it does have built-in support for basic aggregation and analysis through SQL, it may not be as efficient or user-friendly as other specialized tools for handling big data.

However, Postgres is still a popular choice for many developers because of its open-source nature, which allows anyone to use, modify, and distribute the software for free. It is supported by a large and active community of users and developers, who contribute bug fixes, features, and documentation to the project.

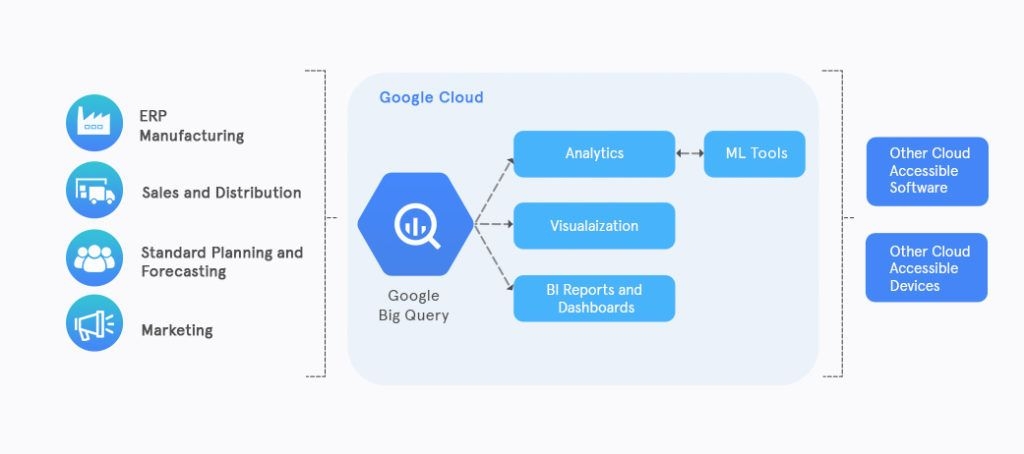

What is Google BigQuery?

Google BigQuery is a fully managed, cloud-native data warehousing platform that enables super-fast SQL queries using the processing power of Google’s infrastructure. It is part of Google Cloud Platform and is designed to handle extremely large and complex datasets with ease.

One of the main benefits of BigQuery is its ability to quickly analyze large amounts of data using SQL. This is made possible by its highly optimized, massively parallel query engine, which uses columnar storage and in-memory processing to return many queries in seconds, even on datasets of terabytes or more.

In addition to its speed, BigQuery is also highly scalable, allowing you to easily and seamlessly increase your data storage and processing capacity as your needs grow. This is a significant advantage over traditional on-premises data warehouses, which can be costly and time-consuming to scale.

BigQuery also includes several advanced security features to ensure the privacy and protection of your business data. These include:

- Network isolation

- Encryption at rest

- Integration with Cloud Identity and Access Management (IAM) for granular access control

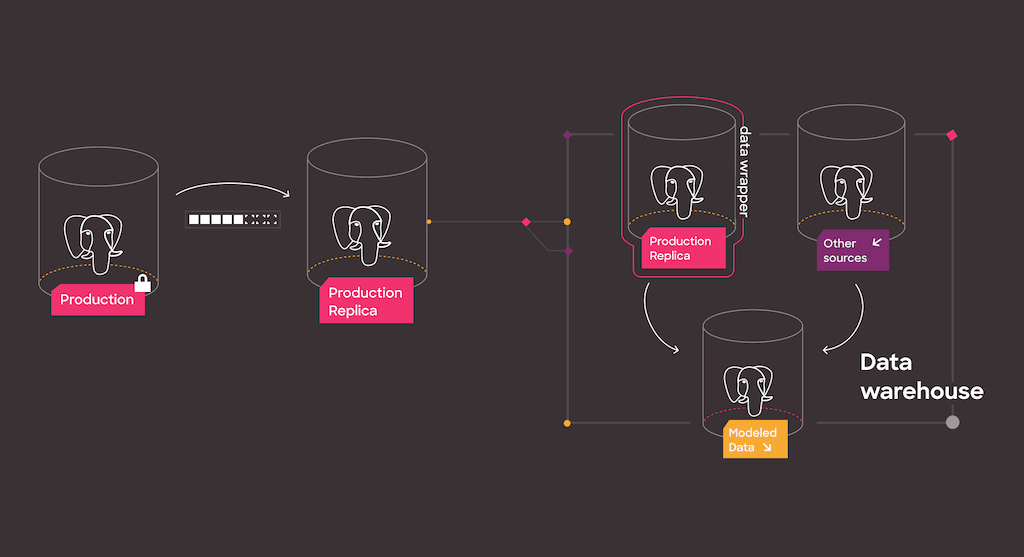

Does PostgreSQL Work As a Data Warehouse?

Yes, PostgreSQL can be used as a data warehouse, but doing so can be challenging because of certain limitations. One of the main difficulties is that data engineers have to build the data warehouse architecture from scratch, which involves creating multiple interlinked databases for storage and modeling.

This can lead to a complex and difficult-to-manage system, especially when dealing with large amounts of data. Additionally, Postgres does not have built-in support for advanced analytics and reporting, which can make it difficult to use for data warehousing purposes.

Another issue with using Postgres as a data warehouse is that it is not well-suited for handling large-scale data processing tasks. It does not have the same level of scalability and performance as more specialized data warehousing solutions like BigQuery, which is designed to handle petabyte-scale data with minimal latency.

BigQuery, on the other hand, is a cloud-based data warehouse built on top of Google’s massive infrastructure. It is designed to handle large-scale data processing tasks with ease, and it provides many features that make it well suited for data warehousing. Additionally, BigQuery is a fully managed service, which means that users do not have to worry about the data warehouse architecture or managing the underlying infrastructure.

In addition to its powerful data processing capabilities, one of the key advantages of BigQuery is its easy integration with a wide variety of analytical environments and business intelligence (BI) tools. This makes it easy for users to connect BigQuery to their existing systems and workflows, and to leverage the data stored in BigQuery to gain insights and make data-driven decisions.

One of the most popular analytical environments that BigQuery integrates with is Google’s own data visualization tool, Looker Studio (formerly Google Data Studio). With this integration, users can easily create interactive, visually appealing reports and dashboards based on their BigQuery data.

BigQuery also integrates with other Google Cloud services, such as Vertex AI (which succeeded AI Platform, formerly Cloud ML Engine), allowing users to build and deploy machine learning models on top of their BigQuery data. BigQuery also works with a wide variety of popular analytics and BI tools, such as dbt, Tableau, Looker, and Power BI.

Steps to Migrate Data From PostgreSQL to BigQuery

Now, let’s dive into how you can use Estuary Flow to sync data between PostgreSQL and BigQuery.

Estuary Flow is a real-time data integration platform that can be used to transfer data between different data stores. It is designed to be lightweight, flexible, and easy to use, making it a good choice for building pipelines between your operational and analytical data stores.

With Estuary Flow, you can migrate data from a variety of sources, including:

- Databases (e.g., MySQL, PostgreSQL) using real-time change data capture (CDC)

- Streaming platforms (e.g., Google Pub/Sub, Kafka)

- Cloud storage (e.g., Google Cloud Storage, Amazon S3)

- SaaS (e.g., Salesforce, Google Ads, HubSpot)

You can also migrate data to a variety of destinations, including databases, data warehouses, and other data stores.

Estuary Flow uses a configuration file to specify the source and destination of the data migration, as well as the mapping between the source and destination data stores. This allows you to customize the data migration process to fit your specific needs.

But there’s no need to configure all this by hand: the no-code web application lets you complete this workflow in your browser in a few clicks.

To demonstrate, here’s a step-by-step guide to migrating data from PostgreSQL to BigQuery in minutes.

Prerequisites

To complete this guide, you’ll need:

- An Estuary account. Go to the Flow web app at dashboard.estuary.dev to sign up for the free tier.

- A PostgreSQL database from which to capture data via change data capture (CDC).

- A BigQuery dataset (the BigQuery equivalent of a database).

Step 1: Set up PostgreSQL for Data Capture

Before you can capture data from your Postgres database for migration to BigQuery, the database must allow Flow to access it and must meet several requirements. Complete these tasks:

- Enable logical replication (`wal_level=logical`) to allow real-time change data capture.

- Create a user role named `flow_capture` with the `REPLICATION` attribute. Flow will use this role to capture the data.

- Create a publication listing all the tables in your database.

- Create a watermarks table to ensure accurate data backfill.
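For a self-hosted database, the four requirements above roughly translate into SQL like the following. This is a minimal Python sketch that only assembles the statements so you can review them before running them in psql as a superuser; it does not connect to anything. The publication name `flow_publication`, the watermarks table `public.flow_watermarks` and its columns, and the `pg_read_all_data` grant (a predefined role in PostgreSQL 14 and later) are assumptions, so check them against the Flow setup guide for your platform. Managed services such as Amazon RDS or Cloud SQL enable logical replication through their own parameter groups or database flags rather than `ALTER SYSTEM`.

```python
# A sketch that only builds SQL strings; it does not connect to a database.
# Assumed (not taken from the article): the publication name, the watermarks
# table name and columns, and the pg_read_all_data grant (PostgreSQL 14+).


def quote_ident(name: str) -> str:
    """Quote a SQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Quote a SQL string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_cdc_setup_sql(
    password: str,
    tables: list[str],
    user: str = "flow_capture",
    publication: str = "flow_publication",
    watermarks: str = "public.flow_watermarks",
) -> list[str]:
    """Return setup statements in an order that can be run top to bottom.

    `tables` and `watermarks` are schema-qualified names that are inserted
    unquoted, so they must come from a trusted source.
    """
    role = quote_ident(user)
    published = ", ".join([watermarks, *tables])
    return [
        # Requirement 1: logical replication (takes effect after a restart).
        "ALTER SYSTEM SET wal_level = logical;",
        # Requirement 2: a login role with the REPLICATION attribute.
        f"CREATE USER {role} WITH PASSWORD {quote_literal(password)} REPLICATION;",
        f"GRANT pg_read_all_data TO {role};",
        # Requirement 4: the watermarks table used during backfills.
        f"CREATE TABLE IF NOT EXISTS {watermarks} (slot TEXT PRIMARY KEY, watermark TEXT);",
        f"GRANT ALL PRIVILEGES ON TABLE {watermarks} TO {role};",
        # Requirement 3: a publication covering the captured tables, created
        # last so that the watermarks table already exists.
        f"CREATE PUBLICATION {quote_ident(publication)} FOR TABLE {published};",
    ]


if __name__ == "__main__":
    for statement in build_cdc_setup_sql("change-me", ["public.orders", "public.customers"]):
        print(statement)
```

Listing tables explicitly keeps the publication limited to what you intend to sync; the article’s “all the tables” case could instead use `FOR ALL TABLES`, which requires superuser privileges.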

The Flow documentation provides straightforward setup guides for meeting these requirements on each hosting platform:

- For on-premises PostgreSQL

- For Amazon RDS PostgreSQL

- For Amazon Aurora PostgreSQL

- For Google Cloud SQL PostgreSQL

- For Azure Database for PostgreSQL


Step 2: Prepare BigQuery for Data Materialization

Estuary Flow must be able to access your BigQuery dataset. To set this up:

- Log in to the Google Cloud console and create a new service account as described in the Google documentation. While you do so:

- Grant the account access to the Google Cloud project that includes your BigQuery data warehouse.

- Grant these roles to the service account: `roles/bigquery.dataEditor`, `roles/bigquery.jobUser`, and `roles/storage.objectAdmin`.

- Click the name of the new service account in your list of service accounts. Click the Keys tab, then Add key, and create and download a JSON key. You’ll use this key later.

- Finally, create a Google Cloud Storage bucket. Flow uses this as a staging area. Make sure the bucket is in the same Google Cloud region as your BigQuery warehouse.
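If you prefer the command line to the console, the role grants in this step can also be made with `gcloud projects add-iam-policy-binding`. The sketch below (Python 3.9+) sanity-checks the downloaded JSON key, which for a service account contains `type`, `project_id`, `client_email`, and `private_key` fields, and prints one grant command per role. It does not call Google Cloud itself, and the project ID, email, and key contents in the demo are placeholders.

```python
import json
import shlex

# The three roles named in this step.
REQUIRED_ROLES = (
    "roles/bigquery.dataEditor",
    "roles/bigquery.jobUser",
    "roles/storage.objectAdmin",
)


def load_service_account_key(raw: str) -> dict:
    """Parse a downloaded JSON key and check the fields a client relies on."""
    key = json.loads(raw)
    if not isinstance(key, dict) or key.get("type") != "service_account":
        raise ValueError("not a service account key")
    for field in ("project_id", "client_email", "private_key"):
        if not key.get(field):
            raise ValueError(f"key is missing {field!r}")
    return key


def role_binding_commands(project_id: str, sa_email: str) -> list[str]:
    """Build one gcloud command per role, granting it on the whole project."""
    return [
        shlex.join([
            "gcloud", "projects", "add-iam-policy-binding", project_id,
            f"--member=serviceAccount:{sa_email}",
            f"--role={role}",
        ])
        for role in REQUIRED_ROLES
    ]


if __name__ == "__main__":
    # Placeholder key contents; a real key file has more fields.
    raw_key = json.dumps({
        "type": "service_account",
        "project_id": "my-project",
        "client_email": "flow-sa@my-project.iam.gserviceaccount.com",
        "private_key": "placeholder",
    })
    key = load_service_account_key(raw_key)
    for command in role_binding_commands(key["project_id"], key["client_email"]):
        print(command)
```

The project passed to `role_binding_commands` should be the one that holds your BigQuery warehouse, which is not necessarily the project that owns the service account.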

Step 3: Capture From PostgreSQL

Now you’re ready to set up your CDC pipeline from PostgreSQL. Flow will detect all the available tables in your database and back them up in cloud storage as collections (next, you’ll push, or materialize, these data collections to BigQuery).

- Go to the Captures page of the Flow web application, signing in if prompted.

- Click New Capture, and choose the PostgreSQL connector.

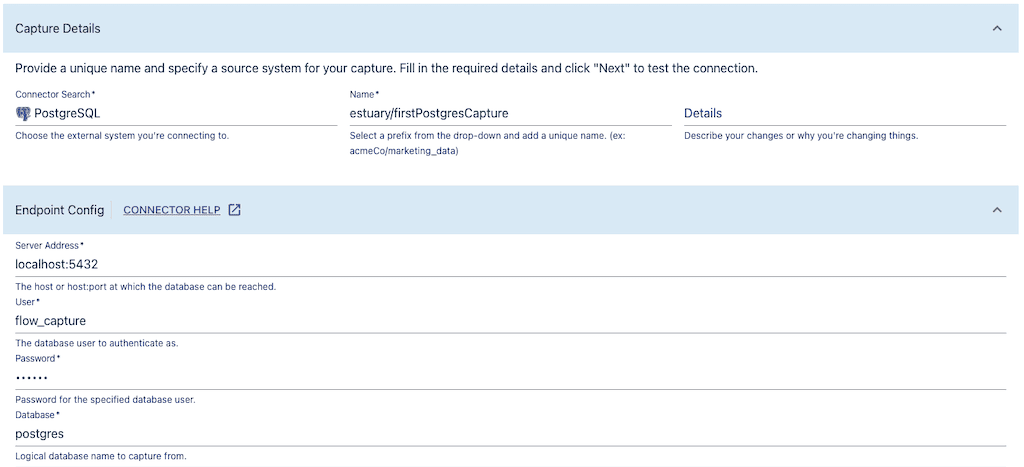

A form appears with the properties required for PostgreSQL.

- Type a name for your capture.

This name must be unique and begin with your Estuary prefix.

Click inside the Name box and select your prefix (usually only one is available to you). Then type a unique name for your capture after the slash. You’ll end up with a name like myCompany/firstPostgresCapture.

- In the Endpoint Config section, fill out the required properties. You should have these on hand from when you set up your database.

- Server address in the format host:port.

- Database user (this should be flow_capture).

- The password for the user.

- Database name (you’ll almost always leave the default, postgres).

- Click Next.
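A few of these values are easy to mistype. As a hypothetical pre-flight check, the sketch below validates the capture name against your prefix and splits the server address into host and port. The `PostgresEndpoint` class and its field names are illustrative stand-ins for the form fields, not Flow’s configuration schema, and the checks only catch formatting mistakes; Flow’s own connection test is what confirms the database is reachable.

```python
from dataclasses import dataclass


@dataclass
class PostgresEndpoint:
    """Hypothetical stand-in for the Endpoint Config form fields."""
    address: str                # "host:port"
    password: str
    user: str = "flow_capture"
    database: str = "postgres"  # the default the form suggests


def check_capture_name(name: str, prefix: str) -> None:
    """Require '<prefix>/<something>', e.g. myCompany/firstPostgresCapture."""
    prefix = prefix.rstrip("/") + "/"
    if not name.startswith(prefix) or len(name) == len(prefix):
        raise ValueError(f"capture name must look like {prefix}<name>")


def split_address(address: str) -> tuple[str, int]:
    """Split 'host:port' at the last colon and validate the port."""
    host, sep, port = address.rpartition(":")
    if not sep or not host or not port.isdecimal():
        raise ValueError("address must be in the form host:port")
    port_number = int(port)
    if not 0 < port_number < 65536:
        raise ValueError(f"port {port_number} is out of range")
    return host, port_number


def check_endpoint(endpoint: PostgresEndpoint) -> None:
    """Raise ValueError for the mistakes that are easy to catch locally."""
    split_address(endpoint.address)
    if not endpoint.user:
        raise ValueError("user is required")
    if not endpoint.password:
        raise ValueError("password is required")


if __name__ == "__main__":
    check_capture_name("myCompany/firstPostgresCapture", "myCompany")
    check_endpoint(PostgresEndpoint(address="db.example.com:5432", password="change-me"))
    print(split_address("db.example.com:5432"))
```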

Flow tests the connection to your database and creates a list of all the tables that it can capture into Flow collections. It displays these in the Collection Selector.

- Look over the listed collections. You can remove any that you don’t want to sync to BigQuery.

- If you made changes, click Next again.

- Click Save and Publish to finalize the data capture. You'll see a notification when this completes successfully.

- Click the Materialize Collections button to move on to the next phase.

Step 4: Materialize to BigQuery

You've captured tables from PostgreSQL into Flow collections. Flow has backfilled all the data in those tables, and is continuing to actively ingest new change events.

The next step is to connect those collections to new tables in your BigQuery dataset.

- Choose the BigQuery tile.

The page populates with properties specific to BigQuery.

- Create a unique name for the materialization, as you did earlier; for example, myCompany/firstBQMaterialize.

- In the Endpoint Config, fill out the required properties.

- The Google Cloud project ID that includes the BigQuery warehouse and Google Cloud Storage bucket. Here’s how to find it.

- The Service Account JSON key you downloaded earlier.

- The Google Cloud region where the BigQuery warehouse and Google Cloud Storage bucket are located.

- Name of the dataset in BigQuery where you want to create the tables.

- Name of the bucket you created earlier.

- Click Next. Flow checks the connection to BigQuery, and the Collection Selector opens up. The details of the collections you just captured from Postgres are already filled in. Each will become a new table in BigQuery.

- Click Save and Publish to complete your Data Flow. You'll see a notification when it’s successful.
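If the connection check after clicking Next fails, a local sanity check of the endpoint values can help narrow down the cause. The hypothetical sketch below checks that the dataset ID uses only letters, digits, and underscores; that the bucket name follows a simplified version of the Cloud Storage naming rules; that the bucket’s location matches the region you entered (as Step 2 requires); and that the credentials parse as a service account key. The `BigQueryEndpoint` field names are illustrative, not the connector’s schema, and the region comparison is a plain string match, so it treats a multi-region such as `US` as different from any single region inside it.

```python
import json
import re
from dataclasses import dataclass

# BigQuery dataset IDs: letters, digits, and underscores, up to 1,024 characters.
DATASET_ID = re.compile(r"[A-Za-z0-9_]{1,1024}")
# Simplified Cloud Storage rule: 3-63 chars of a-z, 0-9, '-', '_', '.',
# starting and ending with a letter or digit (longer dotted names are ignored).
BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9_.-]{1,61}[a-z0-9]")


@dataclass
class BigQueryEndpoint:
    """Hypothetical stand-in for the BigQuery Endpoint Config form fields."""
    project_id: str
    credentials_json: str  # contents of the JSON key from Step 2
    region: str            # region entered in the form, e.g. "us-central1"
    dataset: str
    bucket: str
    bucket_location: str   # where the bucket actually lives


def check_bigquery_endpoint(endpoint: BigQueryEndpoint) -> list[str]:
    """Return a list of problems; an empty list means nothing was caught."""
    problems = []
    if not endpoint.project_id:
        problems.append("project ID is required")
    if not DATASET_ID.fullmatch(endpoint.dataset):
        problems.append("dataset ID may only use letters, digits, and underscores")
    if not BUCKET_NAME.fullmatch(endpoint.bucket):
        problems.append("bucket name does not follow Cloud Storage naming rules")
    # Plain case-insensitive match; multi-regions such as "US" are not
    # treated as containing their member regions.
    if endpoint.region.lower() != endpoint.bucket_location.lower():
        problems.append("bucket and BigQuery dataset are in different locations")
    try:
        key = json.loads(endpoint.credentials_json)
    except json.JSONDecodeError:
        problems.append("credentials are not valid JSON")
    else:
        if not isinstance(key, dict) or key.get("type") != "service_account":
            problems.append("credentials are not a service account key")
    return problems


if __name__ == "__main__":
    endpoint = BigQueryEndpoint(
        project_id="my-project",
        credentials_json='{"type": "service_account"}',
        region="us-central1",
        dataset="analytics_raw",
        bucket="flow-staging-bucket",
        bucket_location="US-CENTRAL1",
    )
    print(check_bigquery_endpoint(endpoint) or "no problems found")
```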

Flow copies all the data it captured from your PostgreSQL tables into new tables in BigQuery. Whenever changes happen in the Postgres source tables, the changes will be copied over to your BigQuery dataset without delay.
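Conceptually, keeping a destination table in sync means applying the backfilled rows and then each change event, in commit order, keyed by primary key: inserts and updates overwrite the row, and deletes remove it. The sketch below models that with an in-memory dictionary. It is not how Flow or BigQuery implement the merge, and the event shape (an `op` of `c`, `u`, or `d` plus a `row`) and the `id` key column are assumptions chosen for illustration.

```python
from typing import Any

Row = dict[str, Any]


def apply_change_events(table: dict[Any, Row], events: list[dict], key: str = "id") -> None:
    """Apply change events, in commit order, to an in-memory table.

    Each event is {"op": "c" | "u" | "d", "row": {...}}. Creates and
    updates overwrite the whole row for its primary key; deletes remove it.
    """
    for event in events:
        row = event["row"]
        if event["op"] == "d":
            table.pop(row[key], None)  # deleting a missing row is a no-op
        elif event["op"] in ("c", "u"):
            table[row[key]] = dict(row)  # upsert a copy of the row
        else:
            raise ValueError(f"unknown op {event['op']!r}")


if __name__ == "__main__":
    orders: dict[Any, Row] = {}
    # Backfill: rows that already existed arrive as creates.
    apply_change_events(orders, [
        {"op": "c", "row": {"id": 1, "status": "new"}},
        {"op": "c", "row": {"id": 2, "status": "new"}},
    ])
    # Ongoing changes captured from the write-ahead log.
    apply_change_events(orders, [
        {"op": "u", "row": {"id": 1, "status": "shipped"}},
        {"op": "d", "row": {"id": 2}},
    ])
    print(orders)  # {1: {'id': 1, 'status': 'shipped'}}
```

Because each event replaces the whole row for its key, applying the same sequence of events again leaves the table unchanged.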

For more information, see:

- Guide to creating any Data Flow with Estuary.

- Details on the Estuary Flow PostgreSQL CDC connector.

- Details on the Estuary Flow BigQuery materialization connector.

Streamline data sync with Estuary Flow’s no-code, real-time pipeline from PostgreSQL to BigQuery. Start your free trial or contact us to learn more about our seamless integration solutions.

The Vital Benefits of Using Estuary

As a data integration platform, Estuary Flow has many benefits for migrating data from PostgreSQL to BigQuery. The biggest is fast implementation: setting up a pipeline is a short process. Plus, Estuary Flow automates the entire process as you sync your data from PostgreSQL to BigQuery.

Estuary Flow can also manage its schema registry automatically and can correct improper schemas after data is loaded. Because Estuary helps map the source schema to the destination, users are far less likely to run into schema errors. Users also get real-time, log-based replication from supported source databases.

Finally, Estuary offers a path to build a real-time streaming pipeline with a fraction of the effort we’ve grown used to.

Both the change data capture connector for PostgreSQL and Flow’s central runtime are truly event-driven. But thanks to the easy-to-use web app, streaming pipelines aren’t just for an elite group of experienced data engineers (and even for those engineers, it’s a much simpler process).

This puts a real-time, Postgres to BigQuery data pipeline at the fingertips of the whole team.

Conclusion

Syncing data between PostgreSQL and BigQuery is often a tricky process, especially if the pipeline must move data in real time. Without dedicated time from a highly technical team, most businesses can find the process difficult to follow.

Fortunately, today’s data integration platforms make real-time pipelines a breeze compared to building one from scratch, and the Estuary Flow platform is perhaps the best example of this in action. With a straightforward process built into the platform, you can construct a data pipeline from your database management system to the data warehouse of your choice.

If you're interested in the product or want more information, start your free trial or get in touch with our sales team today.
