Both PostgreSQL and MySQL are widely used relational database management systems (RDBMS) known for their robust features and versatility. While they share many similarities, they also have distinct strengths and use cases.

MySQL’s strengths lie in its replication, scalability, and transactional support, which make it suitable for a wide range of applications. It’s especially popular for e-commerce and website development, where its performance is a notable advantage.

Whether you’re seeking to harness MySQL’s performance advantages or align with MySQL-compatible tools, understanding the intricacies of data migration is important. This article explores two popular ways to migrate data from Postgres to MySQL.

Let’s begin!

What Is Postgres? An Overview

PostgreSQL, often referred to as Postgres, is an open-source RDBMS that offers a flexible solution for data storage and management. Backed by a large and active community of developers, users, and organizations, Postgres continually evolves to meet diverse data needs.

In addition to handling advanced data types, Postgres supports JSON and JSONB (binary JSON) data types. This makes Postgres a versatile database system capable of handling both structured and semi-structured data.

What Is MySQL? An Overview

MySQL is a widely used open-source RDBMS that allows you to efficiently store, manage, and retrieve small-to-large data volumes. Data in MySQL is organized in a traditional structured SQL schema of tables and relationships, although MySQL also has native support for JSON. This structured approach enables efficient data manipulation and maintenance while ensuring data integrity.

The power of MySQL further extends with its query optimization, indexing, and caching capabilities, which help the database respond quickly to queries. These features make MySQL an ideal choice for applications where rapid data access is crucial, such as web applications, content management systems, and data-driven platforms.

2 Easy Methods to Connect Postgres to MySQL

Method #1: Using SaaS Tools

Method #2: Using CSV Files

Method #1: Postgres to MySQL Data Migration Using SaaS Tools

Software-as-a-service (SaaS) data integration tools let you quickly build and streamline data pipelines with a wide range of prebuilt connectors and low-code or no-code integration.

Since they’re managed by the vendor, SaaS tools deliver a hassle-free experience; things like infrastructure maintenance, connector updates, and security requirements are all handled by the service provider. As a result, you’re able to focus on core analysis tasks without worrying about the technical aspects of the software.

For example, Estuary Flow is a SaaS tool that offers a no-code approach, thanks in part to its extensive set of pre-built connectors. These connectors let you maintain a continuous, consistent flow of data between PostgreSQL, MySQL, and the Flow platform.

With that in mind, let’s take a look at the steps you need to follow to connect PostgreSQL to MySQL using Flow.

But before we dive in, take care of the prerequisites:

Step 1: Connect to PostgreSQL Source

- Register a new free Estuary account or log in to your existing one.

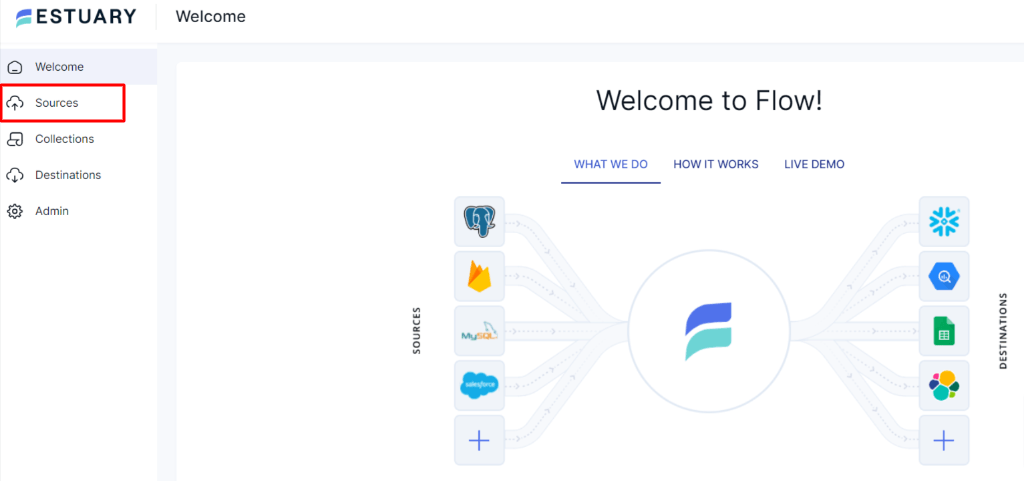

- After a successful login, you’ll see the Estuary dashboard. Click Sources.

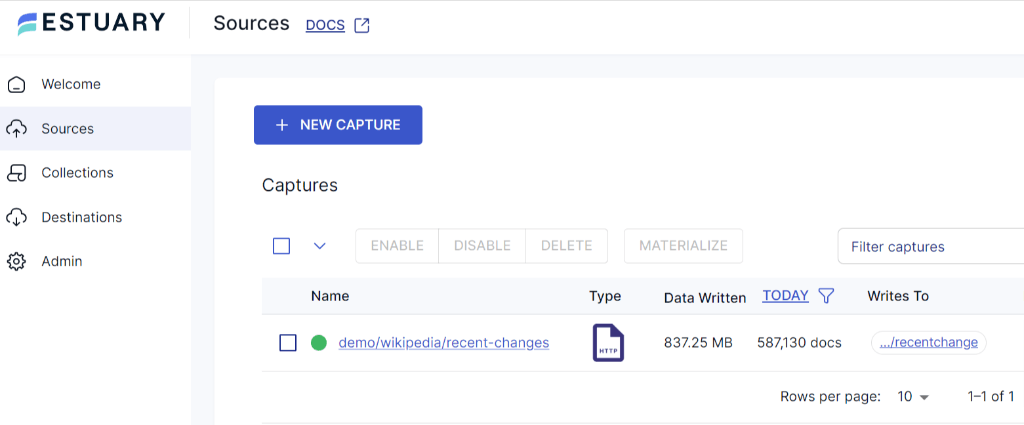

- On the Sources page, click the + NEW CAPTURE button.

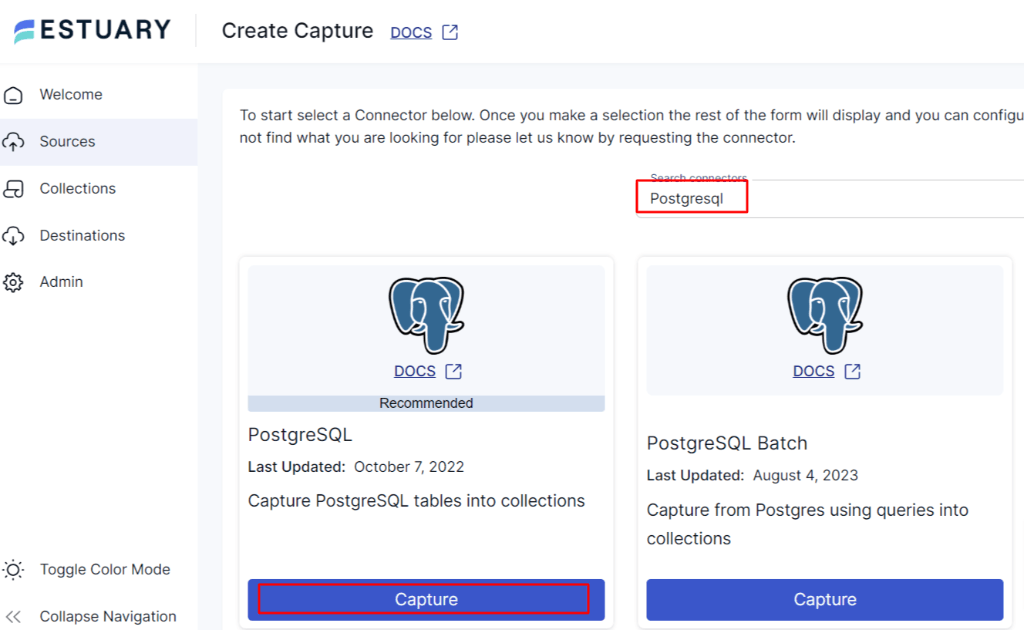

- You’ll be directed to the Create Capture page. Use the Search Connectors box to find the Postgres connector, and then click the Capture button.

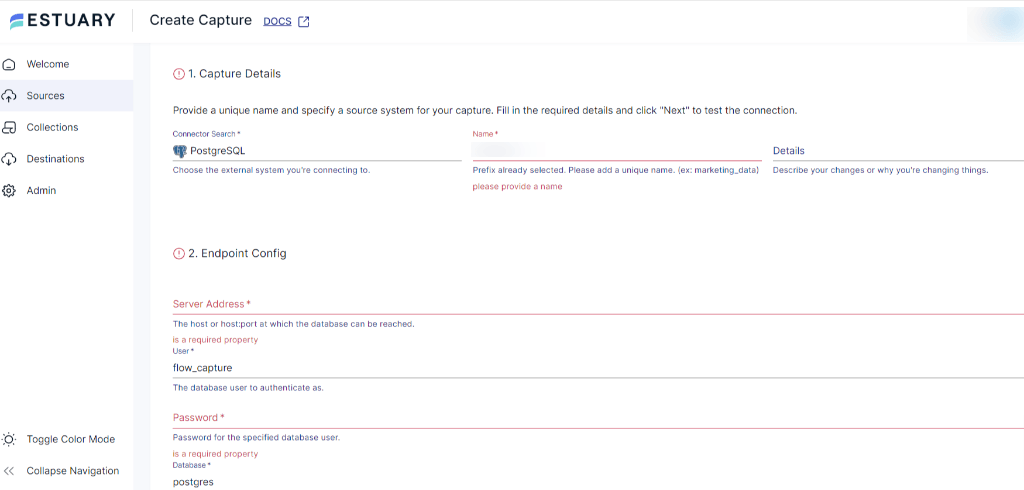

- Provide a unique Name in the Capture Details section. Add the Server Address and Password for the specified database in the Endpoint Config section.

- Click NEXT, followed by SAVE AND PUBLISH.

Step 2: Connect to MySQL Destination

- Go back to the Estuary Dashboard and click Destinations > + NEW MATERIALIZATION.

- Search for MySQL in the Search Connectors box and click the Materialization button.

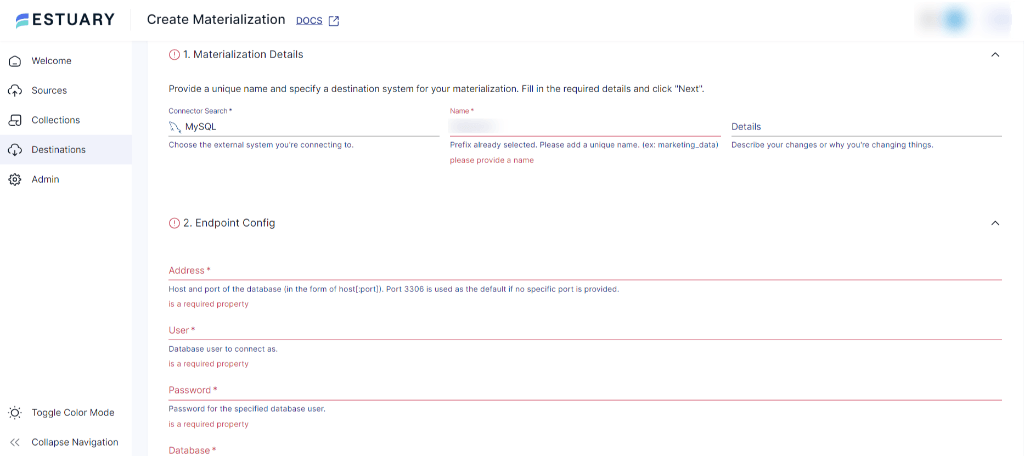

- On the Create Materialization page, provide a unique Name in the Materialization Details section. Specify the database host and port, the database User and Password, and the name of the logical Database where you want to materialize the PostgreSQL data.

- If the collections captured from PostgreSQL haven’t been filled in automatically, you can add them manually in the Source Collections section.

- Once you have filled in all the essential information, click NEXT, followed by SAVE AND PUBLISH.

That’s it! You’ve created a Postgres to MySQL data pipeline in just two simple steps using Estuary Flow.

For a better understanding of the detailed process of this flow and the connectors, check out the following links:

Method #2: Export Data from Postgres to MySQL Using CSV Files

Don’t feel like using SaaS tools to speed up the migration process? No problem. You can load data from PostgreSQL to MySQL manually using CSV files. Just follow these steps.

Prerequisites:

- Access to PostgreSQL and MySQL databases

- PostgreSQL and MySQL clients

Step 1: Export Data from PostgreSQL

- Connect to your PostgreSQL database using a command-line client such as psql.

- Use the COPY TO command in PostgreSQL to export data from a table to a CSV file. Here’s how:

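```sql
COPY table_name TO '/path_to_outputfile.csv' WITH CSV HEADER;
```

table_name is the name of the PostgreSQL table you want to export data from.

'/path_to_outputfile.csv' is the name and path where the CSV file will be saved.

WITH CSV specifies that the output format should be CSV.

HEADER indicates that the first row of the CSV file should contain the column names.

Because COPY ... TO writes the file on the database server, it requires superuser privileges or membership in the pg_write_server_files role. If you’re working from a client machine, psql’s \copy meta-command accepts the same options and writes the file locally instead.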

- Repeat this process for each PostgreSQL table you want to export, writing each table to its own CSV file.
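If you have many tables, you can generate these commands instead of typing each one. The Python sketch below writes a psql script with one \copy line per table. It assumes you run psql from a client machine (\copy writes the files there, so no server file access is needed) and that table names are unqualified and lowercase; the build_export_script helper, the table names, and the output directory are hypothetical.

```python
from pathlib import PurePosixPath


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def build_export_script(tables: list[str], out_dir: str) -> str:
    """Return a psql script with one client-side \\copy command per table.

    Each table is written to <out_dir>/<table>.csv with a header row.
    """
    lines = []
    for table in tables:
        path = str(PurePosixPath(out_dir) / f"{table}.csv")
        # psql reads single-quoted arguments; embedded quotes are doubled.
        quoted_path = "'" + path.replace("'", "''") + "'"
        # \copy must fit on one line and accepts the same options as COPY.
        lines.append(
            f"\\copy {quote_ident(table)} TO {quoted_path} "
            "WITH (FORMAT csv, HEADER)"
        )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical table names and output directory.
    print(build_export_script(["customers", "orders"], "/tmp/pg_export"), end="")
```

Save the printed script to a file and run it with psql -f against your database.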

Step 2: Transform CSV Files

This is one of the most important steps when manually migrating data from PostgreSQL to MySQL.

If you go the manual route, you’ll need to perform various data manipulations to ensure the CSV format aligns with the requirements of the MySQL database. Some of the tasks involved in transforming CSV files include:

- Data mapping. Ensure that the columns in your CSV file match the columns of your MySQL database table.

- Data cleansing. Clean the data in the CSV file to remove any duplicates, inconsistencies, or erroneous values.

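- Data type conversion. Check whether each PostgreSQL data type is supported by MySQL, and convert the values where it isn’t. For example, PostgreSQL exports booleans as t and f, while MySQL stores BOOLEAN as TINYINT(1) with the values 1 and 0.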

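- Null values. Decide how null values appear in the CSV files and how they should be represented in the MySQL table. By default, PostgreSQL’s CSV output writes NULL as an unquoted empty field, whereas MySQL’s LOAD DATA reads \N, not an empty field, as NULL.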
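To make the data type check more concrete, here is a Python sketch that maps a handful of common PostgreSQL column types to MySQL equivalents and builds a CREATE TABLE statement from them. The mapping is a partial, opinionated assumption (for instance, text becomes LONGTEXT because MySQL’s TEXT holds at most 64 KB, and uuid becomes CHAR(36)); it ignores keys, defaults, and constraints, and raises an error for anything it doesn’t recognize so you review those columns by hand. The function names and example columns are hypothetical; the type strings are written the way psql’s \d output shows them.

```python
import re

# Partial, assumed mapping of unparameterized PostgreSQL types to MySQL types.
SIMPLE_TYPES = {
    "smallint": "SMALLINT",
    "integer": "INT",
    "bigint": "BIGINT",
    "boolean": "TINYINT(1)",
    "real": "FLOAT",
    "double precision": "DOUBLE",
    "text": "LONGTEXT",  # MySQL TEXT tops out at 64 KB
    "date": "DATE",
    "timestamp without time zone": "DATETIME(6)",
    # Assumes offsets are normalized to UTC during transformation.
    "timestamp with time zone": "DATETIME(6)",
    "json": "JSON",
    "jsonb": "JSON",
    "uuid": "CHAR(36)",
    "bytea": "LONGBLOB",
}

# Types whose length/precision arguments carry over unchanged.
PARAM_TYPES = {
    "character varying": "VARCHAR",
    "varchar": "VARCHAR",
    "character": "CHAR",
    "numeric": "DECIMAL",
}

_TYPE_RE = re.compile(r"^\s*([a-z ]+?)\s*(\(\s*\d+\s*(?:,\s*\d+\s*)?\))?\s*$")


def map_type(pg_type: str) -> str:
    """Translate one PostgreSQL type name into a MySQL type name."""
    match = _TYPE_RE.match(pg_type.lower())
    if not match:
        raise ValueError(f"unrecognized type: {pg_type!r}")
    base, args = match.group(1), match.group(2)
    if base in PARAM_TYPES:
        if not args:
            # Unbounded varchar/numeric have no exact MySQL equivalent.
            raise ValueError(f"{pg_type!r} needs an explicit length or precision")
        return PARAM_TYPES[base] + args.replace(" ", "")
    if base in SIMPLE_TYPES and not args:
        return SIMPLE_TYPES[base]
    raise ValueError(f"no MySQL mapping for {pg_type!r}")


def quote_mysql_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def build_create_table(table: str, columns: list[tuple[str, str]]) -> str:
    """Build a MySQL CREATE TABLE statement from (column, PostgreSQL type) pairs."""
    col_defs = ",\n".join(
        f"  {quote_mysql_ident(name)} {map_type(pg_type)}" for name, pg_type in columns
    )
    return f"CREATE TABLE {quote_mysql_ident(table)} (\n{col_defs}\n);"


if __name__ == "__main__":
    # Hypothetical columns, typed as psql's \d would display them.
    print(build_create_table("orders", [
        ("id", "bigint"),
        ("total", "numeric(10,2)"),
        ("paid", "boolean"),
        ("details", "jsonb"),
    ]))
```

Raising on unknown types is deliberate: a silent fallback such as TEXT could hide a lossy conversion.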

Depending on the complexity of your data, you might need additional transformation steps, so plan the transformation phase carefully.
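Several of these transformation tasks can be scripted with Python’s standard csv module. The sketch below selects and renames columns (data mapping), drops exact duplicate rows (cleansing), converts PostgreSQL’s t/f booleans to 1/0, and writes \N, which MySQL’s LOAD DATA reads as NULL. It assumes that every empty field in the export is a NULL (PostgreSQL’s default in CSV mode, which means genuine empty strings can’t be told apart), that boolean columns contain only t or f, and that LOAD DATA keeps its default backslash escape character; transform_csv and the column names are hypothetical.

```python
import csv
import io
from typing import Iterable, TextIO

# LOAD DATA reads \N as NULL when ESCAPED BY is left at its default ('\\').
NULL_MARKER = "\\N"


def convert_value(value: str, is_bool: bool) -> str:
    """Convert one field from a PostgreSQL CSV export for MySQL's LOAD DATA."""
    if value == "":
        # Assumption: empty fields are NULLs (PostgreSQL's default in CSV mode).
        return NULL_MARKER
    if is_bool:
        # PostgreSQL exports booleans as t/f; MySQL's BOOLEAN is TINYINT(1).
        return {"t": "1", "f": "0"}[value]
    # LOAD DATA treats backslash as an escape character by default,
    # so double it to keep literal backslashes intact.
    return value.replace("\\", "\\\\")


def transform_csv(src: TextIO, dst: TextIO, column_map: dict[str, str],
                  bool_columns: Iterable[str] = ()) -> int:
    """Select/rename columns, drop duplicate rows, and convert values.

    column_map maps PostgreSQL column names to MySQL column names, in the
    order they should appear; other columns are dropped. Returns the number
    of data rows written.
    """
    bools = set(bool_columns)
    reader = csv.DictReader(src)
    # "\n" matches LINES TERMINATED BY '\n' (csv.writer defaults to "\r\n").
    writer = csv.writer(dst, lineterminator="\n")
    writer.writerow(column_map.values())  # header, skipped by IGNORE 1 LINES
    seen = set()
    written = 0
    for row in reader:
        out = tuple(convert_value(row[col], col in bools) for col in column_map)
        if out in seen:  # data cleansing: skip exact duplicate rows
            continue
        seen.add(out)
        writer.writerow(out)
        written += 1
    return written


if __name__ == "__main__":
    # Hypothetical export with a duplicate row and a NULL note.
    src = io.StringIO("id,active,note\n1,t,hello\n1,t,hello\n2,f,\n")
    dst = io.StringIO()
    transform_csv(src, dst, {"id": "id", "active": "is_active", "note": "note"},
                  bool_columns=["active"])
    print(dst.getvalue(), end="")
```

For real files, open both with newline="" as the csv module documentation recommends. The deduplication set keeps every row in memory, which is acceptable for the smaller datasets this method suits.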

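Once all of your data is cleaned, move the CSV files to a location the MySQL server can read. If the server’s secure_file_priv system variable is set, LOAD DATA INFILE can read only from the directory it names.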

Step 3: Import Data into the MySQL Database

- To move CSV files into MySQL tables, open the MySQL client and connect to your MySQL database.

- Create a table in MySQL that matches the structure of your Postgres table.

- Use the LOAD DATA INFILE statement to load the CSV files into the MySQL table.

- If the CSV files are on the MySQL server, use the following command:

```sql
LOAD DATA INFILE '/path/to/csv/file.csv'
INTO TABLE table_name
FIELDS TERMINATED BY ',' ENCLOSED BY '"'
LINES TERMINATED BY '\n'
IGNORE 1 LINES;
```

- If the CSV files are on the local or client machine, use the following command:

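```sql
LOAD DATA LOCAL INFILE '/path/to/csv/file.csv'
INTO TABLE table_name
FIELDS TERMINATED BY ',' ENCLOSED BY '"'
LINES TERMINATED BY '\n'
IGNORE 1 LINES;
```

LOAD DATA LOCAL works only when local data loading is enabled on both sides: the local_infile system variable on the server and the --local-infile option on the mysql client.

Replace '/path/to/csv/file.csv' with the name and path of your CSV file, and table_name with the name of the MySQL table you want to load the data into.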

FIELDS TERMINATED BY ',' states that the fields in the CSV file are separated by commas. Adjust the delimiter to match your CSV file.

ENCLOSED BY '"' indicates that field values may be enclosed in double quotation marks. Keep this clause for files exported from PostgreSQL, which quotes any value containing a comma, a double quote, or a line break.

IGNORE 1 LINES skips the first row of the CSV file, which holds the column headers. Use this clause whenever your file starts with a header row.

- Repeat this process for each CSV file and corresponding MySQL table.
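When the column order in a CSV file differs from the MySQL table, LOAD DATA accepts an explicit column list after the IGNORE clause. The Python sketch below reads a CSV header and builds such a statement, one per file. It assumes the header names already match the MySQL column names and that the path uses forward slashes (which MySQL accepts on Windows too); read_header, build_load_statement, the path, and the table name are hypothetical.

```python
import csv
import io
from typing import TextIO


def quote_mysql_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def read_header(src: TextIO) -> list[str]:
    """Return the column names from the first row of a CSV file."""
    return next(csv.reader(src))


def build_load_statement(csv_path: str, table: str, columns: list[str],
                         local: bool = True) -> str:
    """Build a LOAD DATA statement that maps CSV columns by name."""
    keyword = "LOAD DATA LOCAL INFILE" if local else "LOAD DATA INFILE"
    # Double any single quotes so the path is a valid SQL string literal.
    quoted_path = "'" + csv_path.replace("'", "''") + "'"
    column_list = ", ".join(quote_mysql_ident(c) for c in columns)
    return "\n".join([
        f"{keyword} {quoted_path}",
        f"INTO TABLE {quote_mysql_ident(table)}",
        "FIELDS TERMINATED BY ',' ENCLOSED BY '\"'",
        "LINES TERMINATED BY '\\n'",
        "IGNORE 1 LINES",
        f"({column_list});",
    ])


if __name__ == "__main__":
    # Hypothetical CSV contents, path, and table name.
    header = read_header(io.StringIO("id,is_paid,note\n1,1,hello\n"))
    print(build_load_statement("/tmp/orders_mysql.csv", "orders", header))
```

Naming the columns explicitly means a reordered or partial CSV file loads into the right columns instead of shifting values.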

These three steps complete your Postgres to MySQL data migration.

While the above method using CSV files seems straightforward, it does have some drawbacks:

- Manual work: Exporting PostgreSQL data to CSV, cleaning it, and importing it into MySQL requires manual attention at each step. This approach works for smaller datasets, but for large volumes of data it becomes challenging, since you need to repeat every step for each table. This can consume a lot of time and resources, potentially leading to extended downtime.

- Lack of real-time updates: Each CSV export is a one-time snapshot, so changes made in PostgreSQL after the export aren’t carried over. To keep MySQL current, you’d have to rerun the entire ETL (extract, transform, load) process repeatedly, which makes it hard to get timely insights.

What Makes Estuary Flow a Preferred Choice for Postgres to MySQL Data Replication?

Estuary Flow is one of the most popular tools for transferring data between PostgreSQL and MySQL in real time. Here’s why:

- Workflow orchestration. You can define and orchestrate data integration workflows using Flow’s intuitive user interface. This makes it easier to design and manage data pipelines.

- Real-time data processing. Estuary Flow uses change data capture (CDC), which lets it detect and replicate modified data automatically. Because only changes are transferred, this reduces the data transfer volume and keeps your MySQL database continuously up to date.

- Scalability. Flow is designed to scale to accommodate growing data volumes, supporting data flow up to 7GB/s. This ability to handle large data volumes enables you to maintain consistency and efficiency as data loads get bigger.

Conclusion

By combining the features of Postgres with MySQL, you can obtain comprehensive insights into business operations and make powerful, data-driven decisions to drive business growth.

You now know two different ways to transfer data from Postgres to MySQL:

- The CSV-based approach is straightforward but lacks real-time updates and requires manual intervention at each step.

- On the other hand, SaaS tools like Estuary Flow can automate the entire process, eliminating the need for manual intervention. They also provide real-time data replication, ensuring reliable data transfer from PostgreSQL to MySQL, particularly for large-scale and ongoing data migrations.

Whether you opt for a CSV-based approach or a SaaS tool like Estuary Flow, you can establish a solid connection between the two databases. The choice comes down to how much time you’re willing to spend on the migration.

Seamlessly connect PostgreSQL to MySQL using Estuary Flow and automate your data pipeline in just two steps. Try Estuary for free today.
