Having your data where and when you need it is a game changer, especially when you need it to make decisions quickly. The type of data storage you have configured for your organization determines how and what your data can be used for. Organizational data needs vary from use case to use case, but for each scenario, you need to be able to migrate between databases when needed without worrying about breaking anything.

With the ever-increasing amount of data being stored every day and the changes it can undergo with time, at some point, you might need to migrate between different databases, depending on your data needs. In this article, I’ll discuss 3 ways you can migrate your data from Postgres to MongoDB. Let’s quickly understand what Postgres and MongoDB are and what they’re suitable for.

What is Postgres?

Postgres is a popular open-source SQL Database. It is an object-relational database, unlike MySQL which is only a relational database. It has more complex data types, allowing you to embed documents and files, and works with different languages like Python, PHP, C, C++, Java, JavaScript, etc. It is usually used as the primary storage for applications due to its flexibility, popularity, security, and reliability.

What is MongoDB?

MongoDB is a non-relational database with a JSON-like structure. It is a great tool to handle whatever form your data comes in and serves it to your customers at scale. It stores objects like documents, databases as clusters, and tables as collections in these clusters.

In this article, I’ll be showing you how to move data from an object-relational database (Postgres) to a non-relational database (MongoDB). Whether your Postgres database is hosted on the cloud or locally on your device, the approach for either of them is similar.

For this read, we’ll be using the Azure Postgres database. If you’re unfamiliar with Azure Postgres, it’s easy to set up and deploy a Postgres database on Azure. The three methods we’ll be exploring are

- Method 1: Moving Data From Postgres to MongoDB Using TSV

- Method 2: Moving Data From Postgres to MongoDB Using JSON

- Method 3: Moving Data From Postgres to MongoDB Using Estuary Flow

Before we go in-depth, let’s do a comparison between these three methods.

Methods for moving data from Postgres to MongoDB: TSV vs JSON vs Estuary Flow

| Method | Data with a simple schema | Data with a complex schema | Export manually | Import to Mongo manually | Fully Automated | Pulls Data Automatically | Free to use | Needs CLI login for every use | Requires writing codes |

| TSV | Yes | No | Yes | Yes | No | No | Yes | Yes | Yes |

| JSON | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes |

| Estuary | Yes | Yes | No | No | Yes | Yes | Pay after 25GB | No | No |

To migrate or not, you might want to think about these things before you make a decision.

- Does your data have rapidly changing structures? If yes, MongoDB is a great choice to handle complex data structures.

- Does your application require a high-velocity, high-volume workload with low latency?

- What are your organization’s development preferences?

Now, let’s dive in.

Method1: Migrate from Postgres to MongoDB using TSV

TSV is Tab Separated Value. With TSV, you can only export data with simple schema, i.e data that doesn’t contain documents or complex associations. To do this, we need to first export the data from Azure Postgres before we can import it to MongoDB. Whether you’re using Azure Postgres or any other type, you need to have an existing database that you’ll like to migrate.

Export the data to TSV

- Connect to your Azure Postgres DB using the CLI. You can use Azure CLI in the Postgres resource you have created or work from your command line.

- Ensure to change the

<host>,<user>,<databaseName>,<tableName>,<filename>to what you have.

In the CLI, type:psql -h <host> -p "5432" -U <user> -d <databaseName> -c "\COPY (SELECT * FROM <tableName>) TO <filename, DELIMITERtsv> ',' CSV"

Example:psql -h "my-postgresql-server.postgres.database.azure.com" -p "5432" -U "postgre_user@my-postgresql-server" -d "databaseName" -c "\COPY (SELECT * FROM tableName) TO /Users/morteza/file.csv DELIMITER ',' CSV"

You may run into Permission errors while copying data to the CSV file. The code above works, but you can refer to this guide if you face any issues. - Download the data to your device.

Import data to MongoDB

- You need to have a MongoDB Cluster created. Make sure that you have Read and Write access as a user (check that in Database Access).

Change<mongodb_user>,<mongodb_password>,<altas-cluster>, and<DATABASE>to the correct values.

You’ll need to install mongoimport. Open up your terminal and type:mongoimport --uri mongodb+srv://<mongodb_user>:<mongodb_password>@<altas-cluster>.mongodb.net/<DATABASE> --collection users --type tsv --file users.tsv --headerline --columnsHaveTypes

Method 2: Migrating from Postgres to MongoDB using JSON

JSON allows for a more complex schema, great for a schema that contains documents. Moving data from Postgress to Mongo DB with JSON is similar to TSV but gives more flexibility. You can add data from other tables too.

Export the data to JSON

- Connect to your Azure Postgres DB using the CLI.

- Make sure you change the

<host>,<databaseName>,<tableName>, and<filename>.psql -h "my-postgresql-server.postgres.database.azure.com" -p "5432" -U "postgre_user@my-postgresql-server" -d "databaseName" -c "\COPY (SELECT * FROM tableName) TO file.json WITH (FORMAT text, HEADER FALSE)" - Download data to your device.

Import data to MongoDB

- You need to have a MongoDB Cluster created. Make sure that you have Read and Write access as a user (check that in Database Access).

You’ll need to install mongoimport to import the file into MongoDB cluster. Open up your terminal and type:mongoimport --uri mongodb+srv://<mongodb_user>:<mongodb_password>@<atlas-cluster>.mognodb.net/<DATABASE> --collection orders --jsonArray orders.json

Method 3: Migrate Postgresql to MongoDB with Estuary Flow

Estuary Flow is a data platform that lets you manage streaming data pipelines and connect to different data sources, whether it’s self-hosted or on the cloud. Over 30 different data connectors are supported, including MySQL, Firebase, Kafka, MariaDB, GitHub, MailChimp, and so on. PS: You can signup for a free account to get started.

With Estuary Flow, you don’t have to worry about CLI sign-ins, manual migration, or anything breaking. It fully automates pulling data from Postgres to MongoDB.

Set up a pipeline to export data from Postgres

- Set up an Estuary account.

- Login to the dashboard.

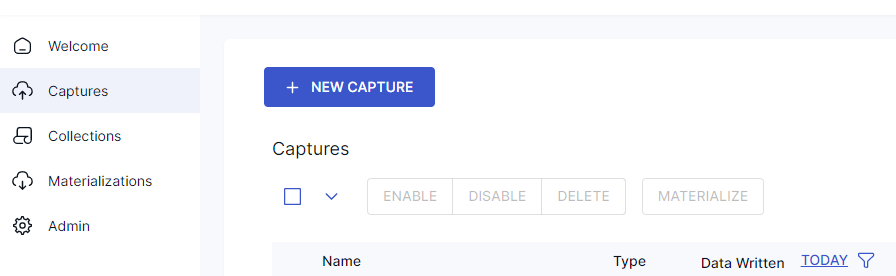

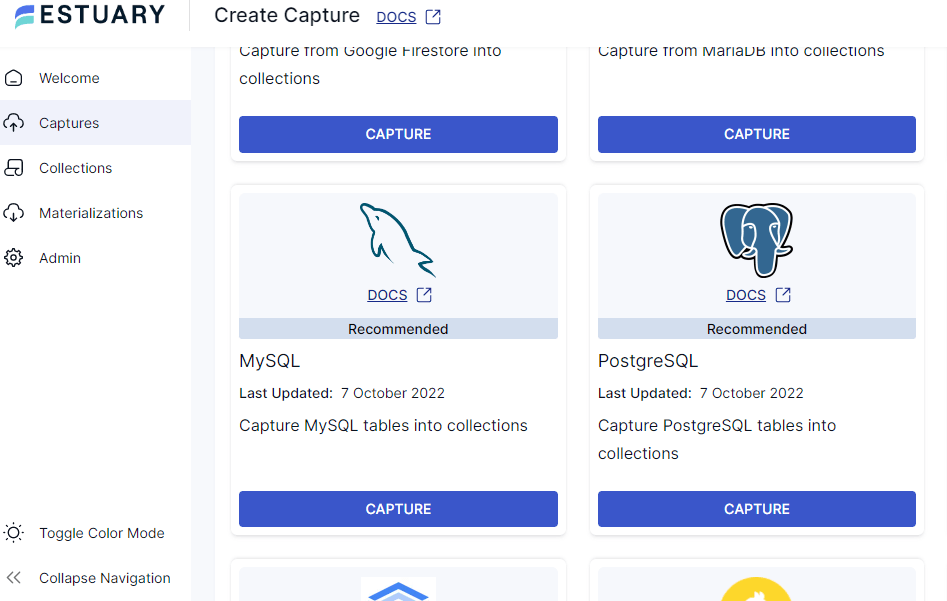

- Click on Capture. Select new capture, look for Postgres, and click on Capture.

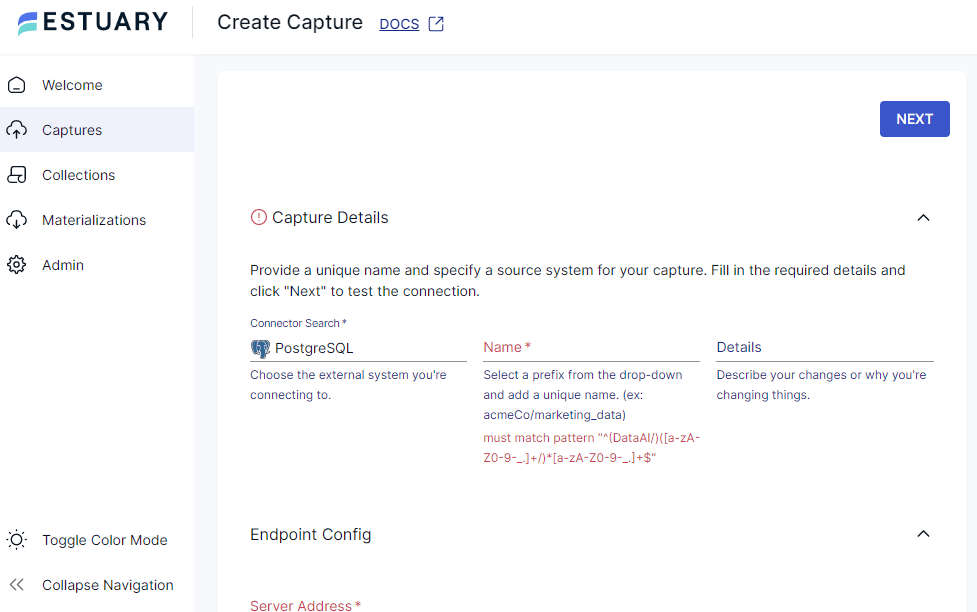

- Give your capture a name and description.

- Click on Endpoint config.

- Open the Azure resource.

- Copy the server name as the server address.

- Type in the Admin username and password.

- Add the name of the database and click Next.

NB: Be sure to allow Estuary IP to access Azure service and the Replication is set to Logical. - Click on Output Collections and select the table name, now stored as a collection.

- Click Save and publish.

Set up a pipeline to import data to MongoDB using Estuary Flow

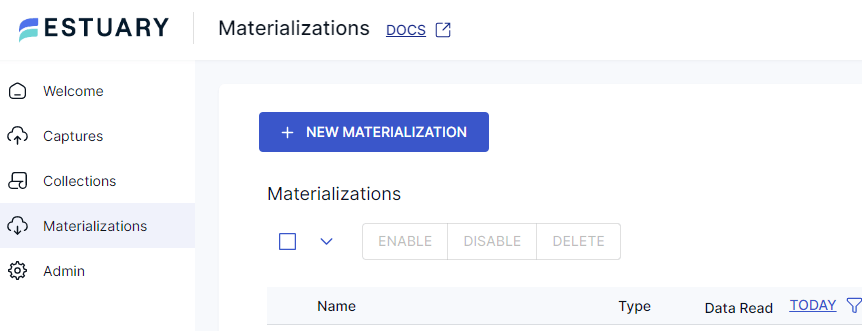

- Click on Materializations.

- Select New materialization.

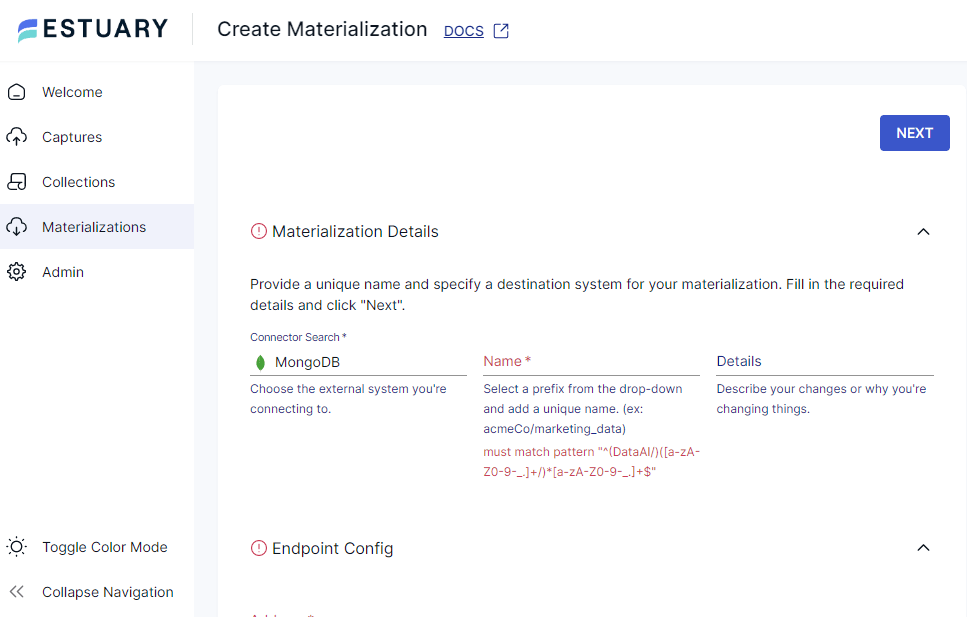

- Search for MongoDB and select Materialize.

- Give a name to the Materialization and add the details.

- Click on Endpoint Config.

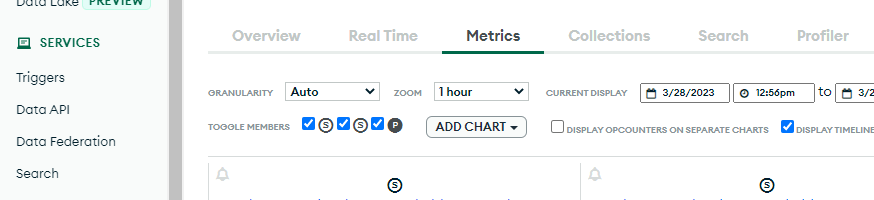

- Input the server address (host address). To get it, open MongoDB, and select your cluster. Click on metrics and you’ll see this. Copy the address at the top of the chart. Your data should be in this format.

- Enter your role username and password. Click on database access. Be sure that your role allows you to read and write to the database.

- Input the name of the database you want to write to.

- Add Estuary’s IP Address, 34.121.207.128, to MongoDB’s allow list.

- Click Next.

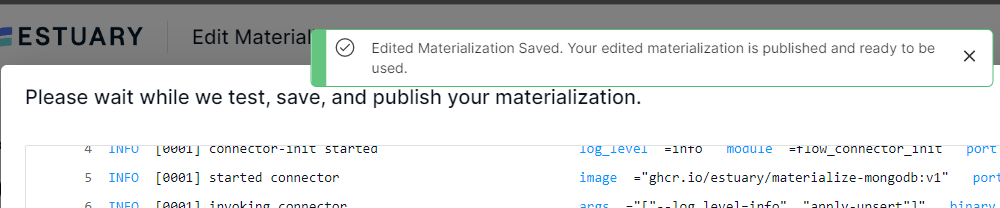

- Click Save and Publish.

- If you see this success screen, congratulations, your pipeline connection has been completed.

Conclusion

Big ups for making it this far. In this read, we discussed 3 methods of moving data from Postgres to MongoDB. Two manual processes and one no-code platform. For data with more complex schema, you can use JSON format as compared to TSV for exporting your data. Automated pipelines like Estuary Flow make it easy to connect to various data sources and manage your data easily while choosing whether to stream in real-time or in batches.

You should also consider your data demands, changing data structures, and organization development preferences before choosing a platform to migrate to. If you’re looking for cost-effective platforms, you can try Postgres compared to MongoDB as it requires you to pay for hosting.

You’ve also seen how to build Postgres to MongoDB automation pipeline with Estuary Flow. It’s free to try out Estuary Flow and build pipelines to and from a variety of data systems.

Resources and References:

https://www.mongodb.com/community/forums/t/unable-to-connect-to-atlas-cluster-via-mongosql/159113/10

https://docs.estuary.dev/reference/Connectors/materialization-connectors/mongodb/

Author

Popular Articles