Replicating your data from MySQL to Parquet seems simple enough: just copy the data from your MySQL database to a Parquet file. In reality, there is a bit more to it. If the replication is not done correctly, it can lead to data loss and inconsistencies.

The process of replicating data from a database like MySQL to a Parquet file can be challenging. But with the right tools and methods, it can be done in a timely and scalable way.

In this article, we’ll be discussing how to replicate data from MySQL to Parquet. We’ll talk about the pros and cons of both MySQL and Parquet, explain how they are different, and give step-by-step instructions for different ways to replicate your data, including the no-code solution by Estuary.

By the end of this guide, you’ll have a better understanding of how to choose the right tool for your specific use case and replicate data from MySQL to Parquet in no time.

If you are already familiar with MySQL, Parquet, and the benefits of connecting MySQL to Parquet, skip to the implementation methods.

Let’s dive in.

Why Replicate Data From MySQL To Parquet

When it comes to big data analytics and reporting, MySQL may not be the best choice for many teams because of its row-based storage architecture. Row-based storage can make common operations such as aggregations and GROUP BY queries less efficient on large data sets.

On the other hand, columnar storage systems like Apache Parquet provide efficient storage and querying capabilities for big data, making them a popular choice for big data analytics and reporting.
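
To make the row-versus-column distinction concrete, here is a toy sketch in plain Python. It only illustrates the two layouts and is not how MySQL or Parquet are actually implemented. The order data, field names, and function names are made up for the example.

```python
# Toy illustration of row-oriented vs column-oriented layouts.
# All data and names here are hypothetical.

# Row-oriented: each record is stored together (similar in spirit to a MySQL row).
rows = [
    {"order_id": 1, "customer": "ana", "amount": 20.0},
    {"order_id": 2, "customer": "bo", "amount": 35.5},
    {"order_id": 3, "customer": "ana", "amount": 14.5},
]

# Column-oriented: each field is stored together (similar in spirit to Parquet).
columns = {
    "order_id": [1, 2, 3],
    "customer": ["ana", "bo", "ana"],
    "amount": [20.0, 35.5, 14.5],
}


def total_from_rows(rows):
    # Every full record is visited even though only one field is needed.
    return sum(row["amount"] for row in rows)


def total_from_columns(columns):
    # Only the "amount" column is touched; the other columns are never read.
    return sum(columns["amount"])


print(total_from_rows(rows), total_from_columns(columns))
```

Both functions return the same total. The difference is how much unrelated data has to be read to get there, and that difference grows with the number of columns and rows.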

Before we get into the nuts and bolts of replicating data from MySQL to Parquet, let’s first make sure we have a good grasp of both technologies.

In the next section, we’ll learn more about MySQL and explore its key features, benefits, and advantages as a relational database management system. We’ll also take a look at its limitations and drawbacks.

Understanding MySQL

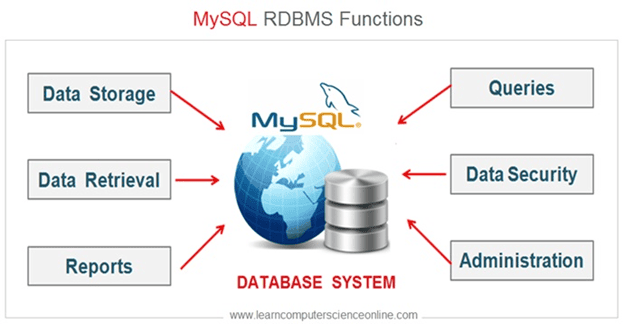

MySQL is a popular open-source relational database management system. It’s widely used in web application development and is known for its reliability, ease of use, and performance.

MySQL stores data in tables, which are organized into databases, and it uses SQL (Structured Query Language) to access and manipulate all the data.

It is compatible with most operating systems, including Windows, Linux, and macOS, and managed versions are available from major cloud providers like AWS, GCP, and Azure. It can be used in a wide variety of applications, such as eCommerce websites, content management systems, and data analytics.

Key Features & Advantages Of MySQL

- Both self-hosted and managed versions of MySQL are available.

- It has a proven track record for being stable, fast, and straightforward to set up.

- It uses SQL, making it easily accessible to data scientists.

- It can handle a vast number of users daily with a high degree of adaptability and robustness.

- MySQL is a dependable and highly functional database management system, as attested to by its widespread use among major technology companies.

Limitations & Disadvantages Of MySQL

- It has limited built-in analytics capabilities.

- MySQL is not suitable for handling fast-changing, highly volatile data.

- It may not be the best choice for certain use cases, such as real-time streaming.

- MySQL is a row-based storage system, which can make data analysis on large datasets inefficient.

- The performance of MySQL tends to degrade as the dataset grows, making it more challenging to scale.

With a solid understanding of MySQL under our belt, it’s time to dive into understanding another popular data storage and processing solution: Parquet.

Understanding Parquet

Parquet is a columnar storage format for large-scale data processing. It is designed to work with data systems like Apache Hadoop and Apache Spark and is optimized for use with these systems.

Parquet is designed to be highly efficient in terms of both storage space and query performance. It compresses and encodes data column by column, which reduces the amount of disk I/O required to read and write data and makes it a common choice for storing large amounts of data in big data environments.
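
As a rough intuition for why column-wise encoding saves space, the sketch below applies two simple techniques, dictionary encoding and run-length encoding, to a single column of repeated values. Parquet supports encodings of this kind, but this is a simplified illustration in plain Python and not Parquet's actual implementation. The function names and sample data are hypothetical.

```python
def dictionary_encode(values):
    """Replace each value with a small integer index into a list of distinct values."""
    dictionary = []
    positions = {}
    indices = []
    for value in values:
        if value not in positions:
            positions[value] = len(dictionary)
            dictionary.append(value)
        indices.append(positions[value])
    return dictionary, indices


def run_length_encode(indices):
    """Collapse consecutive repeats into [value, run_length] pairs."""
    runs = []
    for index in indices:
        if runs and runs[-1][0] == index:
            runs[-1][1] += 1
        else:
            runs.append([index, 1])
    return runs


# A hypothetical "country" column with many repeated values.
country = ["DE", "DE", "DE", "US", "US", "DE"]
dictionary, indices = dictionary_encode(country)
runs = run_length_encode(indices)
print(dictionary, indices, runs)
```

Because all values in a column share a type and often repeat, encodings like these tend to work much better on a column than on a row that mixes many fields.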

Key Features & Advantages Of Parquet

- The Parquet file data format is ideal for machine learning pipelines.

- Parquet files can be easily integrated with cloud storage solutions like AWS S3 and Google Cloud Storage.

- Storing data in Parquet format allows the use of high compression rates and encoding options for efficient storage.

- Parquet files use the columnar storage format which allows for efficient querying and aggregation on large data sets.

- Data stored in Parquet files is compatible with many big data processing frameworks such as Apache Spark and Hive.

Limitations & Disadvantages Of Parquet

- The Parquet file format is not well-suited for transactional workloads.

- The Parquet format may require additional work to handle nested data structures.

- It can be more complex to set up and maintain compared to traditional relational databases like MySQL.

Now let’s take a look at the use cases for both MySQL and Parquet and how to choose the best tool for your unique use case.


Distinguishing MySQL From Parquet

A couple of questions remain:

Where do you use MySQL, and where do you use Parquet? And how do you select which one to use?

Let’s take a look.

Use Cases For MySQL & Parquet

When it comes to the use cases, MySQL and Parquet have different strengths and are often used in different industries and applications.

MySQL is commonly used in web and application development, and for powering transactional workflows like eCommerce sites or customer relationship management systems. It is also used as a primary data store for small to medium-sized businesses.

One real-world example of MySQL’s use is in the eCommerce industry. Many online retailers use MySQL as the primary data store for product information, customer data, and order history. These transactional workloads require real-time updates and data consistency, which MySQL can handle efficiently.

On the other hand, Parquet has an entirely different use case that mainly focuses on big data analytics and machine learning. It is used in industries such as finance, healthcare, and retail as a storage format in data lakes and warehouses for storing and querying large amounts of historical data.

For instance, hospitals and research institutions often have large amounts of patient data that need to be stored and analyzed for purposes like medical research, drug discovery, and public health studies. Because it stores data in columns and compresses it efficiently, Parquet is a good choice for analyzing these large data sets.

Choosing The Right Storage Format For The Job

When it’s time for you to decide which data format to use, it’s critical to consider the specific needs of the task at hand as well as the nature of the data you are working with.

When deciding, think about:

- The need for real-time updates.

- The volume of data and its generation rate.

- The complexity of queries that will be performed on the data.

- The rate at which data size will grow and the need for scalability.

- Your company’s needs for data storage and processing, as well as the types of queries that will be run on the data.

But remember, MySQL and Parquet are not mutually exclusive and can be used in a data pipeline together to get the best of both worlds.

Businesses can usually achieve the best performance and scalability by replicating data from MySQL to Parquet and maintaining both. The key is to select the tool that best meets your company’s specific needs and objectives and to continuously assess and optimize all your data pipelines as your requirements change.

Methods For Replicating Data From MySQL To Parquet

In this section, we will explore two techniques for data replication:

- The first will be to manually write a script to migrate the data from MySQL to Parquet.

- The second will be to use a no-code data pipeline solution: Estuary Flow.

Let’s begin with the first one.

Method 1: Manually Writing A Script

Writing your own code to copy data from MySQL to Parquet gives you a lot of control over how the data is processed and transformed. And with the relevant technical background, this can be completed fairly quickly.

If you use this method, you will have to write a script that connects to the MySQL database, extracts the data, transforms it, and then writes it to Parquet files.

Most modern programming languages can be used for this.

What follows is a simple script that performs all of the steps required for replicating data from MySQL to Parquet using the Python programming language and its libraries, including PyMySQL, Pandas, and PyArrow.

- We start by using the PyMySQL library to connect to the MySQL database and execute a SQL query to retrieve the data you want to migrate.

      import pymysql

      # Connect to the MySQL database
      conn = pymysql.connect(host="host", port=3306, user="user", password="passwd", database="dbname")

      # Create a cursor and execute a SQL query
      cursor = conn.cursor()
      cursor.execute("SELECT * FROM tablename")

      # Fetch the rows and the column names from the query
      data = cursor.fetchall()
      columns = [col[0] for col in cursor.description]

      # Release the database resources
      cursor.close()
      conn.close()

- Next, we use the Pandas library to create a DataFrame from the data and manipulate the data as needed.

      import pandas as pd

      # Create a DataFrame from the data, keeping the MySQL column names
      df = pd.DataFrame(list(data), columns=columns)

      # Add data processing steps as per requirement

- And finally, we use the PyArrow library to write the DataFrame to a Parquet file in the specified location.

      import pyarrow as pa
      import pyarrow.parquet as pq

      # Write the DataFrame to a Parquet file
      table = pa.Table.from_pandas(df)
      pq.write_table(table, '/data/parquet/tablename.parquet')

While this method provides you with a lot of flexibility, it has some major drawbacks.

- Automating the replication process is not easy with this method.

- The method does not incorporate any data consistency and integrity checks.

- Optimizing the data replication code can require a significant research effort.

- The method is not easy to scale. With increasing data size, you may need to use different libraries entirely and rewrite your entire code.

- To implement your own replication code, you will need a deep knowledge of data structures, programming, and the relevant libraries.
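
Some of these drawbacks can be reduced with more code, at the cost of more to maintain. As a minimal sketch, assuming a table too large to fetch in one call, the rows can be streamed in batches and counted as they are written. The helper names `fetch_in_batches` and `export_to_parquet` are hypothetical, the cursor is any DB-API cursor on which a query has already been executed (for example a PyMySQL cursor), and `pa.Table.from_pylist` assumes a reasonably recent PyArrow version.

```python
def fetch_in_batches(cursor, batch_size=10_000):
    """Yield lists of row dicts from an already-executed DB-API cursor."""
    column_names = [col[0] for col in cursor.description]
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        yield [dict(zip(column_names, row)) for row in batch]


def export_to_parquet(cursor, path, batch_size=10_000):
    """Stream query results into one Parquet file and return the rows written."""
    # Imported here so fetch_in_batches can be used without PyArrow installed.
    import pyarrow as pa
    import pyarrow.parquet as pq

    writer = None
    schema = None
    rows_written = 0
    try:
        for batch in fetch_in_batches(cursor, batch_size):
            if writer is None:
                # Assumption: the schema inferred from the first batch fits
                # every later batch. This can fail if a column is entirely
                # NULL in the first batch.
                table = pa.Table.from_pylist(batch)
                schema = table.schema
                writer = pq.ParquetWriter(path, schema)
            else:
                table = pa.Table.from_pylist(batch, schema=schema)
            writer.write_table(table)
            rows_written += table.num_rows
    finally:
        if writer is not None:
            writer.close()
    return rows_written
```

A simple consistency check is to compare the returned row count with the result of a `SELECT COUNT(*)` on the source table. The two can legitimately differ if the table changes while the export runs, which is one reason a snapshot script like this is not a substitute for continuous replication.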

On the other hand, no-code tools like Estuary Flow offer a more user-friendly and automated method of data replication and migration. This managed solution can ensure reliable, efficient, and scalable data pipelining with minimal engineering effort.

Method 2: Using Estuary Flow

Now let’s discuss the easier solution – replicating data from a MySQL database to Parquet files in an AWS S3 bucket using the no-code data pipeline solution, Estuary Flow.

Estuary Flow simplifies the process of data replication and migration with its no-code approach. Here are some benefits you can experience:

- Change data capture: This allows the detection of changes in the source database, the automatic retrieval of change data, and its transfer to the S3 bucket in seconds.

- User-friendly interface: As you will see below, Estuary Flow offers an easy-to-use GUI for configuring and managing data pipelines.

- Built-in error handling and data validation: This helps prevent data loss or corruption.

- Real-time data integration: With Estuary Flow’s real-time data-processing capabilities, you will always have the latest data available for immediate analysis.

- High scalability: With its fast customization options, and a wide array of data connectors, you will always be ready to adapt to changing requirements.

In Estuary Flow, there are three main parts to a basic data pipeline: data capture, one or more collections, and materialization.

The data capture ingests data from an external source, such as a MySQL database, and stores it in one or more collections in a cloud-backed data lake. The materialization then sends the data from the collections to an external location, like a Parquet file in an S3 bucket.

The entire process of replicating data from MySQL to Parquet using Estuary Flow can be broken down into three main steps:

- Setting up the MySQL database for connecting to Estuary Flow

- Configuring the data capture

- Setting up the materialization destination

Estuary Connectors are used in both the capture and materialization steps to interface between Flow and the data systems they are connected to.

Before we provide details on the exact steps to follow, make sure you have a running MySQL server and an Estuary Flow account ready. You can sign up for one here.

Set Up MySQL For Data Capture

Your MySQL database must be properly configured before you can connect it to Estuary Flow. This includes enabling the binary log in the database system, creating a watermarks table, and ensuring that the database user you are using has the appropriate permissions.

The entire process can be completed by running just a few SQL commands in MySQL Workbench or a command line tool. You can find the details here.
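
Before connecting, it can help to confirm that the binary log is actually enabled. The sketch below reads two standard MySQL server variables, `log_bin` and `binlog_format`, through any DB-API cursor such as one from PyMySQL. The expected values (`ON` and `ROW`) are an assumption based on how log-based change data capture generally works, and the helper names are hypothetical. The watermarks table and user permissions are not covered here, so treat Estuary's setup instructions as the authoritative list of requirements.

```python
# Assumed requirements for log-based change data capture; verify against
# Estuary's documentation before relying on them.
REQUIRED_SETTINGS = {"log_bin": "ON", "binlog_format": "ROW"}


def read_binlog_settings(cursor):
    """Return the relevant server variables as a dict, using a DB-API cursor."""
    cursor.execute(
        "SHOW VARIABLES WHERE Variable_name IN ('log_bin', 'binlog_format')"
    )
    return {name: value for name, value in cursor.fetchall()}


def binlog_problems(settings):
    """List human-readable problems; an empty list means the checks passed."""
    problems = []
    for name, expected in REQUIRED_SETTINGS.items():
        actual = str(settings.get(name, "<not set>"))
        if actual.upper() != expected:
            problems.append(f"{name} is {actual}, expected {expected}")
    return problems
```

With an open connection, `binlog_problems(read_binlog_settings(conn.cursor()))` returns an empty list when both variables have the assumed values, and a list of messages otherwise.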

Create The MySQL Capture

To create a capture to connect to your MySQL data source:

- Go to the Captures tab in the Estuary Flow dashboard and choose New capture.

- Choose the MySQL connector and fill out the required properties, including a name for the connector, the database host, username, and password.

- Next, retrieve the names of all tables in your database by clicking the Next button. Flow maps the retrieved tables to data collections.

- Select the collections you want to capture and configure any additional settings such as filtering or renaming collections.

- Once you are happy with the configuration, click Save and Publish to initiate the connection and begin capturing data.

Create The Parquet Materialization

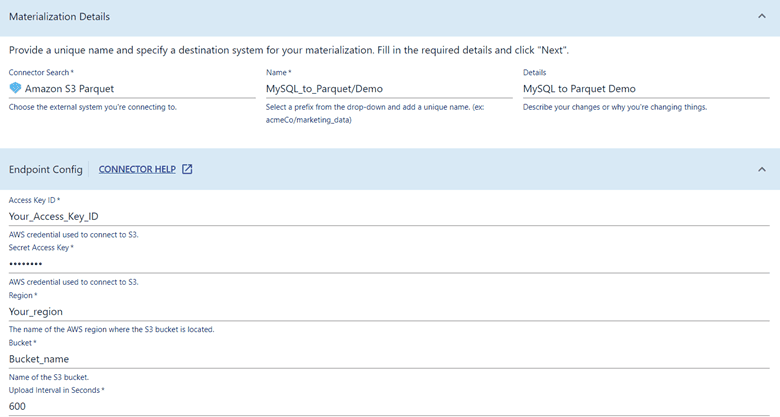

Next, you’ll create a materialization that pushes data to an S3 bucket in Apache Parquet format:

- Go to the Materializations tab in the Estuary Flow Dashboard web app and choose New materialization.

- Choose the Amazon S3 Parquet connector and fill out the required properties, including the AWS access key and secret access key, the S3 bucket name, and the region.

- Bind the collections that you want to materialize to the materialization (the collections from the MySQL table you just captured should be selected automatically).

- Set the upload interval in seconds. This is how often the data will be pushed to S3.

- Once the materialization is configured, click Save and Publish to initiate the connection and begin materializing the data to the S3 bucket.

For more information about this method, see the Estuary documentation.

Conclusion

Replicating data from MySQL to Parquet can be a valuable step in optimizing your data pipeline and improving the performance of your analytics and reporting tools. By following the methods outlined in this guide, you can easily set up a replication process that ensures your data is up-to-date and ready for use.

At Estuary, we know how crucial data replication and migration are for businesses. That’s why we offer Estuary Flow, our powerful platform for streamlining the entire process of data management, from acquisition to operationalization. But don’t just take our word for it.

Sign up for free today and experience the power of real-time data pipelines, set up in minutes without the hassle of managing infrastructure.
