Relational database systems such as MySQL have long been a cornerstone for businesses that need to store and manage data efficiently. MySQL's use of Structured Query Language (SQL) and its robust feature set make it popular for web applications and other data-driven tasks.

However, as businesses scale and data increases, MySQL's limitations become more apparent. Some of these include challenges in scaling to accommodate data growth, maintaining consistent performance under high workloads, and adapting to changing data structures.

Migrating to a serverless NoSQL database like Amazon DynamoDB offers a compelling solution to overcome these challenges. DynamoDB boasts impressive scalability, ensuring consistent performance even as data volumes grow exponentially. Its flexible schema allows for adaptable data structures, making it ideal for applications with changing data requirements.

By leveraging DynamoDB's capabilities, you can unlock significant improvements in application speed, scalability, and overall data management efficiency. This comprehensive guide will cover the best methods for migrating data from MySQL to DynamoDB.

MySQL – The Source

MySQL is a popular open-source relational database management system offered by Oracle Corporation. It operates on a client-server model and utilizes Structured Query Language (SQL) for database management. MySQL is widely used in web applications and is a key element of the LAMP software stack, which includes Linux, Apache, MySQL, and Perl/PHP/Python.

One of the standout features of MySQL is its support for ACID (Atomicity, Consistency, Isolation, Durability) transactions, guaranteeing the integrity and reliability of data. ACID transactions ensure that database operations are completely executed or rolled back in the event of failures, maintaining data consistency and integrity.

Here are some of its key features:

- Stored Procedures and Triggers: MySQL supports server-side programming using stored procedures and triggers. Stored procedures are SQL routines that are stored and executed on the server, while triggers automatically execute in response to specific database events.

- Text-Search Capabilities: MySQL provides built-in full-text search through FULLTEXT indexes, which are supported by the MyISAM storage engine and, since MySQL 5.6, by InnoDB. These indexes allow fast searches on large volumes of text data using natural language queries.

- Replication: MySQL offers replication capabilities that allow data from one MySQL database server to be copied to one or more MySQL database servers. This is beneficial for data backup, fault tolerance, and scalability, as it allows load distribution across multiple servers.

DynamoDB – The Destination

DynamoDB is a robust NoSQL database service offered by Amazon Web Services (AWS). It is designed to deliver rapid and consistent performance, particularly for applications that require minimal latency. DynamoDB automatically distributes data and traffic across multiple servers, efficiently managing each customer's requests. This allows it to scale seamlessly and maintain high performance without manual optimization or provisioning.

Among the impressive features of DynamoDB is its support for Time-To-Live (TTL) attributes. TTL allows you to define a timestamp on data items, after which the system automatically deletes them. This is beneficial for managing data with a defined lifespan, reducing storage costs, and simplifying data management processes.
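As a rough illustration of how a TTL value is usually prepared, the sketch below computes an expiry timestamp in Unix epoch seconds, which is the numeric format DynamoDB TTL reads. The attribute name `expires_at`, the `session_id` field, and the 30-day lifetime are assumptions made for this example; TTL must also be enabled on the table for the chosen attribute.

```python
from datetime import datetime, timedelta, timezone


def ttl_epoch_seconds(created_at: datetime, lifetime: timedelta) -> int:
    """Return the expiry time as whole Unix epoch seconds."""
    if created_at.tzinfo is None:
        # Naive datetimes are ambiguous, so require an explicit timezone.
        raise ValueError("created_at must be timezone-aware")
    return int((created_at + lifetime).timestamp())


# Hypothetical session item that should expire 30 days after creation.
created = datetime(2024, 1, 1, tzinfo=timezone.utc)
session_item = {
    "session_id": "abc123",
    "expires_at": ttl_epoch_seconds(created, timedelta(days=30)),
}
print(session_item["expires_at"])
```

Expired items are removed by a background process rather than at the exact timestamp, so applications that must never see stale items typically also filter on the TTL attribute when reading.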

Here are some of the key features of DynamoDB:

- Scalability: DynamoDB automatically scales tables to adjust for capacity and maintain performance as your data storage needs grow without limits. It can handle over 10 trillion requests per day and accommodate peaks of over 20 million requests per second.

- Durability and Availability: DynamoDB automatically replicates data across multiple AWS Availability Zones for enhanced durability and availability. It offers an availability SLA of 99.99% to 99.999%, depending on configuration (the higher figure applies to global tables). DynamoDB also continuously backs up data and verifies it to safeguard against hardware failures or data corruption.

- Flexible Data Model: DynamoDB supports both key-value and document data models, allowing tables to store items with varying attributes. This schemaless design lets you adapt your data model as requirements evolve.
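To make the flexible data model concrete, here is a minimal sketch that converts MySQL-style rows (plain Python dictionaries) into DynamoDB's low-level attribute-value format, where each value is tagged with a type such as `S` (string) or `N` (number). The column names and sample rows are hypothetical, and only a few scalar types are handled.

```python
from decimal import Decimal


def to_attribute_value(value):
    """Convert a Python scalar to a DynamoDB attribute-value dict."""
    if value is None:
        return {"NULL": True}
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return {"BOOL": value}
    if isinstance(value, (int, float, Decimal)):
        return {"N": str(value)}  # numbers are transmitted as strings
    if isinstance(value, str):
        return {"S": value}
    raise TypeError(f"unsupported type: {type(value).__name__}")


def row_to_item(row: dict) -> dict:
    """Convert one row (column -> value) into a DynamoDB item."""
    return {column: to_attribute_value(value) for column, value in row.items()}


# Two rows with different attribute sets can live in the same table.
rows = [
    {"customerid": 1, "name": "Ada", "email": "ada@example.com"},
    {"customerid": 2, "name": "Lin", "loyalty_tier": "gold", "active": True},
]
items = [row_to_item(row) for row in rows]
print(items[1])
```

Only the key attributes need to be present on every item; the second item above carries attributes the first one lacks, and no table-level schema change is required.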

How to Migrate From MySQL to DynamoDB

Let’s look into the details of the two methods to migrate data from MySQL to DynamoDB.

- Method 1: Using Estuary Flow to migrate MySQL to DynamoDB

- Method 2: Using AWS DMS to migrate MySQL to DynamoDB

Method 1: Using Estuary Flow to Migrate From MySQL to DynamoDB

If you are looking for a low-effort way to migrate data from MySQL to DynamoDB, consider using a no-code ETL (Extract, Transform, Load) tool like Estuary Flow. It automates the data migration process with pre-built connectors and requires very little technical expertise. You can also use Estuary Flow to keep your data integrated in real time.

Here are the steps involved in using Estuary Flow to transfer your data from MySQL to DynamoDB.

Prerequisites

Step 1: Configure MySQL as the Source

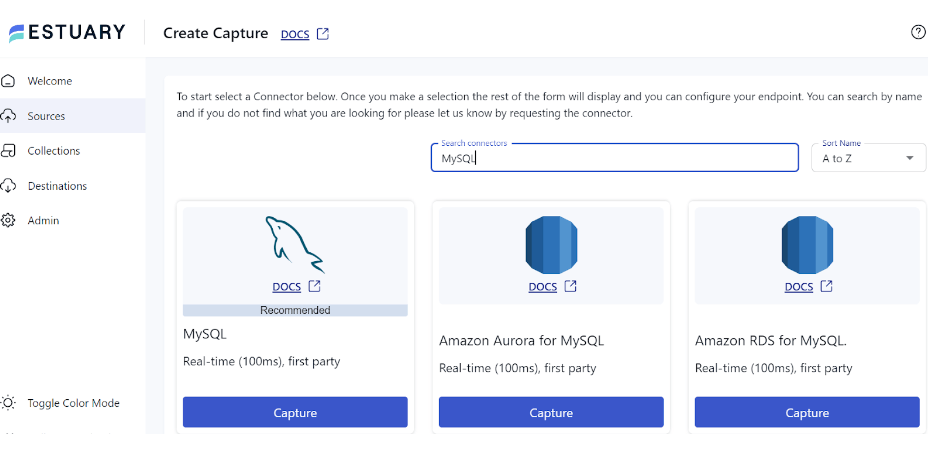

- Sign in to your Estuary account.

- Click the Sources option on the left side pane of the dashboard.

- Click on the + NEW CAPTURE button on the top-left of the Sources page.

- Enter MySQL in the Search connectors box. Click the connector’s Capture button when you see it in the search results.

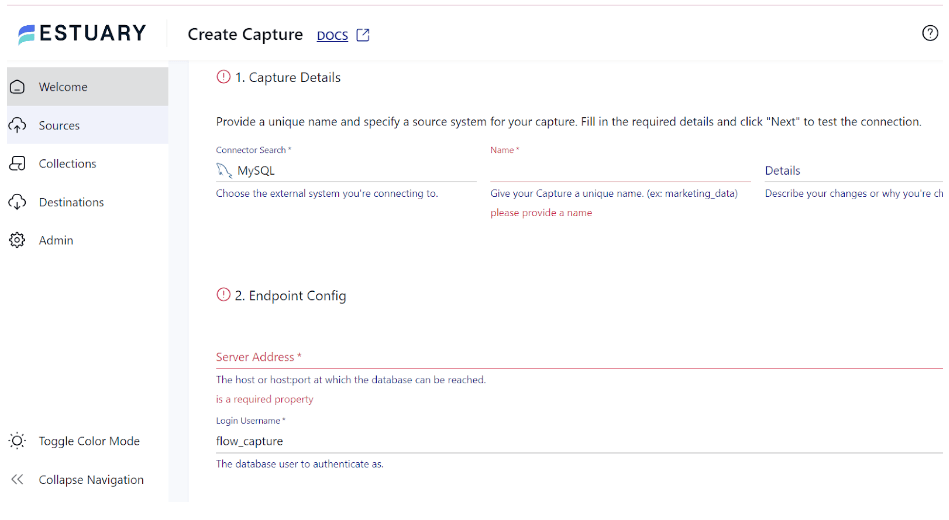

- On the MySQL connector configuration page, enter a Name, Server Address, Login Username, and Timezone.

- Click NEXT > SAVE AND PUBLISH. This CDC connector will capture change events from your MySQL database via the Binary Log.

Step 2: Configure DynamoDB as the Destination

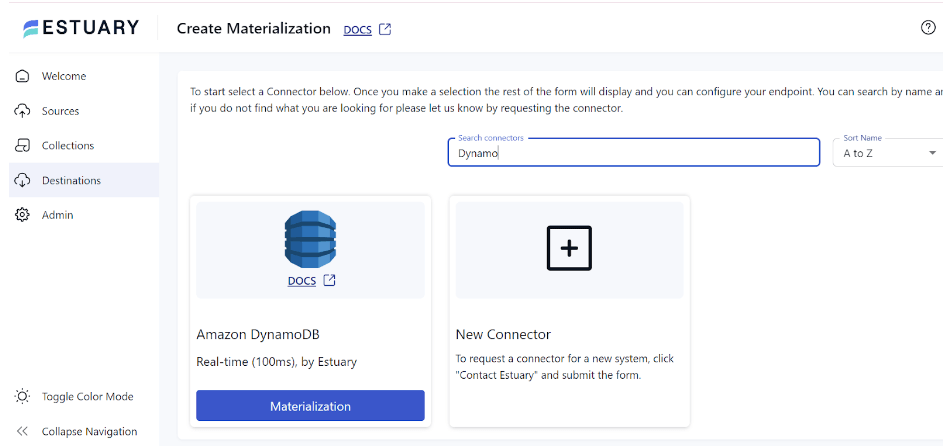

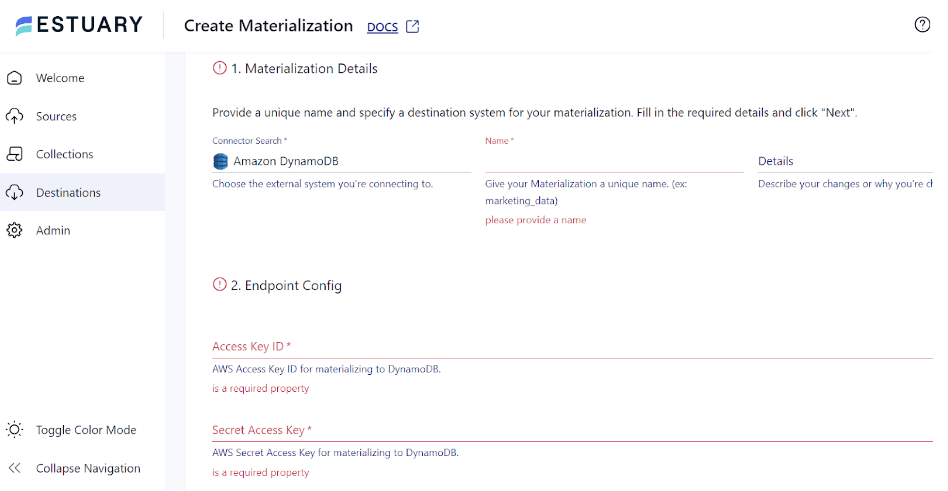

- After configuring the source, you can navigate to the Estuary dashboard and click the Destinations option.

- Click on + NEW MATERIALIZATION on the Destinations page.

- Enter DynamoDB in the Search connectors box, and click the Materialization button of the connector.

- On the Create Materialization page, enter the required details such as Name, Access Key ID, Secret Access Key, and Region.

- If your MySQL data collection is not automatically included in your materialization, you can manually add it. To do this, navigate to the Source Collections area and click the SOURCE FROM CAPTURE button.

- Click NEXT > SAVE AND PUBLISH to finish configuring DynamoDB as the destination end of your data migration pipeline. The connector will materialize Flow collections into DynamoDB tables.

To know more about Estuary Flow, read the following documentation:

Benefits of Estuary Flow

Some benefits of using Estuary Flow to migrate data from MySQL to DynamoDB:

- Wide Range of Connectors: Estuary Flow provides over 200 readily available connectors. Without writing a single line of code, you can easily configure these connectors to integrate data from various sources to destinations.

- Real-time Data Processing: Flow supports real-time data transfer from MySQL to DynamoDB. This helps with effective real-time decision-making, as your downstream apps will always have access to the most recent data.

- Flexibility and Scalability: It can scale horizontally to accommodate varying data volumes and support active workloads at a 7 GB/s CDC rate.

- Change Data Capture (CDC): Estuary Flow supports CDC; any updates or modifications to the MySQL database will be automatically reflected in DynamoDB. This reduces latencies while keeping data integrity intact.

Method 2: Using AWS DMS to Copy Data From MySQL to DynamoDB

AWS Database Migration Service (AWS DMS) helps transfer data from a source database to a destination database. To keep the two databases synchronized, AWS DMS monitors changes made to the source database during migration and applies them to the target database.

Let’s dive into the details of using AWS DMS to load MySQL data to DynamoDB.

Before starting the migration process, it is important to verify that your source MySQL database is either hosted on-premises or running within the AWS ecosystem. For this guide, let’s consider MySQL hosted on-premises. Additionally, it is crucial to ensure your AWS DMS instance has the required permissions and access to the source MySQL database and the target DynamoDB table.

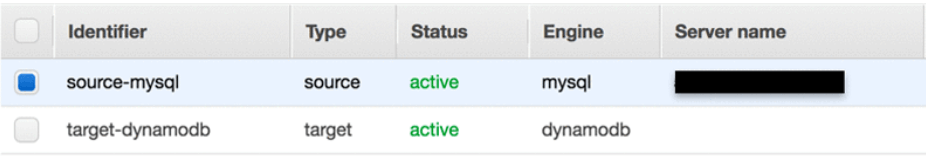

Step 1: Establish the Source and Target Endpoints

- In your AWS DMS console, create a replication instance with sufficient storage and processing power to execute the migration task.

- Connect to MySQL with a user account that has the SUPER and REPLICATION CLIENT privileges, which DMS uses to read data and change events from the database.

- Ensure your MySQL database is configured to support DMS by enabling the binary log and setting the binlog_format parameter to ROW for CDC.

- Before you start working with a DynamoDB database as a target for DMS, create an IAM role for DMS and provide access to the DynamoDB target tables.

- In the AWS DMS console, set up the source endpoint for MySQL and the target endpoint for DynamoDB.

Sample endpoints are shown in the screenshot below.

- The information for one of the endpoints, source-mysql, is displayed as follows:

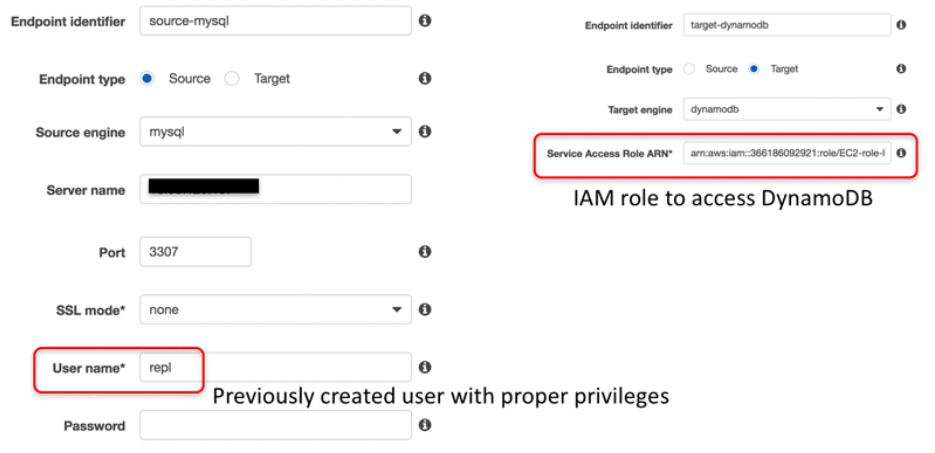
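The binary-log settings listed in this step can be checked before the endpoints are tested. The following is a minimal sketch, assuming the server variables have already been read (for example with `SHOW VARIABLES`) into a Python dictionary; the function name `check_cdc_settings` and the sample values are hypothetical.

```python
def check_cdc_settings(variables: dict) -> list:
    """Return a list of problems found in the MySQL variables; empty means OK."""
    problems = []
    # log_bin reports whether binary logging is enabled on the server.
    if str(variables.get("log_bin", "")).upper() not in ("ON", "1"):
        problems.append("binary logging is disabled (log_bin must be ON)")
    # Row-based logging is the format this guide requires for CDC.
    if str(variables.get("binlog_format", "")).upper() != "ROW":
        problems.append("binlog_format must be ROW")
    return problems


# Hypothetical values as they might be returned by SHOW VARIABLES.
server_variables = {"log_bin": "ON", "binlog_format": "MIXED"}
for problem in check_cdc_settings(server_variables):
    print(problem)
```

An empty result means both settings named in this step are in place; other DMS prerequisites are not covered by this check.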

Step 2: Use Object Mapping Rule to Create a Task

- Let’s assume that the MySQL table has a composite primary key consisting of the customerid, orderid, and productid columns. Using an object mapping rule, you will reorganize the key into the desired data structure in DynamoDB.

- In this example, the hash (partition) key in the DynamoDB table is a combination of the customerid and orderid columns, and the productid column serves as the sort key.

- If the Start task on create option is not cleared, migration begins as soon as the task is created.

- The task creation page is seen in the screenshot below.
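The key reorganization described in this step might be expressed with a table-mapping document along the following lines. This is a sketch based on the object-mapping format AWS DMS documents for DynamoDB targets, not the exact rule used for this guide: the schema name `sales`, the table names `orders` and `orders_ddb`, the attribute name `customer_order`, and the `#` separator are all assumptions, so check the rule keys against the current DMS documentation before using them.

```python
import json

# Hypothetical source table: sales.orders, keyed by
# (customerid, orderid, productid).
table_mappings = {
    "rules": [
        {
            # Select the source table to migrate.
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "select-orders",
            "object-locator": {"schema-name": "sales", "table-name": "orders"},
            "rule-action": "include",
        },
        {
            # Map each source row to one DynamoDB item.
            "rule-type": "object-mapping",
            "rule-id": "2",
            "rule-name": "orders-to-dynamodb",
            "rule-action": "map-record-to-record",
            "object-locator": {"schema-name": "sales", "table-name": "orders"},
            "target-table-name": "orders_ddb",
            "mapping-parameters": {
                "partition-key-name": "customer_order",
                "sort-key-name": "productid",
                # The original columns are folded into the partition key.
                "exclude-columns": ["customerid", "orderid"],
                "attribute-mappings": [
                    {
                        "target-attribute-name": "customer_order",
                        "attribute-type": "scalar",
                        "attribute-sub-type": "string",
                        "value": "${customerid}#${orderid}",
                    },
                    {
                        "target-attribute-name": "productid",
                        "attribute-type": "scalar",
                        "attribute-sub-type": "string",
                        "value": "${productid}",
                    },
                ],
            },
        },
    ]
}

# The JSON text is what would be supplied as the task's table mappings.
print(json.dumps(table_mappings, indent=2))
```

Combining customerid and orderid into one string attribute keeps all products of an order under a single partition key, so they can be read with one query on that key.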

Step 3: Start the Migration Process

- If the target table given in the target-table-name attribute does not already exist in DynamoDB, DMS creates it according to the data type conversion rules for the source and target data types. Several metrics are available to track the migration’s progress.

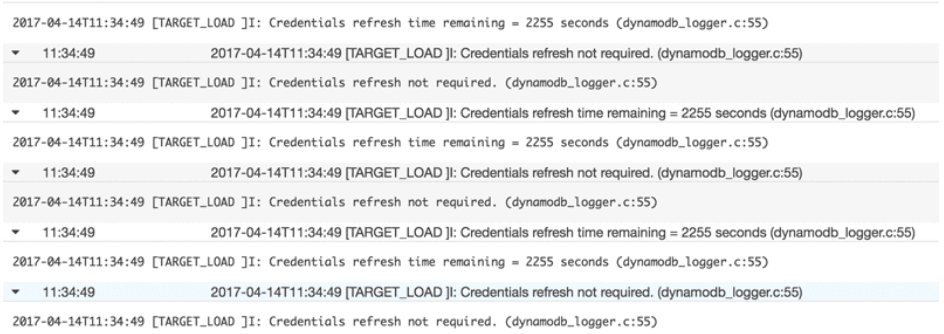

- The events and failures recorded by CloudWatch Logs are displayed in the screenshot below.

- After the migration is finished, delete the DMS replication instances you used. CloudWatch Logs data is deleted automatically when its retention period expires.

Limitations of Using AWS DMS to Convert MySQL to DynamoDB

- No Schema Conversion: AWS DMS does not automatically perform schema or code conversion when migrating from MySQL to DynamoDB. You must manually design and create the appropriate DynamoDB tables and data models to effectively store your MySQL data. Given the differences between relational and NoSQL data models, this can be complex; significant effort might be required to restructure your data to fit DynamoDB's key-value format.

- Complex Data Transformations: AWS DMS might not be viable if the data needs complex transformations during migration; you may need additional tools or scripts to preprocess the data before transferring it to DynamoDB.

- Lack of Data Validation: Manual migration processes often lack built-in data validation and quality checks. Without automated data validation, ensuring the accuracy and integrity of the migrated data becomes a manual and error-prone task, increasing the risk of data inconsistencies and quality issues.
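One way to reduce the validation gap is to compare the two sides yourself after the load. The sketch below is a minimal example, assuming both the MySQL rows and the DynamoDB items have already been fetched into plain Python dictionaries that share the same attribute names; the `orderid` and `total` fields and the sample data are hypothetical.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Hash a record's content in a key-order-independent way."""
    canonical = json.dumps(record, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare(source_rows, target_items, key_columns):
    """Report keys that are missing, unexpected, or differ in content."""
    def index(records):
        return {tuple(r[k] for k in key_columns): fingerprint(r) for r in records}

    source, target = index(source_rows), index(target_items)
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(
            key for key in source.keys() & target.keys()
            if source[key] != target[key]
        ),
    }


source_rows = [
    {"orderid": 1, "total": "9.99"},
    {"orderid": 2, "total": "5.00"},
    {"orderid": 3, "total": "7.50"},
]
target_items = [
    {"orderid": 1, "total": "9.99"},
    {"orderid": 2, "total": "5.50"},
]
report = compare(source_rows, target_items, ["orderid"])
print(report)
```

If keys were restructured during migration (for example, merged into a composite partition key), the records would need to be normalized back to a common shape before a comparison like this is meaningful.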

Conclusion

A MySQL to DynamoDB migration can significantly increase your database's performance and scalability, enabling more effective data retrieval and storage. However, prior to selecting a migration method, it is crucial to understand your project's needs and constraints.

Manual migration offers flexibility and control but may be labor-intensive and time-consuming, particularly for complicated datasets. This emphasizes the need for a simpler, more manageable solution. With a no-code data migration tool like Estuary Flow, you can save time and effort by automating the migration process. It helps provide a smooth transition, reduces the risk of human error, and helps maintain data consistency and integrity throughout the migration.

Estuary Flow is an ideal ETL tool for building real-time data pipelines connecting a variety of sources and destinations. Register for a free Estuary account or sign in to your existing account to get started!

FAQs

1. Why should I consider migrating from MySQL to DynamoDB?

Businesses choose to migrate to DynamoDB for several compelling reasons: improved scalability to handle massive data growth, consistently fast performance even under heavy load, and increased agility due to the flexible schemaless data model.

2. What are the primary challenges of MySQL to DynamoDB migration?

The core challenge lies in the fundamental difference between the two database types. MySQL is a relational database (RDBMS), while DynamoDB is a NoSQL database, requiring a shift in database design and data modeling approaches.

3. What are the different methods available for migrating data?

Common methods include using specialized tools like Estuary Flow for batch and real-time migrations and transformation, and using AWS DMS to copy data and replicate ongoing changes.

4. How do I design my DynamoDB tables to accommodate data from MySQL?

The key is understanding DynamoDB's concepts of partition keys and sort keys. Careful consideration is required to optimize read/write patterns based on how your application will query the data in DynamoDB.

5. Are there costs associated with the migration process?

The cost depends on the chosen method. Services like AWS DMS incur usage-based charges. Additionally, you might factor in costs for development time and any potential downtime during the migration.
