Extend Your Modern Data Stack to Leverage LLMs in Production

Discover the power of the modern data stack and its role in leveraging AI. Explore the essential components: data pipelines, vector stores, LLM endpoints, programming frameworks, as well as the key questions to ask for your AI journey.

Introduction

Is the modern data stack just a buzzword? Whether or not it is, it's indisputably an ambiguous concept, and perhaps everyone has their own definition of it.

Regardless, without it, you'll have a hard time leveraging LLMs, which everyone is going after these days.

A modern data stack is the bare minimum. Beyond that, you'll need a few more secret sauces if you don't already have them in your tech stack.

In this article, we will discuss what those secret sauces are and why the modern data stack is critical for leveraging AI. We will also cover the key data considerations to keep in mind on your AI journey. You may have innovative ideas on how LLMs can help your business soar, and asking the right questions is the first step in formulating a plan to get there!

The Old Way

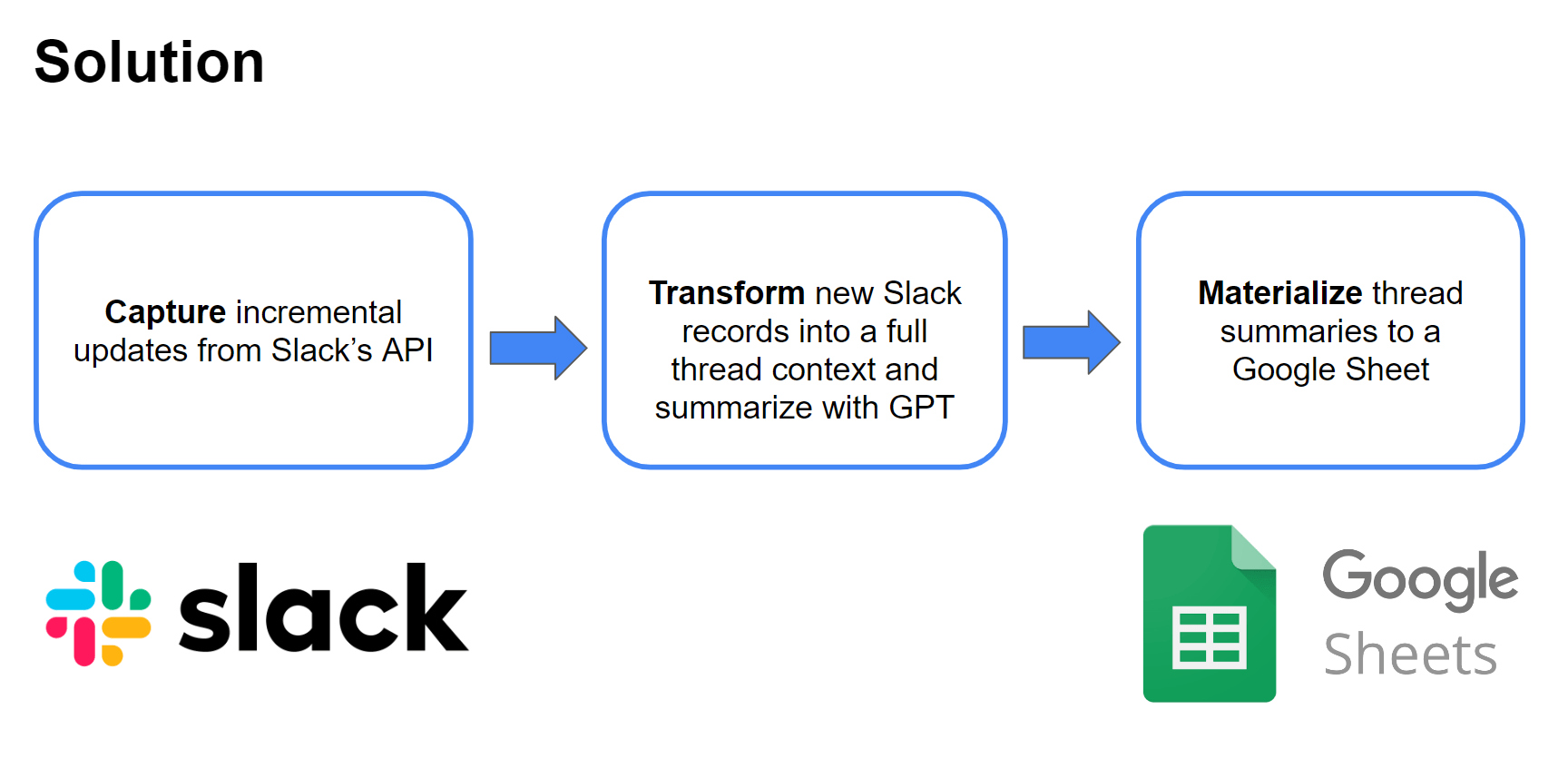

A not-so-long time ago in a galaxy not so far away, let's say you wanted to build an application that summarizes the Slack threads in your company, perhaps giving a one-line summary of every thread. Each thread is often a developing story that keeps getting new responses.

The idea sounds simple, but in the not-so-distant past, it was no small feat. It likely required Natural Language Processing (NLP) developers working with a tech stack optimized for NLP tasks such as text classification, Named Entity Recognition, and Named Entity Disambiguation. This tech stack likely consisted of a data preprocessing pipeline, a machine learning pipeline, and a variety of databases to store structured data and embeddings. The NLP developers would store the outputs in places like Elasticsearch and Postgres.[1]

Once the training data was in place, you likely needed data scientists to build and train the models, data engineers to help deploy them, and software engineers to build the application layer on top of that back-end machinery.

Even with a team of technical Jedi at your service, the whole architecture was difficult to set up and required large amounts of labeled data and no shortage of model fine-tuning. Not to mention, it was difficult to maintain and expensive to run: these architectures often chained dozens of models in a single pipeline.

To add to the pain, the most valuable data to feed into NLP models often came as heterogeneous document types that were difficult to work with. In that not-so-distant past, most data ingestion methods struggled to handle varied document layouts and data types, adding to the challenge.

The Modern LLM Data Stack

Today, this is no longer the case. With the revolutionary arrival of something almost as powerful as The Force (GPT), combined with recent advances in how data is stored and moved, the nearly impossible feat described above can now be done by one person in a couple of days.

Managed real-time ETL solutions such as Estuary Flow and managed vector databases such as Pinecone have made it that much easier to leverage Large Language Models (LLMs) and add vector search to production applications.

For the Slack use case, the new solution with the modern tech stack leveraging Estuary Flow can be found here.

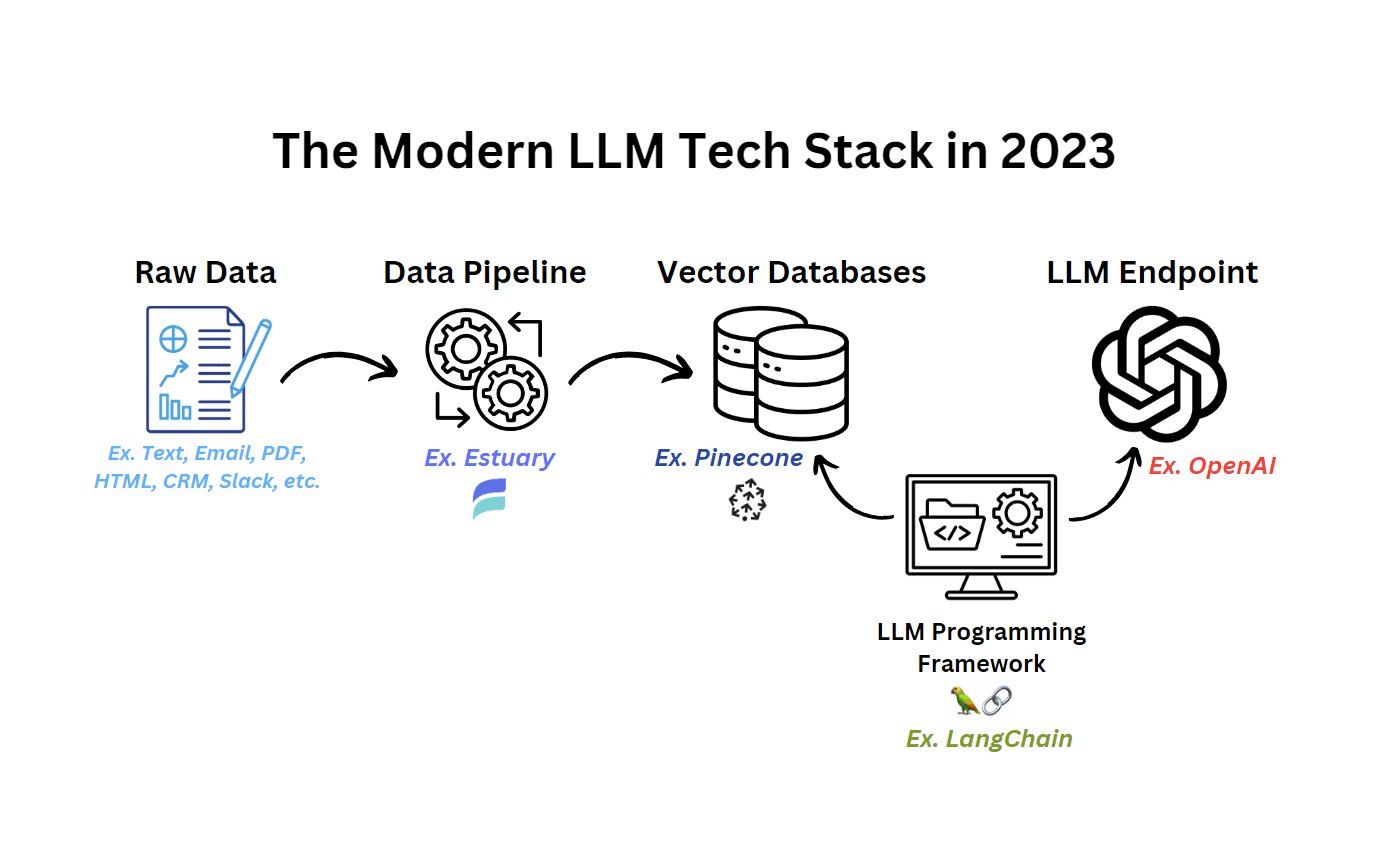

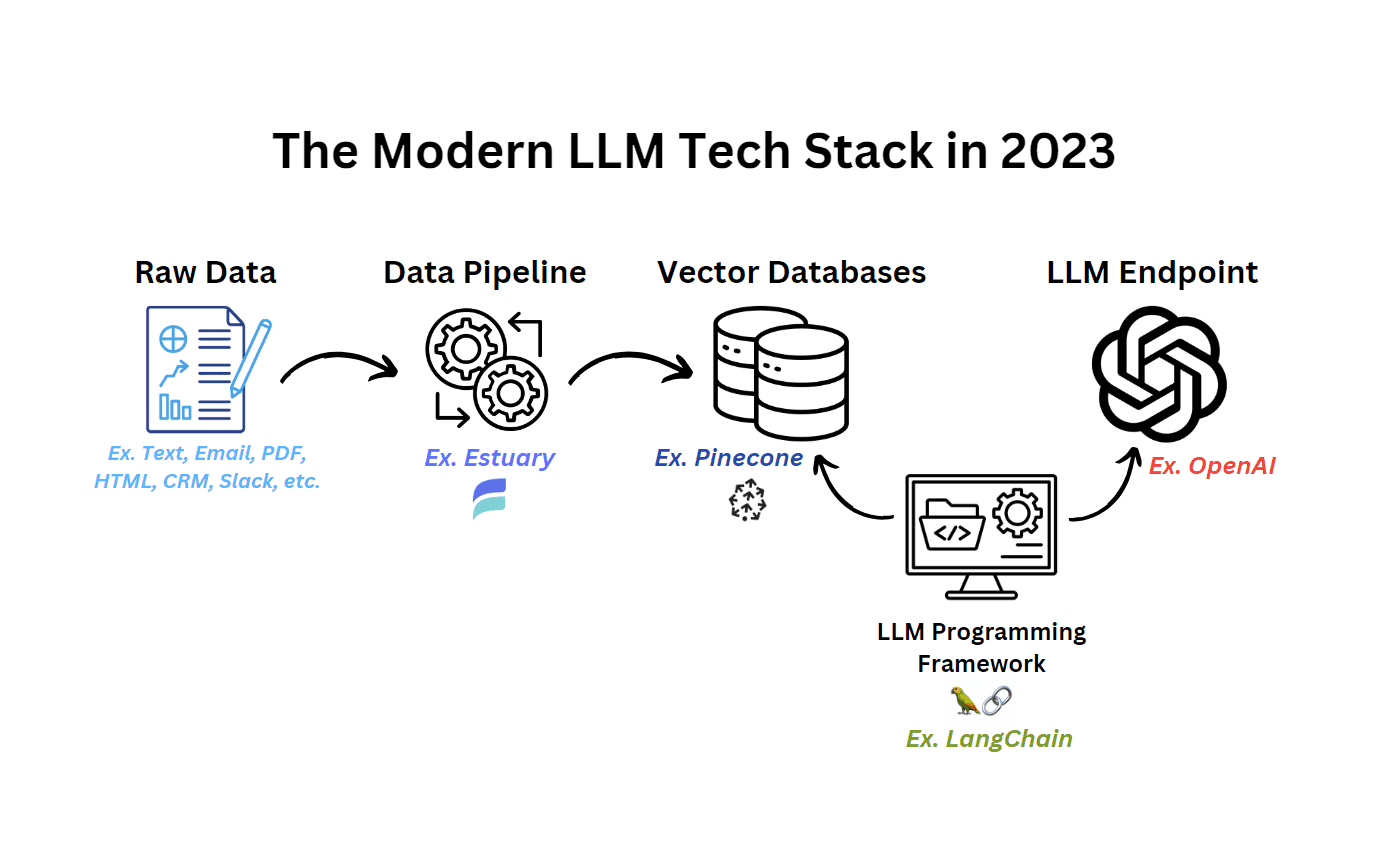

In a nutshell, the new stack in 2023 consists of four components:

- Data pipeline (e.g., Estuary)

- Vector stores for embeddings (e.g., Pinecone)

- LLM endpoint (e.g., OpenAI)

- LLM programming framework (e.g., LangChain)

Data Pipeline

The first component of the modern data stack is the data pipeline. This step involves ingesting data from various sources, transforming it, and connecting it to downstream systems. A managed, real-time ETL solution like Estuary Flow simplifies the processing of large, heterogeneous datasets and produces clean JSON for further analysis and storage.
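To make "clean JSON" concrete, here is a minimal Python sketch of the normalization a pipeline's transformation step might perform. It maps records from two differently shaped sources onto one common document schema. The sources are a Slack-style message and a CRM-style note, and every field name (`channel`, `ts`, `NoteId`, `Body`, `CreatedDate`) is a hypothetical assumption. This illustrates the idea only and is not Estuary Flow's API.

```python
import json
from datetime import datetime, timezone

# Hypothetical source shapes: all field names below are illustrative assumptions.
def from_slack(msg: dict) -> dict:
    """Map a Slack-style message onto the common document schema."""
    return {
        "id": f"slack:{msg['channel']}:{msg['ts']}",
        "source": "slack",
        "text": msg["text"].strip(),
        # Slack-style timestamps are epoch seconds stored as strings.
        "created_at": datetime.fromtimestamp(float(msg["ts"]), tz=timezone.utc).isoformat(),
    }

def from_crm(note: dict) -> dict:
    """Map a CRM-style call note onto the same schema."""
    return {
        "id": f"crm:{note['NoteId']}",
        "source": "crm",
        "text": note["Body"].strip(),
        "created_at": note["CreatedDate"],  # assumed to already be ISO 8601
    }

NORMALIZERS = {"slack": from_slack, "crm": from_crm}

def normalize(source: str, record: dict) -> str:
    """Return one clean JSON document, whatever the record's origin."""
    return json.dumps(NORMALIZERS[source](record), sort_keys=True)

if __name__ == "__main__":
    print(normalize("slack", {"channel": "C01", "ts": "1700000000.000100", "text": " Deploy done "}))
    print(normalize("crm", {"NoteId": 42, "Body": "Asked about pricing.", "CreatedDate": "2023-06-01T10:00:00+00:00"}))
```

Because every source is mapped to the same keys, downstream systems (a vector store, an LLM prompt) only need to understand one shape.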

Vector Stores for Embeddings

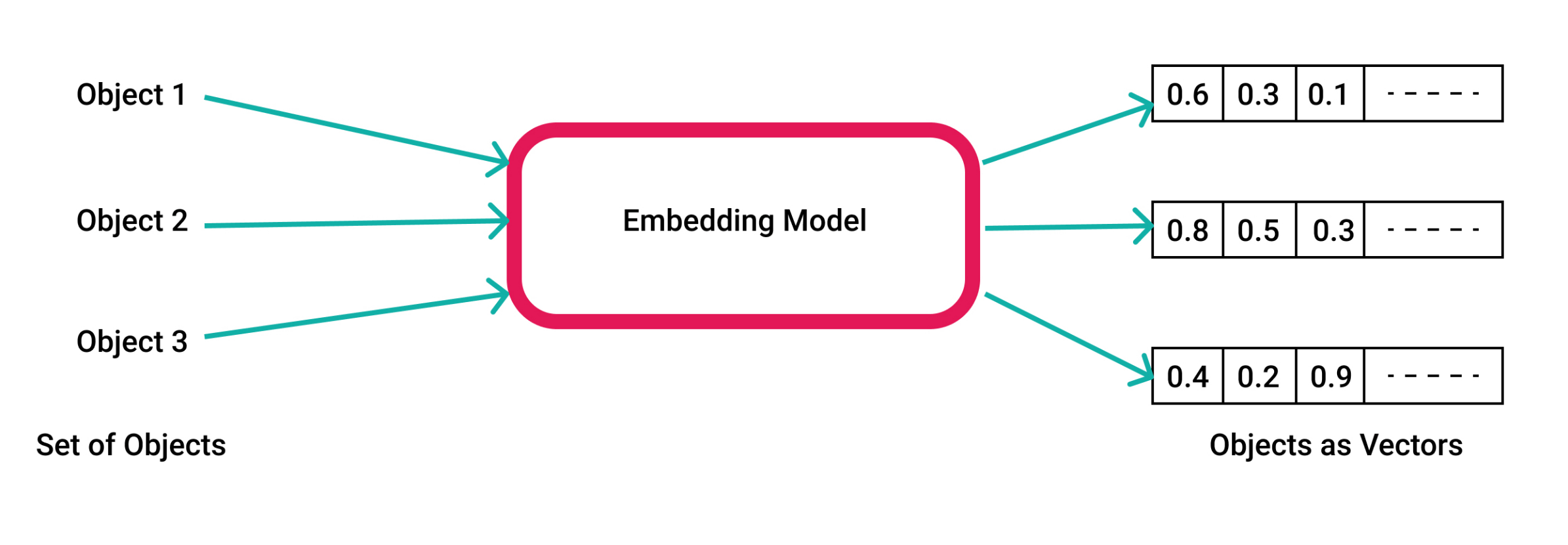

The use of embeddings and vector stores is a significant advancement in data storage and access.

Embeddings provide a compact and meaningful representation of data, enabling efficient storage and retrieval.

Image Source: Pinecone

Storing documents alongside their embeddings in a vector database lets an application retrieve the most relevant content quickly. This reduces the amount of data that has to be passed to the model at inference time.

Vector stores also enable advanced search techniques such as similarity search, and the stored embeddings can support transfer learning and fine-tuning.

Together, these capabilities improve the scalability, efficiency, and effectiveness of AI applications, changing how data is stored and how readily AI technologies can be put to use.

What used to be expensive to accomplish can now be done in a much more cost-effective way.
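Similarity search is the core operation a vector store provides. The toy in-memory class below sketches the idea with cosine similarity and brute-force ranking. Managed services such as Pinecone use approximate nearest-neighbor indexes to do this at scale. The class name and the tiny vectors are illustrative assumptions; real embeddings typically have hundreds or thousands of dimensions.

```python
import math
from typing import Optional

class InMemoryVectorStore:
    """A toy vector store: exact (brute-force) cosine-similarity search."""

    def __init__(self) -> None:
        self._items: dict[str, tuple[list[float], dict]] = {}

    def upsert(self, item_id: str, vector: list[float], metadata: Optional[dict] = None) -> None:
        # Writing an existing id overwrites it, mirroring upsert semantics.
        self._items[item_id] = (vector, metadata or {})

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        """Return the top_k (id, score) pairs, most similar first."""
        scored = [(item_id, self._cosine(vector, v)) for item_id, (v, _) in self._items.items()]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]

if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.upsert("refund-policy", [0.9, 0.1, 0.0], {"source": "notion"})
    store.upsert("holiday-schedule", [0.0, 0.2, 0.9], {"source": "notion"})
    print(store.query([1.0, 0.0, 0.0], top_k=1))
```

Brute force scans every vector on each query. That is fine for a demo, but it is exactly the cost a managed vector database avoids with its indexes.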

LLM Endpoint

The third component of the modern data stack is the LLM endpoint, the critical magic sauce that receives input data and generates intelligent output. The endpoint manages the model's resources, such as memory and compute, and provides a fault-tolerant, scalable interface for feeding output to downstream applications.

While LLM providers may offer various types of endpoints, here we are specifically referring to the text-generation endpoints, which expose a text field as input and a text field as output.

Today, these endpoints already power many emerging applications that would once have required traditional machine learning pipelines. A prime example is ChatGPT and the OpenAI API endpoint.
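"Text in, text out" can be seen directly at the HTTP level. The sketch below uses only Python's standard library to wrap a prompt in a request to OpenAI's chat-completions endpoint and to pull the generated text out of the response. It assumes a few things: the request and response shape (`model`, `messages`, `choices[0].message.content`) as documented at the time of writing, the model name `gpt-3.5-turbo`, and an API key you supply. In practice you would likely use the official SDK instead.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

def build_request(prompt: str, api_key: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Wrap a single text prompt in a chat-completions HTTP request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

def parse_response(payload: dict) -> str:
    """Extract the generated text from a chat-completions response body."""
    return payload["choices"][0]["message"]["content"]

def complete(prompt: str, api_key: str, model: str = "gpt-3.5-turbo", timeout: float = 30.0) -> str:
    """Text in, text out: send the prompt and return the model's reply (needs network access)."""
    with urllib.request.urlopen(build_request(prompt, api_key, model), timeout=timeout) as resp:
        return parse_response(json.loads(resp.read()))
```

Keeping request building and response parsing separate from the network call makes both easy to test without spending API credits.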

LLM Programming Framework

The final component of the new data stack is the LLM programming framework, a comprehensive set of tools and abstractions for developing applications with language models. LangChain, commonly used from Python, is one example of such a framework. These frameworks play a crucial role in orchestrating various components, including LLM providers, embedding models, vector stores, document loaders, and external tools.
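At its core, the orchestration such a framework provides is the wiring of components into a chain, where each step's output feeds the next. The plain-Python sketch below shows only that idea. It is not LangChain's API, and the `retrieve`, `to_prompt`, and `fake_llm` components are hypothetical stand-ins for a vector store lookup, a prompt template, and an LLM endpoint call.

```python
from typing import Any, Callable

Step = Callable[[Any], Any]

def chain(*steps: Step) -> Step:
    """Compose steps left to right: each step's output feeds the next."""
    def run(value: Any) -> Any:
        for step in steps:
            value = step(value)
        return value
    return run

# Hypothetical knowledge base standing in for a vector store.
KNOWLEDGE = {"vacation": "Sample policy text about vacation."}

def retrieve(question: str) -> dict:
    """Naive keyword lookup in place of a real similarity search."""
    hits = [text for key, text in KNOWLEDGE.items() if key in question.lower()]
    return {"question": question, "context": "\n".join(hits)}

def to_prompt(inputs: dict) -> str:
    """Fill a simple prompt template with the retrieved context."""
    return f"Context:\n{inputs['context']}\n\nQuestion: {inputs['question']}\nAnswer:"

def fake_llm(prompt: str) -> str:
    """Stand-in for an LLM endpoint: returns the first context line."""
    return prompt.split("\n")[1]

qa = chain(retrieve, to_prompt, fake_llm)

if __name__ == "__main__":
    print(qa("How much vacation do I get?"))
```

Swapping `fake_llm` for a real endpoint call, or `retrieve` for a vector store query, leaves the chain itself unchanged. That interchangeability is much of what a framework offers.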

The modern data stack is critical for leveraging AI

All of the above examples lead to one conclusion: to best leverage AI, it is critical to have a modern data stack.

In the second half of this article, I am going to discuss the points to consider when determining whether your data stack is compatible with leveraging AI, particularly LLMs.

It is indisputable that LLMs are powerful. In fact, when ChatGPT was given a verbal IQ test, it scored 155, higher than 99.9% of human test takers.[2]

You can hire a team of these high-IQ beings to increase your business's productivity in probably twenty different ways (modestly), at very little cost. AI has made this possible, so why hasn't everyone done it already?

In reality, there are two major challenges that prevent all businesses from taking over the world already:

1. You need access to a large amount of relevant data to train the AI models properly.

2. You need a scalable and easy-to-manage way to productionize your AI solution(s).

Built on the four components of the modern LLM data stack described above, a modern data stack can address both of these challenges and help a business leverage AI successfully.

Key Data Considerations for Your AI Journey

As businesses plunge headfirst into their AI journey, a key question to ask is: How are you managing your data?

What a loaded question.

Data management entails (at least) the following:

- How are you moving your data?

- How are you processing your data?

- How are you storing your data?

- How are you accessing your data?

Let’s tackle each of these.

How are you moving your data?

Moving data from a source to one or more destinations, or syncing data across multiple platforms, can be a complex data engineering operation.

Depending on the maturity of your data operations, how you move your data can pose a significant hurdle, hindering your AI implementation efforts. Does your AI solution have the data it needs to perform properly? Is the data up to date?

How are you processing your data?

If you aim to train LLMs or any other model, acquiring a substantial amount of data is essential. However, the manner in which you process and manipulate that data is equally crucial.

Real-time processing is important when it comes to feeding data to AI, because the timeliness of data processing directly affects AI performance. A modern data stack should be able to ingest and process data in real time or near real time for tasks that require immediate feedback and action.

Real-time data processing capabilities help ensure that AI systems can analyze, predict, and react to dynamic situations effectively.
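One way to picture real-time processing is to apply each change to a downstream view as it arrives, instead of re-running a batch job over the full dataset. The sketch below does this for a stream of change events. The event shape (`op`, `key`, `doc`) and the `upsert`/`delete` operation names are hypothetical assumptions, not the format of any particular tool.

```python
from typing import Iterable

def apply_changes(view: dict[str, dict], events: Iterable[dict]) -> dict[str, dict]:
    """Apply change events in order so the view reflects the latest source state.

    Event shape is a hypothetical assumption:
    {"op": "upsert" | "delete", "key": str, "doc": dict (upsert only)}
    """
    for event in events:
        if event["op"] == "upsert":
            view[event["key"]] = event["doc"]  # newest version wins
        elif event["op"] == "delete":
            view.pop(event["key"], None)  # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown op: {event['op']!r}")
    return view

if __name__ == "__main__":
    view: dict[str, dict] = {}
    apply_changes(view, [{"op": "upsert", "key": "thread-1", "doc": {"summary": "Bug reported"}}])
    apply_changes(view, [{"op": "upsert", "key": "thread-1", "doc": {"summary": "Bug fixed"}}])
    print(view)
```

Each event touches only one key, so the AI system sees an up-to-date view without waiting for a full reload.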

Additionally, data processing often involves data transformations. Machine learning fundamentally involves instructing machines to identify patterns and make predictions based on acquired knowledge. Raw data usually lacks the structure and formatting necessary to facilitate this process smoothly.

Because of that, significant efforts are often required to transform extensive datasets into a consistent format that aligns with the intended purpose. This transformation includes tasks such as modifying, cleansing, removing duplicates, and potentially undertaking other operations based on the initial condition of the raw data.
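As a small illustration of cleansing and removing duplicates, the sketch below normalizes whitespace, drops records with no usable text, and removes exact duplicates by hashing the normalized, lowercased text. The `text` field name is an assumption, and real pipelines often need fuzzier duplicate detection than this.

```python
import hashlib
import re

def cleanse(records: list[dict]) -> list[dict]:
    """Normalize whitespace, drop empty texts, and remove exact duplicates.

    Duplicates are detected by hashing the normalized, lowercased text;
    the first occurrence is kept and later copies are dropped.
    """
    seen: set[str] = set()
    cleaned = []
    for record in records:
        text = re.sub(r"\s+", " ", record.get("text") or "").strip()
        if not text:
            continue  # nothing useful for the model
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        cleaned.append({**record, "text": text})
    return cleaned

if __name__ == "__main__":
    rows = [{"id": 1, "text": "  Hello   world "}, {"id": 2, "text": "hello world"}, {"id": 3, "text": ""}]
    print(cleanse(rows))
```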

Most existing ETL platforms handle either moving data or transforming data, but not both. For example, Fivetran focuses on moving data, so customers who need transformation capabilities pair it with a partner tool such as dbt to carry out SQL transformations. Estuary Flow, on the other hand, has native capabilities to do both.

Flow offers dozens of pre-built connectors that move data from multiple sources to multiple destinations in real time, and you can easily apply SQL or TypeScript transformations to your data without stitching multiple tools together.
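To show what an SQL transformation of pipeline data can look like, here is a sketch that uses Python's built-in `sqlite3` as a stand-in SQL engine. It aggregates hypothetical deal records into per-rep open pipeline totals. This is not Flow's derivation syntax, and the table, column, and stage names are assumptions for illustration.

```python
import sqlite3

def summarize_deals(rows: list[tuple[str, str, float]]) -> list[tuple[str, int, float]]:
    """Run an SQL aggregation over (rep, stage, amount) rows.

    sqlite3 stands in for whatever SQL engine your pipeline uses; table and
    column names are hypothetical.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE deals (rep TEXT, stage TEXT, amount REAL)")
        conn.executemany("INSERT INTO deals VALUES (?, ?, ?)", rows)
        return conn.execute(
            """
            SELECT rep, COUNT(*) AS open_deals, SUM(amount) AS pipeline
            FROM deals
            WHERE stage != 'closed'
            GROUP BY rep
            ORDER BY pipeline DESC
            """
        ).fetchall()
    finally:
        conn.close()

if __name__ == "__main__":
    print(summarize_deals([("ana", "proposal", 1000.0), ("ben", "demo", 2500.0)]))
```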

How are you storing your data?

Unlike traditional databases that primarily focus on structured or tabular data, vector databases like Pinecone are optimized for storing and retrieving vector embeddings.

Is your current data infrastructure compatible with vector databases? And do you have the ability to move data between vector databases and other systems that hold your organization’s data such as CRMs, Slack, or any other institutional knowledge base?

Adding Pinecone to your tech stack is a good move here. Pinecone is a managed and scalable vector database that makes it easy to build high-performance vector search applications, and vector search is a crucial part of leveraging LLMs.

Flow’s pre-built Pinecone connector allows you to move data from several dozen data sources to a Pinecone database, which can then be leveraged by your LLMs, enhancing the capabilities of your AI applications.
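Whichever tool moves the data, each document eventually lands in Pinecone as a record with an `id`, a vector of `values`, and optional `metadata`, usually upserted in batches. The sketch below only prepares those records and batches; it does not call the Pinecone client. The document fields and the batch size of 100 are assumptions, so check Pinecone's documentation for current request limits.

```python
from itertools import islice
from typing import Iterable, Iterator

def to_vector_record(doc: dict, embedding: list[float]) -> dict:
    """Shape a document and its embedding as an id/values/metadata record."""
    return {
        "id": doc["id"],
        "values": embedding,
        # Keep the text in metadata so retrieved matches can be fed to the LLM.
        "metadata": {"source": doc["source"], "text": doc["text"]},
    }

def batched(records: Iterable[dict], size: int = 100) -> Iterator[list[dict]]:
    """Yield lists of at most `size` records for batched upserts."""
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch
```

Each batch would then be passed to the upsert call of the Pinecone client you use; consult the client's documentation for its exact signature.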

How are you accessing your data?

AI solutions are complex and often require cross-discipline collaboration. This is why the ease of access to your organization’s data is important.

A modern data stack should enable team members to access and share data readily. By promoting collaboration among data scientists, data engineers, software engineers, business SMEs and other stakeholders, you can create an environment to support rapid experimentation and encourage the development of innovative AI solutions. Empowering team members to access the data they need quickly also contributes to overall productivity and efficiency.

Another critical step to improve ease of access to data is removing data silos. In organizations with diverse systems and applications, data is often stored in isolated silos. Extracting data from these silos and consolidating it into a unified format can be a complex and time-consuming process.

Having functional and low-maintenance data pipelines helps remove data silos in your organization, so you can create AI solutions without organizational friction.

How are you productionizing your AI solution?

There’s no lack of toy solutions out there demonstrating proof-of-concepts, or what could theoretically work, but there can be a big difference between that and a production-grade, scalable solution.

Some things to consider on this front include:

- Ease of implementation

- Low maintenance

- Scalability

While you may have the expertise and talent to build and manage data pipelines in house, it is crucial to consider whether this is the best use of your resources. Building and maintaining pipelines yourself can create unnecessary bottlenecks.

This becomes especially important when you consider the consequences of pipeline failures on your AI solutions. Inaccurate or unreliable data can undermine the output of predictive modeling, which is why it’s important to have a solid data pipeline and reliable way to move data between places.

This is where a managed solution can help automate your data pipelines in a scalable and low-maintenance way.
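Whether you build or buy, two basic safeguards against pipeline failures are worth illustrating. The first is validating records before they reach the model. The second is retrying transient errors with backoff, rather than failing silently or hammering a struggling service. The sketch below shows both. The required fields, the retry counts, and the broad `except Exception` are assumptions you would tune, and narrowing the exception to your transient error types is advisable.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

REQUIRED_FIELDS = ("id", "text")  # hypothetical schema for records bound for the model

def is_valid(record: dict) -> bool:
    """Reject records missing required, non-blank string fields."""
    return all(isinstance(record.get(f), str) and record[f].strip() for f in REQUIRED_FIELDS)

def with_retries(fn: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on exceptions with exponential backoff plus jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:  # narrow this to transient error types in practice
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure instead of hiding it
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    raise AssertionError("unreachable")
```

The `sleep` parameter exists so the backoff can be tested without actually waiting.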

More LLM Examples

In case you need some brainstorming juices, here are three more examples of AI solutions leveraging the modern data stack to maximize the power of LLMs.

Example 1: Use ChatGPT on your own data

- Teach ChatGPT new knowledge from Notion, which holds a custom, company-specific knowledge base.

- Transform the data within the data pipeline, then materialize the transformed data to a vector database in Pinecone.

- Call the OpenAI API to leverage its LLM.

- Lastly, use LangChain to carry out retrieval-augmented question answering.

See the detailed walkthrough of the solution here.
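The retrieval-augmented step in this example boils down to placing the most relevant retrieved chunks into the prompt alongside the question. The sketch below assembles such a prompt with numbered sources and a rough size budget. It is not LangChain's implementation, and the chunk shape (`text`, `source`) and the character-based budget (a crude proxy for tokens) are assumptions.

```python
def build_rag_prompt(question: str, chunks: list[dict], max_chars: int = 3000) -> str:
    """Assemble a retrieval-augmented prompt from retrieved chunks.

    `chunks` are assumed to be vector-store matches, already sorted by
    relevance, each with "text" and "source" keys (hypothetical shape).
    """
    context_lines, used = [], 0
    for i, chunk in enumerate(chunks, start=1):
        line = f"[{i}] ({chunk['source']}) {chunk['text']}"
        if used + len(line) > max_chars:
            break  # stay within a rough context budget
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "Cite sources by their [number]. If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

The resulting string is what gets sent to the LLM endpoint. Instructing the model to answer only from the context is what grounds it in your Notion data.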

Example 2: Use ChatGPT to give personalized feedback

In this example, the goal is to help a sales team close more deals and increase revenue by analyzing call notes from a CRM, then giving personalized recommendations to each sales rep based on the call notes. This solution also utilizes Estuary Flow, Pinecone, OpenAI, and LangChain. See the detailed walkthrough of the solution here.
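To personalize the feedback, call notes first need to be grouped by rep and turned into one prompt per rep. The sketch below shows that step. The note fields (`rep`, `date`, `text`), the ISO date format, and the prompt wording are hypothetical assumptions, not the walkthrough's actual code.

```python
from collections import defaultdict

def prompts_per_rep(notes: list[dict], max_notes: int = 5) -> dict[str, str]:
    """Group CRM call notes by sales rep and build one feedback prompt per rep.

    Note fields ("rep", "date", "text") are hypothetical; ISO dates sort
    correctly as strings, so the newest notes come first.
    """
    by_rep: dict[str, list[dict]] = defaultdict(list)
    for note in notes:
        by_rep[note["rep"]].append(note)
    prompts = {}
    for rep, rep_notes in by_rep.items():
        recent = sorted(rep_notes, key=lambda n: n["date"], reverse=True)[:max_notes]
        bullet_list = "\n".join(f"- {n['date']}: {n['text']}" for n in recent)
        prompts[rep] = (
            f"You are a sales coach. Based on {rep}'s recent call notes below, "
            "give three specific, actionable recommendations to help close more deals.\n\n"
            f"{bullet_list}"
        )
    return prompts
```

Each prompt would then be sent to the LLM endpoint, optionally with relevant examples retrieved from the vector store.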

Example 3: Use ChatGPT for sales conversations: Build a smart dashboard

Here is another example of using ChatGPT for sales, in a different way. This time, ChatGPT monitors active HubSpot sales conversations, draws conclusions about sentiment, stage, and the likelihood of closing a sale, and displays them in a compact dashboard in Google Sheets. See the detailed walkthrough of the solution here.
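A dashboard like this depends on turning free-form model output into reliable structured rows. One common approach is to ask the model to reply in JSON and then validate the reply before writing it anywhere. The sketch below does that. The JSON keys, the allowed stage and sentiment values, and the row layout are assumptions for illustration.

```python
import json

STAGES = {"discovery", "demo", "proposal", "negotiation", "closed"}  # hypothetical stage names
SENTIMENTS = {"positive", "neutral", "negative"}

def to_dashboard_row(conversation_id: str, llm_output: str) -> list:
    """Validate the model's JSON verdict and flatten it into a spreadsheet row.

    Assumes the prompt asked for JSON such as
    {"sentiment": "positive", "stage": "demo", "close_probability": 0.4}.
    Raises ValueError on anything else, so bad rows never reach the sheet.
    """
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model reply is not JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("model reply is not a JSON object")
    sentiment = str(data.get("sentiment", "")).lower()
    stage = str(data.get("stage", "")).lower()
    prob = data.get("close_probability")
    if sentiment not in SENTIMENTS or stage not in STAGES:
        raise ValueError(f"unexpected sentiment/stage: {sentiment!r}, {stage!r}")
    if isinstance(prob, bool) or not isinstance(prob, (int, float)) or not 0 <= prob <= 1:
        raise ValueError(f"close_probability out of range: {prob!r}")
    return [conversation_id, sentiment, stage, round(float(prob), 2)]
```

Validated rows could then be appended to the sheet, for example through the Google Sheets API's `spreadsheets.values.append` method.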

Conclusion

Data lies at the core of AI's vast possibilities. Imagine extensive reserves of unrefined oil, waiting to be transformed into fuel that drives numerous industries. Even in the age of AI, one thing hasn’t changed: data is still the new oil. Meticulous extraction, processing, and utilization of data are vital to unlock the full potential of AI.

Businesses that effectively harness data position themselves to soar higher in terms of agility, innovation, and decision-making. In the realm of AI, data isn't just a valuable resource; it is the propellant that launches you into new territories with new opportunities.

However, like any fuel, the right infrastructure is essential to ensure its safe and efficient utilization.

Are you ready to leverage the full power of AI and LLMs? And is your tech stack prepared for you to do so? If not, you may soon find yourself scrambling to catch up with competitors.

To build the example LLM use cases mentioned in this article yourself, try Estuary Flow for free today. Join us on Slack, let us know what you think, and share any other innovative use case ideas you have!

References:

1. "LLMs and the Emerging ML Tech Stack," Medium (@unstructured-io): https://medium.com/@unstructured-io/llms-and-the-emerging-ml-tech-stack-bdb189c8be5c

2. "I Gave ChatGPT an IQ Test. Here's What I Discovered," Scientific American: https://www.scientificamerican.com/article/i-gave-chatgpt-an-iq-test-heres-what-i-discovered/
