Managing Apache Kafka is hard enough on its own. But learning that you have to connect Kafka data to another system is enough to throw a wrench in any data professional’s week. So if you need to build a Kafka to BigQuery pipeline, you’ll be happy to hear that we have a shortcut for you.

This article covers three methods to migrate data from Apache Kafka to BigQuery. Some are easier than others, but we’ll provide details on all three so you can choose which method works best for you.

But first, some obligatory introductory content (we know you already know… it’s for the algorithm).

Ready to dive into the methods? Click here to jump straight to the step-by-step guide for migrating data from Kafka to BigQuery.

What is Apache Kafka?

Apache Kafka is a distributed streaming platform initially developed at LinkedIn to handle real-time data feeds. It was later open-sourced through the Apache Software Foundation. Kafka is a popular tool for building real-time streaming data pipelines and real-time streaming applications.

Kafka’s core is designed to handle large volumes of data in real time. Its distributed architecture consists of multiple nodes or brokers—each responsible for storing and serving data for a subset of topics. Kafka can handle data streams from various sources and deliver them to multiple consumers.

You can use Kafka’s reliable data pipelines to process data from various sources incrementally and sequentially. Kafka can handle both online and offline message consumption. It persists all messages to disk and replicates them across brokers in the cluster to prevent data loss.

Some of the Apache Kafka use cases include:

- Process streams of data in real time.

- Publish and subscribe to streams of records.

- Store streams of records accurately in a fault-tolerant and durable way.

- Combine with other technologies to perform supporting analytics on data streams.

What is Google BigQuery?

Google BigQuery is a serverless and fully managed cloud-native data warehouse. Since it’s a serverless platform, you don’t need to manage infrastructure or perform maintenance tasks. It is a powerful tool for organizations to store, analyze, and process massive amounts of data in real time. You can use it to analyze large volumes of data using SQL queries.

BigQuery is a columnar-based storage system. So, it can store and analyze petabytes of data at scale efficiently. As a result, you can perform complex analyses on large datasets without worrying about performance issues.

BigQuery also supports partitioning and clustering. This helps reduce costs and speed up query performance by minimizing the amount of data to be scanned.
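To make partitioning and clustering a little more concrete, here is a minimal sketch that builds a BigQuery `CREATE TABLE` statement with both options. The table name, column names, and the helper function are hypothetical examples, not something BigQuery prescribes. Treat it as an illustration under those assumptions.

```python
def build_partitioned_table_ddl(table, columns, partition_column, cluster_columns):
    """Return a CREATE TABLE statement that partitions by day and clusters rows.

    `columns` maps column names to BigQuery types. Every name used in the
    example call below is a hypothetical placeholder.
    """
    column_sql = ",\n  ".join(f"{name} {dtype}" for name, dtype in columns.items())
    return (
        f"CREATE TABLE `{table}` (\n  {column_sql}\n)\n"
        f"PARTITION BY DATE({partition_column})\n"
        f"CLUSTER BY {', '.join(cluster_columns)}"
    )


ddl = build_partitioned_table_ddl(
    table="my_project.analytics.kafka_events",  # hypothetical table
    columns={"event_ts": "TIMESTAMP", "user_id": "STRING", "payload": "STRING"},
    partition_column="event_ts",
    cluster_columns=["user_id"],
)
print(ddl)
```

Queries that filter on `event_ts` can then skip whole partitions, and filters on `user_id` scan fewer blocks within each partition.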

Need for Kafka to BigQuery Migration

The Kafka platform helps you build real-time data applications and pipelines. On the other hand, BigQuery helps modernize your IT landscape and meets your growing analytics needs.

When you establish a connection from Kafka to BigQuery, you get many benefits. Firstly, it enables real-time data processing, meaning you can analyze and act on data as it is generated. This will allow you to obtain valuable insights and enable faster decision-making. A common use case in the finance industry is where real-time data processing helps identify fraudulent activities.

Secondly, Kafka to BigQuery migration provides scalability. Organizations must ensure their data processing systems can handle their increasing data loads. Since Kafka and BigQuery are both highly scalable platforms, you can handle large volumes of data without any performance issues. While Kafka can handle millions of messages per second, BigQuery can handle petabytes of data. By connecting these two systems, you can scale your data processing systems as data volumes grow.

Finally, migrating from Kafka to BigQuery provides cost-effectiveness. With the open-source Kafka platform, you won’t incur any licensing costs. And BigQuery is based on a pay-as-you-go pricing model, meaning you only pay for the data processed. When you integrate the two platforms, you only pay for the data you process and analyze. This helps reduce your overall costs.

Kafka to BigQuery Migration Methods

Now that you're 100% clear on why it’s helpful to connect Kafka to BigQuery (if you weren’t already), here’s how to implement it.

Method #1: Using Custom Code

You can build a custom-coded data pipeline between Kafka and BigQuery. To do this, you must make provisions for:

- Streaming data from Apache Kafka

- Ingesting data into Google BigQuery

We won’t sugar-coat it… this is the hard method, and probably what you were hoping to avoid when you found this article. Still, we’ll discuss the basic steps.

1. Streaming Data From Apache Kafka

To stream data from Kafka, you’d typically start with an open-source framework like Kafka Connect or Apache Beam.

Kafka Connect is a free, open-source Kafka component that allows you to stream data in and out of Kafka. It achieves this through Source and Sink connectors, which also deal with the redundancy, robustness, and scale of your system. A Source connector ingests whole databases and streams table updates to Kafka topics. A Sink connector continuously consumes data from Kafka topics and streams it to external storage locations within seconds.

However, Kafka Connect has no inbuilt connector for BigQuery. You’ll have to use third-party tools for this purpose.
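If you go the third-party route, you register the sink connector through the Kafka Connect REST API (`POST /connectors`). The sketch below builds that request with the Python standard library. The connector class and the `project`, `defaultDataset`, and `keyfile` settings are assumptions based on the open-source BigQuery sink connector originally published by WePay. Check the documentation of whichever connector you install, since setting names vary between versions.

```python
import json
import urllib.request


def build_sink_connector_payload(name, topics, project, dataset, keyfile):
    """Build the JSON body for POST /connectors on a Kafka Connect worker."""
    return {
        "name": name,
        "config": {
            # Assumed third-party connector class and settings; verify against
            # the docs of the connector version you actually deploy.
            "connector.class": "com.wepay.kafka.connect.bigquery.BigQuerySinkConnector",
            "tasks.max": "1",
            "topics": ",".join(topics),
            "project": project,
            "defaultDataset": dataset,
            "keyfile": keyfile,
        },
    }


def register_connector(connect_url, payload):
    """POST the payload to the Connect worker and return the HTTP status code."""
    request = urllib.request.Request(
        f"{connect_url}/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status


payload = build_sink_connector_payload(
    name="bq-sink",                      # hypothetical connector name
    topics=["orders", "clicks"],         # hypothetical topics
    project="my-project",
    dataset="analytics",
    keyfile="/secrets/key.json",
)
# register_connector("http://localhost:8083", payload)  # needs a running worker
```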

Apache Beam is another open-source tool you can use to stream data from Kafka. It is a unified programming model for defining and executing data processing pipelines, with both batch and stream processing capabilities. When you run it on Google Cloud Dataflow, one of its distributed processing backends, your code is executed as a cloud job. Since Dataflow is fully managed and auto-scaled, you do not need to focus on how the job gets executed.

2. Ingesting Data Streamed From Kafka Into Google BigQuery

You can either use the BigQuery API or batch load files staged in Google Cloud Storage to ingest data into BigQuery. Ensure you have the following before you start migrating to BigQuery from Kafka:

- Write access to the dataset containing your destination table

- Enable billing for your GCP account

- Check quota policy for BigQuery data streaming

You can use the BigQuery API to create, manage, share, and query data in Google BigQuery directly. Streaming inserts are subject to quotas (historically on the order of 100k rows per second per project), so check the current limits before you size your pipeline. Enable the BigQuery API so that a Kafka Streams application can move the data you extracted with Kafka Connect or Apache Beam into BigQuery. Kafka Streams is an open-source library you can use to build scalable streaming applications on top of Kafka. It is built on the Kafka client libraries and greatly simplifies stream processing from Kafka topics.
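Here is a rough sketch of the streaming path. Kafka Streams itself is a Java library, so this Python version swaps in a plain consumer from the `kafka-python` package and sends rows with `insert_rows_json` from `google-cloud-bigquery`. It assumes each message value is a UTF-8 JSON object whose keys match the table’s columns. The topic, table ID, and batch size are placeholders.

```python
import json


def decode_message(raw_value):
    """Decode one Kafka message value (UTF-8 JSON bytes) into a row dict."""
    return json.loads(raw_value.decode("utf-8"))


def batched(rows, batch_size):
    """Yield lists of at most `batch_size` rows so each insert request stays small."""
    batch = []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def stream_topic_to_bigquery(topic, bootstrap_servers, table_id, batch_size=500):
    """Consume a topic and stream its rows into BigQuery until interrupted."""
    # Third-party imports live here so the helpers above work without them.
    from kafka import KafkaConsumer    # pip install kafka-python
    from google.cloud import bigquery  # pip install google-cloud-bigquery

    client = bigquery.Client()
    consumer = KafkaConsumer(topic, bootstrap_servers=bootstrap_servers)
    rows = (decode_message(message.value) for message in consumer)
    for batch in batched(rows, batch_size):
        errors = client.insert_rows_json(table_id, batch)
        if errors:
            raise RuntimeError(f"BigQuery rejected rows: {errors}")
```

Note that this sketch only flushes once a batch is full, so a quiet topic will delay delivery. A production version would also flush on a timer and commit offsets only after a successful insert.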

Alternatively, you can use a tool like Secor to deliver data from Kafka into object storage systems like GCS. Then, you can load the data into BigQuery with a load job, manually using the BigQuery UI, or through the bq command-line tool.
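The load-job route might look something like the sketch below. It assumes the files that landed in GCS are newline-delimited JSON (your delivery tool may be configured for a different format) and uses `load_table_from_uri` from the `google-cloud-bigquery` package. The bucket path and table ID you pass in are up to you.

```python
import json


def to_ndjson(rows):
    """Serialize row dicts as newline-delimited JSON, one object per line."""
    return "\n".join(json.dumps(row, sort_keys=True) for row in rows) + "\n"


def load_from_gcs(gcs_uri, table_id):
    """Run a BigQuery load job over files already delivered to Cloud Storage."""
    from google.cloud import bigquery  # pip install google-cloud-bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.NEWLINE_DELIMITED_JSON,
        write_disposition=bigquery.WriteDisposition.WRITE_APPEND,
    )
    load_job = client.load_table_from_uri(gcs_uri, table_id, job_config=job_config)
    load_job.result()  # Block until the load job finishes or raises.
    return load_job.output_rows


sample = to_ndjson([{"b": 2, "a": 1}, {"a": 3}])
# load_from_gcs("gs://my-bucket/kafka/orders/*.json", "my-project.analytics.orders")
```

The `to_ndjson` helper is only there to show the file format a load job with `NEWLINE_DELIMITED_JSON` expects. Load jobs are batch operations, so data arrives in BigQuery only as often as you schedule them.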

Method #2: Using Google Dataflow to Migrate Data From Kafka to BigQuery

The custom code method makes real-time data streaming hard to get right, especially if you rely on batch loads. It also requires a lot of maintenance. A better alternative is to use Google Cloud Dataflow for data migration from Kafka to BigQuery.

Dataflow is a fully-managed, serverless streaming analytics service that supports both batch and stream processing. You can use Dataflow to read events from Kafka, process them and write them into a BigQuery table for further analysis.

You can configure a Kafka-to-BigQuery pipeline by using the Kafka-to-BigQuery Dataflow template. It is one of a collection of pre-built templates that Dataflow provides, with an option to create your own. This template uses the BigQueryIO connector provided in the Apache Beam SDK.

You can create your pipeline with the Apache Beam SDK using Java or Python. Once you’ve created your pipeline, you can use Dataflow to deploy and execute it, which creates a Dataflow job. Dataflow then assigns worker VMs to execute the data processing. All infrastructure provisioning and cluster management are automated by Dataflow.
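If you write the pipeline yourself, a minimal Python version could look like the sketch below. It uses `ReadFromKafka` and `WriteToBigQuery` from the Apache Beam SDK and assumes message values are UTF-8 JSON objects matching the table schema. This is not the template’s own source code, and the argument values you pass in are placeholders. `ReadFromKafka` is a cross-language transform in the Python SDK, so it also needs a Java runtime available.

```python
import json


def kafka_record_to_row(record):
    """Convert a (key, value) pair of bytes from ReadFromKafka into a row dict."""
    _key, value = record
    return json.loads(value.decode("utf-8"))


def run(bootstrap_servers, topic, table, schema, pipeline_args=None):
    """Build and run a streaming pipeline from one Kafka topic to one table."""
    import apache_beam as beam  # pip install "apache-beam[gcp]"
    from apache_beam.io.kafka import ReadFromKafka
    from apache_beam.options.pipeline_options import PipelineOptions

    options = PipelineOptions(pipeline_args or [], streaming=True)
    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            | "ReadKafka" >> ReadFromKafka(
                consumer_config={"bootstrap.servers": bootstrap_servers},
                topics=[topic],
            )
            | "ToRow" >> beam.Map(kafka_record_to_row)
            | "WriteBigQuery" >> beam.io.WriteToBigQuery(
                table,
                schema=schema,  # e.g. "id:INTEGER,status:STRING"
                write_disposition=beam.io.BigQueryDisposition.WRITE_APPEND,
                create_disposition=beam.io.BigQueryDisposition.CREATE_NEVER,
            )
        )
```

To execute it on Dataflow rather than locally, you would pass runner options such as `--runner=DataflowRunner`, plus your project, region, and temp location, through `pipeline_args`.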

The Dataflow streaming engine separates storage from compute. It moves certain pipeline execution parts out of the worker VMs and into the Dataflow service backend. This results in improved data latency and autoscaling.

You can use the template by following these steps:

Step 1: Deploy Kafka

If you haven’t done so yet, you can deploy a Kafka cluster on Compute Engine VM instances or use a third-party managed Kafka service.

Step 2: Network Configuration

Dataflow launches instances within your default Virtual Private Cloud (VPC) network unless you specify otherwise. You may need to configure a different network and subnet for Dataflow, depending on your Kafka configuration.

If your Kafka cluster is deployed outside of Google Cloud, you must create a network connection between the Kafka cluster and Dataflow.

Step 3: Set IAM Permissions

The two Identity and Access Management (IAM) service accounts used by Dataflow jobs are the Dataflow service and worker service accounts. The worker service account needs write access to the BigQuery output table, among other access permissions. You must ensure these two service accounts have appropriate permissions.

Step 4: Write a Function to Transform Event Data

The Kafka-to-BigQuery Dataflow template creates a pipeline that can read events from one or more Kafka topics. It then writes them into a BigQuery table. You can also provide a JavaScript user-defined function to transform the event data before it’s written into BigQuery. The pipeline output must be JSON-formatted data to match the output table’s schema.

Step 5: Create a BigQuery Output Table

Before you run the template, you must create the BigQuery output table. Your table schema must be compatible with the pipeline’s JSON output. The pipeline writes the value of each property in the JSON payload to the BigQuery table column of the same name. Missing properties are interpreted as NULL values.
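The name-based mapping described above can be illustrated with a few lines of Python. This only mimics the behavior for explanation and is not the template’s code. The column names are made up, and the way it silently ignores extra properties is an assumption of this sketch.

```python
import json


def payload_to_row(json_payload, column_names):
    """Map a JSON payload onto table columns by name; missing properties become None."""
    payload = json.loads(json_payload)
    return {name: payload.get(name) for name in column_names}


# The payload has no "amount" property, so that column ends up as None (NULL).
row = payload_to_row('{"id": 42, "status": "paid"}', ["id", "status", "amount"])
print(row)  # {'id': 42, 'status': 'paid', 'amount': None}
```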

Step 6: Deploy the Kafka to BigQuery Dataflow Template

Run the Kafka to BigQuery Dataflow template after you create the BigQuery table. Use the Google Cloud console or CLI for this purpose.

Method #3: SaaS Alternatives

SaaS platforms that provide support for Kafka to BigQuery migration can be used instead of the manual method or Dataflow. With built-in connectors available in SaaS tools, you can complete the migration in a matter of minutes.

Consider using Estuary Flow to achieve Kafka to BigQuery migration. You can use the Apache Kafka connector to capture streaming data from Apache Kafka topics. Flow also has a connector for Google BigQuery that materializes data collections into tables within a BigQuery dataset. This is a real-time connector that materializes data using continuous transactions. When you deploy the pipeline, the data moves from Kafka to BigQuery in real time.

Benefits of Using Estuary Flow:

- It provides a hassle-free solution using its Kafka and BigQuery connectors. The migration takes only minutes to set up with an easy-to-use interface.

- It completely automates loading data from Kafka, enriching it, and transforming it into an analysis-ready format.

- The pre-built connectors handle the database connection, allowing you to focus on key business processes.

Here’s a step-by-step guide to use Estuary for Kafka to BigQuery migration:

Step 1: Capture the data from your source

Sign in to your Estuary account. If you don’t have one, sign up for free.

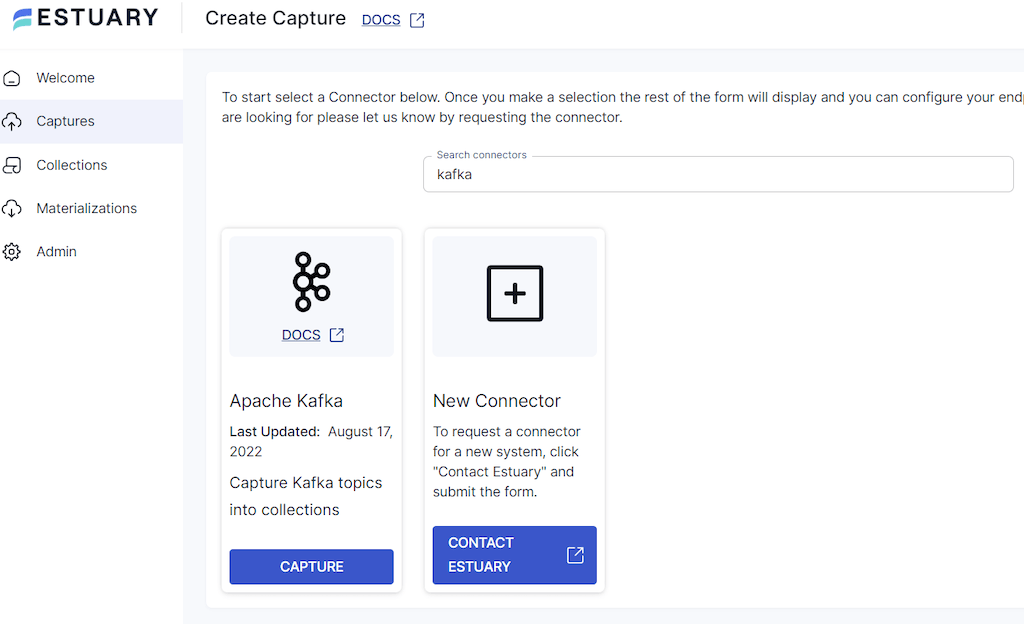

Click on Captures. Next, click on the New Capture button.

Search for the Kafka connector.

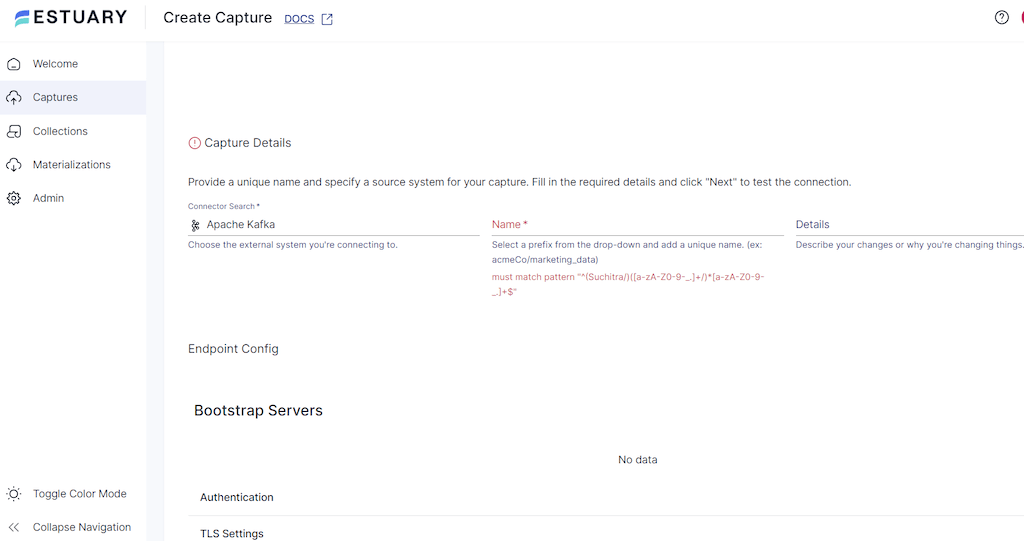

After locating the Kafka connector, click on Capture. You’ll be redirected to the Kafka capture page. Give your capture a name. One of the prerequisites for Kafka to BigQuery migration is a Kafka cluster with bootstrap servers configured. This is for clients to connect via the desired host and port. You must enter the bootstrap server details on the Kafka capture page.
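Before you paste the bootstrap server details into the form, it can help to confirm that each host and port is reachable. The standard-library sketch below parses a comma-separated `host:port` list and attempts a plain TCP connection. The hostnames are placeholders, and a successful connection says nothing about authentication or TLS settings.

```python
import socket


def parse_bootstrap_servers(servers):
    """Split a 'host1:9092,host2:9092' string into (host, port) tuples."""
    pairs = []
    for entry in servers.split(","):
        host, _, port = entry.strip().rpartition(":")
        pairs.append((host, int(port)))
    return pairs


def reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


brokers = parse_bootstrap_servers("a.example.com:9092, b.example.com:9093")
# for host, port in brokers:
#     print(host, port, reachable(host, port))
```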

Click on Next. Then, click on Save and Publish.

Step 2: Set up the data’s destination

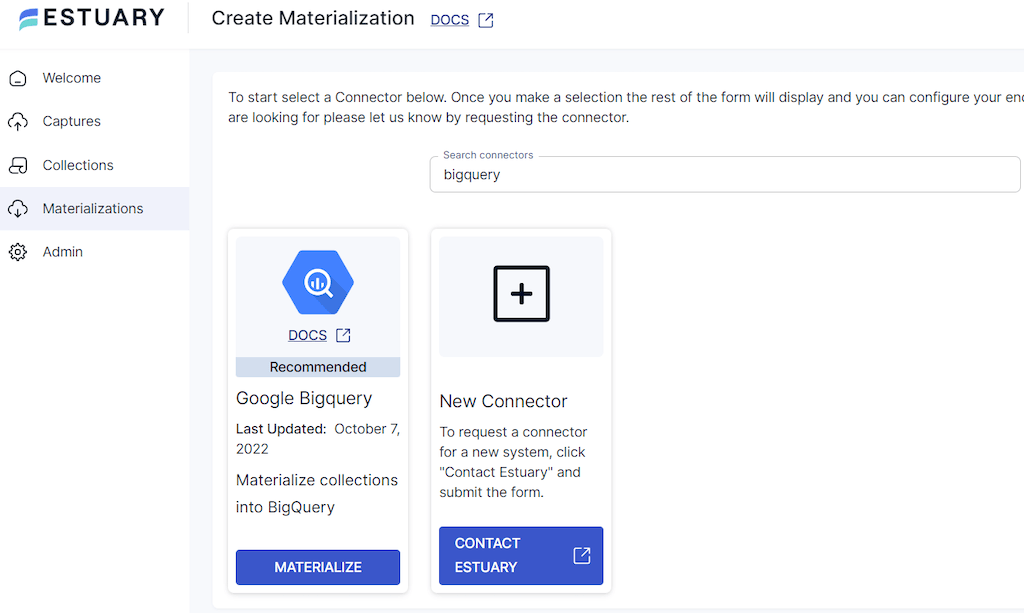

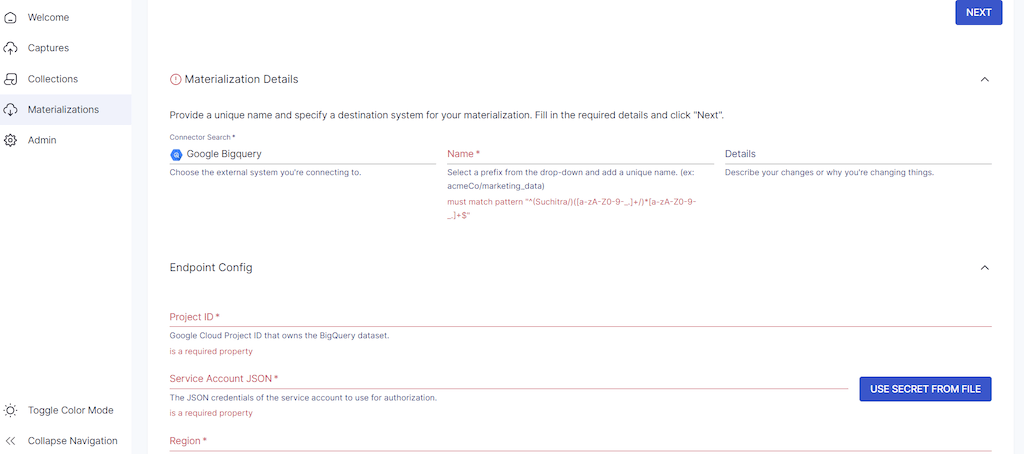

There are two ways you can set up the destination where your data will go. One is by clicking on Materialize Connections in the pop-up. The other way is by navigating to the Estuary dashboard and clicking on Materializations on the left-side pane. Then, click on New Materialization.

In this case, the materialization option you must select is BigQuery.

BigQuery has a few extra prerequisites that you must meet before connecting to Flow successfully. You’ll find the steps in the Estuary documentation for the BigQuery materialization connector.

Once you’re done with the steps, you can set up your destination as easily as you did with the source.

Fill in the required fields on the BigQuery materialization page and click on Next. If you don’t see the details of the data captured from Kafka automatically, use the Collection Selector to add the data. Then, click Save and Publish.

If you’d like more detailed instructions, look into the Estuary documentation on:

- The Kafka source connector

- The BigQuery materialization connector

- Detailed steps to create a Data Flow like this one

Avoid the hassles of custom coding—try Estuary Flow to effortlessly connect Kafka to BigQuery with real-time streaming. Start for free or connect with our team for tailored support.

Conclusion

This article provided you with a guide for migration from Kafka to BigQuery. The different ways to implement this include using custom code, using Google Dataflow, or using SaaS alternatives like Estuary Flow.

The custom code method has its limitations. Not only does it consume time and resources, but it’s also error-prone. And implementing it requires lots of use-case-specific engineering, far beyond the scope of an article like this one.

While the Google Dataflow template is a better alternative to the custom code (especially if your Kafka cluster is deployed in Google Cloud), the best option is using SaaS alternatives to smooth out some of the inherent friction of managing Kafka.

Estuary Flow helps you overcome the above-mentioned limitations with seamless, hassle-free data integration between Kafka and BigQuery. To test it out for yourself, sign up to start using Flow for free. You can also bring any questions you might have straight to our engineering team on Slack.
