Real-time RAG with Google Sheets

Extracting actionable insights from this data can be a daunting task. Fortunately, with advancements in Retrieval-Augmented Generation (RAG), vector search, and real-time data integration, this process has become much more manageable.

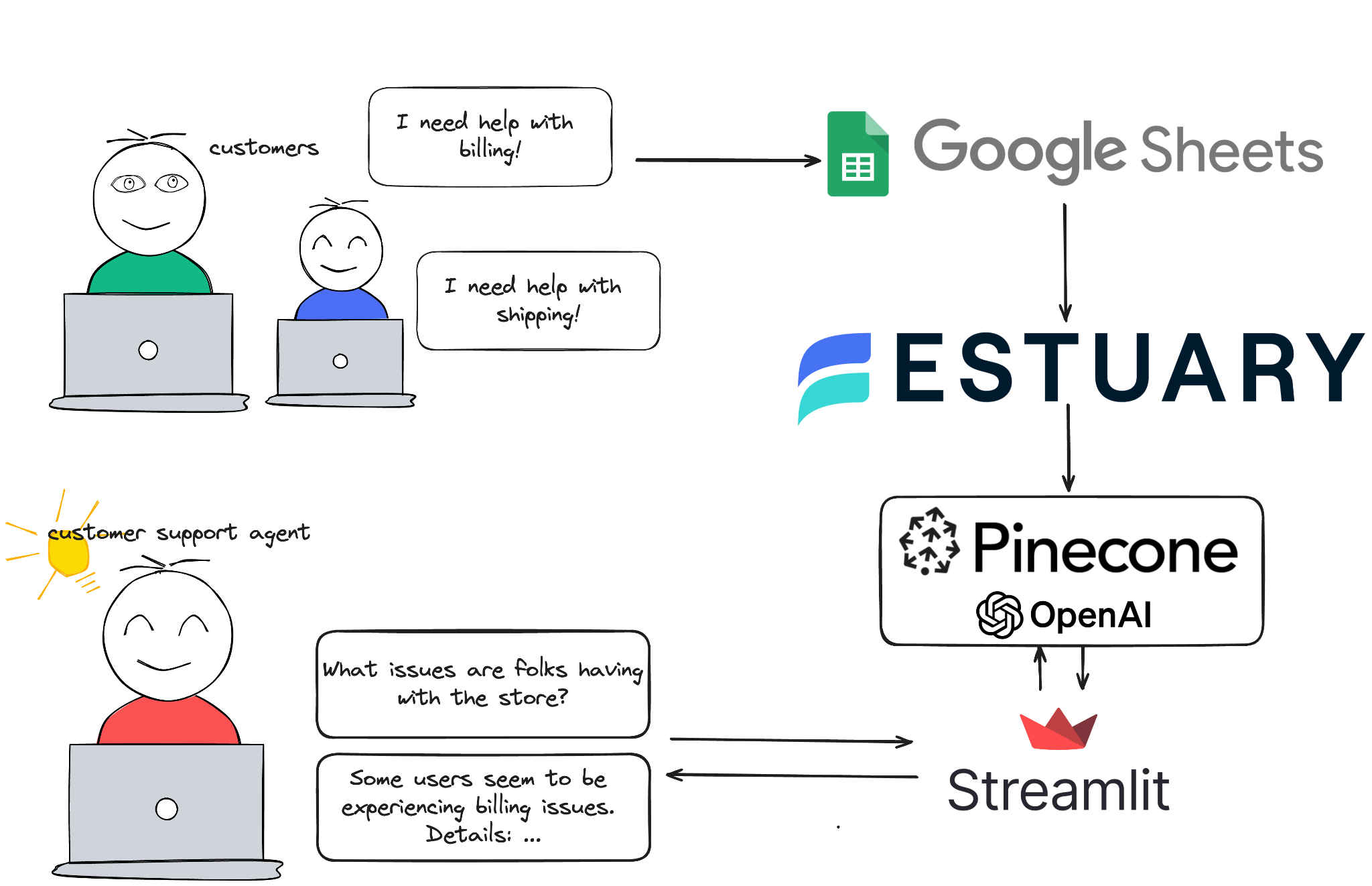

In this article, we'll explore how to integrate Google Sheets with Estuary Flow and Pinecone to build a chat application with access to real-time data.

What is RAG?

Retrieval-augmented generation (RAG) is a technique that merges search-based information retrieval and generative AI models. Generative models produce responses based solely on pre-existing training data, which can limit their accuracy and relevance over time.

In contrast, RAG dynamically retrieves relevant documents from external sources during the generation process. This hybrid approach ensures that responses are not only more accurate but also contextually relevant, especially when dealing with large datasets or when access to the latest information is essential.

Consider a customer support chatbot for a tech company that uses RAG. When a customer asks about a newly released feature, the chatbot retrieves the most recent documents or knowledge base articles related to it and incorporates this information into its response. This ensures that the customer receives the most up-to-date and accurate information, rather than a response based solely on the chatbot's initial training data.

Understanding Vector Search

Vector search is a powerful method for finding relevant data points by comparing their vector representations. In the context of RAG, vector search is crucial for efficiently retrieving documents that are semantically similar to an input query. This process involves transforming data into high-dimensional vectors, which capture the semantic meaning of the information.

Pinecone Vector Store is a platform revolutionizing the way data is stored and queried. It offers lightning-fast vector similarity search, enabling users to efficiently find similar items within massive datasets in milliseconds. With its scalable infrastructure and advanced algorithms, Pinecone empowers businesses to unlock the potential of their data, whether it's for recommendation systems, personalization, or anomaly detection.

Vector databases like Pinecone leverage these representations to perform fast and accurate similarity searches, enabling real-time, contextually relevant data retrieval that enhances the capabilities of generative models.

Here are some key features of Pinecone:

- Lightning-fast vector similarity search

- Scalable infrastructure for handling massive datasets

- High-performance vector storage

- Millisecond response times for search queries

Imagine you're building a recommendation system for an online retail store. When a user views a product, the system converts the product's description into a vector representation. It then uses Pinecone to search for other product vectors that are semantically similar. This allows the system to recommend products that are contextually relevant to the user's interests.

For instance, if a user is looking at a high-performance laptop, Pinecone can help identify and recommend other laptops with similar features, specifications, and reviews, thereby enhancing the shopping experience with personalized suggestions.

A step-by-step illustration of how vector search works using Pinecone:

- Data Transformation: Convert data into high-dimensional vectors.

- For example, consider product descriptions like "high-performance gaming laptop with 16GB RAM" and "ultra-thin laptop with 8GB RAM".

- These descriptions are transformed into vectors using an embedding model. Each product description becomes a point in a high-dimensional vector space.

- Vector Database: Store these vectors in Pinecone.

- Each vector, along with its metadata (such as product ID, name, and description), is stored in Pinecone.

- Query Vector: Convert the search query into a vector.

- When a user searches for "gaming laptop with good graphics", this query is also transformed into a vector using the same embedding model.

- Similarity Search: Perform a vector search to find similar vectors.

- Pinecone compares the query vector with the stored product vectors to find the most similar ones. The similarity is typically measured using distance metrics like cosine similarity or Euclidean distance.

- Retrieve Results: Fetch the top results based on similarity.

- The most similar product vectors are retrieved, and their corresponding product details are returned to the user.

Visual Representation

Consider the following visual representation of vector search:

- Vector Space: Imagine a multi-dimensional space where each point represents a product.

- Query Vector: A point in this space representing the search query.

- Similarity Search: Lines connecting the query point to the nearest points (products) based on vector similarity.

plaintext | x (Product 3)

|

|

| x (Product 2)

|

Query -> x|------------------- (Product 1)

|

| x (Product 4)

|

|In this illustration:

- The "Query" point represents the vector of the user's search query.

- The "x" marks represent vectors of different products.

- The distance between the query point and product points indicates similarity. Shorter lines represent more similar products.

The Importance of Real-Time Contextual Data for RAG

One of the limitations of generative models is that their knowledge is static, and confined to the data they were trained on. LLMs, such as GPT-4, are trained on terabyte-size data sets. But they can be a year old and not contain domain-specific content - finance, healthcare, technology, news - that is needed to answer a domain-specific or current-news type questions.

For example, a model trained a year ago would lack knowledge of the latest developments in AI, recent scientific breakthroughs, or current geopolitical events. This gap can lead to outdated or incorrect responses, undermining the utility of the model in providing timely and accurate insights.

Real-time data supplementation transforms generative models from static knowledge repositories into dynamic sources of information. By continuously integrating new data into the prompt, these models can adapt to changes and provide up-to-date responses.

This dynamic approach offers several key advantages, for example:

- Enhanced Accuracy and Relevance: By incorporating the latest data, models can generate responses that reflect the latest information, improving their accuracy and relevance. This is crucial in industries where outdated information can lead to costly mistakes.

- Competitive Advantage: Organizations that leverage real-time data in their AI models can gain a competitive edge by staying ahead of trends and making proactive, data-driven decisions. This capability can lead to improved operational efficiency or better customer service.

Introducing Estuary Flow

Estuary Flow is a real-time CDC platform built from the ground up for CDC and streaming. It excels at capturing data from various sources and delivering it to many destinations used for analytics, operations, and AI. With its event-driven architecture, Estuary Flow ensures data is processed and delivered exactly once, with low latency, making it an ideal solution to use with Materialize.

These capabilities are particularly beneficial for applications requiring continuous data updates, such as RAG implementations.

Some key features of Estuary Flow are:

- Fully Integrated Pipelines: Flow simplifies data integration by enabling you to create, test, and adapt pipelines that gather, modify, and consolidate data from multiple sources.

- Change Data Capture (CDC): Always-on CDC that replicates in real-time with exactly-once semantics, backed by cloud storage.

- No-code Connectors: With pre-built connectors for popular data sources and sinks, such as databases and message queues, Flow reduces the need for custom connectors. This speeds up data pipeline deployment and ensures consistency across systems.

- Native support for vector databases like Pinecone, including the ability to vectorize data as part of the loading process.

- Support for real-time SQL, TypeScript, including the ability to call APIs to use generative AI services like ChatGPT.

Tutorial Overview

The rest of the article will contain step-by-step instructions on how to build a real-time RAG application. You’ll learn how to:

- Spin up a local SQL Server instance, stream fake data into it, and prepare it for CDC capture.

- Configure a capture in the Estuary Flow dashboard to ingest change events.

- Set up Estuary Flow as a source in Materialize and create real-time analytical materialized views.

Prerequisites

- Estuary Flow account: if you haven’t registered yet, you can do so here, for free!

- Pinecone account: Pinecone is the target vector database in this project.

- OpenAI account: The project uses OpenAI’s API to calculate the embeddings and to wrap the chat responses using an LLM.

- Docker: for convenience, we provide a Dockerized development environment so you can get started in just seconds!

Step 1. Clone example repository and set up development environment

Head over to GitHub and clone the Estuary Flow examples repository. Change directories into the one called google-sheets-pinecone-rag. This folder contains the data generator script and the Streamlit application for the chatbot.

First, create a Google Sheet that you’ll use as the data store for the generated customer support tickets. You’ll also need programmatic access to this sheet for the data generator script, which can be accessed via a GCP Service Account. Create one and export its credentials as a JSON file.

Take a look at the docker-compose.yml file and make sure you update the environment variables before spinning up any containers. After you’re done, start the data generator script like this:

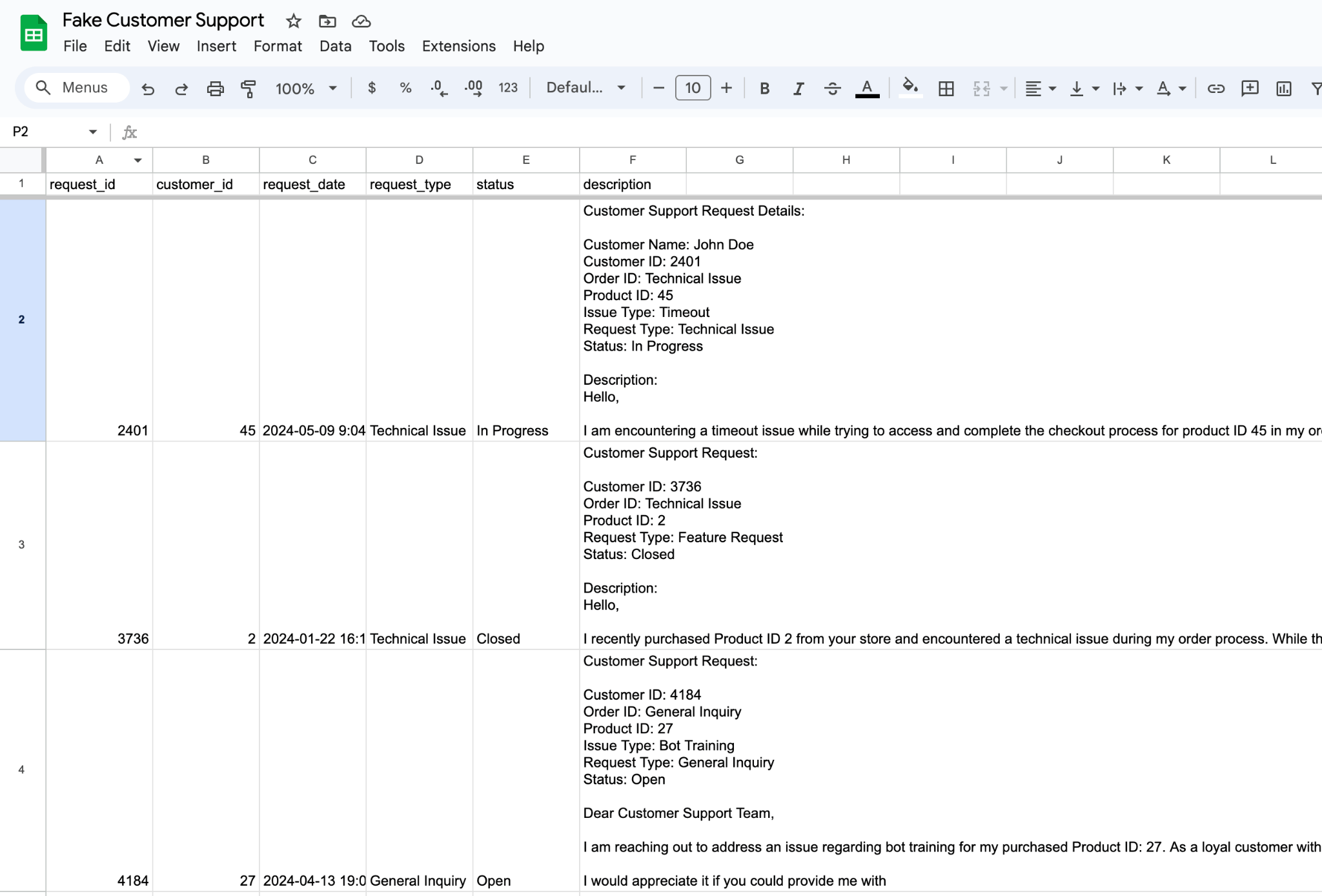

plaintextdocker compose up datagenHead over to the Google Sheet you created and verify that the fake complaints are indeed being generated. It should look something like this:

The schema of the fake support tickets is fairly simple; it contains a identifiers about the issuer, the request, a timestamp, it’s type, current status and description. Similar to what you would see in a real ticketing system.

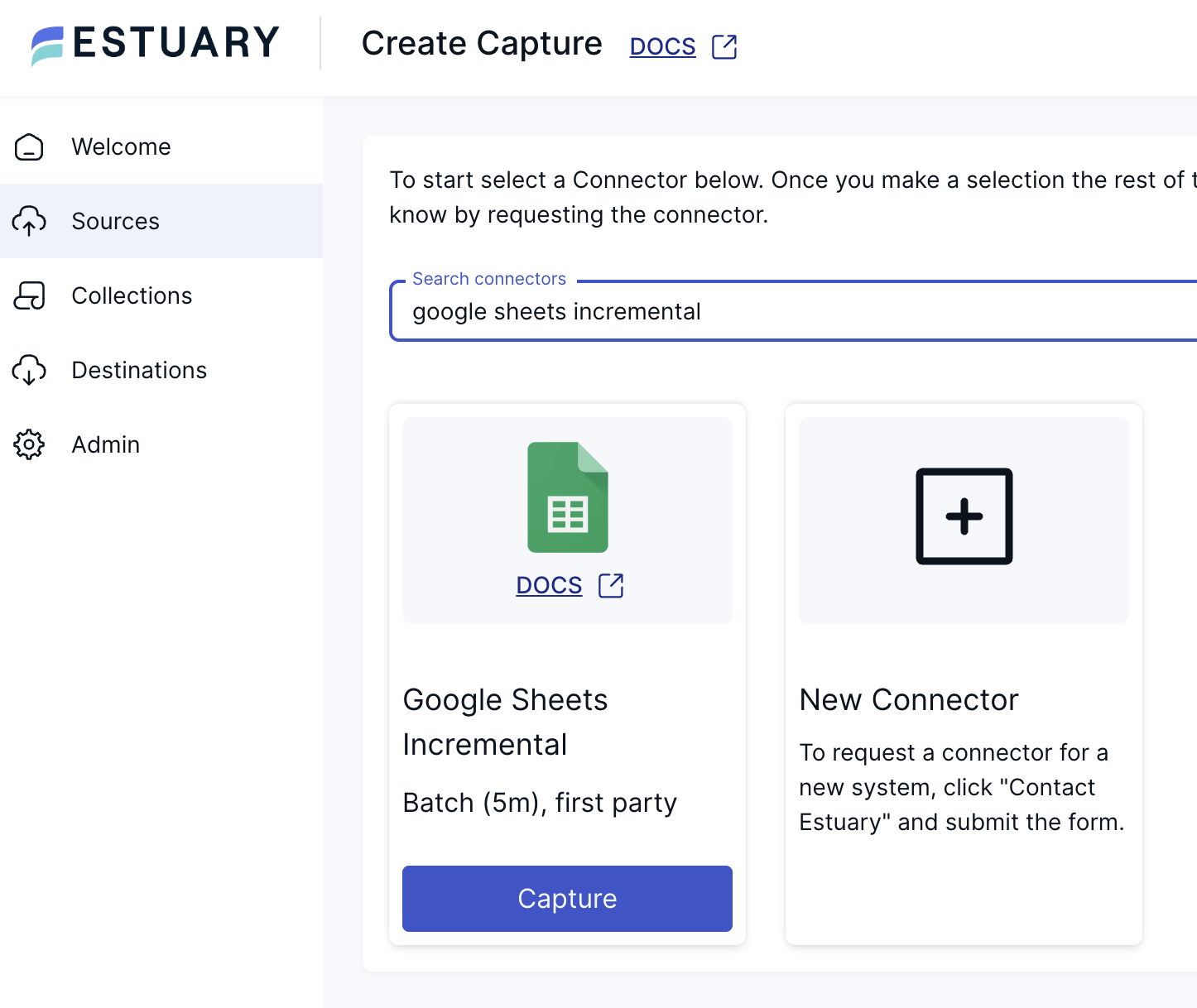

Step 2. Capturing Changes from Google Sheets

Estuary Flow provides a Google Sheets capture connector that simplifies the process of extracting data from your spreadsheets. This connector is incremental, meaning it only captures data changes, rather than parsing the entire sheet.

This approach not only saves time but also reduces the computational cost associated with data processing and vectorization as with other services, you would have to vectorize the whole sheet and rely on Pinecones UPSERT operation to not introduce any duplicate data in your vector embedding space.

- Create a New Capture in Estuary Flow:

- Log in to your Estuary Flow account.

- Navigate to the "Captures" section and click on "Create New Capture".

- Select "Google Sheets [Incremental]" as your source connector.

- Authorize Access:

- Follow the prompts to authorize Estuary Flow to access your Google Sheets.

- Ensure that you grant the necessary permissions for data reading.

- Configure the Capture:

- Provide the URL of the Google Sheet you want to capture data from.

- Specify the sheet name.

- Save & Publish the Capture:

- Once the configuration is verified, start the capture process.

- Estuary Flow will now continuously monitor your Google Sheet for changes and incrementally capture updated data.

Step 3. Vectorizing and Loading Data into Pinecone

Pinecone is a specialized vector database designed for high-performance vector search and similarity matching. By vectorizing your Google Sheets data incrementally and loading it into Pinecone, you can leverage its native search capabilities to quickly retrieve relevant information.

Here's what you need to do to quickly set up Estuary Flow’s Pinecone materialization connector:

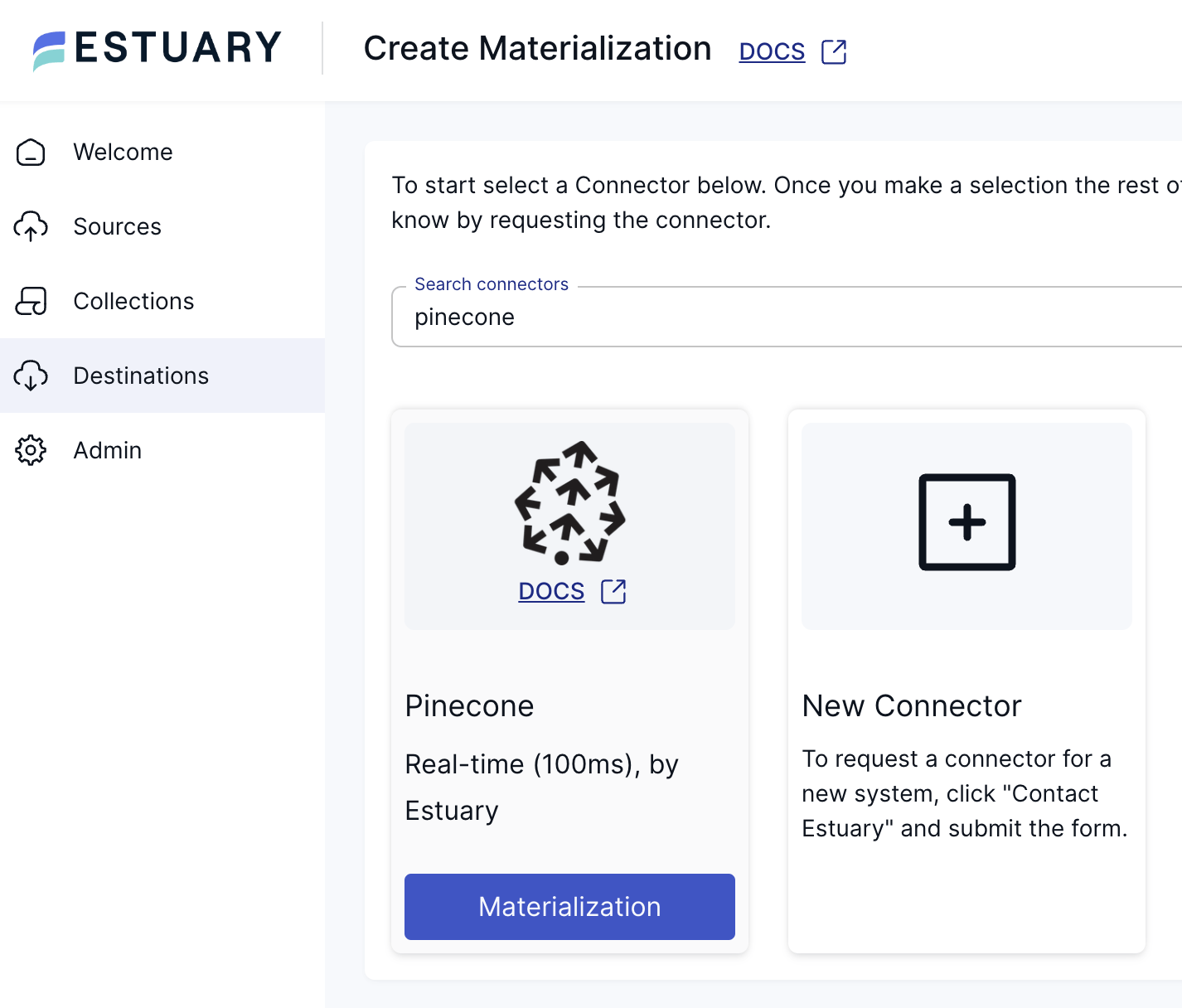

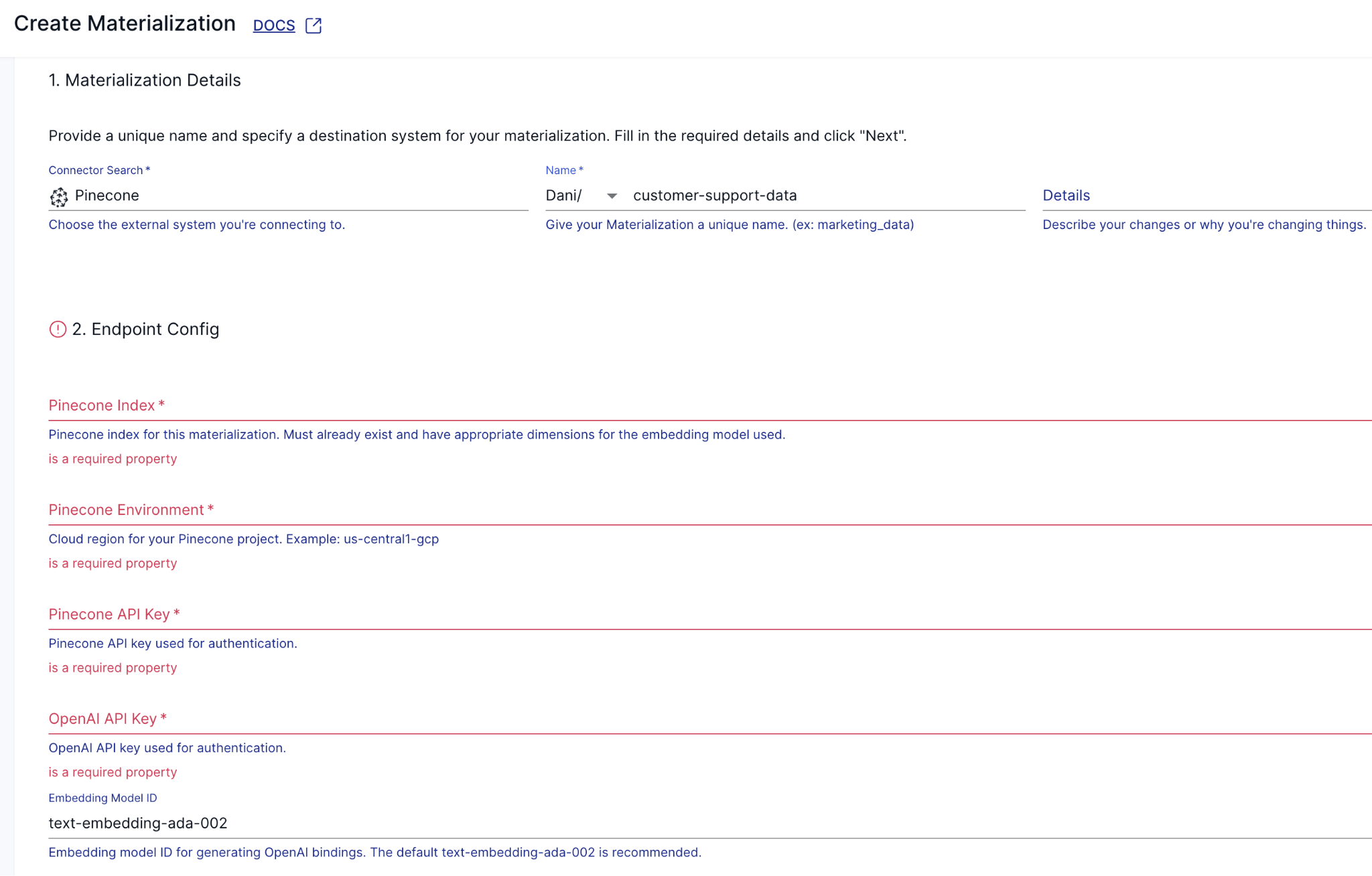

Head over to your Estuary Flow dashboard, navigate to the "Destinations" section, and click on "Create New Materialization". Select "Pinecone" as your materialization connector.

Next, configure the Materialization. Enter all the configuration details required by the connector; such as your Pinecone index name, environment identifier and both API keys.

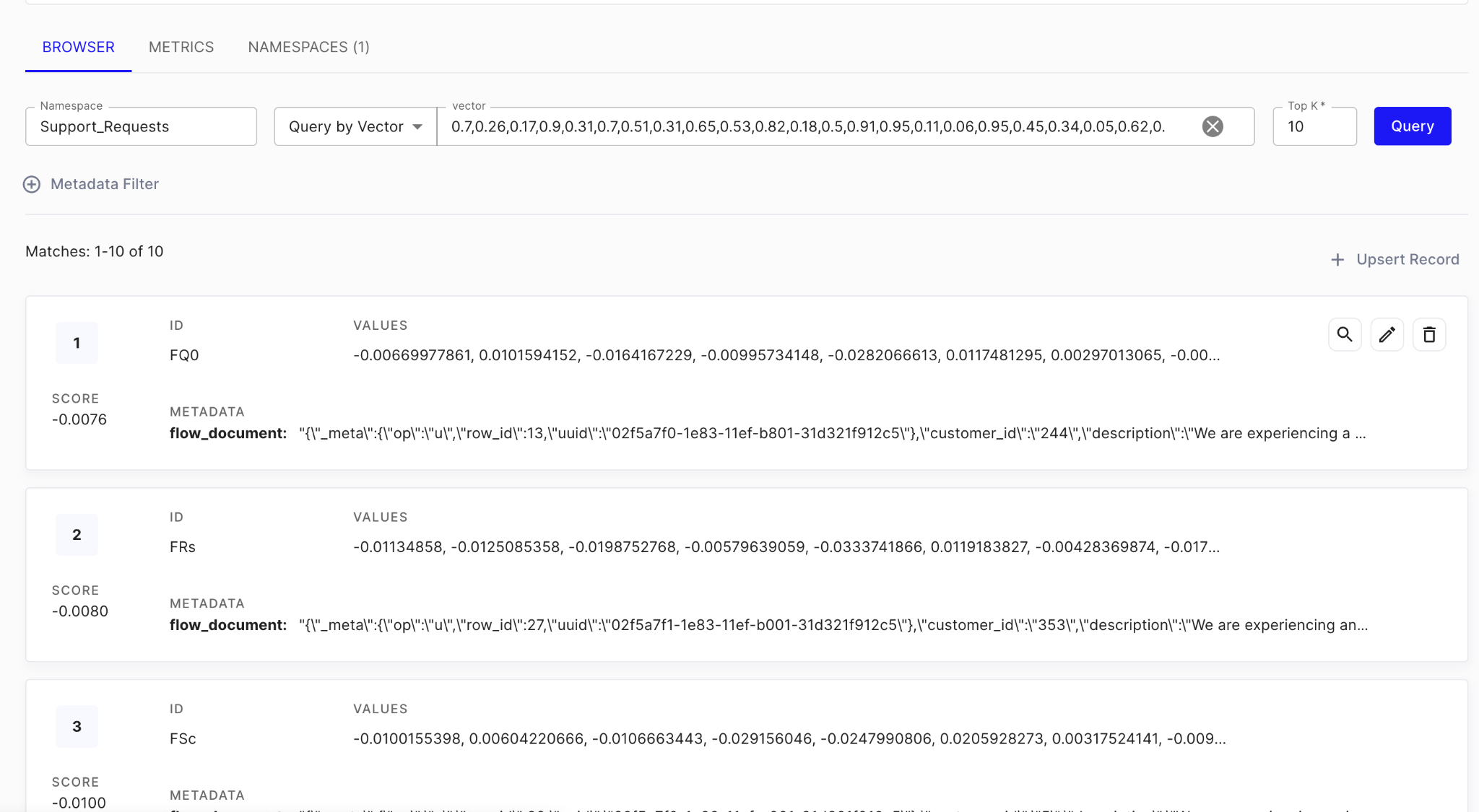

Finally, Save & Publish the Materialization. To verify that it’s working correctly, you can head over to the Pinecone web UI and make sure there are embeddings in the index.

It should look something like this:

The Pinecone materialization connector will generate a vector embedding for each document in the collection that the source connector produces with some additional metadata.

The structure of the embeddings is fairly simple. Flow packages the whole document under the flow_document key, including the metadata fields it produces while capturing changes from the source which include a uuid value, the original row_id and the operation type that triggered the change event.

Because Pinecone supports upserts, you can always use only the latest version for every record – this is critical to avoid stale data.

With Estuary Flow, real-time data integration is seamless and fast. Sign up for Estuary Flow now to try it yourself!

Step 4. Ready, Set, Chat!

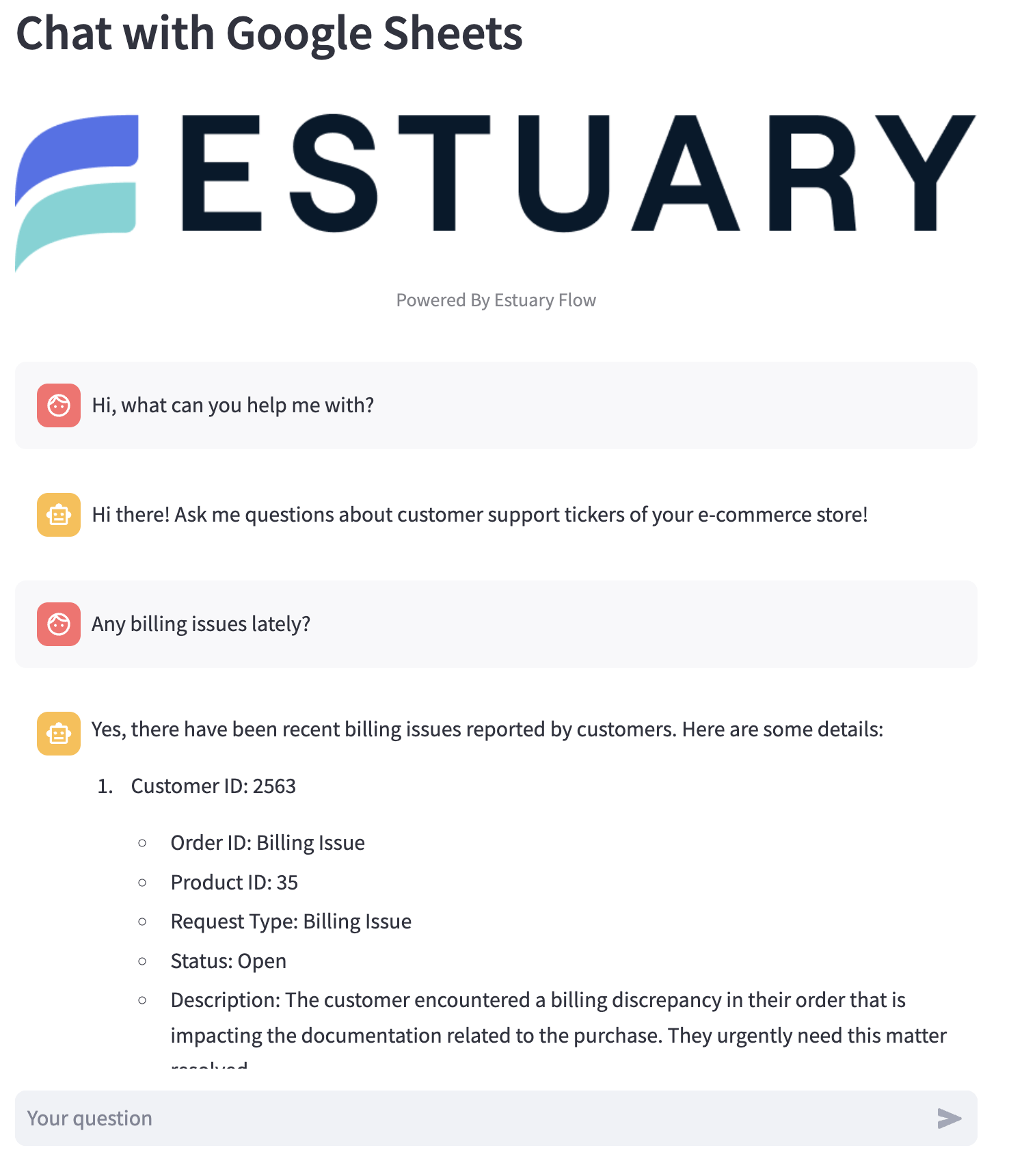

Now that you have a steady flow of incoming data and embeddings are being generated incrementally, as the last step, spin up the Streamlit chat application so you’ll have a familiar interface that allows you to access the customer support tickets.

plaintextdocker compose up streamlitNavigate to http://localhost:8502 and try asking a few questions!

The most important part of this application is that as soon as data lands in the Google Sheet, it immediately gets vectorized and stored in Pinecone, which allows the chat application to include it without any delay. If you are working with time-sensitive data, this is a critical aspect of any data pipeline.

What happens under the the hood when you submit a question to the Streamlit application, is that it first gets vectorized using the same embedding model as the Pinecone Materialization connector uses, which enables a similarity search to be performed against the existing vectors in the space.

Once the top 5 most semantically similar vectors have been found (remember: each of these vectors are vectorized versions of the documents being ingested from the Google Sheet!) the app composes the prompt that it eventually submits to OpenAI’s API so a large language model can format all the data in it as if a human was answering.

And that’s it!

Wrapping up

By combining Estuary Flow and Pinecone, you learned how to build a real-time incremental vector embedding generation pipeline, which coupled with a Streamlit chat application completes an end-to-end RAG project.

We hope this tutorial has provided you with the knowledge and tools to implement your own data flows and leverage the full potential of Estuary Flow and LLMs in your data products.

If you want to learn more, make sure you read through the Estuary documentation.

You’ll find instructions on how to use other connectors here. You can connect to just about any source, not just Google Sheets! There are more tutorials here.

Also, don’t forget to join the Estuary Slack Community!

About the author

Dani is a data professional with a rich background in data engineering and real-time data platforms. At Estuary, Daniel focuses on promoting cutting-edge streaming solutions, helping to bridge the gap between technical innovation and developer adoption. With deep expertise in cloud-native and streaming technologies, Dani has successfully supported startups and enterprises in building robust data solutions.

Popular Articles