As a key component of the Google Firebase app development ecosystem, Firestore offers flexibility, scalability, and serverless operation, so you never have to manage servers or scale your database yourself as your app grows. But to get the most out of it, you need to follow Firestore's best practices.

Google Cloud Firestore, a cloud-hosted NoSQL database, stores data as documents and keeps it synchronized across devices and platforms. Despite its many advantages, Firestore has limitations and challenges that deserve attention when you design data models and write queries.

In this article, we cover a range of practices. These run from using indexes wisely and avoiding large collections and documents, through denormalization and aggregation, to using batch operations and transactions carefully. We also discuss how you can use our Estuary Flow platform to get more out of Cloud Firestore.

By following the best practices we present here, you'll maximize Firestore's potential while ensuring a swift and reliable user experience for your app.

What Is Google Firestore?

Google Firestore, or Cloud Firestore, is a cloud-based NoSQL database that makes storing and syncing data across devices and platforms a breeze. It is the newer of Firebase's two NoSQL databases and builds on the original Realtime Database.

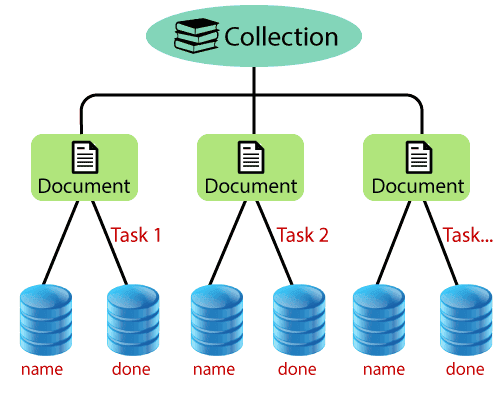

In Firestore, data is stored in documents organized into collections. These documents can hold simple values like strings, numbers, and booleans or more complex ones like arrays, maps, or references to other documents. They can even feature subcollections with additional documents. Querying your data is easy with filters, ordering, and pagination for any field in your documents.

Along with REST or RPC APIs for universal access, Firestore offers native SDKs for:

- iOS

- C++

- Web

- Unity

- Flutter

- Node.js

- Android

With offline access and data synchronization, your app keeps working even without network connectivity. Plus, real-time updates let you listen to data changes and update your app's UI as they happen.

The beauty of Firestore lies in its flexibility, scalability, and serverless nature. No need to worry about managing servers or scaling your database as your app grows – Firestore automatically handles it all.

It can accommodate millions of concurrent users and terabytes of data. You pay only for what you use: document reads, writes, and deletes, plus storage and network egress. Security rules control who can access which data, and indexes determine which queries can run.

8 Google Firestore Best Practices To Get Optimum Results

Now let's take a look at some essential best practices. By following these guidelines, you'll improve your Firestore database's efficiency and performance.

Best Practice 1: Use Indexes Wisely

Indexes are key to efficiently querying data in Firestore, allowing for complex queries with multiple fields, ordering, and filtering. However, they come with certain trade-offs and limitations you should be aware of.

First, indexes take up storage space and add work to every write. When you create, update, or delete a document, Firestore must also update every index that includes the affected fields. More indexes therefore mean slower writes and higher storage costs.

Second, there are restrictions on how indexes and queries can be combined. For instance, a composite index can include at most one array field, and a query can use at most one array-contains clause. A database also can't have more than 200 composite indexes.

So be smart with indexes and create only the ones your queries need. Firestore automatically creates single-field indexes for every field. Composite indexes, by contrast, you define yourself in the Firebase console or with the Firebase CLI. If a query needs a composite index that doesn't exist, it fails with an error message that includes a link for creating the missing index. The single-field index exemption feature lets you disable indexing for specific fields you don't query.
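Every indexed field adds index entries on each write, so it can help to estimate how much indexing a document triggers. The TypeScript sketch below is a simplified model of Firestore's documented default single-field indexing:

- ascending and descending entries for each scalar field
- array-contains entries for array elements
- recursive indexing of map subfields

It ignores composite indexes and treats an exemption as removing every index on a field. The estimateIndexEntries helper and the sample post are hypothetical.

```typescript
// Rough model of the single-field index entries written for one document under
// default indexing. Composite indexes and the document-ID index are ignored, and
// an exemption is treated as disabling every index on that field.
type FieldValue =
  | string
  | number
  | boolean
  | null
  | FieldValue[]
  | { [key: string]: FieldValue };

function entriesFor(path: string, value: FieldValue, exempt: Set<string>): number {
  if (exempt.has(path)) return 0; // exempted fields (and their subfields) are skipped
  if (Array.isArray(value)) return value.length; // array-contains: about one entry per element
  if (value !== null && typeof value === "object") {
    // Maps aren't indexed as a whole; each subfield is indexed recursively.
    let total = 0;
    for (const [key, sub] of Object.entries(value)) {
      total += entriesFor(`${path}.${key}`, sub, exempt);
    }
    return total;
  }
  return 2; // one ascending and one descending entry per scalar field
}

function estimateIndexEntries(
  doc: { [key: string]: FieldValue },
  exemptFields: string[] = [],
): number {
  const exempt = new Set(exemptFields);
  let total = 0;
  for (const [field, value] of Object.entries(doc)) {
    total += entriesFor(field, value, exempt);
  }
  return total;
}

// Hypothetical post document: a long, never-queried body field is a typical exemption.
const post = {
  title: "Hello",
  body: "A long article body that no query ever filters on...",
  tags: ["firestore", "indexes", "tips"],
  stats: { likes: 3, views: 10 },
};
console.log(estimateIndexEntries(post)); // 11
console.log(estimateIndexEntries(post, ["body"])); // 9
```

In this model, exempting body removes two index entries from every write of every post. Queries that don't filter or sort on body are unaffected.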

Using indexes wisely helps optimize query performance and lower storage costs.

However, they're not a one-size-fits-all solution. In some cases, you may need to use other techniques like denormalization or aggregation to boost your query performance.

Best Practice 2: Avoid Large Collections & Documents

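Firestore enforces hard limits on how data is shaped. A document can be at most 1 MiB, subcollections can be nested at most 100 levels deep, and a query can't return more than 10 MiB of data. Collections themselves have no size limit, and query performance depends on the size of the result set rather than the size of the collection. Even so, fetching a large result set in one request is slow and expensive. To stay within these constraints when working with large collections, use pagination or cursors to break queries into smaller chunks.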

By applying methods like limit(), startAt(), startAfter(), endAt(), and endBefore(), you can control how many documents you fetch and where your query starts or ends, reducing query latency.
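Here is a minimal in-memory model of that cursor pattern, assuming a users collection ordered by name. The pageAfter function, the User type, and the sample data are hypothetical. In a real app, each page would come from a query built with orderBy(), startAfter(), and limit(). The last document snapshot of the previous page would serve as the cursor.

```typescript
// In-memory model of cursor pagination. With the modular Web SDK, each page is roughly
//   query(collection(db, "users"), orderBy("name"), startAfter(lastDocSnapshot), limit(pageSize))
interface User {
  id: string;
  name: string;
}

// Order by name, then by document ID. When a document snapshot is used as the cursor,
// Firestore also orders by document ID implicitly, which keeps pages stable when names repeat.
function compareUsers(a: User, b: User): number {
  if (a.name !== b.name) return a.name < b.name ? -1 : 1;
  return a.id < b.id ? -1 : a.id > b.id ? 1 : 0;
}

// Returns up to pageSize users that sort strictly after the cursor (startAfter semantics).
function pageAfter(users: User[], pageSize: number, cursor?: User): User[] {
  const ordered = [...users].sort(compareUsers);
  let start = 0;
  if (cursor !== undefined) {
    const after: User = cursor;
    start = ordered.findIndex((u) => compareUsers(u, after) > 0);
    if (start === -1) return [];
  }
  return ordered.slice(start, start + pageSize);
}

const users: User[] = [
  { id: "u1", name: "Ada" },
  { id: "u2", name: "Bob" },
  { id: "u3", name: "Ada" },
  { id: "u4", name: "Cy" },
  { id: "u5", name: "Dee" },
];

// Fetch page by page, using the last document of each page as the next cursor.
const pages: string[][] = [];
let cursor: User | undefined = undefined;
for (;;) {
  const page = pageAfter(users, 2, cursor);
  if (page.length === 0) break;
  pages.push(page.map((u) => u.id));
  cursor = page[page.length - 1];
}
console.log(JSON.stringify(pages)); // [["u1","u3"],["u2","u4"],["u5"]]
```

Cursors are preferable to offsets because skipped documents are still read, and billed, when you page with an offset.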

Another approach is to use sharding or hashing to distribute data across multiple subcollections. For instance, if you have a massive user collection, you can create subcollections based on the first letter of users' names or on a hash of each user's ID. A hash spreads documents more evenly, because first letters cluster around common initials. Either way, each subcollection holds fewer documents, and write load is spread across them.
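A hash-based shard key can be sketched as follows. The sketch assumes a hypothetical userShards/{shard}/users/{userId} layout. It uses 32-bit FNV-1a, a simple non-cryptographic hash chosen here for illustration; any stable hash works. Because the shard is derived from the user ID, you can always compute where a user lives without a lookup.

```typescript
// 32-bit FNV-1a over the string's UTF-16 code units: fast and stable, not cryptographic.
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, keep 32 bits
  }
  return hash >>> 0;
}

// Maps a user ID to a stable shard number in [0, shardCount).
function shardFor(userId: string, shardCount: number): number {
  return fnv1a32(userId) % shardCount;
}

// Hypothetical layout: userShards/{shard}/users/{userId}
function shardedUserPath(userId: string, shardCount = 16): string {
  return `userShards/${shardFor(userId, shardCount)}/users/${userId}`;
}

console.log(shardedUserPath("alice"));
console.log(shardedUserPath("alice") === shardedUserPath("alice")); // true: same ID, same shard
```

Changing the shard count later moves most users to a different shard, so choose it up front. To read across all shards, you can query each shard's subcollection or run a collection group query on users.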

To dodge large documents, you can use subcollections or references for nested or related data. For instance, if a document contains a comment array, move the comments to a subcollection or store them in a separate collection with references to the original document. This keeps your documents under the 1 MiB limit.
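To decide when an array should move to a subcollection, it helps to estimate a document's size. The sketch below applies the size rules from Firestore's storage-size documentation as we understand them:

- strings count as their UTF-8 bytes plus 1
- numbers and timestamps count as 8 bytes
- booleans and null count as 1 byte
- a document name counts as its path segments plus 16 bytes
- each document carries 32 bytes of overhead

Treat the result as an estimate. The documentSize helper and the sample documents are hypothetical.

```typescript
// Value types this sketch understands (a subset of Firestore's types).
type Value =
  | string
  | number
  | boolean
  | null
  | Date
  | Uint8Array
  | Value[]
  | { [key: string]: Value };

const MAX_DOC_BYTES = 1024 * 1024; // 1 MiB

// Number of bytes in the UTF-8 encoding of s.
function utf8Length(s: string): number {
  let bytes = 0;
  for (const ch of s) {
    const cp = ch.codePointAt(0) ?? 0;
    bytes += cp < 0x80 ? 1 : cp < 0x800 ? 2 : cp < 0x10000 ? 3 : 4;
  }
  return bytes;
}

// Strings (values, field names, path segments) count as UTF-8 bytes + 1.
const stringSize = (s: string): number => utf8Length(s) + 1;

function valueSize(v: Value): number {
  if (v === null || typeof v === "boolean") return 1;
  if (typeof v === "number") return 8; // integers and floating-point numbers
  if (typeof v === "string") return stringSize(v);
  if (v instanceof Date) return 8; // timestamp
  if (v instanceof Uint8Array) return v.byteLength; // bytes
  if (Array.isArray(v)) return v.reduce((sum: number, item) => sum + valueSize(item), 0);
  return mapSize(v as { [key: string]: Value });
}

// A map (or a document's fields) is the sum of its key sizes and value sizes.
function mapSize(m: { [key: string]: Value }): number {
  let total = 0;
  for (const [key, val] of Object.entries(m)) total += stringSize(key) + valueSize(val);
  return total;
}

// Document = name size (path segments + 16) + field sizes + 32 bytes of overhead.
function documentSize(path: string, fields: { [key: string]: Value }): number {
  const nameSize = path.split("/").reduce((sum, seg) => sum + stringSize(seg), 0) + 16;
  return nameSize + mapSize(fields) + 32;
}

console.log(
  documentSize("users/jeff/tasks/my_task_id", {
    type: "Personal",
    done: false,
    priority: 1,
    description: "Learn Cloud Firestore",
  }),
); // 147

// A post that embeds 5,000 comments of about 200 characters no longer fits.
const comments = Array.from({ length: 5000 }, (_, i) => ({ author: `user${i}`, text: "x".repeat(200) }));
console.log(documentSize("posts/p1", { title: "Hello", comments }) > MAX_DOC_BYTES); // true
```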

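Large binary files such as images or videos belong in Cloud Storage for Firebase, with only their path or download URL stored in the Firestore document. For smaller payloads, compressing the data (for example with gzip) before saving it in a Bytes field can save storage space and bandwidth. Base64 encoding does the opposite: it inflates binary data by about a third, so use it only when a string representation is required.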
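The difference between compressing and encoding is easy to check with Node.js's built-in zlib module. Compression shrinks repetitive data, while base64 encoding grows it. The payload below is made-up sample data. Storing the gzipped bytes in a Firestore Bytes field is an assumption about how you'd persist them. In the modular Web SDK that would be via Bytes.fromUint8Array(); with the Node.js Admin SDK, a Buffer works.

```typescript
import { Buffer } from "node:buffer";
import { gunzipSync, gzipSync } from "node:zlib";

// Made-up, repetitive JSON payload (e.g. a log of delivery events).
const text = JSON.stringify(
  Array.from({ length: 200 }, (_, i) => ({ id: i, status: "delivered", note: "no issues reported" })),
);
const raw = Buffer.from(text, "utf8");

// gzip shrinks repetitive data; the compressed bytes could be stored in a Bytes field.
const gzipped = gzipSync(raw);

// base64 turns every 3 input bytes into 4 output characters, so it grows the data.
const base64 = raw.toString("base64");

console.log({ raw: raw.length, gzipped: gzipped.length, base64: base64.length });

// Readers must decompress before use, and compressed fields can't be filtered on in queries.
const restored = gunzipSync(gzipped).toString("utf8");
console.log(restored === text); // true
```

Because a compressed field is opaque to queries, it is also a natural candidate for a single-field index exemption.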

While avoiding large collections and documents keeps you within Firestore's limits and improves query performance, it can add complexity and overhead to your data model and code logic. Striking a balance between simplicity and efficiency is key when designing your data structure.

Best Practice 3: Use Denormalization & Aggregation Techniques

Firestore's document model allows for flexible and dynamic data structures, but it doesn't support joins or group-bys. Its built-in aggregation queries are limited to count(), sum(), and average() over a single query. To store and query data efficiently, you might need to use denormalization and aggregation techniques.

Denormalization means storing redundant copies of data in several places to avoid the lookups that a join would otherwise handle. For instance, with collections of posts and comments, you can copy the author's name and avatar into each post and comment document. Displaying them then doesn't require a separate read of the author's profile, which reduces reads and speeds up rendering.

But denormalization has downsides. It increases storage costs and write operations, and it can lead to inconsistent data. To mitigate these issues, use denormalization selectively for frequently accessed data. When you update denormalized data, use transactions or batched writes to keep the copies atomic and consistent.
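Keeping denormalized copies in sync is mostly a matter of finding every copy and updating them together. The sketch below plans those updates for an author whose profile changed, skipping copies that are already current to avoid wasted writes. The document shapes, field names, and planAuthorFanOut are hypothetical. In Firestore you would typically find the copies in two ways:

- a where("authorId", "==", ...) query on posts
- a collection group query on comments

You would then commit the planned updates in batched writes or a transaction, as recommended above.

```typescript
// Hypothetical shape: posts and comments each carry a copy of the author's profile.
interface AuthorCopy {
  authorId: string;
  authorName: string;
  authorAvatarUrl: string;
}

interface StoredDoc {
  path: string; // e.g. "posts/p1" or "posts/p1/comments/c1"
  data: AuthorCopy & { [key: string]: unknown };
}

interface PlannedUpdate {
  path: string;
  fields: Omit<AuthorCopy, "authorId">;
}

// Lists the updates needed to bring every copy of one author's profile up to date,
// skipping documents that already match so no write is wasted.
function planAuthorFanOut(
  docs: StoredDoc[],
  authorId: string,
  profile: Omit<AuthorCopy, "authorId">,
): PlannedUpdate[] {
  return docs
    .filter((d) => d.data.authorId === authorId)
    .filter(
      (d) =>
        d.data.authorName !== profile.authorName ||
        d.data.authorAvatarUrl !== profile.authorAvatarUrl,
    )
    .map((d) => ({ path: d.path, fields: { ...profile } }));
}

const docs: StoredDoc[] = [
  { path: "posts/p1", data: { authorId: "a1", authorName: "Ann", authorAvatarUrl: "/a1.png", title: "Hi" } },
  { path: "posts/p2", data: { authorId: "a2", authorName: "Raj", authorAvatarUrl: "/a2.png", title: "Yo" } },
  { path: "posts/p1/comments/c1", data: { authorId: "a1", authorName: "Ann", authorAvatarUrl: "/a1.png", text: "First!" } },
  { path: "posts/p2/comments/c2", data: { authorId: "a1", authorName: "Ann Lee", authorAvatarUrl: "/a1.png", text: "Nice" } },
];

const updates = planAuthorFanOut(docs, "a1", { authorName: "Ann Lee", authorAvatarUrl: "/a1.png" });
console.log(JSON.stringify(updates.map((u) => u.path))); // ["posts/p1","posts/p1/comments/c1"]
```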

Aggregation means storing precomputed summaries or statistics to avoid costly calculations or scans. For instance, you can store monthly revenue in a separate collection instead of summing up all orders each time, reducing reads and enhancing query performance.
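A precomputed monthly revenue total can be maintained incrementally as orders arrive. This in-memory sketch assumes a hypothetical summary document per month (called summaryRef in the comments) and stores amounts as integer cents. In Firestore, the update would use the increment() field transform in the same batch as the order write, so the total and the orders can't drift apart.

```typescript
interface Order {
  id: string;
  amountCents: number; // integer cents avoid floating-point rounding in totals
  createdAt: Date;
}

interface MonthlySummary {
  totalCents: number;
  orderCount: number;
}

// Month bucket such as "2024-03". Bucketing in UTC is an assumption of this sketch.
function monthKey(d: Date): string {
  return `${d.getUTCFullYear()}-${String(d.getUTCMonth() + 1).padStart(2, "0")}`;
}

// Updates the precomputed summary for the order's month. In Firestore, this would be
// an increment() on a summary document, committed in the same batch as the order:
//   batch.set(orderRef, order);
//   batch.set(summaryRef, { totalCents: increment(order.amountCents), orderCount: increment(1) }, { merge: true });
function recordOrder(summaries: Map<string, MonthlySummary>, order: Order): void {
  const key = monthKey(order.createdAt);
  const current = summaries.get(key) ?? { totalCents: 0, orderCount: 0 };
  summaries.set(key, {
    totalCents: current.totalCents + order.amountCents,
    orderCount: current.orderCount + 1,
  });
}

const summaries = new Map<string, MonthlySummary>();
recordOrder(summaries, { id: "o1", amountCents: 1500, createdAt: new Date(Date.UTC(2024, 2, 5)) });
recordOrder(summaries, { id: "o2", amountCents: 1000, createdAt: new Date(Date.UTC(2024, 2, 20)) });
recordOrder(summaries, { id: "o3", amountCents: 4200, createdAt: new Date(Date.UTC(2024, 3, 1)) });

// A dashboard now reads one summary document per month instead of every order.
console.log(JSON.stringify(summaries.get("2024-03"))); // {"totalCents":2500,"orderCount":2}
```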

However, aggregation has the same drawbacks: higher storage costs, more write operations, and the risk of inconsistent data.

To address these concerns, apply aggregation selectively for frequently accessed data and use transactions or batch writes for atomicity and consistency when updating aggregated data.

Denormalization and aggregation techniques can boost query performance and reduce read operations but they may also increase storage costs, write operations, and complexity in your data model and code logic. Strive for a balance between normalization and denormalization when designing your data structure.

Best Practice 4: Cache Data Locally Or In Memory

Firestore offers offline support and caching features, allowing you to store and access data locally or in memory. This can enhance query performance and cut down on network costs and latency.

Offline support keeps Firestore working with no network connection or a weak one. The SDK persists a cache of the data your app has used on the device (in IndexedDB in web browsers), so you can read and write data offline. Writes made offline are queued and synced with the server when you're back online.

Alternatively, the SDK can keep its cache only in memory. This avoids on-device storage, but the cached data is lost when the app or page restarts. Either way, reads served from the cache are faster than fetching from the server each time. Firestore also keeps cached documents with active listeners up to date with the latest server data.

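Offline persistence is configured in your app's code rather than in the Firebase console or CLI. It's enabled by default on Android and Apple platforms. On the web it's off by default; in recent versions of the modular Web SDK you opt in with initializeFirestore(app, { localCache: persistentLocalCache() }). You can also use the get() method with different source options to control where data comes from: server-only, cache-only, or the default. The default tries the server and can fall back to the cache when you're offline. The modular Web SDK exposes these options as getDocFromServer(), getDocFromCache(), and getDoc().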
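Beyond the SDK's own cache, some apps keep rarely changing documents, such as configuration, in a small app-level cache with a time-to-live (TTL). This trades a bounded amount of staleness for fewer billed reads. The sketch below shows that separate technique; it is not a Firestore API. TtlCache is hypothetical, and the injected load function stands in for a real read such as getDoc().

```typescript
// App-level cache with a time-to-live, for documents that rarely change (for example
// a hypothetical "config/app" document). `load` stands in for the real read.
class TtlCache<T> {
  private readonly entries = new Map<string, { value: Promise<T>; expiresAt: number }>();
  private readonly load: (key: string) => Promise<T>;
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(load: (key: string) => Promise<T>, ttlMs: number, now: () => number = () => Date.now()) {
    this.load = load;
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): Promise<T> {
    const hit = this.entries.get(key);
    if (hit !== undefined && hit.expiresAt > this.now()) return hit.value; // fresh: no read
    const value = this.load(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    // Forget failed loads so the next call retries instead of replaying the error.
    value.catch(() => {
      if (this.entries.get(key)?.value === value) this.entries.delete(key);
    });
    return value;
  }
}

let reads = 0;
let clock = 0; // fake clock so the example is deterministic
const cache = new TtlCache<string>(async (key) => {
  reads++; // each call here would be a billed document read
  return `contents of ${key}`;
}, 60_000, () => clock);

const first = cache.get("config/app");
const second = cache.get("config/app"); // served from the cache (same promise)
clock = 61_000; // past the TTL
cache.get("config/app"); // triggers a fresh read
console.log(reads, first === second); // 2 true
```

Concurrent callers share the same pending promise, so a burst of requests for one document also triggers only one read. Cached data can be up to ttlMs old, which suits configuration-style data better than anything users edit.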

While offline support and caching features help optimize query performance and reduce network costs and latency, they can also increase storage costs, memory usage, and code logic complexity. So finding a balance between online and offline modes is essential when accessing data.

Best Practice 5: Use Batch Operations & Transactions Wisely

Firestore allows you to use batch operations and transactions to perform multiple write operations atomically and consistently. This can also reduce network round trips and leave fewer partially applied updates to clean up after errors.

Batch operations let Firestore execute multiple write operations as a single atomic operation, meaning all operations either succeed or fail together.

Batching doesn't reduce billing: each write in a batch is billed as a separate document write. To create a batch, use the batch() method (writeBatch() in the modular Web SDK). Then add write operations with the set(), update(), or delete() methods, and execute the batch with the commit() method.
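A batch is capped at 500 write operations, so large updates have to be split across several batches. The helper below is a minimal sketch of that split; pendingWrites and the items collection are hypothetical. The commented loop shows how each group could be committed with writeBatch() in the modular Web SDK.

```typescript
// Splits pending writes into groups that each fit in one batch.
function chunk<T>(items: readonly T[], size = 500): T[][] {
  if (!Number.isInteger(size) || size <= 0) {
    throw new RangeError("size must be a positive integer");
  }
  const groups: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    groups.push(items.slice(i, i + size));
  }
  return groups;
}

// Hypothetical workload: 1,234 document updates.
const pendingWrites = Array.from({ length: 1234 }, (_, i) => ({ path: `items/item${i}`, archived: true }));
const groups = chunk(pendingWrites);
console.log(JSON.stringify(groups.map((g) => g.length))); // [500,500,234]

// With the modular Web SDK, each group would then be committed as its own batch:
//   for (const group of groups) {
//     const batch = writeBatch(db);
//     for (const w of group) batch.update(doc(db, w.path), { archived: w.archived });
//     await batch.commit();
//   }
```

Atomicity then applies per batch only. If the second batch fails, the first has already been committed, so design large updates to be safe to retry.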

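Transactions let Firestore execute a set of read and write operations atomically. Suppose a document the transaction has read changes before the transaction commits. In that case, the mobile and web SDKs retry the whole transaction, so it always works with consistent data.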

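Use the runTransaction() method, which passes a transaction object to your update function. Inside that function, perform all reads with get() before any writes with set(), update(), or delete(). Because the function may run more than once, it shouldn't have side effects. runTransaction() returns a promise that resolves with the function's return value if the transaction commits, or rejects if it fails.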
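To make the retry behavior concrete, here is a toy, synchronous model of optimistic concurrency, the approach the mobile and web SDKs use for transactions. It records the version of each document read and commits only if none of them changed in the meantime; otherwise it reruns the update function. VersionedStore, Transaction, and this runTransaction are hypothetical stand-ins, not the Firestore API. The real runTransaction() is asynchronous, and the server client libraries use locking instead of retries. The default of five attempts mirrors the Web SDK's maxAttempts default as we understand it.

```typescript
type Data = Record<string, number>;

// A store where every write bumps the document's version number.
class VersionedStore {
  private readonly docs = new Map<string, { version: number; data: Data }>();

  read(path: string): { version: number; data: Data } | undefined {
    return this.docs.get(path);
  }

  write(path: string, data: Data): void {
    const version = (this.docs.get(path)?.version ?? 0) + 1;
    this.docs.set(path, { version, data });
  }
}

// Records the version of each read and buffers writes until commit.
class Transaction {
  readonly readVersions = new Map<string, number>();
  readonly pendingWrites = new Map<string, Data>();
  private readonly store: VersionedStore;

  constructor(store: VersionedStore) {
    this.store = store;
  }

  get(path: string): Data | undefined {
    if (this.pendingWrites.size > 0) throw new Error("all reads must come before writes");
    const doc = this.store.read(path);
    this.readVersions.set(path, doc?.version ?? 0);
    return doc?.data;
  }

  set(path: string, data: Data): void {
    this.pendingWrites.set(path, data);
  }
}

// Runs `update`, then commits only if nothing it read has changed; otherwise retries.
function runTransaction<T>(store: VersionedStore, update: (tx: Transaction) => T, maxAttempts = 5): T {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const tx = new Transaction(store);
    const result = update(tx);
    const conflict = [...tx.readVersions].some(
      ([path, version]) => (store.read(path)?.version ?? 0) !== version,
    );
    if (!conflict) {
      for (const [path, data] of tx.pendingWrites) store.write(path, data);
      return result;
    }
  }
  throw new Error(`transaction aborted after ${maxAttempts} attempts`);
}

const store = new VersionedStore();
store.write("counters/visits", { n: 0 });

let attempts = 0; // deliberate side effect, only to show how many times update ran
const newValue = runTransaction(store, (tx) => {
  attempts++;
  const n = tx.get("counters/visits")?.n ?? 0;
  if (attempts === 1) store.write("counters/visits", { n: 10 }); // simulate another client's write
  tx.set("counters/visits", { n: n + 1 });
  return n + 1;
});
console.log(newValue, attempts); // 11 2
```

The update function ran twice because another write landed between its read and its commit. This is why transaction functions shouldn't have side effects; the attempts counter here is one, kept deliberately to show the retry.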

Batch operations and transactions help optimize write performance and reduce network costs and errors but can introduce limitations and complexity in your code logic.

For instance, a batch can't contain more than 500 write operations, and a transaction can't include more than 500 writes or last longer than 270 seconds. Transactions also can't modify a document more than once.

So use batch operations and transactions wisely – only when you need atomicity and consistency for multiple write operations. Using batch operations and transactions can simplify your data manipulation logic while maintaining consistency and atomicity.

Best Practice 6: Monitor Query Performance Metrics

Monitoring query performance metrics helps you identify slow queries and optimize them. Firestore offers a variety of tools and metrics to monitor and analyze query performance, helping you spot and troubleshoot issues or bottlenecks.

The Firebase Performance Monitoring SDK is one such tool. To measure specific Firestore queries, you can wrap each one in a custom code trace that records its latency. The trace can also carry custom metrics, such as the number of documents returned, whether the result came from the cache (snapshot.metadata.fromCache), or errors. You add the SDK in your app's code and then analyze the collected metrics in the Firebase console.
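The idea behind a custom trace is simply to time each query and compare the results per query. In the Web SDK, a trace is created with trace(perf, name) and controlled with start(), stop(), and putMetric(). The sketch below shows the same idea without the SDK, so it can run anywhere: timed wraps any async read, and percentile summarizes the recorded latencies. The helper names, the recentOrders label, the getDocs call in the comment, and the synthetic samples are hypothetical.

```typescript
interface Sample {
  label: string;
  ms: number;
  ok: boolean;
}

// Times one async read (for example a query's get call) and records the outcome.
async function timed<T>(
  label: string,
  run: () => Promise<T>,
  samples: Sample[],
  now: () => number = () => performance.now(),
): Promise<T> {
  const start = now();
  try {
    const result = await run();
    samples.push({ label, ms: now() - start, ok: true });
    return result;
  } catch (err) {
    samples.push({ label, ms: now() - start, ok: false });
    throw err;
  }
}

// Nearest-rank percentile (0 < p <= 1) of successful latencies for one label.
function percentile(samples: Sample[], label: string, p: number): number | undefined {
  const values = samples
    .filter((s) => s.label === label && s.ok)
    .map((s) => s.ms)
    .sort((a, b) => a - b);
  if (values.length === 0) return undefined;
  const rank = Math.min(values.length, Math.max(1, Math.ceil(p * values.length)));
  return values[rank - 1];
}

// In an app: await timed("recentOrders", () => getDocs(recentOrdersQuery), samples);
// Here, synthetic latencies of 1..100 ms stand in for 100 runs of one query.
const samples: Sample[] = [];
for (let ms = 1; ms <= 100; ms++) samples.push({ label: "recentOrders", ms, ok: true });
console.log(percentile(samples, "recentOrders", 0.5), percentile(samples, "recentOrders", 0.95)); // 50 95
```

Comparing tail latencies such as the 95th percentile per query label tends to surface slow queries that averages hide.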

Another useful tool is the Cloud Monitoring API, which lets you access and export performance metrics for your Firestore databases. These include document read and write operations, document counts, storage usage, and network egress/ingress.

To enable and configure the Cloud Monitoring API for your project, use the Google Cloud console or CLI. You can then analyze performance metrics with the Google Cloud console or other third-party tools.

Best Practice 7: Test Different Query Strategies

Firestore provides a variety of query types to filter, sort, limit, and paginate your data. However, not all queries offer the same performance or scalability. Therefore, evaluate different query strategies and assess their performance metrics and trade-offs to optimize your application.

Consider the following query strategies:

- Simple queries: These queries filter or sort data using a single field or document ID. Typically fast and scalable, they may not be suitable for complex or dynamic data models.

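- Array-contains / array-contains-any / in / not-in queries: These queries filter on array values or match against a list of values using special operators. Used on their own, they are served by Firestore's automatic single-field indexes; combined with other filters or ordering, they may need composite indexes. They suit flexible or dynamic data models.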

- Composite queries: These queries filter or sort on multiple fields or document IDs. A query that combines a range filter or ordering with filters on other fields requires a composite index. Composite queries accommodate more intricate or dynamic data models, but creating and maintaining those indexes carries limits and costs.

- Collection group queries: These query every collection with a given ID anywhere in your database. They are especially useful when many collections share the same hierarchical structure, such as a comments subcollection under every post. Filtering or sorting them requires collection group indexes. They support hierarchical or nested data models but carry the same index creation and maintenance costs.
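The scope of a collection group query is easy to misjudge, so here is a small model of which documents it covers. It includes every document whose immediate parent collection has the given ID, at any depth, including a top-level collection with that ID. The inCollectionGroup function and the paths are hypothetical. The real query would be built with collectionGroup(db, "comments") in the modular Web SDK.

```typescript
// Returns true if the document at docPath would be included in a collection group
// query for collectionId: its immediate parent collection must have that ID.
function inCollectionGroup(docPath: string, collectionId: string): boolean {
  const segments = docPath.split("/");
  // Document paths alternate collection/document, so they have an even number of segments.
  return segments.length % 2 === 0 && segments[segments.length - 2] === collectionId;
}

const paths = [
  "posts/p1/comments/c1",
  "posts/p2/comments/c2",
  "events/e1/comments/c9",
  "comments/c0",
  "posts/p1",
  "users/u1/commentsArchive/x1",
];
console.log(JSON.stringify(paths.filter((p) => inCollectionGroup(p, "comments"))));
// ["posts/p1/comments/c1","posts/p2/comments/c2","events/e1/comments/c9","comments/c0"]
```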

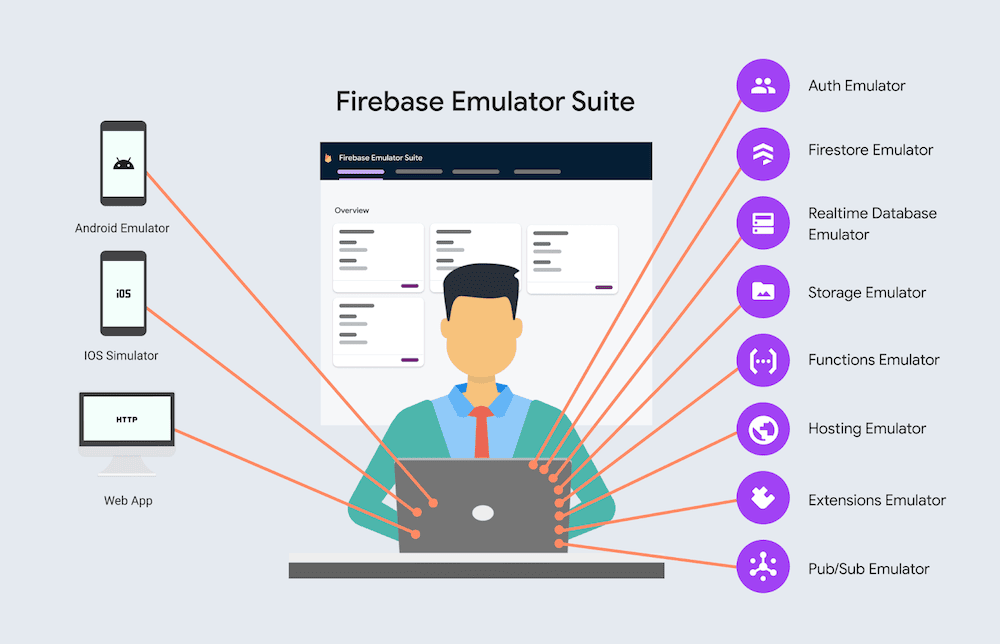

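To test and compare different query strategies, use the Firebase console or the Firebase Emulator Suite. The emulator is well suited to checking that each strategy returns the right data, but its latency doesn't reflect production. Measure performance against a real project instead, collecting metrics for your Firestore queries with the Firebase Performance Monitoring SDK or the Cloud Monitoring API.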

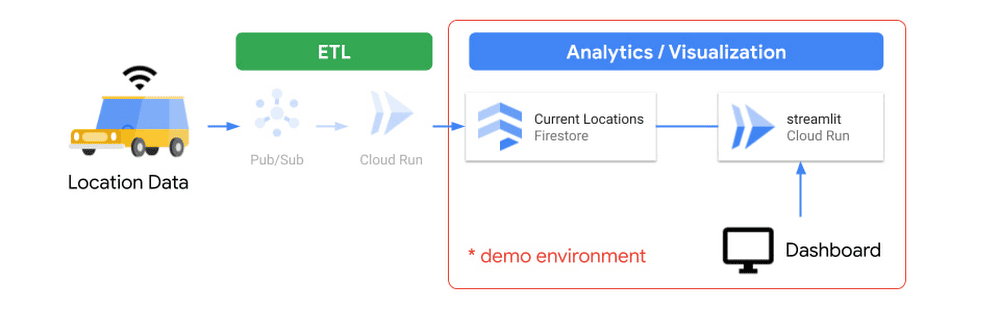

Best Practice 8: Leverage Real-Time Data Updates & Streaming In ETL & Data Pipelines

Firestore offers real-time data updates and streaming capabilities, allowing you to listen for changes in your data and automatically update your application UI or trigger actions based on those changes.

This can greatly improve user experience and enable real-time processing of data. Integrating these features into your ETL (Extract, Transform, Load) and data pipelines can lead to more efficient and responsive data processing workflows.

To leverage real-time data updates, use Firestore's onSnapshot() method to create a listener on a document or a query. The listener fires once with the current data and again every time the document or query results change, receiving the updated data each time.
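In a pipeline, a listener usually forwards only what changed. With the modular Web SDK, the callback can call snapshot.docChanges(), where each change has a type of "added", "modified", or "removed". The sketch below applies such changes to a downstream copy. The Change and Order shapes and the in-memory mirror are hypothetical stand-ins for a real target such as a warehouse table.

```typescript
type ChangeType = "added" | "modified" | "removed";

interface Change<T> {
  type: ChangeType;
  id: string;
  data?: T; // present for added and modified changes
}

// Applies one snapshot's changes to a downstream copy keyed by document ID.
function applyChanges<T>(mirror: Map<string, T>, changes: Change<T>[]): void {
  for (const change of changes) {
    if (change.type === "removed") {
      mirror.delete(change.id);
    } else if (change.data !== undefined) {
      mirror.set(change.id, change.data); // added and modified are both upserts
    }
  }
}

interface Order {
  status: string;
}
const mirror = new Map<string, Order>();

// The first snapshot reports every matching document as "added".
applyChanges(mirror, [
  { type: "added", id: "o1", data: { status: "new" } },
  { type: "added", id: "o2", data: { status: "new" } },
]);
// Later snapshots report only what changed.
applyChanges(mirror, [
  { type: "modified", id: "o1", data: { status: "shipped" } },
  { type: "removed", id: "o2" },
  { type: "added", id: "o3", data: { status: "new" } },
]);
console.log(JSON.stringify([...mirror])); // [["o1",{"status":"shipped"}],["o3",{"status":"new"}]]
```

Treating added and modified changes as upserts keeps the downstream copy correct even if a change is delivered again after a reconnect.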

Integrating Firestore's real-time capabilities into your ETL and data pipelines can help streamline your data processing workflows by:

- Minimizing the latency between data updates and their processing, as changes are immediately detected and acted upon.

- Providing a more responsive and efficient system, especially when dealing with time-sensitive or rapidly changing data.

- Reducing the need for periodic polling or scheduled batch processing, potentially lowering resource consumption and costs.

While real-time data updates and streaming can enhance your ETL and data pipelines, they may also introduce additional complexity, resource usage, and costs. Be mindful of the number of active listeners and the frequency of updates. Each document added to or changed in a listener's results is billed as a read, so excessive usage can hurt your application's performance and raise your Firestore bill.

And when leveraging real-time data updates and streaming in your ETL and data pipelines, carefully plan and implement your listeners to ensure optimal performance and avoid unnecessary costs.

Now that we know the Firestore best practices, let’s take a look at how you can use Estuary Flow to get the best results.

Using Estuary Flow With Google Firestore

Estuary Flow is a data integration platform that allows you to capture, transform, and materialize data from various sources into various destinations. One of the sources that Estuary Flow supports is Google Firestore.

You can use Estuary Flow to capture data from your Google Firestore collections into Flow collections.

You can then use Estuary Flow to transform and materialize your data from Flow collections into various destinations, such as Snowflake, BigQuery, Google Sheets, etc.

To use Estuary Flow with Google Firestore, you need to:

- Create a service account for your Google Firestore project and grant it the required permissions to access your Firestore data.

- Create a capture specification for your Google Firestore source. A capture specification defines which collections and fields you want to capture from Firestore and how you want to map them to Flow collections.

- Deploy your capture specification using the Estuary CLI or the Estuary web application. This will create a capture connector that will stream your Firestore data into Flow collections.

- Optionally, create a transformation specification for your Flow collections (Flow calls these transformations derivations).

- Create a materialization specification for your destination. A materialization specification defines which destination you want to send your data to and how you want to configure it.

- Deploy your transformation and materialization specifications using the Estuary CLI or the Estuary web application. This will create transformation and materialization connectors that will stream your transformed data to your destination.

Using Estuary Flow with Google Firestore can help you integrate and analyze your Firestore data with other sources and destinations.

For more details on how to use Estuary Flow with Google Firestore, please refer to the guide to create a Data Flow and the Estuary connector documentation for Google Firestore.

Conclusion

As you develop your Firestore-powered applications, consider how these best practices can help you achieve better performance and scalability. Incorporating these strategies will lead to a more robust and efficient application.

Don't forget to take advantage of our Estuary Flow platform, a data integration platform that works seamlessly with Google Firestore. Try the beta program of Estuary and explore its potential in enhancing your Firestore experience.

By combining these Firestore best practices with Estuary Flow, you can further optimize your data management and drive your application's success.
