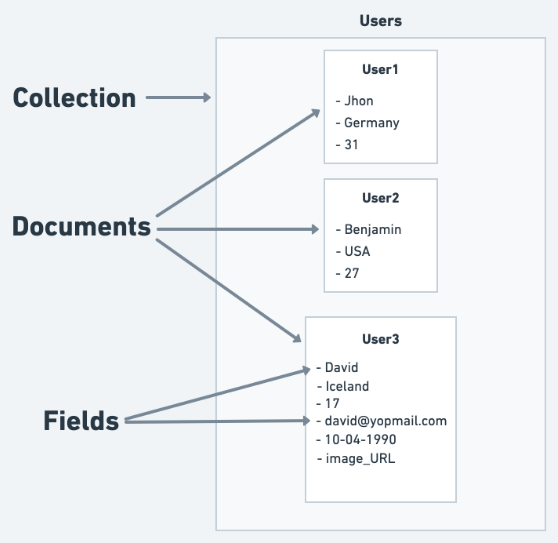

Firestore is the newer of the two NoSQL databases in Google’s Firebase platform, alongside the Realtime Database. It is known for its flexibility, scalability, and real-time capabilities, which make it a popular choice for developers who want to build powerful web and mobile apps quickly. Yet it's not without drawbacks. Firestore limitations can pose serious challenges for those seeking optimal database performance in their applications.

Understanding these limitations is crucial. By anticipating potential challenges and devising tailored workarounds for them, you can make well-informed decisions when designing database schemas for efficient and scalable data management.

The workarounds can also ensure that your Firestore database integrates seamlessly with your applications. Plus, they provide the perfect opportunity for you to shine as a developer.

In this article, we will discuss the main limitations of Firestore queries and records and provide practical workarounds for each. We will also discuss how to leverage Estuary Flow with Firestore to enhance your application's data processing capabilities.

By the end of this article, you will have effective strategies to work around major Firestore limitations and turn them into opportunities for query optimization and better database management.

Firestore Query Limitations & Their Workarounds

Firestore query limitations can pose challenges in specific scenarios like complex filtering, ordering data, and even combining multiple conditions.

Once you understand these limitations and have effective solutions for them, you can ensure optimized database performance and seamless query management in your apps. Let’s take a look at different Firestore query limitations and discuss practical workarounds to navigate these challenges and optimize query performance.

Inequality Filters & Compound Queries

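Firestore has certain limitations when it comes to using inequality filters and compound queries. Firestore has traditionally allowed range (inequality) filters on only one field per query, so you cannot combine range filters on different fields, such as age and score. For the same reason, you cannot use an inequality filter and a 'not equals' (!=) comparison on different fields in the same query. Recent Firestore releases have started to relax this restriction, so check the current documentation before reaching for a workaround.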

Impact On Applications & Use Of Firestore

These limitations can hinder the flexibility of querying in Firestore and make it difficult for developers to create complex queries to retrieve specific data. It can reduce performance and increase complexity in your application logic, especially when trying to sync Firestore data.

Workaround: Using Multiple Queries & Merging Results

To overcome this limitation, you can perform multiple queries and merge the results on the client side. Break down your complex query into simpler queries, each containing a single inequality filter, and then fetch the results from Firestore. Once you have the results, combine and filter them in your application code to achieve the desired output.
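The merge step itself needs no Firestore API. Below is a minimal sketch of the AND case. It assumes each query's results have already been converted to arrays of { id, ...fields } objects, for example with snapshot.docs.map(doc => ({ id: doc.id, ...doc.data() })). The helper intersectById and the sample data are hypothetical.

```javascript
/**
 * Returns the documents present in every result set (logical AND),
 * matching on document ID. Each argument is an array of { id, ...fields }.
 */
function intersectById(...resultSets) {
  if (resultSets.length === 0) return [];
  const [first, ...rest] = resultSets;
  // One ID set per remaining result set, for constant-time membership checks
  const idSets = rest.map(set => new Set(set.map(doc => doc.id)));
  return first.filter(doc => idSets.every(ids => ids.has(doc.id)));
}

// Hypothetical results: users with age > 25 (first query) and score < 50 (second query)
const olderThan25 = [{ id: 'u1', age: 30, score: 40 }, { id: 'u2', age: 41, score: 75 }];
const scoreUnder50 = [{ id: 'u1', age: 30, score: 40 }, { id: 'u3', age: 19, score: 12 }];
console.log(intersectById(olderThan25, scoreUnder50)); // [ { id: 'u1', age: 30, score: 40 } ]
```

When one condition is much more selective than the other, it is often cheaper to run only that query and apply the second condition with Array.prototype.filter. Firestore bills for every document each query returns, so fetching both result sets can cost more.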

Here's an example in JavaScript using the Firebase SDK:

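```javascript
// Suppose you want documents where "age" is greater than 25 and "score" is less than 50
const db = firebase.firestore();

// Perform two separate queries, each with a single range filter
const ageQuery = db.collection('users').where('age', '>', 25);
const scoreQuery = db.collection('users').where('score', '<', 50);

// Fetch both result sets in parallel
const [ageSnapshot, scoreSnapshot] = await Promise.all([ageQuery.get(), scoreQuery.get()]);

// Keep only the documents returned by both queries (intersection by document ID)
const scoreIds = new Set(scoreSnapshot.docs.map(doc => doc.id));
const results = ageSnapshot.docs
  .filter(doc => scoreIds.has(doc.id))
  .map(doc => ({ id: doc.id, ...doc.data() }));
```

You can find more information about Firestore queries and their limitations here.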

Limited OR & NOT Query Support

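Firestore has limited support for OR and NOT queries, which can make it difficult to create complex queries that include multiple conditions. Historically, Firestore did not support OR conditions across different fields. Its NOT support is limited to the != and not-in filters, which apply to a single field and do not match documents where that field is missing. Newer versions of the Firebase SDKs add an or() query operator, so check the current documentation for your SDK version.

In practice, this means you often cannot express "documents that meet either of two different field conditions", or more complex exclusions, in a single query. Queries against the offline cache are subject to the same limitations.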

Impact On Applications & Use Of Firestore

This limitation makes it harder to fetch data that meets multiple criteria. It can hurt application performance and increase the complexity of your code. When a single query can express only part of the desired condition, it returns an incomplete or overly broad result set that your code must then supplement or filter.

Workaround: Client-Side Filtering Or Multiple Queries

You can work around this limitation by either applying client-side filtering or running multiple queries and merging the results. In both cases, the full combination of conditions is evaluated in your application code. The merged result therefore matches the desired output even when Firestore cannot express the whole condition in one query.
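When you merge OR results, a document that matches more than one query would otherwise appear twice, so deduplicate the results by document ID. Below is a minimal sketch. It assumes the results have been converted to arrays of { id, ...fields } objects. The helper unionById and the task data are hypothetical.

```javascript
/**
 * Merges result sets (logical OR), keeping the first copy of each document ID.
 * A document can match more than one query (e.g. when the conditions are on
 * different fields), so deduplication matters.
 */
function unionById(...resultSets) {
  const byId = new Map(); // Map preserves insertion order
  for (const set of resultSets) {
    for (const doc of set) {
      if (!byId.has(doc.id)) byId.set(doc.id, doc);
    }
  }
  return [...byId.values()];
}

// Hypothetical results: tasks that are overdue OR assigned to "alice"
const overdue = [
  { id: 't1', overdue: true, assignee: 'bob' },
  { id: 't2', overdue: true, assignee: 'alice' },
];
const assignedToAlice = [
  { id: 't2', overdue: true, assignee: 'alice' },
  { id: 't3', overdue: false, assignee: 'alice' },
];
console.log(unionById(overdue, assignedToAlice).map(task => task.id)); // [ 't1', 't2', 't3' ]
```

You can apply a NOT condition that a query cannot express in the same way, by calling Array.prototype.filter on the merged array.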

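For example, suppose you want to query documents where "status" is either "active" or "pending". With the Firebase JavaScript SDK, you could perform two separate queries and merge the results. Because both conditions are on the same field, a single where('status', 'in', ['active', 'pending']) query also works in this particular case.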

```javascript
const db = firebase.firestore();

// Perform two separate queries
const activeQuery = db.collection('tasks').where('status', '==', 'active');
const pendingQuery = db.collection('tasks').where('status', '==', 'pending');

// Fetch both result sets in parallel, then merge them
const [activeSnapshot, pendingSnapshot] = await Promise.all([activeQuery.get(), pendingQuery.get()]);

const results = [];
activeSnapshot.forEach(doc => results.push(doc.data()));
pendingSnapshot.forEach(doc => results.push(doc.data()));

console.log(results);
```

No Support For Full-Text Search

Firestore has no native support for full-text search, which is a challenge when developers need to perform complex text searches within the database. This limitation is particularly restrictive when applications rely heavily on textual data. Examples include content-rich websites, social media platforms, and eCommerce applications where users need to search for specific products or information.

Impact On Applications & Use Of Firestore

This limitation can make it challenging to implement advanced search features in your application, leading to a suboptimal user experience and potentially requiring additional client-side processing to filter results based on text content.

Workaround: Using Third-Party Search Services (e.g., Algolia Or Elasticsearch)

To overcome this limitation, you can integrate your Firestore database with third-party search services like Algolia or Elasticsearch. These services offer powerful full-text search capabilities, allowing you to perform advanced text queries on your Firestore data.

By leveraging third-party search services, you can provide your users with robust search functionality and work around Firestore's limitation in supporting full-text searches. Additionally, integrating third-party services can be easy with a well-architected ETL pipeline.
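Whichever service you choose, the sync usually includes a small transform step. That step turns each Firestore document into a search record and decides whether to upsert or delete it. The sketch below assumes hypothetical product documents and a hypothetical list of indexed fields. It stops short of calling any search service's client library.

```javascript
// Assumption: which fields of a (hypothetical) product document to index.
const INDEXED_FIELDS = ['name', 'description', 'tags', 'price'];

/**
 * Converts a Firestore document into a flat search record. Map `id` to the
 * search service's document key (Algolia's objectID, Elasticsearch's _id).
 */
function toSearchRecord(docId, data) {
  const record = { id: docId };
  for (const field of INDEXED_FIELDS) {
    if (data[field] !== undefined) record[field] = data[field];
  }
  return record;
}

/**
 * Decides how to update the search index after a document changes.
 * `after` is the document's new data, or null if it was deleted.
 */
function toIndexOperation(docId, after) {
  return after === null
    ? { type: 'delete', id: docId }
    : { type: 'upsert', record: toSearchRecord(docId, after) };
}

const op = toIndexOperation('p42', {
  name: 'Trail shoe',
  description: 'Lightweight running shoe',
  tags: ['running'],
  price: 89,
  internalNotes: 'supplier B', // not indexed
});
console.log(op.record);
```

You would then send the resulting operation with the search service's own client. That could happen in a Cloud Function or an ETL pipeline that reacts to document changes. Leaving fields such as internal notes out of the record keeps the index small and avoids exposing data that users should not be able to search.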

Query Performance & Limitations On Large Datasets

Query performance in Firestore primarily depends on the number of documents a query returns rather than the size of the dataset queried. This means that even with an extensive dataset, the performance remains consistent as long as the number of returned documents stays relatively small.

However, large datasets can still introduce challenges. You may need to paginate results, and a single query that fetches many documents can respond slowly. For example, when building an eCommerce application with thousands of products, you might have difficulty displaying search results or product listings that involve a large number of documents.

Impact On Applications & Use Of Firestore

These limitations can affect the responsiveness and user experience of your application, especially when working with massive datasets. Slow queries can make your application less efficient. Because Firestore bills per document read, they can also increase your costs. Offline queries against the local cache are similarly affected.

Workaround: Denormalizing Data, Indexing, Or Pagination

To optimize query performance on large datasets, consider the following strategies:

- Denormalizing Data: To improve Firestore performance, you can denormalize data. This means duplicating the fields that are read together into the documents you query, instead of keeping them only in separate documents that must be looked up one by one. It reduces the number of documents and queries needed to render a view, at the cost of keeping the copies in sync when the source data changes.

- Indexing: Another useful technique is to make use of Firestore's indexing features. Firestore indexes individual fields automatically, but queries that combine filters and sort orders on multiple fields typically require a composite index. Defining that index lets those queries run efficiently.

- Pagination: You can also implement pagination using Firestore's limit method together with the startAfter or startAt cursor methods. This fetches a smaller number of documents at a time, reducing the amount of data transferred and improving response times.
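One common form of denormalization is copying the few fields a screen displays into the documents that screen queries. The sketch below assumes a hypothetical model in which posts store an authorId and the feed shows each author's name and avatar. It also shows the maintenance cost that comes with duplicated data.

```javascript
// Hypothetical model: posts reference an author by authorId, and the feed
// displays the author's name and avatar next to each post.
function denormalizePost(post, author) {
  // Copy only the fields the feed actually displays
  return { ...post, authorName: author.name, authorAvatarUrl: author.avatarUrl };
}

/**
 * The cost of denormalizing: when an author changes, every copy must be
 * updated. Returns { postId, patch } pairs to apply, e.g. with batched writes.
 */
function authorFanOutUpdates(posts, author) {
  return posts
    .filter(post => post.authorId === author.id)
    .map(post => ({
      postId: post.id,
      patch: { authorName: author.name, authorAvatarUrl: author.avatarUrl },
    }));
}

const author = { id: 'a1', name: 'Ada', avatarUrl: 'https://example.com/ada.png' };
const post = denormalizePost({ id: 'p1', authorId: 'a1', title: 'Hello' }, author);
console.log(post.authorName); // Ada
```

With the author fields copied into each post, the feed can be rendered from a single paginated query on posts, with no extra author read per post.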

Here's an example of paginating a query in JavaScript using the Firebase SDK:

```javascript
const db = firebase.firestore();
const pageSize = 10;

// Fetch the first page of documents
const firstPageQuery = db.collection('posts').orderBy('timestamp').limit(pageSize);
const firstPageSnapshot = await firstPageQuery.get();

// Fetch the second page, starting after the last document of the first page
// (if the first page is empty, there is no next page to fetch)
const lastDocumentFromFirstPage = firstPageSnapshot.docs[firstPageSnapshot.docs.length - 1];
const secondPageQuery = db.collection('posts')
  .orderBy('timestamp')
  .startAfter(lastDocumentFromFirstPage)
  .limit(pageSize);
const secondPageSnapshot = await secondPageQuery.get();
```

Now that you are familiar with the workarounds you can use to improve the performance of your Firestore queries, let’s discuss Firestore record limitations and how to work around them.

Firestore Records Limitations & Their Workarounds

Firestore record limitations are constraints that developers may encounter when working with individual Firestore documents and their properties. These limitations can affect database performance, data integrity, and the overall efficiency of an application.

By understanding these constraints, developers can make informed decisions when designing their database structure and implementing data retrieval strategies.

Let’s discuss 4 major Firestore record limitations and explore practical workarounds to overcome these challenges. By mastering these workarounds, developers can maintain optimal database performance and data integrity, enabling them to create efficient and scalable applications using Firestore.

Document Size & Field Limitations

Firestore has specific limitations when it comes to document size and the number of fields in a document. Each document can have a maximum size of 1 MiB (1,048,576 bytes) including its field names, values, and any nested maps or arrays. Additionally, a document cannot have more than 20,000 fields including any nested fields within maps.

Impact On Applications & Use Of Firestore

These limitations can affect how you structure and store data in your Firestore database. If a write or update would exceed the maximum document size or field count, Firestore rejects it with an error. Working around this adds complexity to your application's data management and, if not planned for, can cause performance and scalability issues.

Workaround: Splitting Data Across Multiple Documents Or Collections

To overcome these limitations, consider splitting your data across multiple documents or collections. Here are a few strategies:

- Split Large Documents: Break down large documents into smaller ones, storing related data in separate documents or subcollections. This can help you stay within the document size and field limitations while still maintaining a logical data structure.

- Use References: When splitting data across multiple documents, you can use references to establish relationships between them. This allows you to retrieve related data by following the references, without needing to store all the data in a single document.

- Subcollections: Utilize subcollections to store related data within a parent document. This can help you better organize your data and avoid exceeding document size or field limitations.

By employing these workarounds, you can effectively manage your Firestore records while staying within the document size and field limitations, ensuring a well-structured and performant database for your applications.
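As a sketch of splitting a large document, the helper below breaks a long array, such as a hypothetical chat log, into chunks. Each chunk can be stored as a separate document in a subcollection, for example chatLogs/{logId}/chunks/{index} (a hypothetical path). The helper estimates size from the UTF-8 length of the JSON encoding. That is only an approximation of how Firestore measures document size, so the default leaves headroom below the 1 MiB limit.

```javascript
// Approximate encoded size in bytes: UTF-8 length of the JSON encoding.
// NOT Firestore's exact storage-size formula; use it for planning only.
const utf8Length = value => new TextEncoder().encode(JSON.stringify(value)).length;

/**
 * Splits `items` into chunks whose JSON encoding stays within maxBytes,
 * so each chunk can be written as its own document.
 */
function chunkBySize(items, maxBytes = 900 * 1024) {
  const chunks = [];
  let current = [];
  let currentBytes = 2; // the surrounding "[]"
  for (const item of items) {
    const itemBytes = utf8Length(item) + 1; // +1 for the separating comma
    if (itemBytes + 2 > maxBytes) {
      throw new RangeError('a single item exceeds maxBytes; split it further');
    }
    // Start a new chunk when adding this item would exceed the budget
    if (current.length > 0 && currentBytes + itemBytes > maxBytes) {
      chunks.push(current);
      current = [];
      currentBytes = 2;
    }
    current.push(item);
    currentBytes += itemBytes;
  }
  if (current.length > 0) chunks.push(current);
  return chunks;
}
```

The byte count over-estimates slightly (it counts one comma per item), so every chunk's encoding is guaranteed to fit within maxBytes.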

Write, Update, & Delete Rate Limitations

Firestore imposes certain rate limitations on writing, updating, and deleting documents. Each document can have a maximum sustained write rate of 1 write per second. While this is not a hard limit, consistently exceeding this rate can result in increased latency and contention errors. Additionally, transaction and batch writes have a limit of 500 writes per operation.

Impact On Applications & Use Of Firestore

These rate limitations can affect the performance and responsiveness of your application, particularly in scenarios where you need to write or update a large number of documents concurrently or frequently. In cases where you have high write, update, or delete loads, you may experience increased latency or errors.

Workaround: Batching Writes, Implementing Exponential Backoff, Or Using Cloud Functions

To work around these limitations, consider employing the following strategies:

- Batching Writes: Use batch writes to group multiple write operations into a single atomic operation. This can help reduce the number of individual write requests and improve performance. However, remember that each batch is still limited to 500 writes.

- Implementing Exponential Backoff: If you experience contention errors or increased latency, implement an exponential backoff strategy. This means retrying failed write operations with a delay that grows after each attempt, which can help alleviate contention and improve overall performance.

- Using Cloud Functions: Leverage Firebase Cloud Functions to offload some of the write, update, and delete operations from your client-side application. Moving this logic server-side lets you queue, aggregate, or throttle writes in one place, which can help distribute the load. Note that server-side writes are still subject to Firestore's per-document rate limits.
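The batching strategy can be sketched as a helper that splits a list of writes into groups of at most 500 and commits each group as its own batch. It uses the namespaced SDK's db.batch(), batch.set(), and batch.commit(). Representing the writes as an array of { ref, data } pairs is an assumption of this sketch.

```javascript
// Per-commit write limit for batches, as described above.
const MAX_WRITES_PER_BATCH = 500;

/** Splits an array into consecutive groups of at most `size` items. */
function chunk(items, size = MAX_WRITES_PER_BATCH) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError('size must be a positive integer');
  }
  const groups = [];
  for (let i = 0; i < items.length; i += size) {
    groups.push(items.slice(i, i + size));
  }
  return groups;
}

/**
 * Commits `writes` (an array of { ref, data } pairs) in sequential batches.
 * Each batch is atomic; the sequence of batches as a whole is not.
 */
async function commitInBatches(db, writes) {
  for (const group of chunk(writes)) {
    const batch = db.batch();
    for (const { ref, data } of group) {
      batch.set(ref, data);
    }
    await batch.commit();
  }
}

// Usage with the namespaced SDK (not executed here):
// await commitInBatches(db, users.map(u => ({ ref: db.collection('users').doc(u.id), data: u })));
```

Each batch is atomic on its own, but the sequence of batches is not. If the third commit fails, the first two have already been applied, so design the writes to be safe to retry.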

By adopting these workarounds, you can better manage write, update, and delete operations in Firestore, ensuring a more performant and responsive application while staying within the imposed rate limitations.
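Exponential backoff can be sketched as a small retry wrapper. This version adds "full jitter" (a random delay between zero and the exponential cap) so that many clients do not retry in lockstep. The set of retryable errors is an assumption based on the client SDK's string error codes, such as 'aborted' and 'unavailable'. Adjust it for your SDK.

```javascript
/**
 * Delay before retry number `attempt` (0-based): a random value in
 * [0, min(maxMs, baseMs * 2^attempt)), i.e. exponential backoff with full jitter.
 */
function backoffDelay(attempt, { baseMs = 100, maxMs = 10000, random = Math.random } = {}) {
  const capped = Math.min(maxMs, baseMs * 2 ** attempt);
  return Math.floor(random() * capped);
}

const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Assumption: these client SDK error codes indicate transient failures worth retrying.
function isTransientError(err) {
  const code = err && err.code;
  return code === 'aborted' || code === 'resource-exhausted' || code === 'unavailable';
}

/** Runs `operation`, retrying transient failures with exponential backoff. */
async function withBackoff(operation, { maxAttempts = 5, isRetryable = isTransientError, ...delayOpts } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await operation();
    } catch (err) {
      // Give up on the last attempt or on errors that retrying will not fix
      if (attempt + 1 >= maxAttempts || !isRetryable(err)) throw err;
      await sleep(backoffDelay(attempt, delayOpts));
    }
  }
}
```

Pass a function that builds the operation from scratch on each call, for example one that creates and commits a new batch, because a WriteBatch cannot be reused after commit() has been called.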

Limited Support For Complex Data Types

Firestore has limited support for complex data types such as custom objects or complex nested structures. It supports types such as strings, numbers, booleans, timestamps, geopoints, references, arrays, and maps. However, the JavaScript SDK does not accept custom class instances directly, and an array cannot directly contain another array. Storing and retrieving such structures therefore requires additional processing or transformation.

Impact On Applications & Use Of Firestore

This limitation can affect how you model and store your data in Firestore. Storing complex data types directly might not be feasible, requiring you to devise alternative methods for representing and managing your data. This can lead to increased complexity in your application logic and affect overall performance.

Workaround: Serializing Complex Data Types Or Using References To Other Documents

To overcome the limitation of storing complex data types in Firestore, consider the following workarounds:

- Serializing Complex Data Types: Serialize your complex data types into a format supported by Firestore, such as a JSON string. When retrieving the data, you can deserialize it back into the original complex data type. This approach lets you store complex data structures as simple strings that are easy to manage in Firestore, although Firestore cannot query or index the contents of a serialized string.

- Using References To Other Documents: Instead of storing complex data structures in a single document, split the data across multiple documents or collections and use references to link them together.

This approach allows you to model complex relationships between your data while maintaining the simplicity and performance benefits of Firestore.
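As a sketch of the serialization approach, the converter below stores a hypothetical Puzzle class, whose grid is an array of arrays, by encoding the grid as a JSON string. The object follows the toFirestore/fromFirestore shape that the SDK's withConverter() method expects.

```javascript
// Hypothetical model: a puzzle whose grid is an array of arrays.
// Firestore cannot store an array directly inside another array.
class Puzzle {
  constructor(title, grid) {
    this.title = title;
    this.grid = grid;
  }
}

// An object with toFirestore/fromFirestore, the shape withConverter() expects.
const puzzleConverter = {
  toFirestore(puzzle) {
    // Serialize the nested structure into a plain string field
    return { title: puzzle.title, gridJson: JSON.stringify(puzzle.grid) };
  },
  fromFirestore(snapshot, options) {
    const data = snapshot.data(options);
    // Deserialize back into the original class and nested arrays
    return new Puzzle(data.title, JSON.parse(data.gridJson));
  },
};
```

With the namespaced SDK you would attach it with db.collection('puzzles').withConverter(puzzleConverter). After that, set() accepts Puzzle instances and snapshot.data() returns them. Keep in mind that Firestore cannot query inside the gridJson string.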

By adopting these workarounds, you can effectively store and manage complex data types in Firestore, ensuring optimal performance and flexibility in your application's data modeling and retrieval.

Transaction & Consistency Limitations

Firestore enforces transaction and consistency limitations that may affect how you handle data updates in your application. While it provides transactional support for single-document and multi-document updates, transactions are limited to a maximum of 500 documents.

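Additionally, client-side transactions fail while the device is offline and must perform all of their reads before any writes. The client SDKs also retry a transaction automatically when a document it read is changed by another client. Coordinating updates across many clients, or across operations that cannot fit in one transaction, therefore still requires care in your application logic.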

Impact On Applications & Use Of Firestore

These limitations can affect your application's data integrity and consistency, particularly when performing complex updates involving multiple documents, collections, or clients. It can increase complexity in your application logic to ensure data consistency and integrity.

Workaround: Using Batched Writes, Distributed Transactions, Or Firestore Security Rules

To address transaction and consistency limitations, consider the following workarounds:

- Using Batched Writes: For operations that write multiple documents but do not need to read their current values first, use batched writes to group the writes into a single atomic operation. Batched writes can improve performance and reduce the complexity of your application logic.

- Implementing Distributed Transactions: For complex operations involving multiple collections or clients, you can implement custom distributed transaction logic in your application or use Cloud Functions to enforce data consistency and integrity. For example, using Cloud Functions, you can create a function that listens for document updates and ensures consistency across related documents.

- Using Firestore Security Rules: To ensure data consistency and integrity, use Firestore Security Rules to validate incoming write operations, preventing unauthorized updates or updates that would violate your application's data constraints. For example, you can use Security Rules to enforce that a document's updatedAt field is always updated whenever other fields are modified.
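For updates that must read current values before writing, transactions are the right tool. Below is a minimal sketch using the namespaced SDK's db.runTransaction(), transaction.get(), and transaction.update(). The account documents and their balance field are hypothetical.

```javascript
/**
 * Moves `amount` credits between two hypothetical account documents atomically.
 * All reads happen before any writes, as Firestore transactions require.
 */
async function transferCredits(db, fromRef, toRef, amount) {
  return db.runTransaction(async transaction => {
    // Reads first
    const fromSnap = await transaction.get(fromRef);
    const toSnap = await transaction.get(toRef);
    if (!fromSnap.exists || !toSnap.exists) throw new Error('account not found');

    const fromBalance = fromSnap.data().balance;
    if (fromBalance < amount) throw new Error('insufficient funds');

    // Then writes; they are committed together or not at all
    transaction.update(fromRef, { balance: fromBalance - amount });
    transaction.update(toRef, { balance: toSnap.data().balance + amount });
  });
}
```

The client SDKs may run the transaction callback more than once when documents change concurrently, so keep it free of side effects such as sending notifications.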

By incorporating these workarounds in your application, you can effectively manage transaction and consistency limitations in Firestore, ensuring your data remains accurate and consistent across various operations and clients.

Now that we are familiar with Firestore record limitations, let’s discuss how you can make the most of Estuary Flow with Firestore.

Leveraging Estuary Flow With Firestore For Optimum Results

Estuary Flow is our DataOps platform. It offers seamless integration with Google Firestore and can significantly enhance how you work with Firestore.

Simply put, Flow lets you avoid Firestore limitations by syncing your data with a different system, one that doesn’t share these query and record limitations. For example, you can connect Firestore to Elasticsearch for full-text search, as discussed above. You can also connect to a data warehouse like Snowflake or BigQuery for more effective analysis.

By combining the best practices of DevOps, Agile, and data management, Estuary Flow streamlines data workflows, reduces errors, and improves overall efficiency. Flow offers several advantages over its competitors.

Let’s take a closer look at how Estuary Flow can assist developers when working with Firestore.

Streamlined Data Pipeline Management

Estuary Flow enables developers to create, manage, and monitor data pipelines with ease. By automating data ingestion, transformation, and integration tasks, Estuary Flow ensures that Firestore data is consistently up-to-date, accurate, and available for analysis in your other data storage systems.

This results in a more efficient data management process and allows developers to focus on application development.

Enhanced Data Quality & Consistency

With built-in data validation, transformation, and enrichment features, Estuary Flow helps maintain data quality and consistency when moving data out of Firestore.

By automatically detecting and correcting errors, Estuary Flow reduces the risk of data inconsistencies and ensures that the data you export from Firestore delivers reliable and accurate insights to users.

Faster Data Integration & Synchronization

Estuary Flow provides pre-built connectors and integrations for various data sources which makes it easier to synchronize data between Firestore and other systems in real time. This allows developers to quickly set up data pipelines and integrations, reducing the time and effort required to build and maintain custom connectors.

Improved Data Security & Compliance

Estuary Flow offers robust data security and compliance features, including data encryption, access controls, and auditing. These help ensure that sensitive data from Firestore is protected and complies with relevant regulations.

Conclusion

Understanding Firestore limitations unlocks the full potential of Firestore in your applications. By overcoming these limitations through effective workarounds, you can optimize your Firestore usage and create powerful applications that cater to your users' needs. These workarounds can also help you improve the performance, flexibility, and scalability of your Firestore-connected applications.

If you want to take things one step further, you can transfer data between Firestore and other data stores by using real-time data integration solutions like Estuary Flow. This way, you can carry out advanced data processing and analysis without being constrained by Firestore's query and record limitations.

If you're intrigued by the potential of this approach, you can build a pipeline for free today. With its no-code GUI-based interface, you'll have your very own real-time data streaming pipeline up and running in no time, with a real-time connection to Firestore.
