ETL vs ELT: Key Differences, Benefits, and When to Use Each

Discover the key differences in ETL vs ELT, including benefits, use cases, and how to choose the right data integration method for your business.

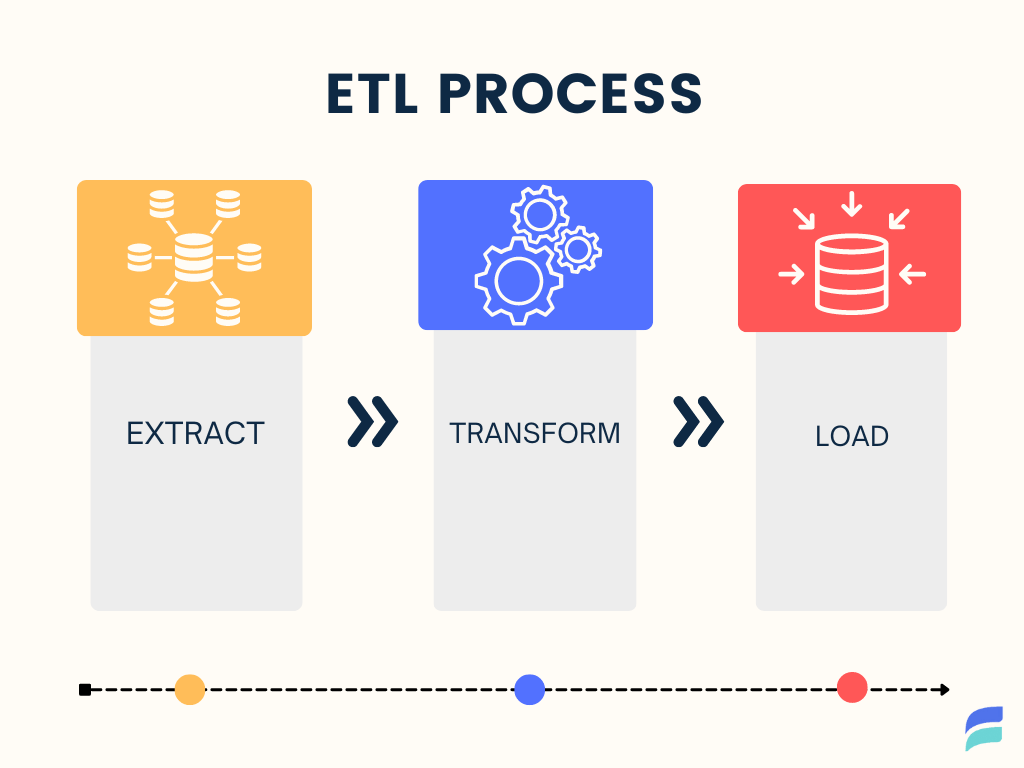

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two data integration methods that move data from multiple sources to a central destination. The main difference lies in the sequence of steps involved in processing the data:

- ETL transforms data before it’s loaded into storage, ensuring clean and structured data.

- ELT loads data first, allowing for flexible transformations after the data is stored.

While both approaches are used for data integration, they differ in scalability, speed, and cost, depending on your data needs.

Key Differences Between ETL and ELT:

- ETL: Data is transformed before loading, which ensures data quality but may introduce latency.

- ELT: Data is loaded first, enabling faster access, but raw data may require more storage and post-load transformation costs.

In this guide, we’ll explore:

- What ETL and ELT Are: A breakdown of each process and its role in data pipelines.

- ETL vs. ELT: Practical advantages, disadvantages, and real-world use cases.

- Hybrid Solutions: Learn how combining ETL and ELT can offer flexible, powerful data pipelines.

What is ETL (Extract, Transform, Load)?

ETL is a three-steps process of a data integration mechanism (aka a data pipeline):

- Extract. Data is extracted from multiple sources — APIs, applications, webhooks, sensors, etc.

- Transform: Data is formatted, structured, enriched, or otherwise modified for reporting and answering analytical questions.

- Load: Transformed data is loaded into a final storage platform, such as a physical database or cloud storage.

In the ETL process, the order of these steps is crucial. Transformations must happen before loading to ensure that only high-quality, structured data reaches your final destination, ready for analysis and reporting.

How ETL Works (with Example)

Suppose you need to ingest data into a data warehouse like Snowflake, Amazon Redshift, Google BigQuery for analysis. Once in the data warehouse, your team plans to perform analysis. There’s a data science project planned and your growing squad of analytics engineers is eager to explore the data using tools like dbt.

However, the data you're bringing in might require some preparation before it's ready for analysis. It could be loosely structured or completely unstructured. Think of it as a jumble of information: amorphous collections of user data from Firestore, or a deluge of raw IOT sensor data streaming in through Kafka.

This raw data often needs a makeover. It's not neatly described by a schema, nor is it in a readily usable format. In ETL, this preparation stage is crucial. Data cleansing tasks like handling missing values, removing duplicates, and correcting errors ensure the data is accurate and reliable. Then comes the data transformation process—reshaping it into a structured format, like a table. You might also need to join multiple datasets or trim away unnecessary information to create a cohesive dataset.

In an ETL approach, you would utilize a processing engine to handle these data cleansing and transformation tasks before loading the data into your chosen warehouse. That way, as soon as the data lands, your team can be assured it’s usable for analysis.

Advantages of ETL

ETL is an older method than ELT. That’s because in the early days of data integration, its advantages were non-negotiable. And they’re still incredibly important today. While we'll focus on the context of data warehouses (a common ETL destination), these advantages extend to other data storage systems as well.

- Protects the destination system from corrupt or unusable data. ETL pipelines will almost always transform messy data into the correct form. If they can’t, they’ll usually halt, keeping the mess outside the warehouse.

- Guarantees data in the warehouse is ready for use. Often, multiple teams rely on a central source of truth to run analyses across a company. With ETL, there’s no potential for confusion about what data is clean and ready for use (it all is!)

- Saves data warehouse storage and compute resources. When you pre-aggregating and filter data, less of it needs to be stored. And executing the transformations in a separate environment saves data warehouse query costs. Sometimes, you can find a more cost-effective way to transform data outside the warehouse.

Disadvantages of ETL

- Hard to scale. In traditional data pipeline architectures, transformations don’t perform well at high volume.

- Adds latency. Unless you’re using a sophisticated on-the-fly transformation (more on that in a bit), transformations always extend the data’s time en route to the destination. Factor in the struggling performance at scale, and this latency increases.

- Limits analytical possibilities. Transformations like joins, filters, and aggregations, make some operations impossible that would be possible with the raw data.

ETL Use Cases and Examples

You’re likely to encounter traditional ETL when…

- The incoming data is highly unstructured, and the final data format is certain. For example, you’re using a webhook to collect user data from several online store, and you know your analysis team needs to perform standard marketing research.

- Latency isn’t a big concern. For example, you only update your models weekly and use them to perform long-term planning, not instant decision-making.

- Data warehouse governance is strict. You’re an enterprise with important data management standards. Every dataset in your warehouse must have a schema that meets several criteria.

Not all organizations fall into these categories. That’s why, as the rise of cloud computing unlocked unlimited scaling and transformation demands got more complex, engineering teams came up with a new alternative.

What is ELT (Extract, Load, Transform)?

ELT takes those same three data integration steps and flips them.

- Extract. Data is extracted from multiple sources — APIs, applications, webhooks, sensors, etc.

- Load: Data is loaded into the storage platform: often a cloud data lake, which has a lenient structure and cheap scaling.

- Transform: Data is formatted and structured within the data lake as needed.

How ELT Works (with Example)

Consider training a machine learning model on a massive amount of social media data from various platforms. While you don’t have a rigid schema for that data, you know more or less what to expect. The data can include media attachments and you plan to store it in a cloud data lake.

In this case, your main priority is to make a huge volume of data available for analysis fast. And you want the data in its raw form for your model. You can always transform it later from the data lake for other purposes.

Advantages of ELT

The main advantages of ELT are:

- Fast and easy loading. No need to worry about engineering a fast transformation process before loading. You can pipe the data straight into storage and get access immediately.

- One less staging area. Because the transformations happen on top of your storage, there’s no need for a separate staging area. This simplifies your architecture.

- Unlimited possibilities for your data. You have the data in its purest form. Without a pre-load transform, you haven’t given up any options for future transformations and analysis.

Disadvantages of ELT

- Risk of creating a “data swamp.” Sometimes, the unstructured nature of the raw data makes it impossible to parse, extract, or query — you may even forget what you have in storage. Such a pool of raw but ultimately unusable data is often called a “data swamp.”

- Post-load transforms can be expensive. This depends on the data and storage system you’re using. But in some cases, running transformations on stored raw data in a platform like a data warehouse can get pricey. This is especially true if you find your team repeating the same basic transformations over and over.

ELT Use Cases and Examples

You’ll likely run into ELT when…

- The source and destination system are the same or the source is highly structured. For example, moving data between two SQL databases.

- Someone’s performing big data analytics.

- The destination is a less-structured storage type, like a NoSQL database.

Key Differences Between ETL and ELT

Use cases aside, let’s take a look at the tangible differences between ETL and ELT processes and components.

Order of Operations: ETL vs. ELT

In ETL, transformation occurs before loading the data into storage. In ELT, transformation happens after data is loaded. The other differences are byproducts of this distinction.

Data Processing: ETL vs. ELT Approaches

In an ETL pipeline, processing happens before loading. This means that the processing component is completely independent of your storage system. ETL transformation can be batch or real-time. Either way, it needs its own staging area, which you either build or get from an ETL vendor.

Common processing agents include Apache Spark and Flink. These are powerful systems, but they can be expensive and require experienced engineers to operate. Alternatively, an ETL vendor might provide you with processing tools.

By contrast, ELT processing happens on top of the data storage platform — the data warehouse or data lake. This simpler architecture doesn’t require a staging area for transformations, and you can transform data with a much simpler method, SQL.

Often in ELT, what we call operational transformations (joins, aggregations, and cleaning — the “T” in ELT) happen at the same time as analytical transformations (complex analysis, data science, etc). In other words, you’re adding a prerequisite step to any analytical workflow, but if you set this up well, it can be fairly straightforward for analysts to do.

The “T” in ELT can come in other forms, too. For instance, you might clean up data with a pre-computed query and use the resulting view to power analysis. Be careful, though: this can get expensive in a data warehouse environment!

Data Storage Requirements: ETL vs. ELT

ETL usually requires less data storage, and offers you more control and predictability over the amount of data stored. That’s why ETL was the go-to method in the days before cloud storage. ETL is designed for more traditional, rigid data storage that will only accept data adhering to a schema. Traditional relational databases and data warehouses are common storage systems, but more modern, flexible data storage systems can be used, too.

ELT requires a large, highly scalable storage solution, so cloud storage is pretty much the only option. It must also be permissive and flexible. Data lakes, certain warehouses, and hybrid architectures are popular in ELT pipelines.

ETL vs ELT: Side-by-Side Comparison

| Feature | ETL | ELT |

|---|---|---|

| Order of Operations | Extract -> Transform -> Load | Extract -> Load -> Transform |

| Transformation | Before loading, in a separate processing environment. | After loading, within the data warehouse or data lake itself. |

| Data Processing | Often batch-oriented, may be real-time. Requires a staging area, can be complex and expensive to manage. | Typically real-time or near-real-time. Leverages the processing power of the data warehouse, often using SQL. |

| Data Storage | Designed for structured data stores like relational databases and traditional data warehouses. | Suited for flexible, scalable storage like cloud data lakes and modern data warehouses. |

| Schema Flexibility | Strict adherence to schemas, data must be transformed to fit before loading. | More flexible schema requirements, can handle unstructured and semi-structured data. |

| Scalability | Less scalable, transformations can be a bottleneck. | Highly scalable, leverages the scalability of cloud storage and processing. |

| Latency | Can introduce latency due to pre-load transformations. | Typically low latency, as data is loaded immediately and transformed later. |

| Cost | Can be expensive due to separate processing environment and storage requirements. | Can be cost-effective if the data warehouse or data lake is optimized for transformations. |

| Use Cases | Ideal for structured data with well-defined schemas and when data warehouse resources are limited. | Suitable for big data analytics, unstructured data, and when flexibility and speed are paramount. |

| Examples | Webhook data collection for marketing analysis, updating models weekly for long-term planning. | Machine learning model training on social media data, data transfer between SQL databases. |

When to Choose ETL vs. ELT

Unsure whether ETL or ELT is the right choice? Go through this quick cheat sheet.

1. Can you store data in the cloud?

- No? You'll likely need to use ETL or explore on-premise ETL solutions.

- Yes? Both ETL and ELT are options.

2. Is your data highly structured?

- Yes? ETL is a natural fit.

- No? Consider ELT, especially if your data is unstructured or semi-structured.

3. How quickly do you need your data?

- Real-time or near-real-time? ELT might be a better choice due to its faster loading.

- Batch processing is okay? Both ETL and ELT can work.

4. Do multiple teams with varying needs use the data?

- Yes? ELT allows for more flexible transformations later, reducing the risk of conflict.

- No? ETL can still work if transformations are carefully designed.

5. What’s your budget?

Consider the costs of:

- Cost of storage and querying in the storage system (ELT).

- Whether you’re using a pipeline vendor (ELT or ETL).

- Cost of the transformation engine of choice (ETL).

By answering these questions thoughtfully, you'll be well on your way to choosing the right data integration approach for your organization. Remember, there's no one-size-fits-all answer, and hybrid solutions like Estuary Flow can offer the best of both worlds!

Beyond ETL and ELT: Modern Data Integration

Tired of the ETL vs. ELT debate? You're not alone. The rigid dichotomy of these traditional approaches often forces compromises and limitations. Fortunately, a new wave of data integration tools is breaking the mold, offering a more flexible and powerful solution.

Let’s use Estuary Flow as an example:

This modern platform combines the best of both worlds, providing real-time data ingestion and transformation without the complexity or cost of traditional ETL tools. Here's what sets Estuary Flow apart:

- Data is captured from a source using a connector (E).

- A basic descriptive schema is applied, but the data isn’t transformed.

- Captured data is stored in Flow collections, real-time data lakes in cloud storage (L).

- Optionally, you can add transformations like joins and aggregations (T).

- Collections are materialized to the destination. This is a two-step operation:

- Data is transformed to fit the schema of the destination system, and basic reductions are applied automatically (T).

- Data is incrementally loaded to the destination (L).

The final acronym for Flow is EL(T)TL, though I’m sure some of my engineer colleagues would argue that’s an oversimplification.

There are also multiple ways to look at this process. From the perspective of the Flow data collection, it’s ELT. But from the perspective of the final destination, it’s ETL.

Whatever it is, by going beyond the simple dichotomy of ETL vs ELT, Flow proves that it’s possible to create a data pipeline that:

- Transforms and loads in real-time.

- Delivers orderly, analysis-ready data.

- Is affordable and intuitive to use.

You can sign up for a free trial in the Flow web application.

Conclusion

There are endless possibilities for data integration architectures. Two major methods are ETL and ELT.

ETL (extract, transform, load) prioritizes orderliness over flexibility. It encourages more efficient storage but can limit the possibilities for analysis. ELT (extract, load, transform) prioritizes flexibility over orderliness. It allows full freedom for analysis and easy scaling but can cause major data governance issues if not handled perfectly.

The best choice depends on your specific needs and resources. Consider factors like data type, storage capabilities, budget, and the desired speed of analysis.

Many modern data integration platforms find a middle ground. Hopefully by now, you have some ideas for how to strike a balance in your architecture. If you’re looking for some inspiration, check out Estuary Flow’s docs or check out the code — we develop in the open.

Author

Popular Articles