Do you need to leverage your data stored in DynamoDB for real-time analytics? You might have considered exporting DynamoDB data to a data warehouse. The challenge is most connectors to DynamoDB aren’t real-time.

The best solution is to implement Change Data Capture (CDC) for DynamoDB. CDC captures and synchronizes changes from the DynamoDB tables, enabling replication to a destination in near real-time.

This comprehensive CDC guide for DynamoDB explains your options for how to implement an effective DynamoDB CDC solution and keep your destination up to date.

What is DynamoDB?

DynamoDB is Amazon’s fully managed NoSQL database service. It supports document-based and key-value data. This makes it suitable for a wide variety of use cases for modern, enterprise-grade applications.

DynamoDB is known for its single-digit millisecond performance, limitless scalability, built-in availability, durability, and resiliency across globally distributed applications.

Here are a few key features of DynamoDB:

- Highly Available Caching: DynamoDB Accelerator (DAX) is a fully managed in-memory caching service designed for DynamoDB. It can deliver up to 10x faster read performance, even at millions of requests per second. DAX handles the complex work of adding in-memory acceleration to your DynamoDB tables, so you do not have to manage cache invalidation yourself.

- Active Data Replication: Global tables in DynamoDB enable multi-active data replication across selected AWS regions, ensuring 99.999% availability. This multi-active setup ensures high performance and enables local data access for globally distributed applications.

- Enhanced Security: DynamoDB’s Point-in-Time Recovery (PITR) helps safeguard your tables against accidental write or delete operations. With PITR enabled, DynamoDB backs up table data continuously and lets you restore it to any point within the past 35 days.

- Cost Effectiveness: For infrequently accessed data, the Amazon DynamoDB Standard-IA (Standard-Infrequent Access) table class can reduce costs by up to 60%. This makes DynamoDB a cost-effective option for tables where storage is the dominant cost.

Why Implement CDC for DynamoDB?

Here are a few reasons to implement CDC for DynamoDB:

- Real-time Data Integration: CDC facilitates real-time integration of DynamoDB data with other systems, applications, and data warehouses. This integration helps you keep operational data in sync and provides near real-time insights and monitoring, enabling informed decision-making based on the most current data.

- Minimal Load on DynamoDB: Unlike batch processing, which can be resource-intensive, CDC imposes minimal additional load on DynamoDB. This helps maintain performance and reduce the operational impact on DynamoDB.

- Automate Backfilling: Most CDC technologies backfill historical data by performing a full snapshot. Some technologies perform incremental snapshots while reading the data, allowing you to capture and replicate changes occurring in DynamoDB during this period. Automating this backfilling ensures data consistency and continuity without requiring manual intervention.

Use Cases for Setting up CDC with DynamoDB

Implementing CDC with DynamoDB - either for operational automation or analytics - can benefit operations across industries. Here are some of the more common use cases:

Transportation and Industrial Monitoring

Sensors in transportation vehicles and industrial equipment continually transmit data to DynamoDB. CDC lets you stream this sensor data for real-time monitoring, which helps you detect issues early and fix them before they disrupt operations.

Financial Applications

In financial services, CDC is used to track real-time updates to stock market data stored in DynamoDB. This helps applications to compute value-at-risk dynamically and automatically adjust portfolio balances based on current market conditions.

Social Networking

CDC helps enhance user engagement by sending instant notifications to your friends' mobile devices when you upload a new post on a social media platform. By capturing these changes in DynamoDB and triggering immediate actions, CDC helps keep users active and informed.

Customer Onboarding

When a new customer adds data to a DynamoDB table, CDC can trigger a workflow that automatically sends a welcome email. This streamlines onboarding and improves the customer experience with timely communication.

What Are the Different Options for Implementing Change Data Capture with DynamoDB?

When an item is added, updated, or deleted in a DynamoDB table, CDC captures the change and streams it as a real-time event. Here are three approaches for implementing Change Data Capture in DynamoDB:

1. Change Data Capture for DynamoDB Streams

If you want to monitor changes in your DynamoDB data quickly and prevent duplicates, DynamoDB Streams, an AWS streaming model, is a great option. DynamoDB Streams helps you track item-level changes chronologically and appends these changes to a dedicated log file. Applications can access this log for up to 24 hours of data.

When an application creates, deletes, or updates data items in a table, DynamoDB Streams generates a stream record with the primary key and changes and stores it in the log.

What Is a Stream Record?

A stream record contains information about a modification to a single data item in a DynamoDB table. Each record has a sequence number that indicates the order in which changes occurred. Streams preserve this order in the log.

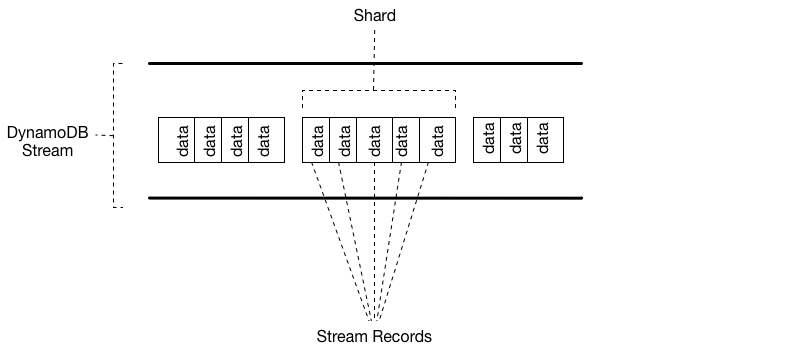

Stream records are organized into shards. Each shard serves as a container for multiple stream records and includes the information needed to access these records. By default, up to two consumers can simultaneously read the stream records from a shard. The illustration above depicts a stream, the shards in the stream, and the stream records within the shards.
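To make the shape of a stream record concrete, here is a minimal Python sketch. The sample records and the helper names `plain_value` and `summarize` are hypothetical. The field names (`eventName`, `dynamodb.Keys`, `dynamodb.NewImage`, `dynamodb.SequenceNumber`) follow the record format that DynamoDB Streams returns. Only string, number, and boolean attributes are handled, and sequence numbers are compared as integers on the assumption that the records come from the same shard.

```python
# Hypothetical stream records for a table keyed on "pk", deliberately out of order.
RECORDS = [
    {"eventName": "MODIFY",
     "dynamodb": {"Keys": {"pk": {"S": "user#1"}},
                  "NewImage": {"pk": {"S": "user#1"}, "visits": {"N": "2"}},
                  "SequenceNumber": "200"}},
    {"eventName": "INSERT",
     "dynamodb": {"Keys": {"pk": {"S": "user#1"}},
                  "NewImage": {"pk": {"S": "user#1"}, "visits": {"N": "1"}},
                  "SequenceNumber": "100"}},
]

def plain_value(attr):
    """Convert one typed attribute such as {"S": "x"} or {"N": "1"} to a Python value."""
    (type_tag, value), = attr.items()
    if type_tag == "N":
        return int(value) if value.lstrip("-").isdigit() else float(value)
    return value  # strings and booleans pass through; other types are left untouched

def summarize(record):
    """Flatten a stream record into event name, keys, new image, and sequence number."""
    body = record["dynamodb"]
    return {
        "event": record["eventName"],
        "keys": {k: plain_value(v) for k, v in body["Keys"].items()},
        "new": {k: plain_value(v) for k, v in body.get("NewImage", {}).items()},
        "seq": int(body["SequenceNumber"]),
    }

# Sort by sequence number to recover the order in which the changes occurred.
ordered = sorted((summarize(r) for r in RECORDS), key=lambda r: r["seq"])
for row in ordered:
    print(row["seq"], row["event"], row["new"])
```

Whether `NewImage` or `OldImage` is present depends on the stream view type chosen when the stream is enabled, which is why the sketch reads `NewImage` with a default.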

Key Features of DynamoDB Streams

- Stream records in a DynamoDB table's stream remain accessible for up to 24 hours, even after the table is deleted or the stream is disabled.

- Each modification to data generates exactly one stream record, ensuring each change is captured exactly once, without duplication.

- DynamoDB Streams automatically ignores operations that do not result in data modification. Examples include the PutItem or UpdateItem commands attempting to overwrite an item with identical values.

How Do You Implement DynamoDB CDC Using DynamoDB Streams?

Before you begin, you’ll need the following:

- An active Amazon Web Services (AWS) account

- A preferred Integrated Development Environment (IDE)

Here are the steps to implement DynamoDB CDC directly using DynamoDB Streams:

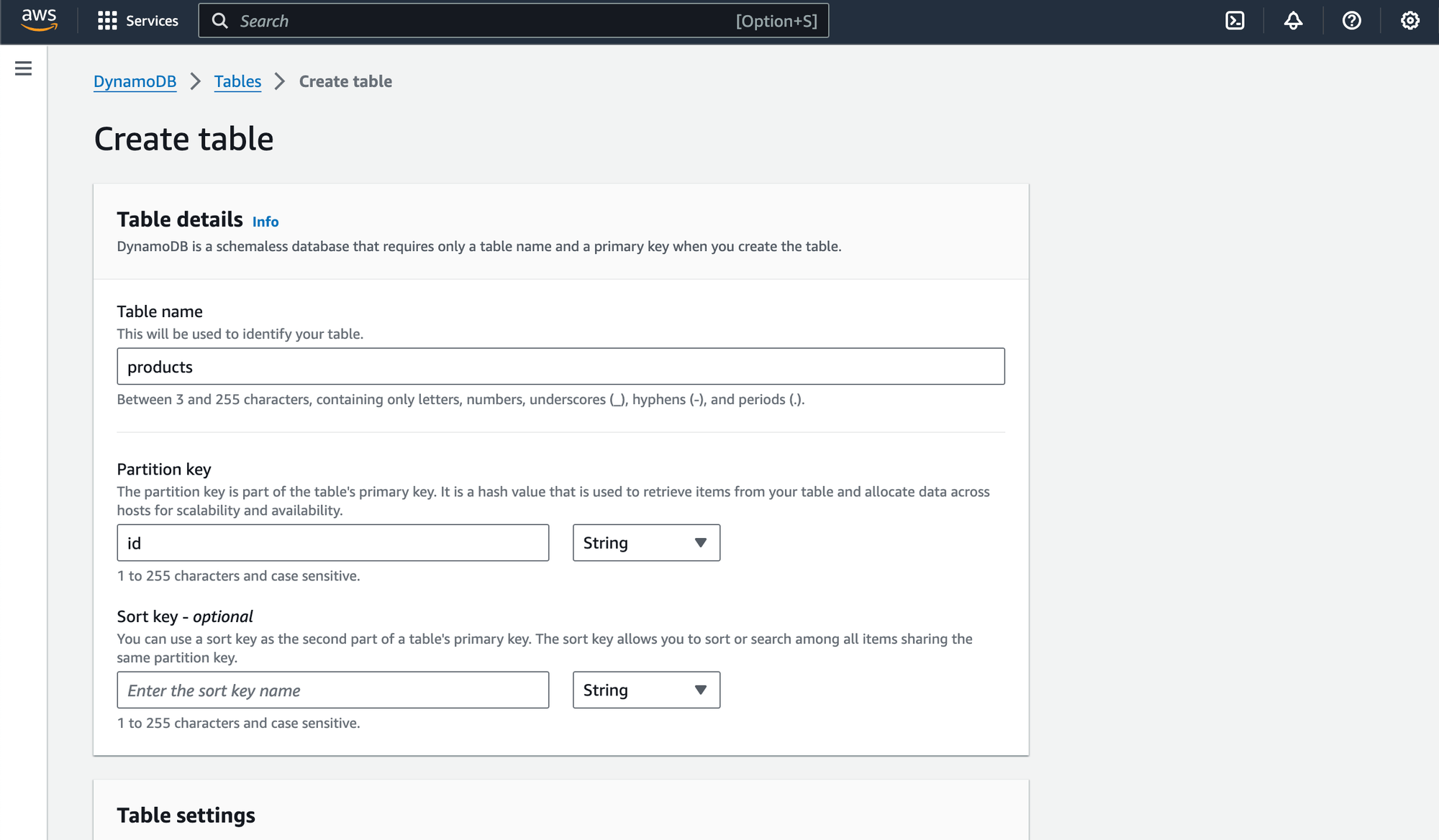

Step 1: Create a DynamoDB Table

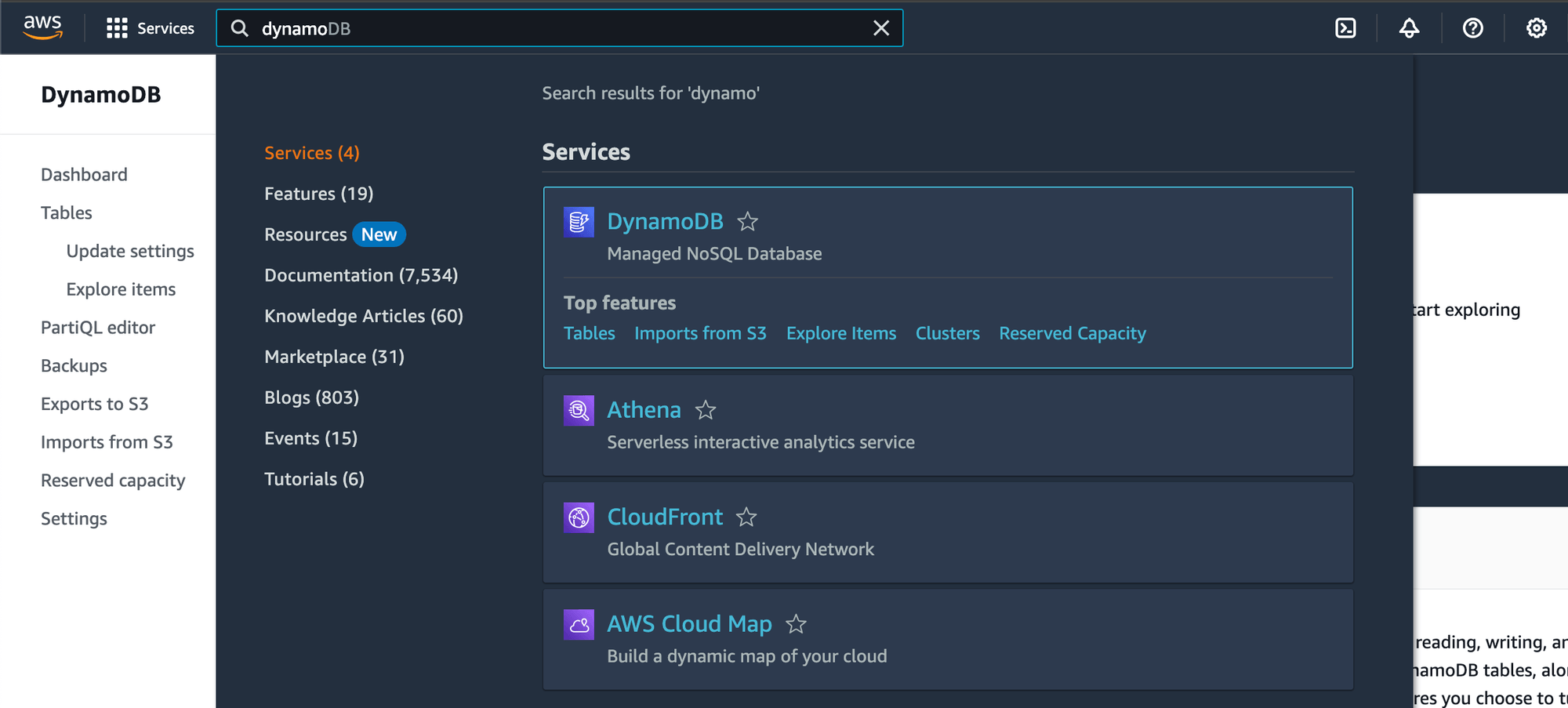

- Log into your AWS Management Console and launch the DynamoDB console.

- Select Tables from the DynamoDB dashboard and click Create table.

- Specify the necessary information for your table and click Create table.

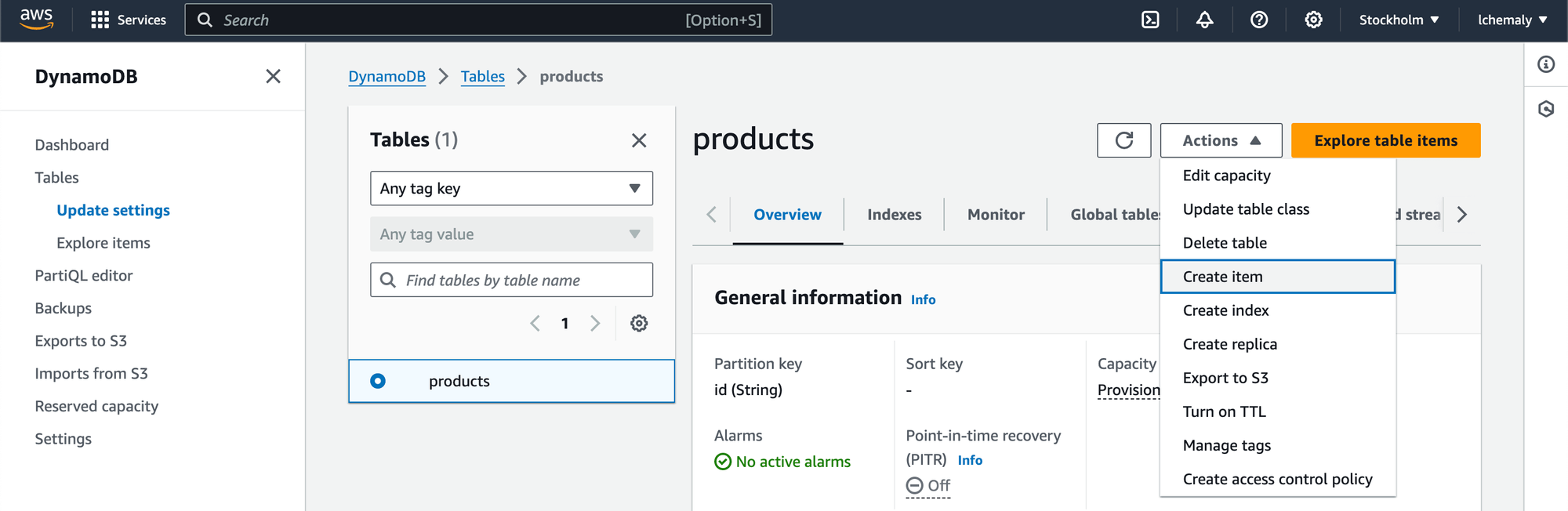

- After creating the table, insert a few data items by clicking Actions > Create item.

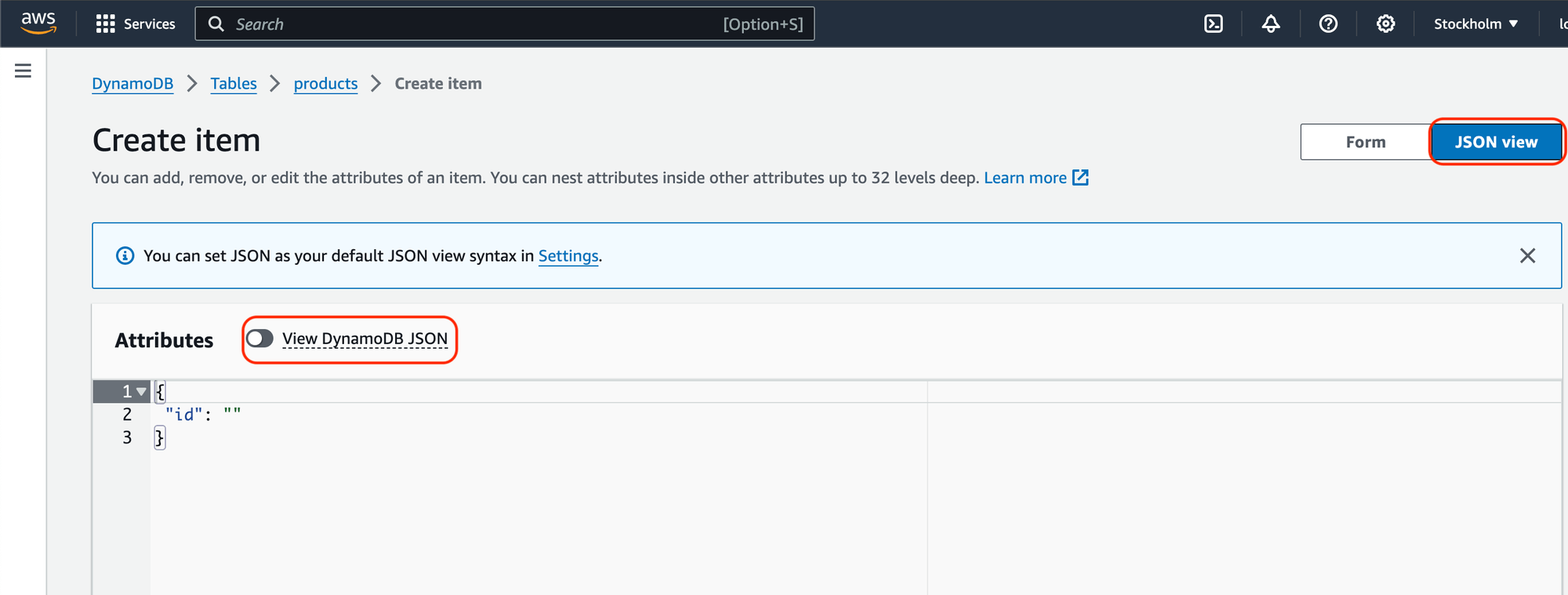

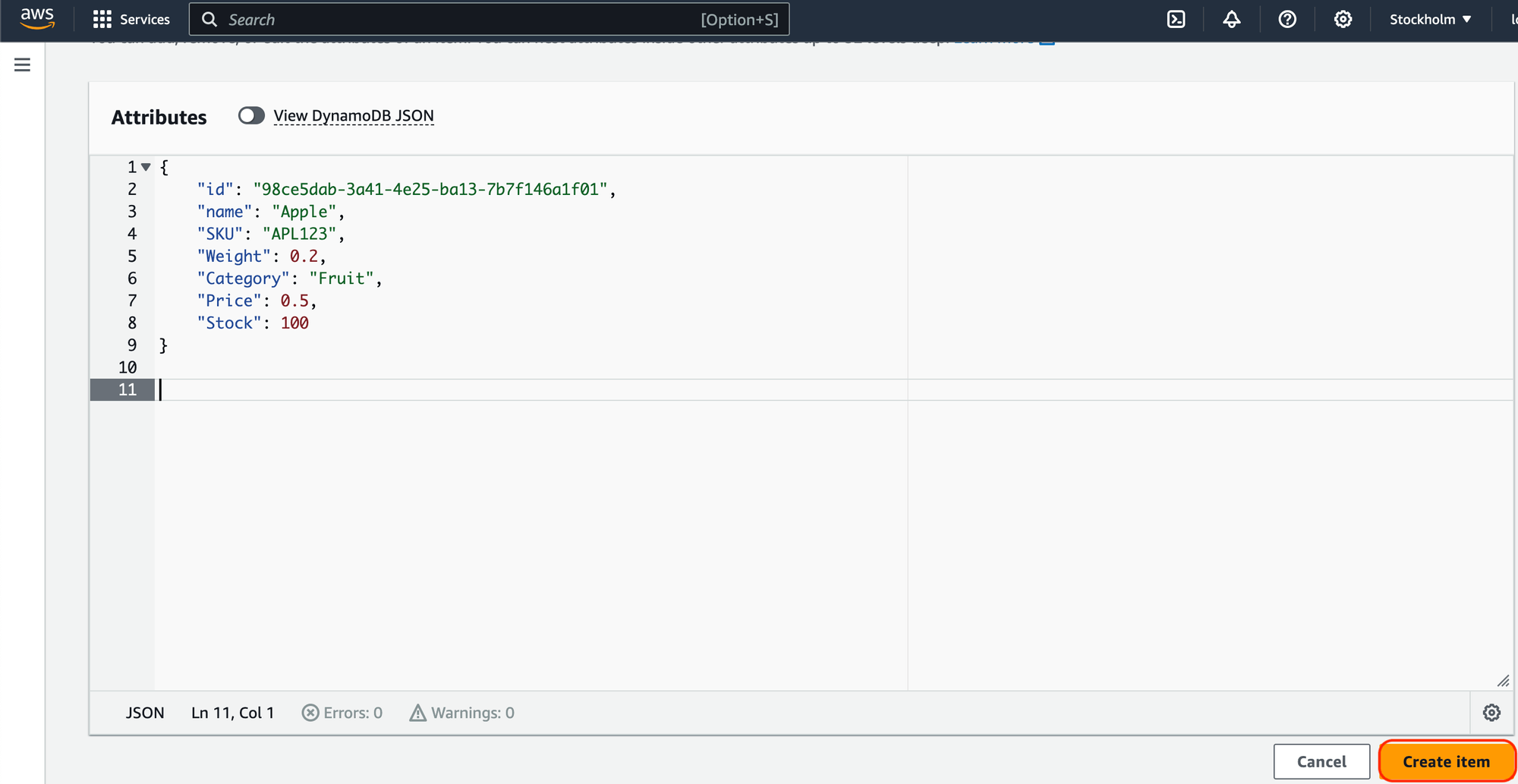

- On the Create item page, select JSON view and disable the View DynamoDB JSON option.

- Add a JSON object to the table with desired attributes and values and click Create item.

- Similarly, create the required number of items in your DynamoDB table.

For more information, read how to create a DynamoDB table.

Step 2: Enable a DynamoDB Table’s Stream

- From the DynamoDB console, select Tables and choose the table you created.

- Navigate to the Exports and streams tab and click Turn on under the DynamoDB stream details section.

- On the Turn on DynamoDB stream page, configure the stream based on one of the following options:

  - Key attributes only: The stream record includes only the primary key attributes of the modified item.

  - New image: The stream record captures the entire data item as it appears after the modification.

  - Old image: The stream record captures the entire data item as it appeared before the modification.

  - New and old images: The stream record includes both the new and old images of the data item.

- Click Turn on stream to activate streaming.

To learn how to use API operations to enable a stream, read Enable a stream using API.
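As a rough illustration of the API route, the sketch below maps the four console options to the `StreamViewType` values accepted by the `UpdateTable` operation and builds the request parameters. The table name `Orders` and the helper name `stream_update_params` are placeholders, and the commented-out call assumes boto3 is installed with credentials configured.

```python
# Console option -> StreamViewType value accepted by the UpdateTable API.
VIEW_TYPES = {
    "Key attributes only": "KEYS_ONLY",
    "New image": "NEW_IMAGE",
    "Old image": "OLD_IMAGE",
    "New and old images": "NEW_AND_OLD_IMAGES",
}

def stream_update_params(table_name, console_option):
    """Build keyword arguments for UpdateTable that turn on a stream."""
    return {
        "TableName": table_name,
        "StreamSpecification": {
            "StreamEnabled": True,
            "StreamViewType": VIEW_TYPES[console_option],
        },
    }

params = stream_update_params("Orders", "New and old images")  # placeholder table name
print(params)

# With boto3 installed and credentials configured, the call would look like:
#   import boto3
#   boto3.client("dynamodb").update_table(**params)
```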

You can disable an existing stream by clicking Turn off under the DynamoDB stream details section in the Exports and streams tab.

Step 3: Read and Process a DynamoDB Stream

Before processing a DynamoDB stream, you must locate the DynamoDB Streams endpoint. Then, access and process the stream by issuing the following API requests through a Java program:

- ListStreams API to identify the stream’s unique ARN.

- DescribeStream API to determine which shards in the source stream contain the records of interest.

- GetShardIterator API to access these shards.

- GetRecords API to retrieve the desired stream records from within the given shard.
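The four calls above can be sketched in Python as follows. The article's flow uses a Java program, so treat this as an equivalent illustration, not the original code. The function expects a client object that exposes the boto3 `dynamodbstreams` method names (`list_streams`, `describe_stream`, `get_shard_iterator`, `get_records`). The function name `read_stream` is hypothetical. As a simplification, it takes the first stream for the table, ignores shard pagination, and stops reading a shard at the first empty page, so it is a one-shot read and not a continuous consumer.

```python
def read_stream(streams_client, table_name, limit=100):
    """Return the records currently readable from every shard of a table's stream."""
    # ListStreams: find the stream ARN for the table (first stream only).
    stream_arn = streams_client.list_streams(TableName=table_name)["Streams"][0]["StreamArn"]

    # DescribeStream: enumerate the shards in that stream.
    description = streams_client.describe_stream(StreamArn=stream_arn)["StreamDescription"]

    records = []
    for shard in description["Shards"]:
        # GetShardIterator: start at the oldest record still retained in the shard.
        iterator = streams_client.get_shard_iterator(
            StreamArn=stream_arn,
            ShardId=shard["ShardId"],
            ShardIteratorType="TRIM_HORIZON",
        )["ShardIterator"]

        # GetRecords: page through the shard until it is closed or returns nothing.
        while iterator:
            page = streams_client.get_records(ShardIterator=iterator, Limit=limit)
            if not page["Records"]:
                break
            records.extend(page["Records"])
            iterator = page.get("NextShardIterator")
    return records

# With boto3 installed and credentials configured, usage would look like:
#   import boto3
#   changes = read_stream(boto3.client("dynamodbstreams"), "Orders")
```

Passing the client in as an argument keeps the function free of AWS dependencies, so it can be exercised locally with a stub before pointing it at a real stream.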

Step 4: Ensure Real-time Sync Works

- Access your table from the DynamoDB console.

- Perform a few write operations, such as creating, updating, or deleting items, and verify that each change appears in the stream. Read operations do not generate stream records.

- Delete your DynamoDB table or disable a stream on the table to check that stream records remain available in the log for up to 24 hours. After this period, the data expires, and the stream records are removed automatically.

There are other ways to consume Streams. You can use the DynamoDB Streams Kinesis Adapter to read and process your table’s DynamoDB stream. Or you could also use AWS Lambda triggers.
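For the Lambda trigger route, a handler might look like the minimal sketch below. It assumes the standard event shape that Lambda passes for a DynamoDB Streams trigger (a `Records` list whose entries carry `eventName` and a `dynamodb` section). The handler name, the sample event, and the counting logic are illustrative only.

```python
def handler(event, context):
    """Lambda entry point for a DynamoDB Streams trigger; counts changes by type."""
    counts = {"INSERT": 0, "MODIFY": 0, "REMOVE": 0}
    for record in event["Records"]:
        counts[record["eventName"]] += 1
        keys = record["dynamodb"]["Keys"]
        # Replace this print with a write to your destination system.
        print(record["eventName"], keys)
    return counts

# Local smoke test with a hypothetical event payload.
sample_event = {"Records": [
    {"eventName": "INSERT", "dynamodb": {"Keys": {"pk": {"S": "order#1"}}}},
    {"eventName": "REMOVE", "dynamodb": {"Keys": {"pk": {"S": "order#1"}}}},
]}
result = handler(sample_event, None)
```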

2. Amazon Kinesis Data Streams for DynamoDB

If you need long-term data retention and your application has multiple consumers, Kinesis Data Streams, an AWS streaming model, would be a good option. It captures item-level changes from the DynamoDB table and copies them to a Kinesis data stream.

Key Features of Kinesis Data Streams

- You can store terabytes of data per hour for up to a year.

- You can simultaneously deliver data to up to five consumers per shard. With the enhanced fan-out, this capacity increases up to 20 consumers per shard.

- Integrates with Amazon Data Firehose and Amazon Managed Streaming for Apache Flink. This integration enables the development of real-time dashboard applications and the implementation of advanced analytics and ML algorithms.

How Do You Implement DynamoDB CDC Using Kinesis Data Streams?

Before you start, you will require the following:

- An active Amazon Web Services (AWS) account

- An IDE of your choice

- Node.js and the Git CLI installed on your machine

- An existing DynamoDB table

Here are the steps to implement DynamoDB CDC using Kinesis Data Streams:

Step 1: Enable Amazon Kinesis Data Stream

- Open the DynamoDB console and select Tables; choose your DynamoDB table.

- Navigate to the Exports and streams tab and click Turn on next to the Amazon Kinesis data stream details.

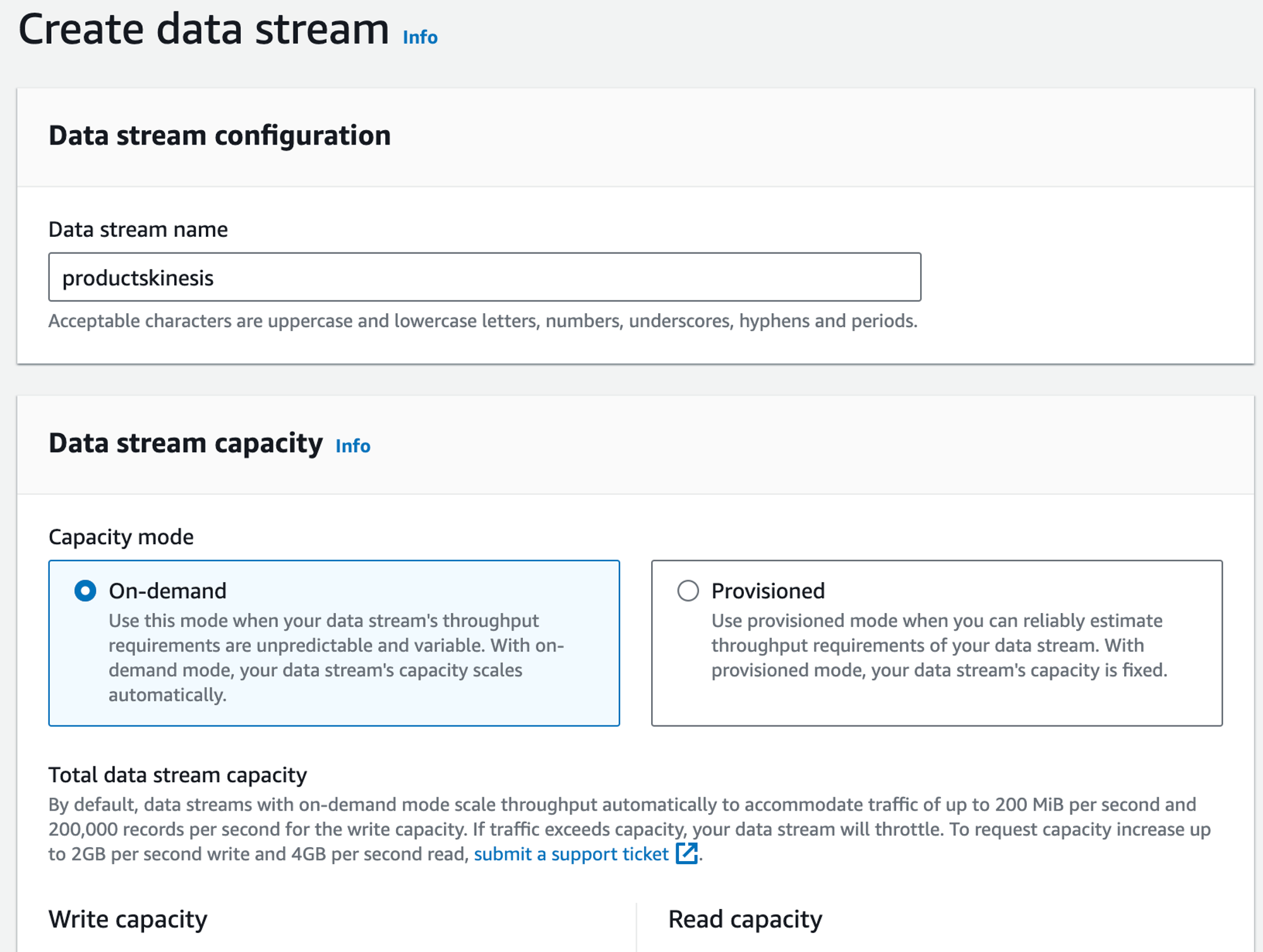

- On the Stream to an Amazon Kinesis data stream page, click Create new next to the Destination Kinesis data stream section.

- You will be redirected to the Create data stream page, where you can configure the Kinesis data stream settings.

- Click on the Create data stream button, then click Turn on stream. This enables the Kinesis Data Streams for your DynamoDB table.

The Kinesis Data Streams for DynamoDB can now capture changes to data items in your table and replicate them to a Kinesis data stream.
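If you prefer the API to the console, the same switch can be sketched in Python. The function below expects a client that exposes the boto3 `dynamodb` methods `enable_kinesis_streaming_destination` and `describe_kinesis_streaming_destination`, and it assumes the Kinesis data stream already exists. The function name and the ARN shown in the usage comment are placeholders.

```python
def enable_kinesis_destination(dynamodb_client, table_name, kinesis_stream_arn):
    """Point a table's change feed at an existing Kinesis data stream and report its status."""
    dynamodb_client.enable_kinesis_streaming_destination(
        TableName=table_name,
        StreamArn=kinesis_stream_arn,
    )
    described = dynamodb_client.describe_kinesis_streaming_destination(TableName=table_name)
    # Map each destination stream ARN to its status, for example ENABLING or ACTIVE.
    return {
        dest["StreamArn"]: dest["DestinationStatus"]
        for dest in described["KinesisDataStreamDestinations"]
    }

# With boto3 installed and credentials configured, usage would look like:
#   import boto3
#   enable_kinesis_destination(
#       boto3.client("dynamodb"),
#       "Orders",
#       "arn:aws:kinesis:us-east-1:123456789012:stream/orders-changes",
#   )
```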

Step 2: Configure Kinesis with Amazon S3

In this step, you configure a delivery stream to transmit data from the Kinesis data stream to Amazon S3. Follow the steps below:

- Launch Amazon Kinesis in the AWS console.

- Click Create delivery stream under the Data Firehose section in the Amazon Kinesis dashboard.

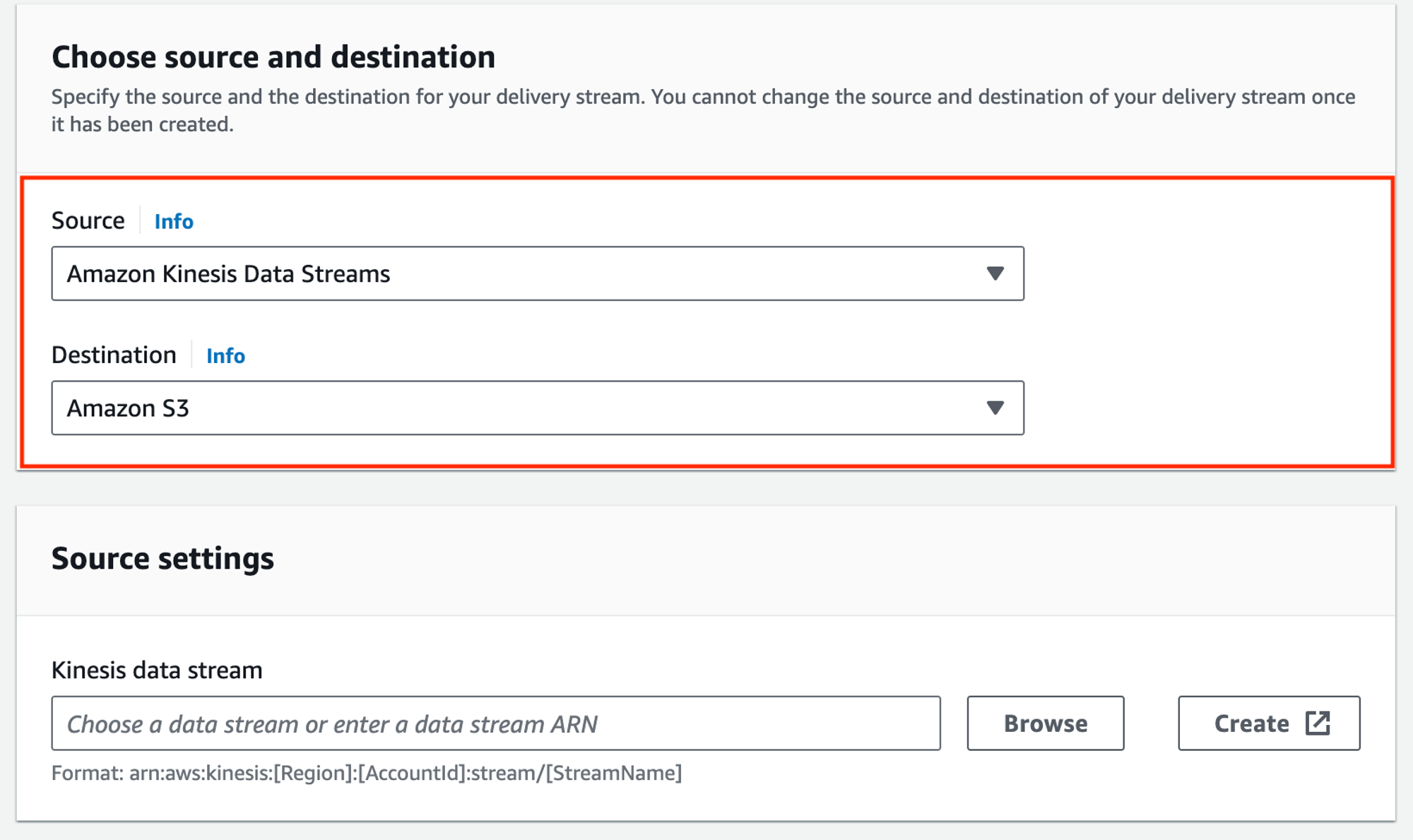

- Set Source as Amazon Kinesis Data Streams and Destination as Amazon S3.

- Browse for your Kinesis stream under the source settings and for your S3 bucket under the destination settings.

- Click Create delivery stream.
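The console steps above correspond roughly to a single `CreateDeliveryStream` request. The sketch below only builds the request parameters. Every name and ARN is a placeholder, the helper name `firehose_to_s3_params` is hypothetical, and using one IAM role for both reading the stream and writing to the bucket is a simplifying assumption.

```python
def firehose_to_s3_params(name, kinesis_stream_arn, bucket_arn, role_arn):
    """Build CreateDeliveryStream arguments: Kinesis data stream as source, S3 as destination."""
    return {
        "DeliveryStreamName": name,
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": kinesis_stream_arn,
            "RoleARN": role_arn,  # role must be allowed to read the stream
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,  # role must be allowed to write to the bucket
            "BucketARN": bucket_arn,
        },
    }

delivery_params = firehose_to_s3_params(
    "orders-to-s3",
    "arn:aws:kinesis:us-east-1:123456789012:stream/orders-changes",
    "arn:aws:s3:::my-cdc-bucket",
    "arn:aws:iam::123456789012:role/firehose-delivery-role",
)
print(delivery_params["DeliveryStreamType"])

# With boto3 installed and credentials configured, the call would look like:
#   import boto3
#   boto3.client("firehose").create_delivery_stream(**delivery_params)
```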

Step 3: Allow Your Application Access to Amazon S3

- Navigate to your S3 bucket panel in the S3 console and go to the Permissions tab.

- Click Edit next to the CORS section and add the following configuration:

```json
[
  {
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["GET", "HEAD"],
    "AllowedOrigins": ["*"],
    "ExposeHeaders": [],
    "MaxAgeSeconds": 3000
  }
]
```

- Click Save Changes.

Now, you can test the DynamoDB CDC with Kinesis and S3 using a basic application. Whenever new data is entered through the application’s input field, the changes will automatically be transmitted to Amazon S3.

3. Estuary Flow’s DynamoDB CDC Connector

Typically, DynamoDB CDC streaming approaches require a backfill process before switching to real-time streaming. During this backfill, the system must capture a snapshot of the existing data, note the start time, and then switch to streaming updates.

However, DynamoDB Streams retains records for only 24 hours. If a backfill runs longer than that, the changes recorded at its start time have already expired from the stream, so backfills must finish within 24 hours to avoid data gaps. Kinesis Data Streams can retain changes for up to a year, which allows longer backfills, but it may introduce duplicate records.

Estuary Flow’s DynamoDB CDC connector can address these challenges by allowing you to simultaneously ingest data from the stream and the backfill scan; the connector does not limit backfill to 24 hours. It also manages data redundancy by merging and deduplicating data documents based on a JSON schema.
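The general idea of combining a backfill scan with a concurrent change stream can be sketched as below. This is a generic illustration of the technique, not Estuary's implementation. The function name, the event fields (`seq`, `op`, `key`, `item`), and the sample data are all hypothetical. It assumes stream capture started before the scan, so any key seen in the stream is newer than its scanned copy.

```python
def merge_backfill_and_stream(backfill_items, stream_events, key="pk"):
    """Combine a table scan with change events captured while the scan ran."""
    state = {}
    touched = set()  # keys whose latest state came from the stream

    # Apply change events in sequence order; the last event per key wins.
    for event in sorted(stream_events, key=lambda e: e["seq"]):
        touched.add(event["key"])
        if event["op"] == "REMOVE":
            state.pop(event["key"], None)
        else:
            state[event["key"]] = event["item"]

    # Scanned items only fill in keys the stream never mentioned,
    # so a stale scanned copy cannot overwrite a newer change.
    for item in backfill_items:
        if item[key] not in touched:
            state[item[key]] = item
    return state

backfill = [{"pk": "a", "v": 1}, {"pk": "b", "v": 1}, {"pk": "c", "v": 1}]
events = [
    {"seq": 2, "op": "MODIFY", "key": "a", "item": {"pk": "a", "v": 2}},
    {"seq": 1, "op": "INSERT", "key": "d", "item": {"pk": "d", "v": 1}},
    {"seq": 3, "op": "REMOVE", "key": "b", "item": None},
]
merged = merge_backfill_and_stream(backfill, events)
print(sorted(merged))
```

In this example the scanned copy of `a` is superseded by the streamed update, `b` stays deleted even though the scan saw it, and `c` comes from the scan alone.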

Why Choose Estuary Flow?

Estuary Flow is a real-time CDC and ETL platform. It helps you integrate data from various sources, such as cloud storage, databases, and APIs, with multiple destinations of your choice.

Key Features of Estuary

- Change Data Capture (CDC): Estuary Flow supports streaming CDC with incremental captures. This allows you to connect and immediately start to read a stream while you capture its (24-hour) history. This combined stream is sent to each destination in real-time with sub-100ms latency or with any batch interval. Each stream is also stored in your private account for later uses, from backfills to recovery.

- Extensive Connectors: Estuary Flow offers hundreds of pre-built streaming and batch connectors for data lakes, databases, SaaS applications, and data warehouses.

- ETL and ELT: Whenever you need to add transforms, Estuary Flow supports both ETL using SQL and TypeScript transforms and ELT using dbt that runs in the destination.

How Do You Utilize the Amazon DynamoDB connector for CDC?

Estuary’s Amazon DynamoDB connector uses DynamoDB Streams to continuously capture updates from tables into one or more collections.

Before you start, make sure you have already done the following:

- Set up an AWS account.

- Created DynamoDB tables with DynamoDB streams enabled (see above for help).

- Confirmed access to the AWS endpoints for DynamoDB Streams so you can read and process stream records.

- Created an IAM user account with the following permissions:

  - ListTables, DescribeTable, and DescribeStream on all resources

  - Scan on all tables used

  - GetRecords and GetShardIterator on all streams used

- Obtained the AWS access key and secret access key.

- Set up an Estuary Flow account. This only takes 1 minute.
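The IAM permissions in the prerequisites above could be expressed as a policy document along these lines. The sketch generates the JSON from a list of table ARNs. The region, account ID, and table name are placeholders, the helper name `capture_policy` is hypothetical, and the `/stream/*` suffix assumes you want to cover every stream on each table.

```python
import json

def capture_policy(table_arns):
    """Build an IAM policy granting the read permissions listed in the prerequisites."""
    stream_arns = [arn + "/stream/*" for arn in table_arns]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow",
             "Action": ["dynamodb:ListTables", "dynamodb:DescribeTable", "dynamodb:DescribeStream"],
             "Resource": "*"},
            {"Effect": "Allow",
             "Action": ["dynamodb:Scan"],
             "Resource": table_arns},
            {"Effect": "Allow",
             "Action": ["dynamodb:GetRecords", "dynamodb:GetShardIterator"],
             "Resource": stream_arns},
        ],
    }

# Placeholder region, account ID, and table name.
policy = capture_policy(["arn:aws:dynamodb:us-east-1:123456789012:table/Orders"])
print(json.dumps(policy, indent=2))
```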

After ensuring the prerequisites, follow these steps:

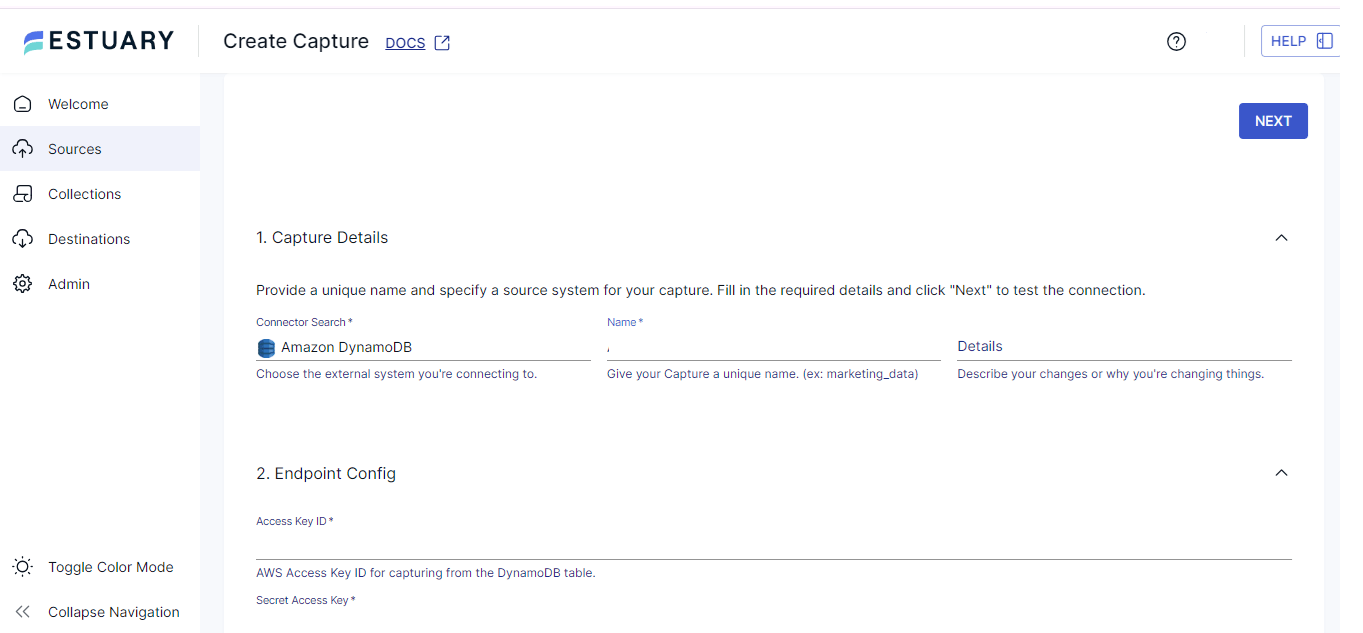

- Log into your Estuary account.

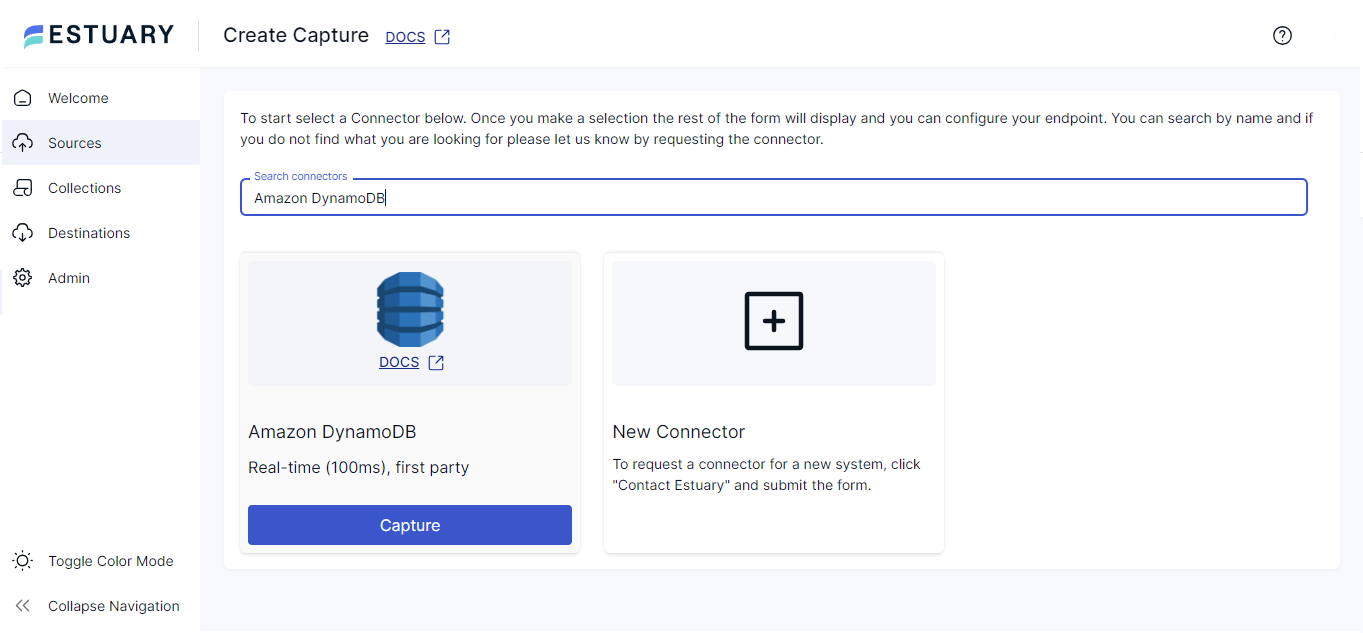

- To begin configuring Amazon DynamoDB as the source, click on the Sources option in the left navigation pane and click the + NEW CAPTURE button.

- On the Create Capture page, search for the Amazon DynamoDB connector using the Search connectors field and click the connector’s Capture button.

- On the connector configuration page, specify the required information, including Name, Access Key ID, and Secret Access Key.

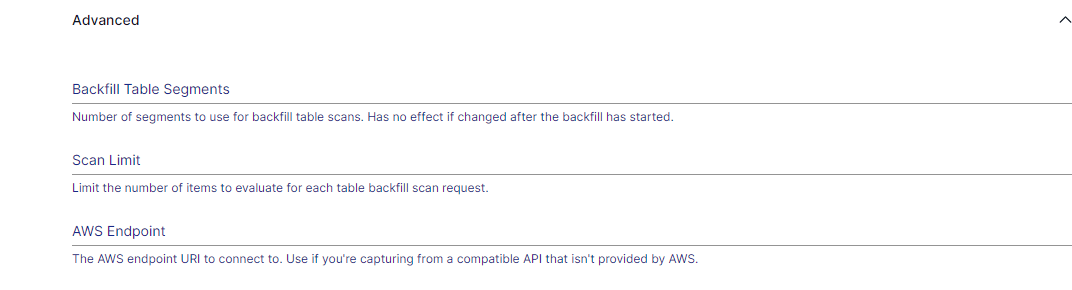

- In the Advanced section, specify the Backfill Table Segments, Scan Limit, and AWS Endpoint for DynamoDB Streams.

- Finally, click NEXT > SAVE AND PUBLISH to complete the configuration.

Now, you can configure your destination system using Estuary materialization connectors and automate the data integration from the DynamoDB tables in near-real-time.

Summing It Up

This CDC DynamoDB guide helps you efficiently capture near-real-time changes in DynamoDB tables for seamless data analytics. You can leverage DynamoDB Streams for immediate updates or utilize Kinesis Data Streams for extensive data retention.

With tools like Estuary Flow and its Amazon DynamoDB source connector, you can implement a robust CDC mechanism that effectively manages data redundancy. This ensures your data remains up-to-date and accessible across various analytical platforms.

Ready to seamlessly migrate up-to-date data from your DynamoDB tables to a destination of your choice? Register for your free Estuary account to get started!

FAQs

Does inserting a duplicate item in the DynamoDB table trigger a Lambda function or generate a CDC event in DynamoDB Streams?

No. Inserting a duplicate item in the DynamoDB table will not trigger a Lambda function or generate a CDC event in DynamoDB Streams. An event is generated only if at least one attribute of the item changes.

How does DynamoDB CDC event ordering differ between Kinesis Data Streams and DynamoDB Streams?

In Kinesis Data Streams, the timestamp attribute on each stream record can be used to determine the actual order of changes in the DynamoDB table. On the other hand, in DynamoDB Streams, the stream records appear in the same order as the actual modifications for each item modified in a DynamoDB table.
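A consumer reading from Kinesis Data Streams might reorder changes along these lines. The sketch assumes each Kinesis record payload is a JSON document with an `eventID` and a `dynamodb.ApproximateCreationDateTime` timestamp. Treating `eventID` as a safe key for dropping duplicates is an assumption, and the sample payloads and the function name `order_changes` are hypothetical.

```python
import json

def order_changes(kinesis_payloads):
    """Decode Kinesis record payloads, drop repeated events, and sort by change time."""
    seen, changes = set(), []
    for payload in kinesis_payloads:
        change = json.loads(payload)
        if change["eventID"] in seen:  # assumption: eventID identifies one change event
            continue
        seen.add(change["eventID"])
        changes.append(change)
    return sorted(changes, key=lambda c: c["dynamodb"]["ApproximateCreationDateTime"])

# Hypothetical payloads: out of order, with one duplicate delivery.
payloads = [
    b'{"eventID": "e2", "eventName": "MODIFY", "dynamodb": {"ApproximateCreationDateTime": 1700000002000}}',
    b'{"eventID": "e1", "eventName": "INSERT", "dynamodb": {"ApproximateCreationDateTime": 1700000001000}}',
    b'{"eventID": "e2", "eventName": "MODIFY", "dynamodb": {"ApproximateCreationDateTime": 1700000002000}}',
]
ordered_changes = order_changes(payloads)
print([c["eventName"] for c in ordered_changes])
```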


About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
