Data architecture is a broad concept in the world of technology. It is not a mere technicality – it is the bedrock upon which data-driven strategies are built, enabling companies to derive insights, make informed decisions, and gain a competitive edge. While the term is quite familiar, its intricate details, components, and functionalities often remain an enigma for many.

For any business, developing a data architecture framework is no small feat. With the immense growth of data production, businesses face numerous challenges in maintaining data integrity, ensuring its accessibility, and facilitating secure storage and transfer.

The diverse sources from which both structured and unstructured data emerge further complicate the process. Meeting these demands requires a robust, flexible, and efficient data architecture. This forms the basis of our guide today.

This article covers all aspects of data architecture, from basics to vital components, roles, data modeling, and different frameworks. We’ll also guide you through building robust data architecture and discuss its evolution, business impacts, and prospects.

By the time you’re done reading this guide, you’ll have a firm grip on data architecture and be able to use it effectively in your organization.

What Is Data Architecture?

Data architecture is a structured system that outlines how data is stored, managed, and used within an organization. It sets up rules, methods, and protocols for handling data. Data architecture isn’t a fixed entity. It evolves as the business grows and new technologies emerge. It is adaptable and flexible, designed to cater to changing business needs and landscapes.

7 Key Benefits Of Data Architecture

Here are some key benefits of data architecture:

- Decision-making: It supports better decisions by ensuring the underlying data is accurate, consistent, and relevant.

- Work efficiency: It improves work efficiency across the business by organizing data effectively.

- New Opportunities: Well-managed data can uncover new markets and business opportunities.

- Transforming data into insight: It outlines the process through which raw data is transformed into useful insights.

- Managing data: Data architecture helps in organizing data efficiently. This includes the collection, storage, and management of data.

- Customer experience: Better data management improves understanding of customers’ needs and behavior, thereby enhancing their experiences.

- Aligning with business strategy: Data architecture is designed to align with your business goals and data strategy. It acts as a guide, linking your data operations with the company’s strategic objectives.

To get a better understanding of data architecture and its role, we’ll now look into the components of data architecture.

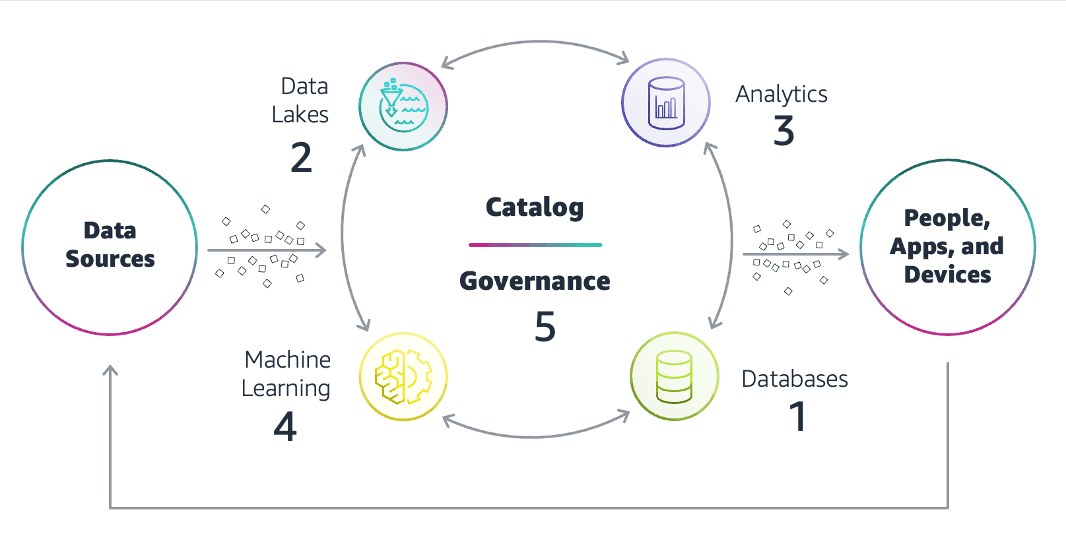

Exploring The 7 Major Components Of Data Architecture

Data architecture comprises several key components that work together to capture, process, store, and distribute information. Here is a closer look at some crucial elements that construct an effective data architecture:

- Data pipelines are mechanisms through which data flows from one point to another. They handle data collection, transformation, and storage, eventually making the data suitable for analysis.

- Artificial Intelligence (AI) and Machine Learning (ML) models identify patterns and insights within datasets for quicker and more accurate results without human intervention.

- Real-time analytics is the process of analyzing and deriving insights from data as soon as it enters the database. It provides immediate value and influences timely decision-making.

- Cloud storage refers to storing data on remote servers, also known as 'the cloud'. Cloud storage offers remote access to data, facilitates its safekeeping, and enables easy sharing with appropriate access rights.

- Cloud computing uses remote servers over the internet to deliver diverse services, including data storage, processing, and analytics. It promotes scalability, flexibility, and cost-effectiveness in managing and utilizing data.

- Data streaming involves the continuous transfer of data from a source to a destination. Unlike batch-oriented pipelines, which move data at scheduled intervals, data streaming delivers data in real time or near real time, making it valuable for time-sensitive applications and processes.

- Application Programming Interfaces (APIs) are intermediary software that helps different applications interact and exchange data. They enable applications to fetch, send, and integrate data smoothly, fostering enhanced functionality and user experience.
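To make the streaming and real-time analytics components more concrete, here is a minimal Python sketch. It assumes a hypothetical `event_stream` function standing in for a live source, and a hypothetical `rolling_average` helper that updates a metric as each value arrives instead of waiting for a full batch. Both names are ours, for illustration only.

```python
from collections import deque
from typing import Iterable, Iterator


def event_stream() -> Iterator[float]:
    """Hypothetical stand-in for a live source; a real stream would not be a fixed list."""
    yield from [12.0, 15.0, 11.0, 40.0, 14.0]


def rolling_average(values: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the most recent `window` values as each one arrives."""
    recent = deque(maxlen=window)  # old values fall out automatically
    for value in values:
        recent.append(value)
        yield sum(recent) / len(recent)


# Each incoming value immediately produces an updated average.
averages = list(rolling_average(event_stream(), window=3))
```

The same loop would work on an unbounded source, because the generator never needs to hold more than `window` values in memory.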

With these advanced components and processes in place, it's equally important to have a competent team capable of building, managing, and leveraging your data architecture. That’s exactly what we are going to focus on in the next section where we’ll look into key roles involved in data architecture.

Understanding Different Roles In Data Architecture

The people responsible for establishing and maintaining data architecture play a critical part in how the organization manages and uses its data. The following is an in-depth overview of the roles involved in data architecture:

Data Architects

They are responsible for designing the blueprint of data flow within an organization. Their role involves understanding the organization’s needs and crafting a data structure that addresses those needs effectively. They manage data collection, data storage, and data integration, ensuring a seamless data cycle.

Data Engineers

Data engineers are the builders of data architecture and handle tasks such as extracting, transforming, and loading (ETL) data. They pull data from various sources, transform it into a usable form, and load it into a central repository. Data engineers are also responsible for maintaining and updating data sets, ensuring their accuracy and relevance.
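As a rough illustration of the ETL steps described above, the sketch below extracts rows from CSV text, transforms them, and loads them into a table. The sample data, the column names, and the use of an in-memory SQLite database as the "central repository" are all assumptions made for the example, not a recommendation for production.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; note the stray whitespace and the missing email.
RAW_CSV = """order_id,customer_email,amount
1, Ana@Example.com ,19.99
2,bob@example.com,5.00
3,,12.50
"""


def extract(text):
    """Extract: parse raw CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize emails, cast types, drop rows without an email."""
    cleaned = []
    for row in rows:
        email = row["customer_email"].strip().lower()
        if not email:
            continue
        cleaned.append((int(row["order_id"]), email, float(row["amount"])))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into a central table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer_email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer_email FROM orders ORDER BY order_id"
).fetchall()
```

Keeping the three stages as separate functions makes it easier to test each one and to swap the source or destination later.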

Data Analysts

A data analyst’s role is to clean and analyze data sets. They use various tools and methodologies to remove inaccuracies and inconsistencies in data to make it more reliable for analysis. They further process this data to draw valuable insights which are then presented in easily digestible formats like charts or reports.

Data Scientists

These individuals use special tools and techniques to find useful information in data. They look for patterns and study changes in the market and customer behavior. Their work helps the organization make plans and policies.

Solutions Architects

Solutions architects develop products or services based on an organization’s specific needs. They work closely with both business and technology teams, creating solutions that cater to their requirements. Their role is pivotal in balancing business objectives with technical feasibility.

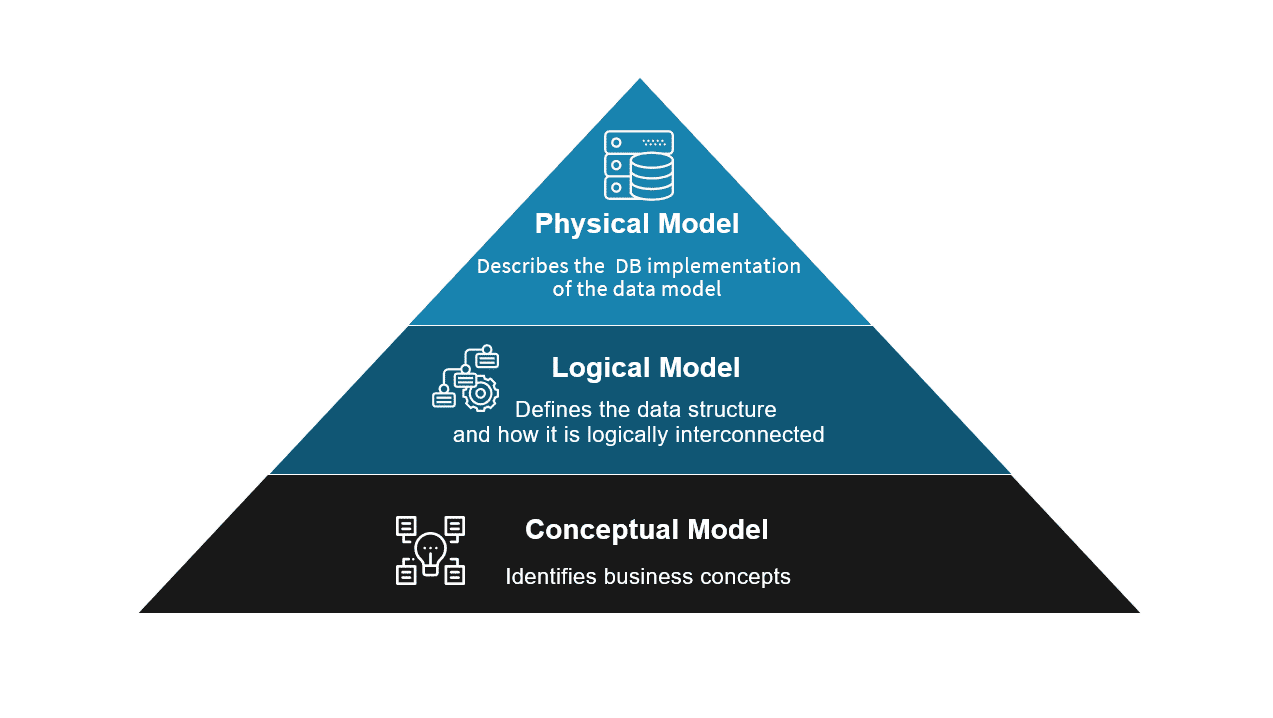

3-Tiered Approach To Data Modeling In Data Architecture

A data architecture describes 3 important types of data models: Conceptual, Logical, and Physical. Each model is a step toward transforming an abstract concept into a functional data system.

Conceptual Data Models: Establishing The Foundation

The conceptual data model, also known as the domain model, forms the initial stage of data modeling in data architecture. These models are relatively high-level and are typically used for discussions with stakeholders and domain experts. This model:

- Identifies entity classes or categories that the system will handle. For instance, in a business environment, these could include ‘customers’, ‘products’, or ‘sales’.

- Defines the attributes of these entities. For a 'customer' entity, the attributes might be 'name', 'address', or 'email'.

- Illustrates the relationships between various entities, showcasing how these entities interact within the system.

- Determines the scope of the model, explicitly stating the entities that the system will and will not cover.

- Incorporates business rules that dictate the structure of data and its interactions within the system.
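One way to picture a conceptual model is as a handful of entities, attributes, and relationships written down in code. The sketch below uses the entities mentioned above; the relationship between them and the business rule are assumptions we made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Customer:
    # Attributes taken from the 'customer' example above.
    name: str
    address: str
    email: str


@dataclass
class Product:
    name: str


@dataclass
class Sale:
    # Assumed relationship: a sale belongs to one customer and covers one or more products.
    customer: Customer
    products: list = field(default_factory=list)

    def __post_init__(self):
        # Hypothetical business rule: a sale must include at least one product.
        if not self.products:
            raise ValueError("a sale must include at least one product")


ana = Customer(name="Ana", address="1 Main St", email="ana@example.com")
sale = Sale(customer=ana, products=[Product(name="Widget")])
```

At this stage there are no data types beyond the basics and no storage decisions, which is what keeps the model easy to discuss with stakeholders.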

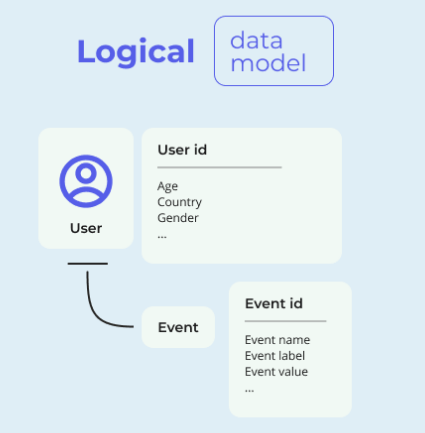

Logical Data Models: Drawing The Detailed Plan

The logical data model is built upon the foundation laid by the conceptual model. This model incorporates further details without committing to specific technical implementations. It is a bridge between the abstract view of the conceptual model and the technical view of the physical model.

- It builds upon the relationships defined in the conceptual model, representing them with greater detail and accuracy.

- The model further refines the attributes of each entity and details individual fields like data types, sizes, and lengths.

- It helps design entities that are operationally or transactionally important, like 'sales orders' or 'inventory management'.
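A logical model can be sketched as a technology-neutral description of fields, types, and lengths. In the example below, `FieldSpec`, the `SALES_ORDER` definition, and the chosen lengths are hypothetical; the point is only that the entity is now specified precisely enough to check a record against it, without naming a database.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldSpec:
    name: str
    data_type: type
    max_length: Optional[int] = None
    required: bool = True


# Hypothetical logical definition of a 'sales order' entity.
SALES_ORDER = [
    FieldSpec("order_id", int),
    FieldSpec("customer_email", str, max_length=254),
    FieldSpec("status", str, max_length=20),
    FieldSpec("note", str, max_length=200, required=False),
]


def violations(record, spec):
    """Return a list of readable problems; an empty list means the record fits."""
    problems = []
    for f in spec:
        value = record.get(f.name)
        if value is None:
            if f.required:
                problems.append(f"{f.name}: missing")
            continue
        if not isinstance(value, f.data_type):
            problems.append(f"{f.name}: expected {f.data_type.__name__}")
        elif f.max_length is not None and len(value) > f.max_length:
            problems.append(f"{f.name}: longer than {f.max_length}")
    return problems


ok = violations(
    {"order_id": 1, "customer_email": "ana@example.com", "status": "open"},
    SALES_ORDER,
)
bad = violations({"order_id": "1", "status": "x" * 30}, SALES_ORDER)
```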

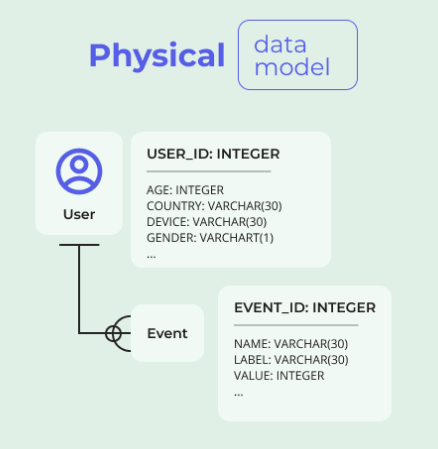

Physical Data Models: Crafting The Implementation Path

The final stage in the data modeling process is the physical data model. This model translates the logical model into a database design that can be implemented.

- The model specifies the data storage method: on-disk, in-RAM, or a hybrid solution.

- It devises strategies for effective data distribution, addressing techniques like replications, shards, and partitions.

- It selects a specific database management system (DBMS) that aligns best with the requirements, like Oracle, SQL Server, or MySQL.
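To show what a physical model might look like once a DBMS is chosen, here is a small sketch. It uses SQLite only because it ships with Python; the systems named above (Oracle, SQL Server, MySQL) have their own DDL dialects, and the table and index names here are hypothetical.

```python
import sqlite3

# Hypothetical physical schema: concrete tables, keys, constraints, and an index.
DDL = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE sales_orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    status      TEXT NOT NULL
);
CREATE INDEX idx_orders_customer ON sales_orders(customer_id);
"""

# ":memory:" keeps the database in RAM; a file path would store it on disk.
conn = sqlite3.connect(":memory:")
conn.executescript(DDL)

tables = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )
]
```

Decisions such as replication, sharding, and partitioning live at this level too, but they depend heavily on the chosen DBMS and are left out of the sketch.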

With a comprehensive understanding of data models, let’s build on this knowledge and explore some of the data architecture frameworks next.

4 Cutting-Edge Data Architecture Frameworks

Frameworks provide the necessary foundation and guidance for efficient data architecture. These frameworks act as the blueprint for data management and help your organization to align its data objectives with its business goals. Let’s look into some of the key frameworks that are instrumental in shaping this arena.

The Open Group Architecture Framework (TOGAF)

Formulated by The Open Group in 1995, TOGAF serves as a complete methodology for developing an enterprise’s IT architecture, of which data architecture is one part. It divides its approach into 4 areas:

- Technical architecture describes the necessary technology infrastructure to support essential applications.

- Business architecture lays out the structure of the business, including organizational hierarchy and strategy.

- Data architecture outlines the life cycle of data assets, from their conceptual stages to their physical manifestations.

- Applications architecture explains how application systems interact with each other and with pivotal business processes.

The Data Management Body Of Knowledge (DAMA-DMBOK2)

DAMA International's DAMA-DMBOK2 is an extensive guide for managing data. It’s designed to give you all the tools you need to understand and implement effective data management in your organization. Here’s what you’ll find in it:

- Standardized definitions: Consistent terminology for data management.

- Roles and functions: Guidelines specific to various roles in data management.

- Data management deliverables: Clear outcomes for each data management stage.

- Expansive coverage: Information on data architecture, modeling, storage, security, and integration.

Zachman Framework

The Zachman Framework is another tool for organizing data architecture. It was first developed by John Zachman at IBM in 1987. The framework is structured as a matrix with 6 columns and 6 rows. Each column corresponds to a different question, and each row to a different stakeholder perspective. Here’s how it works:

- 6 Columns And 6 Rows: A matrix that crosses 6 questions with 6 perspectives for systematic data organization.

- Purposeful Questions: Each column represents a unique question (Why? How? What? Who? Where? When?).

- Structured Data Overview: A clear view of data architecture through systematic mapping across the cells of the matrix.

Federal Enterprise Architecture Framework (FEAF)

Specifically designed for the processes of government agencies, the Federal Enterprise Architecture Framework (FEAF) offers guidance for implementing IT services. This enterprise architecture methodology consists of:

- Data architecture defines how to manage data.

- Business architecture outlines business processes.

- Application architecture shows the relationship between different applications.

- Technology architecture describes the necessary technology infrastructure.

Now that we are familiar with different data architecture frameworks, it's time to see how we can put these theories into practice in building an advanced data architecture.

Building A Robust Data Architecture: A 6-Step Guide

Crafting a successful data architecture can feel like a difficult task. To simplify this process, we’ve divided it into 6 manageable steps.

Step 1: Evaluate Current Tools & Systems

Start by understanding your data and system requirements and assess all the tools and systems your organization currently uses. Know how these systems interrelate and interact with each other. Interview the stakeholders using these systems to learn what works and what needs improvement.

Step 2: Plan Your Data Structure

Ensure your data warehouse has an updated record of stored data. If any data sources aren’t part of the data warehouse, find out the reasons. Evaluate if including these disconnected data sources in the warehouse could be beneficial. You can choose to connect them using a reporting tool if adding them isn’t worthwhile.

Consider incorporating the following into your data warehouses:

- CRM data (Salesforce or Marketo)

- Third-party data (Dun & Bradstreet)

- Digital analytics (Google or Adobe Analytics)

- Email marketing data (Mailchimp or Constant Contact)

- Customer service data (chatbots, Zendesk, Twitter help)

Step 3: Set Clear Business Goals

Always keep your end goals in mind during this process. Understand what questions your organization needs to answer and how you can provide these answers more effectively. Establishing relevant KPIs for each business unit and developing a tagging solution for new metrics are important steps.

Step 4: Maintain Consistent Data Collection

Maintain consistent data collection to avoid compromising analysis and report trustworthiness. Changes in data collection methods can disrupt comparisons over time. Think about how changes might affect your reports before updating data collection methods. If substantial changes are necessary, document them thoroughly.

Make sure data is correctly collected and joined before analysis. Duplication can cause confusion and inaccuracies, so devise a process for effectively managing duplicate data.
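A process for managing duplicates can start very simply. The sketch below assumes a hypothetical matching rule (same email after trimming and lowercasing) and a hypothetical `updated` field in ISO date format; your own rule will depend on which fields reliably identify a record.

```python
def dedupe_key(record):
    """Hypothetical matching rule: same email after trimming and lowercasing."""
    return record["email"].strip().lower()


def deduplicate(records):
    """Keep the most recently updated record for each key."""
    latest = {}
    for record in records:
        key = dedupe_key(record)
        # ISO-formatted dates compare correctly as plain strings.
        if key not in latest or record["updated"] > latest[key]["updated"]:
            latest[key] = record
    return list(latest.values())


contacts = [
    {"email": "ana@example.com", "source": "crm", "updated": "2024-01-05"},
    {"email": " Ana@Example.com", "source": "email_tool", "updated": "2024-03-01"},
    {"email": "bob@example.com", "source": "crm", "updated": "2024-02-10"},
]
unique = deduplicate(contacts)
```

Whatever rule you choose, documenting it alongside your collection methods keeps reports comparable over time.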

Step 5: Choose A Data Visualization Tool

The right data visualization tool can make a significant difference. Aim for automated, meaningful reporting that provides actionable insights. Evaluate your tool based on factors such as:

- Budget considerations

- How easily it connects to your data source

- Required analysis/visualization capabilities

- Need for interactivity and automatic updates

- Access limitations based on business groups

- How reports and dashboards will be distributed

- Specific visual reporting constraints (branding, color scheme)

Step 6: Reporting & Analysis

Understand the difference between reporting and analysis. Reporting, largely automated, provides metrics from your data sources. Analysis, on the other hand, adds context to this reported data to help answer questions.

Automating your data architecture process will help you focus on the critical task of analysis. This is where you add value by interpreting trends, identifying causes of spikes or dips, and providing actionable insights to inform your business strategy.
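The split between reporting and analysis can be sketched in a few lines. In this example the signup numbers are made up, `report` produces the automated metrics, and `flag_spikes` uses a simple standard-deviation threshold (an assumption, not the only way to do it) to point an analyst at the days worth investigating.

```python
from statistics import mean, pstdev

# Hypothetical daily signup counts for one week.
daily_signups = {
    "Mon": 102, "Tue": 98, "Wed": 105, "Thu": 240,
    "Fri": 101, "Sat": 99, "Sun": 97,
}


def report(metrics):
    """Reporting: summarize what happened."""
    values = list(metrics.values())
    return {"total": sum(values), "daily_average": mean(values)}


def flag_spikes(metrics, threshold=2.0):
    """Analysis starting point: days far from the mean, measured in standard deviations."""
    values = list(metrics.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [day for day, v in metrics.items() if abs(v - mu) / sigma > threshold]


summary = report(daily_signups)
spikes = flag_spikes(daily_signups)
```

The code can tell you that Thursday stands out; explaining why it stands out is still the analyst's job.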

Modern Data Architecture: Envisioning The Future

Modern data architectures are the building blocks for technologies like artificial intelligence, blockchain, and the Internet of Things (IoT). Adopting these technologies requires a data architecture that can handle their demands.

The hallmark of modern data architectures lies in these 7 distinctive features:

- They use standard API interfaces to facilitate seamless integration, even with legacy systems.

- They are native to the cloud and use its elasticity and high availability for optimal performance.

- They remove dependencies between services and use open standards for interoperability, which promotes flexibility.

- They balance cost and effectiveness, keeping the architecture efficient and easy to navigate.

- They provide robust, scalable, and portable data pipelines that combine intelligent workflows, cognitive analytics, and real-time integration.

- They enable real-time data validation, classification, management, and governance to maintain data quality and consistency.

- They are built around common data domains, events, and microservices, which establishes uniformity and standardization across the organization.
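As one illustration of real-time validation and classification, the sketch below checks each event as it arrives, tags accepted events, and sets aside the rest. The validation rules, the `PII_FIELDS` set, and the classification labels are hypothetical governance choices invented for the example.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
PII_FIELDS = {"email", "phone"}  # hypothetical governance rule


def validate(event):
    """Accept events with an integer id and, if present, a plausibly formed email."""
    if not isinstance(event.get("id"), int):
        return False
    email = event.get("email")
    return email is None or bool(EMAIL_RE.match(email))


def classify(event):
    """Tag the event by whether it carries fields governed as personal data."""
    return "restricted" if PII_FIELDS.intersection(event) else "general"


def process(events):
    """Route each event as it arrives: accept and tag it, or quarantine it."""
    accepted, quarantined = [], []
    for event in events:
        if validate(event):
            accepted.append({**event, "classification": classify(event)})
        else:
            quarantined.append(event)
    return accepted, quarantined


accepted, quarantined = process([
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "page": "/pricing"},
    {"id": "3", "email": "bob@example.com"},
    {"id": 4, "email": "not-an-email"},
])
```

Quarantining bad records instead of dropping them keeps the evidence you need to fix the upstream source.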

Utilizing Estuary For A Streamlined Data Architecture

To create an effective data architecture, using tools that simplify complex data processes can prove incredibly valuable. One such tool is our DataOps tool, Estuary Flow. It makes handling streaming ETL (Extract, Transform, Load) and Change Data Capture (CDC) much simpler.

Here’s how Flow can make a difference in your data architecture:

- Schema Inference: It turns unstructured data into structured data, simplifying further data processing.

- Reporting & Monitoring: It provides live reporting and monitoring so you can be sure your data is flowing as it should be.

- Extensibility: It supports the addition of connectors through an open protocol, enabling seamless integration with your existing systems.

- Transformations: With Flow, you can perform real-time SQL and TypeScript transformations on your data for efficient and flexible data manipulation.

- Materializations: Flow lets you maintain real-time views across the systems of your choice. This means you can quickly access up-to-date information whenever you need it.

- Consistent Data Delivery: With Flow, you can have confidence in the consistency of your data. The tool ensures transactional consistency so you have an accurate view of your data.

- Testing & Reliability: Estuary Flow includes built-in unit tests to ensure the accuracy of your data as your pipelines evolve. It’s also built to survive any failure, providing reliability and peace of mind.

- Low System Load: It reduces the strain on your systems by pulling data once using low-impact CDC. This means you can use your data across all your systems without having to re-sync, minimizing system load.

- Scaling and Performance: Estuary Flow is designed to scale with your data and can easily handle high data volumes. This means it can backfill large amounts of data quickly without affecting your system's performance.

- Data Capture: Flow collects your data from various sources including databases and SaaS applications. It can do real-time Change Data Capture (CDC) from databases, integrate with real-time SaaS applications, and even link to batch-based endpoints.
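If Change Data Capture and materialized views are new to you, the general idea can be sketched without any particular tool. The code below is not Estuary Flow's API; the event shape (`op`, `key`, `after`) and the `apply_change` helper are assumptions made purely to illustrate how a stream of changes keeps a downstream view up to date.

```python
def apply_change(view, change):
    """Apply one change event to an in-memory view keyed by primary key."""
    op, key = change["op"], change["key"]
    if op in ("insert", "update"):
        # Merge the changed fields into whatever the view already holds.
        view[key] = {**view.get(key, {}), **change["after"]}
    elif op == "delete":
        view.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")
    return view


# Hypothetical change events, in the order the source database emitted them.
changes = [
    {"op": "insert", "key": 1, "after": {"name": "Ana", "plan": "free"}},
    {"op": "insert", "key": 2, "after": {"name": "Bob", "plan": "free"}},
    {"op": "update", "key": 1, "after": {"plan": "pro"}},
    {"op": "delete", "key": 2},
]

view = {}
for change in changes:
    apply_change(view, change)
```

Because only the changes travel downstream, the source database is read once per change instead of being re-scanned for every sync.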

Conclusion

A firm grip on data architecture is a necessity for any business utilizing data for its day-to-day operations. Looking ahead, modern data architecture will continue to evolve, shaping the way businesses handle data.

Remember, data architecture is not just an abstract concept. It is a tangible force that empowers organizations to transform raw data into valuable assets, providing the foundation for informed decision-making, innovation, and sustainable growth.

If you are in the process of developing or upgrading your data architecture, Estuary Flow is a great tool to handle real-time data. It will help you seamlessly integrate various data sources and manage them efficiently, reducing the complexity of building and maintaining data architecture. Sign up here for Estuary Flow (it's free to start!) or reach out to our team to discuss your specific needs.
