Salesforce is one of the most popular enterprise SaaS apps today, if not the most popular. It’s a multi-faceted, scalable CRM, so most customers see large volumes of data change in their Salesforce accounts every day.

Getting a reliable real-time data stream out of Salesforce is vital for day-to-day operations and analytics alike, but it can be a challenge. In this post, I’ll outline how Estuary solved this problem so we could offer our customers connectors for both historical and streaming data.

Background: Estuary and Salesforce

First, a bit about Estuary and why we were interested in the specific problem of real-time data ingestion from Salesforce.

We offer Flow, a platform for building streaming data integration with a low-code UI and a variety of connectors. While Flow supports batch-based connectors (we integrate with the open-source Airbyte protocol), we need to create real-time connectors to allow customers to take advantage of the streaming backbone that underlies Flow.

Salesforce offers many APIs, each serving a specific purpose and each with its own limits and quirks. To capture Salesforce data comprehensively, we have to combine several APIs and methods. Specifically, to capture both historical data and real-time updates, we had to get creative and join two connectors built on two different APIs.

For capturing historical data, we use a slightly modified version of Airbyte’s Salesforce connector, which uses the REST Query API. Every request it sends is an HTTP API call. If we used this method to capture real-time updates, we would quickly exhaust the API limits, since we would need to send requests periodically to check for updates on every entity we are interested in.
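To make the polling cost concrete, here is a back-of-the-envelope sketch in Python. The entity count, polling interval, and daily request limit are illustrative assumptions, not figures taken from Salesforce or from our connector; real limits depend on the edition and licenses of the account.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def polling_requests_per_day(num_entities: int, interval_seconds: int) -> int:
    """Requests per day if each entity is polled once per interval.

    Assumes one HTTP request per poll; paginated results would need more.
    """
    polls_per_day = SECONDS_PER_DAY // interval_seconds
    return num_entities * polls_per_day


def exceeds_limit(num_entities: int, interval_seconds: int, daily_limit: int) -> bool:
    """True if polling alone would use more requests than the daily limit."""
    return polling_requests_per_day(num_entities, interval_seconds) > daily_limit


# Hypothetical scenario: 20 entities polled every 30 seconds,
# against an assumed limit of 15,000 requests per day.
requests_needed = polling_requests_per_day(20, 30)
over_budget = exceeds_limit(20, 30, daily_limit=15_000)
```

Even with these modest assumptions, polling alone needs several times the assumed daily budget, before counting any requests made by other integrations on the same account.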

Related: all about Salesforce CDC.

Salesforce Streaming APIs

Salesforce also provides a set of Streaming APIs, which are built for the purpose of receiving real-time updates. So we started researching the different Streaming APIs, comparing their methods and limitations to see which one would suit our customers’ needs best.

Here’s a table showing a comparison of the two main APIs’ limits, assuming a basic Salesforce account:

| Streaming API | Max No. Entities | Max Event Deliveries (24h) |
|---|---|---|
| PushTopic | 40* | 50,000 |
| Change Data Capture | 5 | 10,000 |

\* PushTopic has no limit on entities per se; however, it has a limit on the number of channels that can be created.

Change Data Capture is the newer API. However, given its limits, especially the maximum number of entities that can be subscribed to, we had to choose PushTopic.
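The decision can be expressed as a simple check against the limits in the table. This is only a sketch: the `StreamingLimits` type and `apis_that_fit` function are hypothetical names made up for illustration, the figures are the basic-account numbers from the table above, and the PushTopic "entities" figure is really a channel limit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamingLimits:
    name: str
    max_entities: int      # for PushTopic this is really a channel limit
    max_daily_events: int  # event deliveries per 24 hours


# Figures copied from the comparison table (basic Salesforce account).
LIMITS = [
    StreamingLimits("PushTopic", 40, 50_000),
    StreamingLimits("Change Data Capture", 5, 10_000),
]


def apis_that_fit(num_entities: int, expected_daily_events: int) -> list[str]:
    """Return the APIs whose limits cover the given workload."""
    return [
        api.name
        for api in LIMITS
        if num_entities <= api.max_entities
        and expected_daily_events <= api.max_daily_events
    ]


# A hypothetical customer syncing 12 entities with about 8,000 events a day.
candidates = apis_that_fit(12, 8_000)
```

For any workload with more than five entities, Change Data Capture drops out of the candidate list, which is the situation most of our customers are in.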

PushTopic: Challenges

PushTopic comes with its challenges and limitations. There are three main shortcomings that we had to address:

Technology: Comet

PushTopic uses a fairly old technology called Comet, which dates back to the 2000s. There are not many clients for this protocol. The standard, officially supported client is written in Java, so we chose to write our connector in Kotlin so that we could use the official client implementation. This is the first piece of software we have written in Kotlin at Estuary. With the official client library available, though, we haven’t had much trouble using the technology, which has been a delight.
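For readers unfamiliar with Comet, the messages exchanged over its Bayeux protocol are plain JSON. The Python sketch below only builds the handshake, subscribe, and connect messages; it does not open a connection, and it is not how our Kotlin connector is written (the official client library handles all of this). The topic name `AccountUpdates` and the client ID are hypothetical placeholders.

```python
import json


def handshake_message() -> dict:
    """First message a Bayeux client sends to negotiate a session."""
    return {
        "channel": "/meta/handshake",
        "version": "1.0",
        "supportedConnectionTypes": ["long-polling"],
    }


def subscribe_message(client_id: str, topic_name: str) -> dict:
    """Subscribe to a PushTopic channel, addressed as /topic/<name>."""
    return {
        "channel": "/meta/subscribe",
        "clientId": client_id,
        "subscription": f"/topic/{topic_name}",
    }


def connect_message(client_id: str) -> dict:
    """Long-poll request that the server holds open until events arrive."""
    return {
        "channel": "/meta/connect",
        "clientId": client_id,
        "connectionType": "long-polling",
    }


# The client ID is normally returned by the server in the handshake response.
client_id = "hypothetical-client-id"
payload = json.dumps([subscribe_message(client_id, "AccountUpdates")])
```

The client repeats the connect request in a loop, and each response carries whatever events were published on the subscribed channels in the meantime.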

Backfilling historical data

PushTopic doesn’t support backfilling of historical data: it can only go back 24 hours in time.

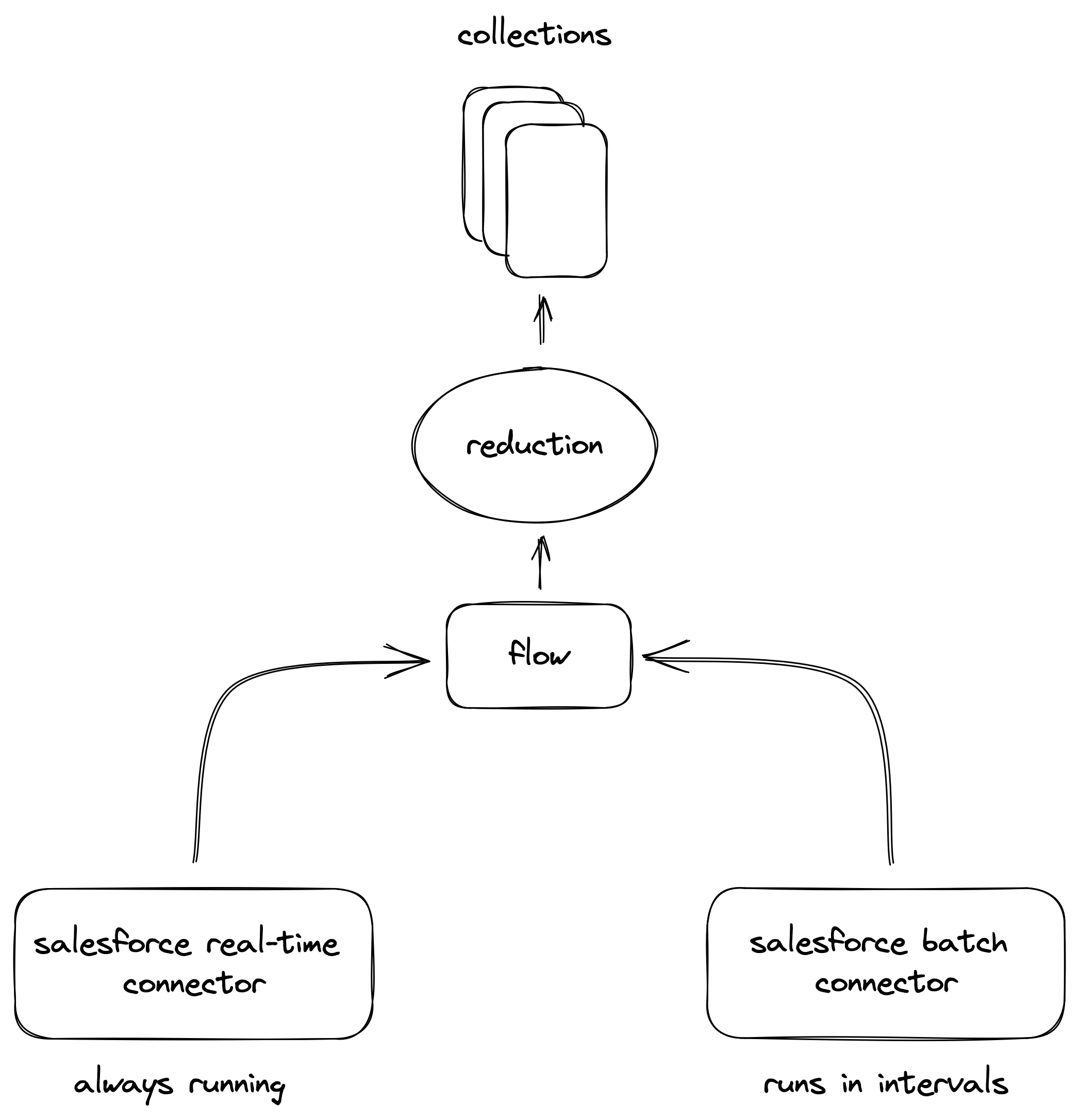

However, given the flexibility provided by Flow’s data reduction strategies, we are able to have our real-time connector running side-by-side with our historical Salesforce connector. Essentially, we run the connectors in parallel, writing data to the same collections of data, and Flow takes care of merging and de-duplicating this data for us.
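The idea of two connectors writing to one collection can be illustrated with a small Python sketch. This is not Flow's implementation; it assumes each document is keyed on the Salesforce `Id` and carries a `SystemModstamp` timestamp in a uniform ISO 8601 format (so that string comparison matches chronological order). The record values are made up.

```python
def reduce_by_key(documents, key="Id", version="SystemModstamp"):
    """Keep one document per key, preferring the most recently modified one."""
    latest = {}
    for doc in documents:
        current = latest.get(doc[key])
        # On equal timestamps the later arrival wins, which also absorbs
        # exact duplicates delivered by both connectors.
        if current is None or doc[version] >= current[version]:
            latest[doc[key]] = doc
    return latest


# Documents from the historical (backfill) connector.
historical = [
    {"Id": "001A", "Name": "Acme", "SystemModstamp": "2023-01-01T00:00:00Z"},
    {"Id": "001B", "Name": "Globex", "SystemModstamp": "2023-01-01T00:00:00Z"},
]

# Documents from the real-time connector, including a duplicate delivery.
realtime = [
    {"Id": "001A", "Name": "Acme Corp", "SystemModstamp": "2023-01-02T09:30:00Z"},
    {"Id": "001A", "Name": "Acme Corp", "SystemModstamp": "2023-01-02T09:30:00Z"},
]

merged = reduce_by_key(historical + realtime)
```

Because the result depends only on the key and the modification timestamp, the order in which the two connectors deliver their documents does not matter.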

The real-time connector runs continuously, delivering updates as soon as they arrive, whereas the historical backfill connector runs at intervals to ensure the data stays fully in sync. Users can configure the interval at which the historical connector runs.

Textarea fields

PushTopic subscriptions do not support getting real-time updates about textarea fields. This means that we have had to find an alternative way to keep textarea fields in sync. For this purpose, we rely on the historical bulk update connector to sync textarea fields at every interval that it runs.
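A rough Python sketch of the effect, assuming a field-level merge in which an incoming document overwrites only the fields it contains. The `merge_fields` helper and the record values are hypothetical, and this is an illustration rather than Flow's actual merge logic.

```python
def merge_fields(existing: dict, update: dict) -> dict:
    """Return a new document: fields in `update` win, absent fields are kept."""
    merged = dict(existing)
    merged.update(update)
    return merged


# State after a historical backfill, including a textarea field.
backfilled = {"Id": "001A", "Name": "Acme", "Description": "Original notes"}

# A real-time event arrives without the textarea field.
realtime_event = {"Id": "001A", "Name": "Acme Corp"}
after_event = merge_fields(backfilled, realtime_event)

# The next backfill run brings the edited textarea value.
next_backfill = {"Id": "001A", "Name": "Acme Corp", "Description": "Edited notes"}
after_backfill = merge_fields(after_event, next_backfill)
```

In this sketch the `Description` value stays stale after the real-time event and only catches up on the next backfill, which is why the backfill interval bounds how out of date a textarea field can be.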

Conclusion

Capturing real-time data along with historical data from Salesforce can be challenging. With the help of Flow’s powerful and flexible runtime and connector-based integrations, we’ve found a way to let our customers capture real-time data from Salesforce, along with full synchronization cycles to ensure data consistency.

You can view the source code for the real-time Salesforce connector here, and the historical connector here. To get set up using both together in Flow, you can contact our team.

To chat more about this (and how we’re solving other engineering problems at Estuary) join us on Slack.


About the author

Mahdi is a software engineer at Estuary with a focus on integrations, working on multiple in-house connectors including Oracle, MongoDB and Salesforce. He has a diverse background including data engineering, devops, full-stack, functional programming and ML.
