For any data-driven business, handling large amounts of data from multiple sources is a constant challenge. As data volumes and sources grow, the question of how to balance real-time responsiveness against control has fueled the batch processing vs stream processing debate. Both methods have their strengths, but stream processing's real-time capabilities stand out, especially as an estimated 80-90% of newly generated data is unstructured.

In this guide, we’ll explore the pros, cons, and practical use cases of batch processing vs stream processing to help you choose the best data strategy.

What is Batch Processing: A Guide To Processing Data In Bulk

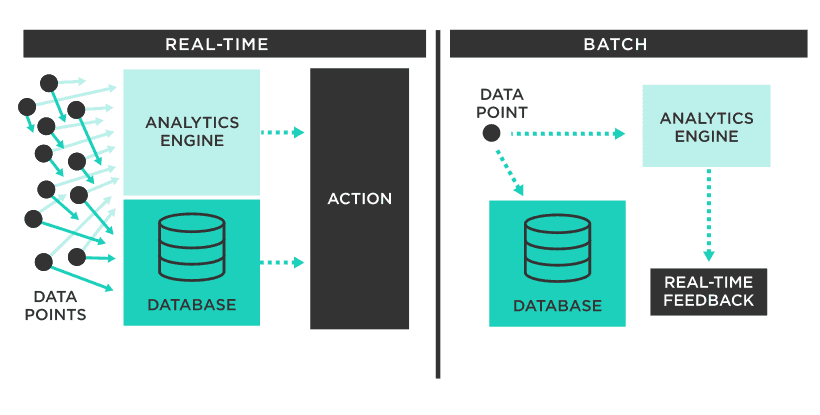

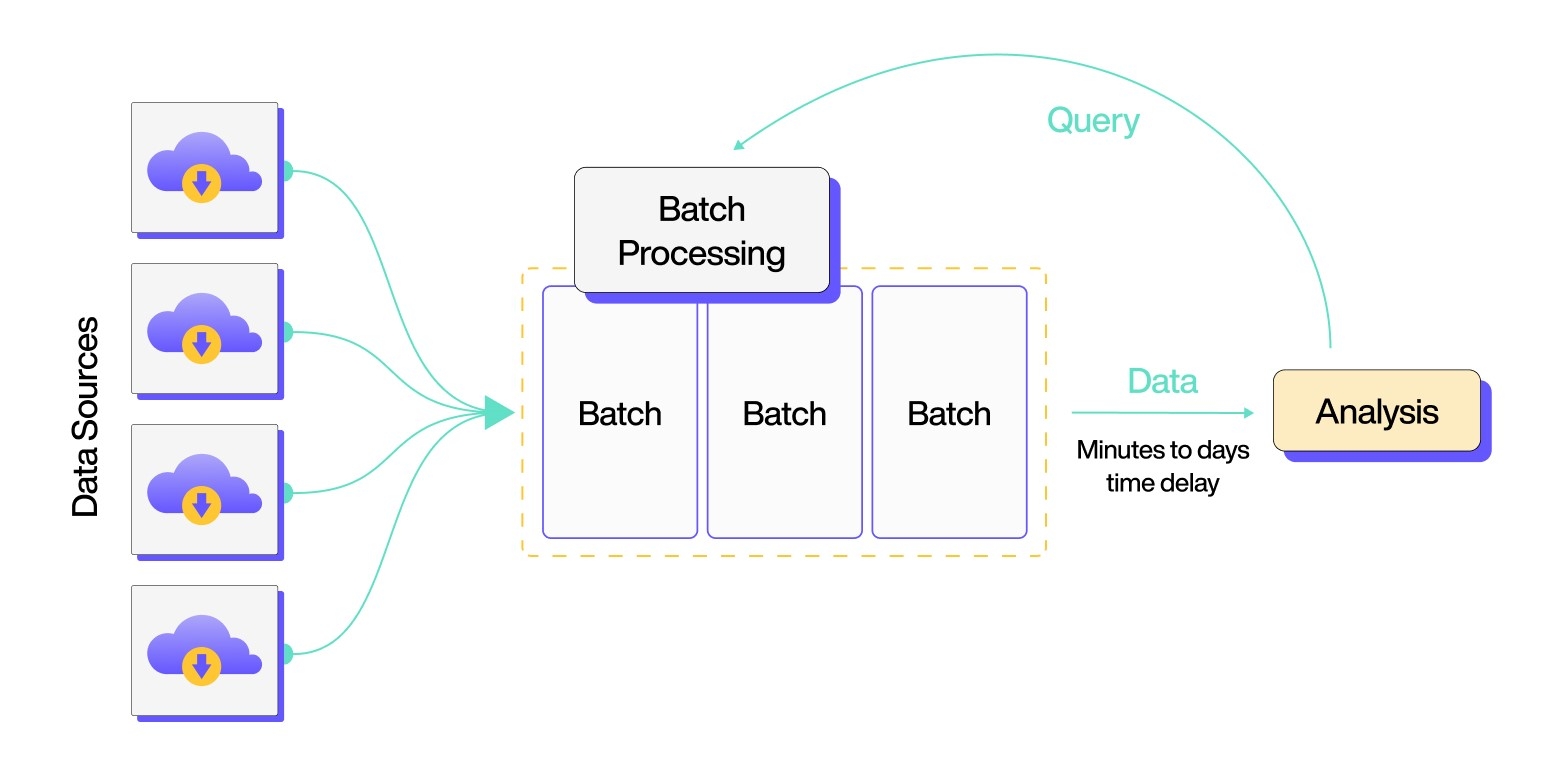

Batch processing is a method employed in data analysis where data is accumulated into groups or 'batches' before it is fed into an analytics system. Here, the batch refers to a collection of data points collected over a specified duration. It’s an approach mainly used when dealing with large data sets or when the project calls for in-depth data analysis.

Batch processing doesn’t feed data into the analytics system instantly. As a result, the results aren’t real-time, and the data has to be held in file systems or databases until it is processed. While it may not be the best fit for projects that demand speed or real-time outcomes, many legacy systems support only batch processing.
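To make the idea concrete, here is a minimal Python sketch of the pattern described above: records are stored first, grouped into one batch per day, and analyzed only after the fact. The record layout (`ts`, `amount`) and the function names are assumptions made for illustration, not part of any specific product.

```python
from collections import defaultdict
from datetime import datetime


def group_into_daily_batches(records):
    """Collect stored records into one batch per calendar day."""
    batches = defaultdict(list)
    for record in records:
        day = datetime.fromisoformat(record["ts"]).date().isoformat()
        batches[day].append(record)
    return dict(batches)


def summarize_batch(batch):
    """Run the deferred analysis over a whole batch at once."""
    return {
        "orders": len(batch),
        "revenue": sum(record["amount"] for record in batch),
    }


# Hypothetical records that were written to storage as they occurred.
stored_records = [
    {"ts": "2024-01-01T09:00:00", "amount": 10.0},
    {"ts": "2024-01-01T17:30:00", "amount": 5.0},
    {"ts": "2024-01-02T08:15:00", "amount": 2.5},
]

# The report is produced only once the batches are complete, not per event.
daily_report = {
    day: summarize_batch(batch)
    for day, batch in group_into_daily_batches(stored_records).items()
}
```

In a real deployment the grouping step would typically be a scheduled job reading from a database or file system rather than an in-memory list.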

Batch Processing Pros

Batch processing, with its automated and systematic approach, provides many advantages for businesses. Let’s look into these benefits to better understand the importance of batch processing in today’s world.

- Offline features: Unlike some systems that require consistent online connectivity, batch processes can be executed at any time, irrespective of online or offline settings.

- Improved data quality: Batch processing enhances the accuracy of data. As the process is mostly automated, it minimizes manual interventions and reduces human errors.

- Hands-off approach: It is designed for minimal supervision. Once the system is operational, it’s self-sufficient. When issues arise, the system sends alerts to the right people, so they don’t have to monitor it constantly.

- Greater efficiency: To smartly manage your tasks, you can schedule processing jobs only when resources are available. This method lets you prioritize urgent tasks while less immediate jobs can be allocated for batch processing.

- Simplified system: There’s no need for specialized hardware, constant system support, or complicated data entry, simplifying the overall process. This method's automatic process and low maintenance requirements make it easy for your team to switch to batch processing.

Batch Processing Cons

While batch processing has its benefits, it’s important to consider its limitations to make a well-rounded decision. Let’s look at some of the most common challenges associated with batch processing.

- Debugging and maintenance: Batch processing systems can be challenging to maintain. Even small errors or data issues can escalate quickly and bring the entire system to a halt.

- Dependency on IT specialists: Batch processing systems have inherent complexities. So, you’ll often need IT experts to debug and fix them, which can be a constraint for business operations.

- Manual interventions: Despite the automation capabilities, batch processing sometimes needs manual tuning to meet specific requirements. These manual interventions can cause inconsistencies, potential errors, and increased labor costs.

- Unsuitability for real-time processing: Given its inherent latency, batch processing is not suitable for real-time data handling. This means for tasks that require instantaneous insights or timely data feedback, batch processing might not be the optimal choice.

- Deployment and training: Using batch processing technologies requires special training. It's not just about setting up the technology; you should make sure that managers and staff are ready to handle batch triggers, understand exception notifications, and schedule processing tasks effectively.

What Is Stream Processing: Understanding Real-Time Data Processing

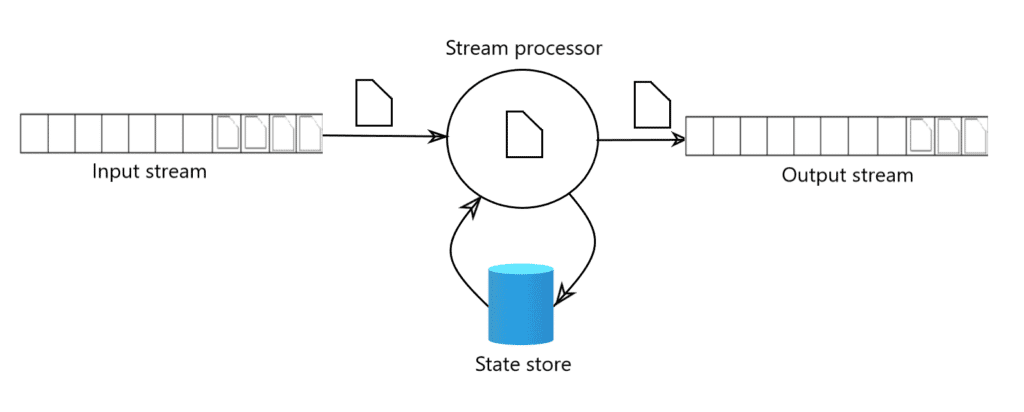

Stream processing is a method that analyzes, filters, transforms, or enhances continuous data streams. As the data flows, it is processed in real-time and then sent to applications, storage systems, or even other stream-processing platforms as data packets.

Processing data in real-time has become increasingly important for many applications. This technique is gaining traction as it blends data from different sources – from stock feeds and website analytics to weather reports and connected gadgets.

With stream processing, you can process data streams as they are created to perform actions like real-time analytics. This real-time aspect can vary, from milliseconds in some applications to minutes in others. The main idea is to package data in short, manageable bursts so the developers can easily combine data from multiple sources.
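One common way to package a stream into short, manageable bursts is a tumbling window: fixed-length, non-overlapping time slices whose result is emitted as soon as each slice closes. The sketch below is a simplified illustration in plain Python, assuming integer timestamps in seconds and events that arrive in timestamp order; production stream processors handle far more cases.

```python
def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, count) as soon as each window closes.

    Assumes events are (timestamp, payload) pairs arriving in timestamp
    order. Windows that receive no events are simply not emitted.
    """
    current_start = None
    count = 0
    for ts, _payload in events:
        window_start = (ts // window_seconds) * window_seconds
        if current_start is None:
            current_start = window_start
        if window_start != current_start:
            # The previous window is complete: emit it without waiting
            # for the rest of the stream.
            yield current_start, count
            current_start, count = window_start, 0
        count += 1
    if current_start is not None:
        yield current_start, count


# Hypothetical click stream: (seconds since start, event type).
click_stream = [(0, "click"), (1, "click"), (4, "view"), (5, "click"), (12, "view")]

window_results = list(tumbling_window_counts(click_stream, window_seconds=5))
```

Because the function is a generator, it works the same way on an unbounded source: each result becomes available while later events are still arriving.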

Stream Processing Pros

Let’s discuss the major benefits of stream processing and understand why it’s becoming an important tool in modern data operations.

- Efficient data handling: Stream processing has a consistent, real-time approach to data analytics. It swiftly identifies and retains the most important information.

- Enhanced data security: Stream processing minimizes potential discrepancies in data handling while prioritizing secure transmission techniques. As a result, data remains protected and unaltered.

- Effortless scalability: As data grows, storage becomes a headache. While batch processing might struggle and require massive changes, stream processing handles these increases smoothly.

- Handling continuous data easily: Stream processing manages endless flows of data. With the rise of IoT, much of its data comes in continuous streams. This makes data stream processing crucial to extract important details from these ongoing data flows.

- Quick real-time analysis: Some databases analyze data in real-time but stream processing is unique. It quickly handles large amounts of information from different places. It's especially helpful in fast-paced situations like stock trading or emergency services where quick responses are needed.

Stream Processing Cons

Stream data processing does come with challenges that can worry some businesses. Let’s look at the most common challenges you can face during stream processing.

- Dealing with fast-arriving information: Stream processing systems face data that arrives at high speed. Handling this influx can be tough, because processing and analysis must keep pace with the data flow.

- Memory management issues: Since these systems continuously receive a lot of information, they require significant memory resources. The difficulty is in finding the best methods to manage, retain, or dispose of this information.

- Cost implications: The sophisticated infrastructure needed for stream processing comes with a hefty price tag. The initial setup, maintenance, and even potential upgrades can be costly – in terms of money, time, and resources.

- Complexities in query processing: To serve a diverse range of users and applications, a stream processing system should handle multiple standing queries over several incoming data streams. This puts pressure on memory resources and requires creating highly efficient algorithms.

- Complexity in implementation: Stream processing, by its very nature, focuses on managing continuous data in real time. Scaling these systems can be a difficult task. Handling complications like out-of-sequence or missing data can make implementation challenging. This complexity becomes even more magnified when trying to use it on a bigger scale.
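As an illustration of the memory and out-of-sequence challenges above, the following Python sketch holds events in a small buffer and releases them in timestamp order once they are older than an allowed delay. This is a simplified version of the watermark idea used by stream processors; the function name, the `max_delay` parameter, and the decision to set aside late events are assumptions made for this example.

```python
import heapq


def reorder_events(events, max_delay):
    """Return (ordered, late) for a stream of (timestamp, payload) pairs.

    Events are buffered in a min-heap and released in timestamp order once
    they fall at or below the watermark (largest timestamp seen minus
    max_delay). Events that arrive behind the watermark are set aside as
    late instead of being emitted out of order.
    """
    buffer = []  # min-heap keyed on timestamp; bounded by max_delay of data
    ordered, late = [], []
    max_seen = float("-inf")
    watermark = float("-inf")
    for ts, payload in events:
        if ts < watermark:
            late.append((ts, payload))
            continue
        heapq.heappush(buffer, (ts, payload))
        max_seen = max(max_seen, ts)
        watermark = max_seen - max_delay
        while buffer and buffer[0][0] <= watermark:
            ordered.append(heapq.heappop(buffer))
    # End of input: flush whatever is still buffered.
    while buffer:
        ordered.append(heapq.heappop(buffer))
    return ordered, late


# Hypothetical stream where "c" arrives slightly late and "e" far too late.
incoming = [(1, "a"), (3, "b"), (2, "c"), (7, "d"), (4, "e"), (8, "f")]

ordered_events, late_events = reorder_events(incoming, max_delay=2)
```

A larger `max_delay` tolerates more disorder but keeps more events in memory and delays results, which is the trade-off behind several of the challenges listed above.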

How do batch and stream processing stack up against each other? This table provides a quick overview:

| Feature | Batch Processing | Stream Processing |
|---|---|---|
| Data Processing | Processes data in large, pre-defined groups (batches) | Processes data continuously as it arrives in real-time |
| Latency | Higher latency; results are available after the batch is processed | Lower latency; results are available immediately |
| Use Cases | Data warehousing, ETL, reporting, back-end processes | Real-time analytics, fraud detection, monitoring |
| Scalability | Scales well for large volumes of data | Scales well for high-velocity data streams |
| Complexity | Generally simpler to implement | More complex to implement due to real-time nature |
| Fault Tolerance | Less critical; can be restarted if it fails | More critical; needs to be highly resilient |
| Examples | Payroll processing, billing, end-of-day reports | Social media analytics, stock trading, IoT |

Batch vs. Stream Processing: Industry-Wide Examples For Practical Perspectives

Industries today use the power of data in various forms. Understanding when to use batch processing vs stream processing impacts operational efficiency and response times. Here’s how different industries apply both methods in different scenarios:

Healthcare

- Batch processing: Periodic updates consolidate patient medical histories from different departments and provide comprehensive records for future consultations.

- Stream processing: Continuous monitoring of critical patients through ICU systems provides healthcare professionals with real-time health metrics and prompts immediate action upon any anomalies.

Logistics & Supply Chain

- Batch processing: Shipments and deliveries are grouped based on destinations. This helps optimize route planning and resource allocation.

- Stream processing: Real-time tracking of shipments gives immediate status updates to customers and addresses any in-transit issues swiftly.

Telecom & Utilities

- Batch processing: Large groups of bills are consolidated and processed together, making it easy to apply the same rates, discounts, and possible service updates.

- Stream processing: Real-time monitoring of network traffic detects outages or interruptions as they happen, so providers can respond swiftly to infrastructure issues.

Banking

- Batch processing: End-of-day reconciliations involve consolidating and matching transactions in batches, guaranteeing the accuracy of financial ledgers.

- Stream processing: Real-time fraud detection through transaction monitoring helps prevent unauthorized or suspicious activities.
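To show what transaction monitoring on a stream can look like, here is a deliberately simple Python sketch of a velocity rule: an account is flagged when it makes more than a set number of transactions within a sliding time window. The rule, the thresholds, and the class name are illustrative assumptions; real fraud detection systems combine many more signals.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flag accounts with too many transactions inside a sliding window."""

    def __init__(self, max_transactions, window_seconds):
        self.max_transactions = max_transactions
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # account -> recent timestamps

    def is_suspicious(self, account, ts):
        """Record one transaction and decide immediately, per event."""
        recent = self._recent[account]
        recent.append(ts)
        # Drop timestamps that have slid out of the window.
        while recent and recent[0] <= ts - self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_transactions


# Hypothetical transaction stream: (account, seconds since start).
transactions = [("A", 0), ("A", 10), ("B", 15), ("A", 20), ("A", 30), ("A", 100)]

rule = VelocityRule(max_transactions=3, window_seconds=60)
flags = [rule.is_suspicious(account, ts) for account, ts in transactions]
```

The decision is made as each transaction arrives, which is what allows a suspicious payment to be stopped rather than discovered in the end-of-day batch.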

eCommerce

- Batch processing: Order management often employs batch processing where orders are grouped to streamline inventory checks and optimize dispatch schedules.

- Stream processing: Real-time monitoring of user behaviors on platforms lets you provide instant product recommendations for enhancing the online shopping experience.

Marketing

- Batch processing: Bulk promotional emails or newsletters are sent out using batch processing for consistent and timely deliveries to subscribers.

- Stream processing: Real-time sentiment analysis scans online discussions and feedback so brands can gauge and respond swiftly to public opinion.

Retail

- Batch processing: Once the store closes, inventory evaluations refresh stock levels and pinpoint items that need to be replenished.

- Stream processing: Point of Sale (POS) systems process transactions immediately, adjusting inventory and offering sales insights on the spot.

Entertainment & Media

- Batch processing: Daily content updates, like new shows or movies, are uploaded during off-peak hours to minimize disruptions.

- Stream processing: Streaming platforms analyze user watch patterns instantly to provide content recommendations or adjust streaming quality based on bandwidth.

Companies Transitioning From Batch Processing To Stream Processing: 2 Case Studies

Here are 2 case studies of companies that have made the leap from traditional batch processing to dynamic stream processing.

Case Study 1: Digital Media Giant's Real-time Actionable Insights

A renowned online media company that shares articles from many newspapers and magazines faced a major challenge. Their business model included embedding 3 ad blocks on each article’s webpage and purchasing paid redirects to these pages. The goal was to analyze expenses on redirects against earnings from the ads. If an ad campaign was beneficial, they would further invest in it.

Through analytics, they also shortlisted their most favored articles, journalists, and reading trends. However, the existing batch processing system they employed took 50-60 minutes to deliver user engagement data. This made it difficult to handle the continuous data streams in real time.

The company wanted to receive this data within 5 minutes, realizing that delays might mean missed revenue opportunities or excessive promotion of less successful campaigns.
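The comparison the company cared about, redirect spend against ad earnings per campaign, can be kept up to date event by event instead of once per batch. The Python sketch below illustrates that idea under assumed event names (`redirect_cost`, `ad_revenue`) and made-up figures; it does not describe the company's actual system.

```python
from collections import defaultdict


def running_margins(events):
    """Yield (campaign, margin_so_far) after every incoming event.

    Each event is (campaign, kind, amount), where kind is either
    "redirect_cost" or "ad_revenue" (hypothetical labels).
    """
    margin = defaultdict(float)
    for campaign, kind, amount in events:
        if kind == "ad_revenue":
            margin[campaign] += amount
        elif kind == "redirect_cost":
            margin[campaign] -= amount
        else:
            raise ValueError(f"unknown event kind: {kind}")
        yield campaign, margin[campaign]


# Hypothetical event stream with illustrative amounts.
campaign_events = [
    ("spring", "redirect_cost", 100.0),
    ("spring", "ad_revenue", 60.0),
    ("summer", "redirect_cost", 80.0),
    ("spring", "ad_revenue", 80.0),
    ("summer", "ad_revenue", 60.0),
]

margin_updates = list(running_margins(campaign_events))

# Latest margin per campaign; positive ones are candidates for more spend.
latest = dict(margin_updates)
profitable = [name for name, value in latest.items() if value > 0]
```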

Solution

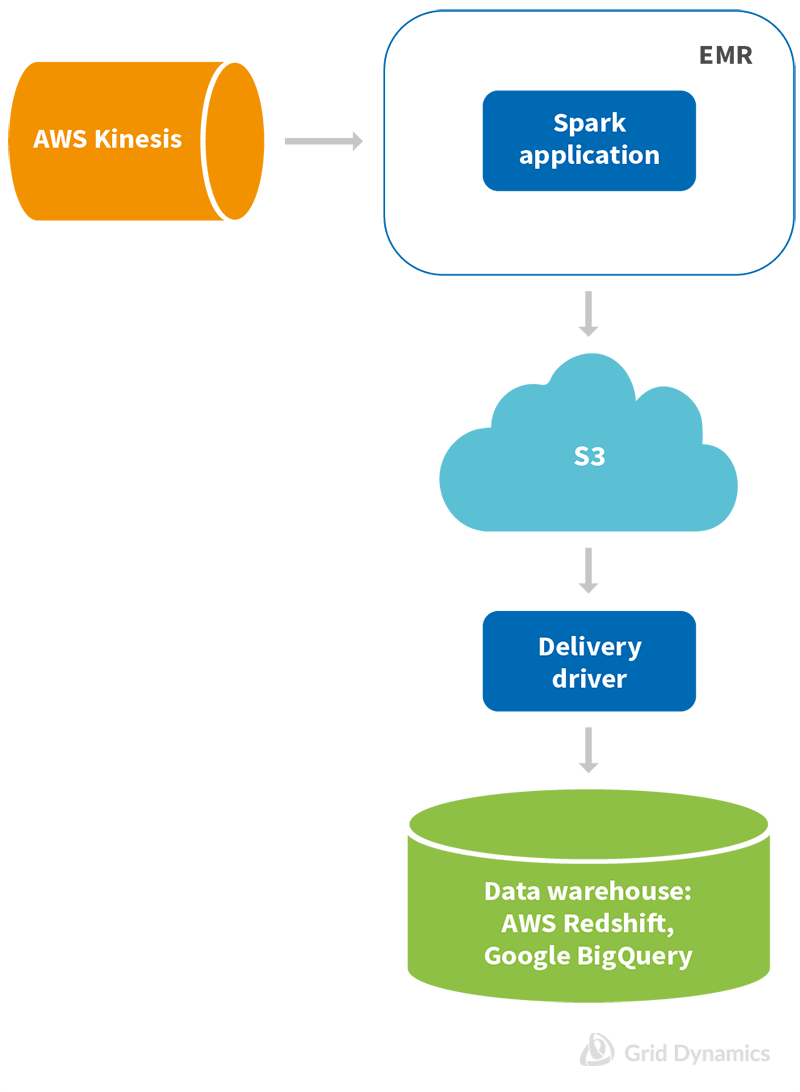

To tackle the challenge head-on, the media company partnered with specialists to develop a streamlined solution.

- Transitioned from batch to stream processing and hosted the platform on the cloud.

- Deployed a low-latency intermediate storage system.

- Used a streaming platform for the Extract-Transform-Load (ETL) process.

- Created a mechanism to deliver data to external warehouses.

Benefits

The transition from batch to stream processing resulted in major advantages, including:

- Time efficiency: The data delivery latency was dramatically reduced from 50-60 minutes to just 5 minutes.

- Simplified monitoring: Overcame the challenges of monitoring a vast cluster with most of the tasks managed by the cloud provider.

- Cost-effective cloud hosting: Transitioned from managing 65 nodes in a data center to efficiently running 18 nodes in the cloud which resulted in lower costs.

- Upgrade ease: Software and hardware upgrades became straightforward and upgrade times were reduced from an entire weekend to a mere 10-minute downtime.

Case Study 2: Netflix – Batch To Stream Processing Transformation

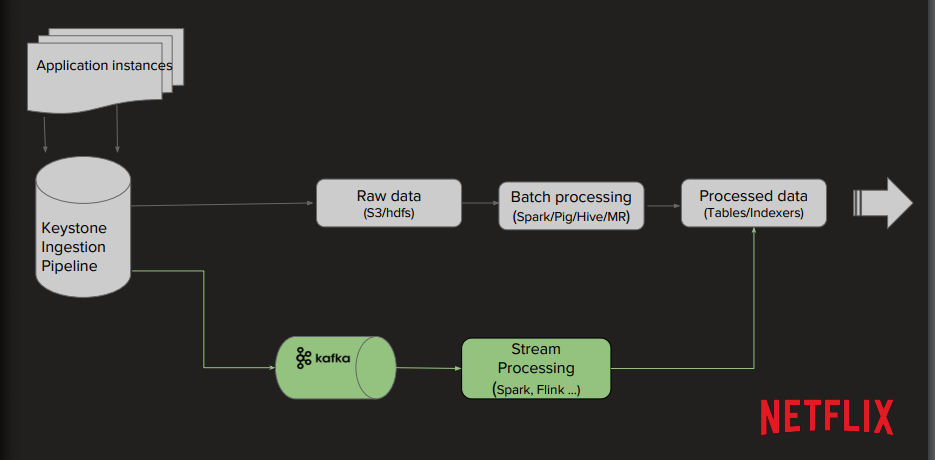

Netflix aims to provide personalized video content anywhere and anytime. To do this, it handles 450 billion unique events daily from over 100 million members globally. It previously relied on a traditional batch ETL approach to analyze and process this data, and certain jobs took over 8 hours to complete.

This latency undermined its main goal of optimizing the homepage for a personalized experience, because it could take up to 24 hours to analyze an event and act on it. The challenge was not only in managing vast quantities of data but also in real-time auditing, error correction, and integration with real-time systems.

Solution

The stream processing engine had to cater to different Netflix needs, like high throughput, real-time integration with live services, and handling of slowly changing data. After evaluating various options and considering the need for extensive customization of windowing, Netflix chose Apache Flink, backed by supporting technologies like Apache Kafka, Apache Hive, and the Netflix OSS stack.

Benefits

The shift to stream processing brought many benefits, including:

- Seamless integration with other real-time systems.

- Enhanced user personalization efforts because of reduced latency.

- Real-time processing reduced the latency between actions and analyses.

- Allowed for improved training of machine-learning algorithms with the most recent data.

- Storage costs were reduced because raw data didn't have to be stored in its original format.

Estuary Flow: A Reliable Solution For Batch & Real-time Data Processing

Estuary Flow is a no-code data pipeline tool known for its impressive real-time data processing capabilities. However, it handles batch data processing just as effectively, especially for managing substantial data quantities or when the need for immediate processing takes a back seat.

How Estuary Flow Excels At Batch Processing

- Scalability: It scales seamlessly with your data, enabling effective batch data processing.

- Schema inference: Flow organizes unstructured data into structured formats through schema inference.

- Reliability: Flow offers unmatched reliability because of its integration with cloud storage and real-time capabilities.

- Transformations: With streaming SQL and TypeScript transformations, it refines data to prepare it for subsequent analysis.

- Integration: Flow’s collaboration with Airbyte connectors gives a window into more than 300 batch-oriented endpoints which helps in sourcing data from many different outlets.

Estuary Flow and Real-Time Data Processing Magic

- Fault tolerance: Flow's architecture is fault-tolerant and maintains consistent data streams even when facing system anomalies.

- Real-time database replication: With the capacity to mirror data in real-time, it is beneficial even for databases exceeding 10TB.

- Real-time data capture: It actively captures data from a range of sources like databases and SaaS streams as soon as they surface.

- Real-time data integration: Its intrinsic Salesforce integration combined with connections to other databases makes instant data integration a reality.

- Real-time materializations: It quickly reflects insights from evolving business landscapes and presents almost instantaneous perspectives across varied platforms.

Conclusion

The batch processing vs stream processing debate will continue within the technology and business world. As technology evolves, businesses should stay agile and adaptive. Understanding the strengths and limitations of batch and stream processing will empower them to optimize their data infrastructure and make better decisions.

Understanding this dilemma, Estuary Flow offers the best of both worlds. Our platform seamlessly supports both batch and real-time data processing. Its impressive reliability, scalability, and connectivity make it adept at ingesting batch data, transforming it, and readying it for analysis. At the same time, Flow captures and integrates streaming data in real-time for timely action based on fresh insights.

Sign up for free and experience Flow's unparalleled data streaming features designed for your business or get in touch with us for personalized assistance.
